Manage Linux patching with Ansible and Netbox!

Nick Schmidt

Posted on April 7, 2024

Patching all of my random experiments took too much of my free time, so I automated it.

This is a pretty cheesy thing to do, but over the years it became more and more time-consuming to maintain all the different deployed workloads and infrastructure.

Requirements

As with any system design, it's best to consider all relevant needs ahead of time. Given that this is a home lab, I decided to adopt an intentionally aggressive approach that would still be theoretically viable in production:

- Nightly patching

- Nightly reboots

- No exempt packages

- Distribution-agnostic: it should patch multiple distributions at once

- This workflow should execute consistently from code

Iteration 1: Ansible with Jenkins

The earliest implementation I built here had the least refinement by far. Here I tied Jenkins to an internal repository.

To leverage this, I started out with an INI inventory, but it quickly became problematic. I wanted a hierarchy, with each distribution potentially fitting multiple categories. This became pretty messy pretty quickly, so I moved to a YAML Inventory:

    debian_machines:
      hosts:
        hostname_1:
          ansible_host: "1.1.1.1"
        hostname_2:
          ansible_host: "2.2.2.2"
    ubuntu_machines:
      hosts:
        hostname_3:
          ansible_host: "3.3.3.3"
    # A "group of groups": children lists other groups
    apt_updates:
      children:
        debian_machines:
        ubuntu_machines:
    # Individual machines are listed under hosts; children is only for nested groups
    nameservers:
      hosts:
        hostname_2:
        hostname_3:

This allowed me to simplify my playbooks and inventory by making "groups of groups", and avoid crazy stuff like taking down all nodes for an application at once. We'll use nameservers: as an example here:

    ---
    - name: "Reboot APT Machines, except DNS"
      hosts: apt_updates,!nameservers
      tasks:
        - name: "Ansible Self-Test!"
          ansible.builtin.ping:
        - name: "Reboot Apt Machines!"
          ansible.builtin.reboot:
    - name: "Reboot nameservers"
      hosts: nameservers
      serial: 1
      tasks:
        - name: "Ansible Self-Test!"
          ansible.builtin.ping:
        - name: "Reboot nameservers serially!"
          ansible.builtin.reboot:

The serial: 1 key instructs the Ansible controller to execute this play on only one machine at a time, so at least one nameserver stays up and DNS continuity is preserved.
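One possible refinement, sketched here rather than taken from the original playbook: after each nameserver reboots, check that it is answering on port 53 before Ansible moves to the next host. The `wait_for` task, the port, and the timeout are assumptions for illustration; a DNS service bound only to a specific address would need the `host` option set as well.

```yaml
---
- name: "Reboot nameservers"
  hosts: nameservers
  serial: 1                      # one host per batch
  tasks:
    - name: "Reboot nameservers serially!"
      ansible.builtin.reboot:

    # Assumption: the DNS service listens on TCP port 53 on the loopback address.
    - name: "Wait for DNS to listen again"
      ansible.builtin.wait_for:
        port: 53
        timeout: 120             # seconds; illustrative value
```

With one host per batch, a failed check should end the play before the next nameserver is touched.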

Retrospective

I had several issues with this approach, but to my surprise, Linux patching and actual Ansible issues haven't cropped up at all. With most mainstream distributions, the QC must be good enough to patch nightly like this.

I did have issues with inventory management, however. I could update the Ansible inventory as code, which was nice, but it was still clunky. If I deploy 5 Alpine images in a day, I want them to be added to my inventory automatically for maximum laziness.

I also quickly discovered that maintaining Jenkins was labor-intensive. It's a truly powerful engine, and great if you need all the extra features, but there aren't many low-friction ways to automate all the required maintenance, particularly around plugins. I was able to update Jenkins itself with a package manager, but it seems like every few days I had to patch plugins (manually).

Iteration 2: Ansible, Netbox, GitHub Actions

I'll be up-front - for parameterized builds, GitHub Actions is less capable than Jenkins.

It has some pretty big upsides, however:

- You don't have to maintain the GUI at all

- Logging is excellent

- Integration with GitHub is excellent

- Pipelines are YAML defined in their own repository

- Status badges in Markdown (we don't need some stinkin' badges!)

This workflow has been much smoother to operate. Since the deployment workflow already updates Netbox, all machines are added to the "maintenance loop" after first boot.
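The deployment workflow itself isn't shown in this post. As a rough sketch of what "updating Netbox" at deploy time could look like, the `netbox.netbox` collection provides a `netbox_virtual_machine` module. The VM name, cluster, and tag below are hypothetical, and the tag is assumed to already exist in Netbox:

```yaml
---
- name: "Register a new VM in Netbox"
  hosts: localhost
  gather_facts: false
  tasks:
    - name: "Create the VM record with its patching tag"
      netbox.netbox.netbox_virtual_machine:
        netbox_url: "{{ lookup('ansible.builtin.env', 'NETBOX_API') }}"
        netbox_token: "{{ lookup('ansible.builtin.env', 'NETBOX_TOKEN') }}"
        validate_certs: false
        data:
          name: "alpine-test-01"     # hypothetical VM name
          cluster: "lab-cluster"     # hypothetical Netbox cluster
          tags:
            - "lab_apk_updates"      # hypothetical tag; must already exist
        state: present
```

A record like this still needs a primary IP assigned in Netbox before an inventory filtered on `has_primary_ip` will pick it up.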

I was really surprised at how little work was required to convert these CI pipelines. This was naive of me - ease of conversion is the entire point of CI pipelines, but it's still mind-boggling to realize how effective it is at times.

To make this work, I first needed to create a CI process in .github/workflows on my Lab repository:

    name: "Nightly: @0100 Update Linux Machines"

    on:
      schedule:
        # GitHub Actions evaluates cron schedules in UTC
        - cron: "0 9 * * *"

    permissions:
      contents: read

    jobs:
      build:
        runs-on: self-hosted
        steps:
          - uses: actions/checkout@v3
          - name: Execute Ansible Nightly Job
            run: |
              # Build a fresh virtual environment in the workspace
              python3 -m venv .
              source bin/activate
              python3 -m pip install --upgrade pip
              python3 -m pip install -r requirements.txt
              python3 --version
              ansible --version
              # The Netbox inventory plugin reads its endpoint and token from these variables
              export NETBOX_TOKEN=${{ secrets.NETBOX_TOKEN }}
              export NETBOX_API=${{ vars.NETBOX_URL }}
              ansible-galaxy collection install netbox.netbox
              # Print the resolved inventory, then run the nightly playbook
              ansible-inventory -i local.netbox.netbox.nb_inventory.yml --graph
              ansible-playbook -i local.netbox.netbox.nb_inventory.yml lab-nightly.yml

This executes on a GitHub self-hosted runner in my lab, inside a Python virtual environment. The workflow runs a clean build every time by wiping out the workspace prior to each execution, so no configuration artifacts are left behind.
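As an alternative sketch (not the workflow I actually run), the Netbox credentials can be handed to the step through an `env:` map instead of `export` lines inside the script. The secret and variable names are the same ones used above; everything else is trimmed for brevity:

```yaml
steps:
  - uses: actions/checkout@v3
  - name: Execute Ansible Nightly Job
    env:
      # Exposed to every command in this step as environment variables
      NETBOX_TOKEN: ${{ secrets.NETBOX_TOKEN }}
      NETBOX_API: ${{ vars.NETBOX_URL }}
    run: |
      python3 -m venv .
      source bin/activate
      python3 -m pip install -r requirements.txt
      ansible-galaxy collection install netbox.netbox
      ansible-playbook -i local.netbox.netbox.nb_inventory.yml lab-nightly.yml
```

This keeps the secret out of the rendered shell script, since the step reads it from its environment rather than from an expanded `export` line.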

With GitHub Actions, all workflows are listed alphabetically, and you can't use folders or trees to keep them organized. To keep things sane, I developed a naming convention:

{{ workflow_type }}: {{ description }}

From there, we need a way to point to Netbox as an inventory source. This requires a few files:

requirements.txt is the Python 3 pip package list - since everything runs in a virtual environment, only the Python packages in this list will be available.

    # Requirements without Version Specifiers
    pytz
    netaddr
    django
    jinja2
    requests
    pynetbox

    # Requirements with Version Specifiers
    ansible >= 8.4.0 # Mostly just don't use old Ansible (e.g. v2, v3)

The next step is to build an inventory file. This has to be named specifically for the plugin to work - `local.netbox.netbox.nb_inventory.yml`:

    plugin: netbox.netbox.nb_inventory
    validate_certs: False
    config_context: True
    group_by:
      - tags
    device_query_filters:
      - has_primary_ip: 'true'

- **Not featured here - The API Endpoint and API Token directives are handled by [GitHub Actions Secrets](https://docs.github.com/en/actions/security-guides/using-secrets-in-github-actions), and therefore don't need to be in this file.**

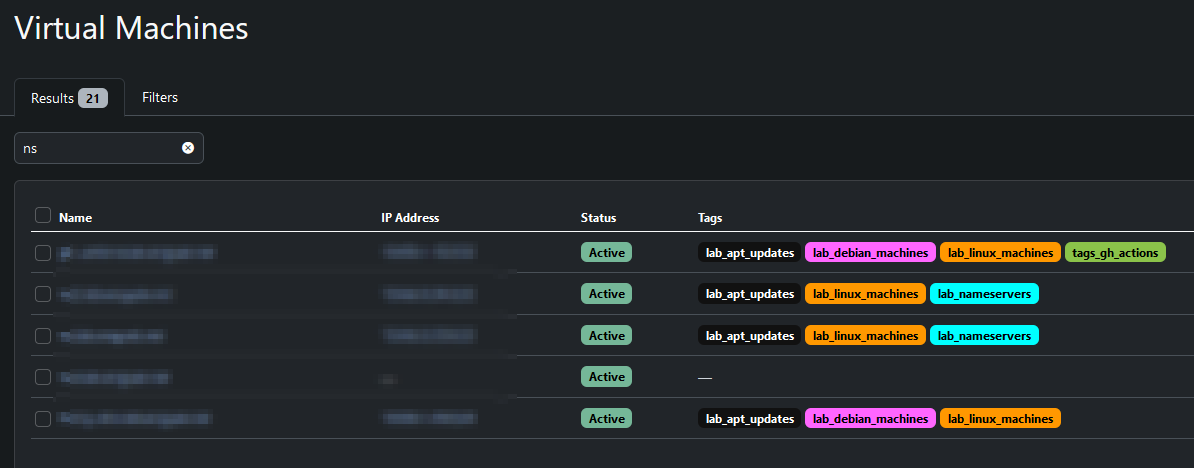

This file is pretty straightforward. It indicates that we should use Netbox tags to build our inventory, and we can assign multiple tags in the Netbox application to each Virtual Machine. I also added the `has_primary_ip` directive - if a machine doesn't get an IP address for some reason, Ansible won't try to reach that VM and patch it, which would otherwise cause late-night failures.
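The plugin also documents a separate `vm_query_filters` option that applies to virtual machines rather than devices. If VMs without an address still show up in the inventory, a variant like the following might be worth trying. This is a sketch and an assumption on my part, not the file used in this lab:

```yaml
plugin: netbox.netbox.nb_inventory
validate_certs: false
config_context: true
group_by:
  - tags
# Applied to Netbox devices
device_query_filters:
  - has_primary_ip: 'true'
# Assumption: the same filter is wanted for virtual machines
vm_query_filters:
  - has_primary_ip: 'true'
```

Running `ansible-inventory --graph` against either version is a quick way to see which hosts survive the filters.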

Here's a preview of the Netbox application with these tags:

![Netbox tags preview](netbox_preview.png)

Refactoring the Ansible playbooks was hilariously easy. The Netbox inventory plugin prepends the `group_by` field onto the group, so all I had to do in each playbook was prepend `tags_` to each name. Here's an example:

    - name: "Apt Machines"
      hosts: tags_lab_apt_updates
      tasks:
        - name: "Ansible Self-Test!"
          ansible.builtin.ping:
        - name: "Update Apt!"
          ansible.builtin.apt:
            name: "*"
            state: latest
            update_cache: true
    - name: "Apk Machines"
      hosts: tags_lab_apk_updates
      tasks:
        - name: "Ansible Self-Test!"
          ansible.builtin.ping:
        - name: "Update Apk!"
          # Alpine packages are managed by the community.general.apk module
          community.general.apk:
            available: true
            upgrade: true
            update_cache: true

After that, the CI tooling just takes care of it all for me!
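Adding another distribution family follows the same pattern: one more tag in Netbox, one more play. Here's a minimal sketch for dnf-based machines. The `lab_dnf_updates` tag is hypothetical and doesn't exist in my lab, and the play assumes the same connection privileges as the plays above:

```yaml
---
- name: "Dnf Machines"
  hosts: tags_lab_dnf_updates    # hypothetical tag, prefixed by the inventory plugin
  tasks:
    - name: "Ansible Self-Test!"
      ansible.builtin.ping:

    - name: "Update Dnf!"
      ansible.builtin.dnf:
        name: "*"
        state: latest
        update_cache: true

    # Matches the "nightly reboots" requirement; drop this task if reboots run elsewhere
    - name: "Nightly reboot"
      ansible.builtin.reboot:
```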

Retrospective

I'm going to stick with this method for a while. Netbox tagging makes inventory management much more intuitive, and I can develop tag "pre-sets" in my deployment pipeline to correctly categorize all the _stuff_ I deploy. Since it's effectively documentation, I have an easy place to put data I'll need to find later for those "what was I thinking?" moments.

I'll be honest - beyond that, I haven't really given it much thought. This approach requires zero attention to keep running, and it happens while I sleep. I haven't had any problems with it, and it allows me to focus my free time on things that are more important.

10/10 would recommend.
