Serverless Patterns

Marco

Posted on April 10, 2020

Serverless frees developers from the burden of managing infrastructure. When we go serverless, we no longer have to worry about machines, containers and, in general, traditional devops or sysadmin work. The benefits are lower operating costs and fewer headaches.

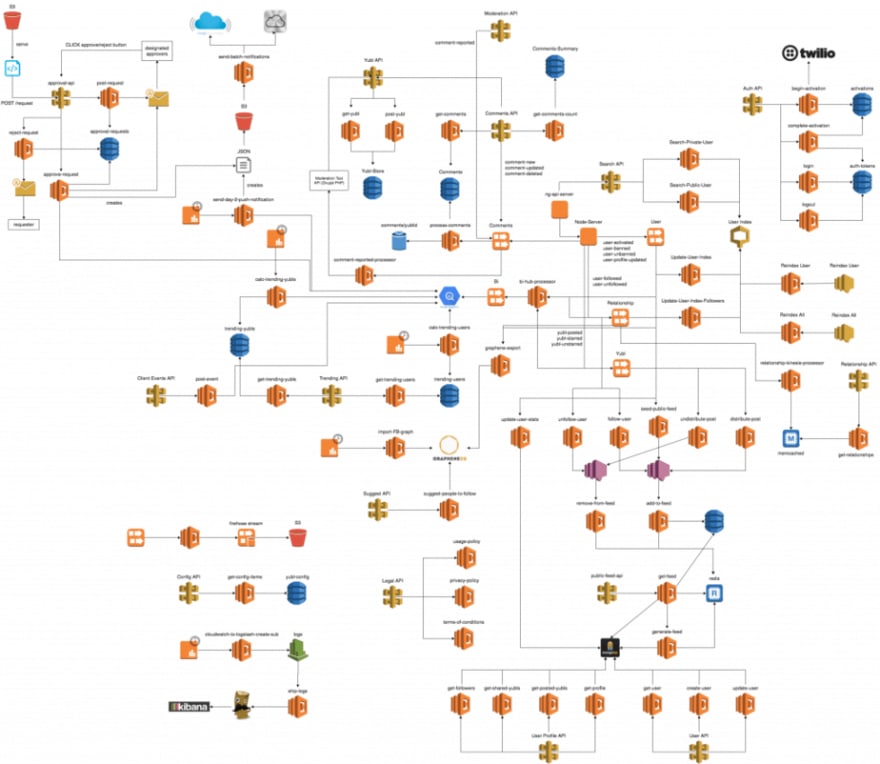

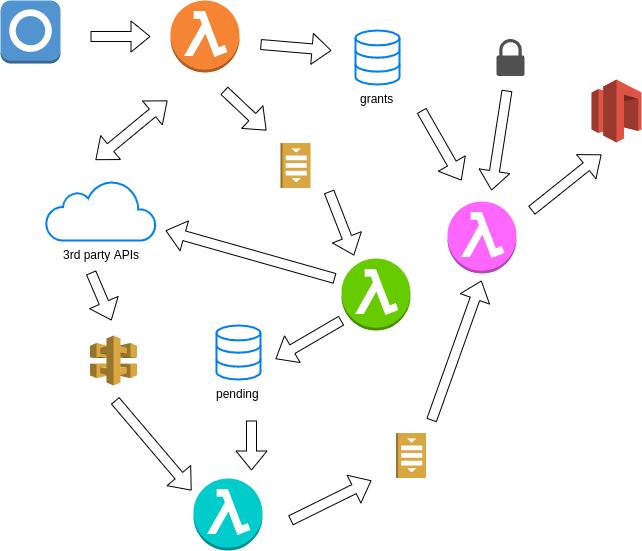

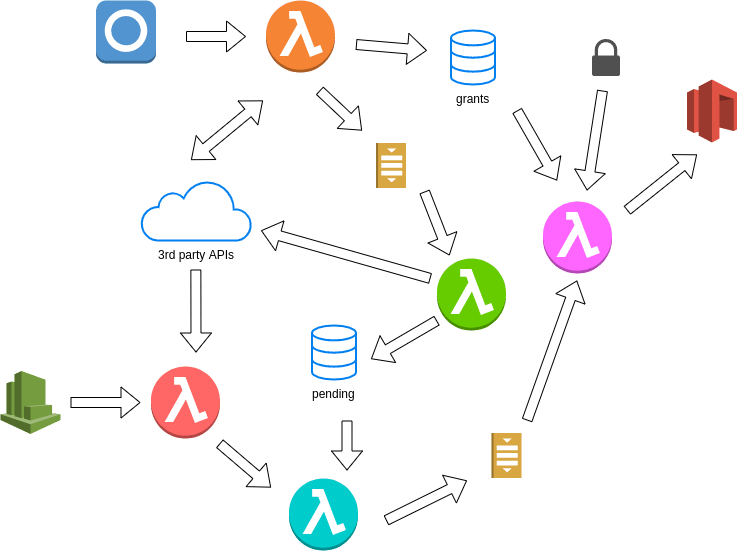

However, new problems arise. One of the most important is that Serverless is not configuration-less. As networks grow (and they grow quickly), configuration files explode in size. Try to imagine the pain of managing and understanding a network like this (from [1]).

We have a lot of tools that help us build and deploy Serverless networks, but working with large networks is still hard, error-prone and time-consuming.

In the words of Tim Wagner, the inventor of AWS Lambda [3]:

Configuration & AWS CloudFormation are still creating friction [...]

Whatever your position on recent approaches [...] it’s clear that CloudFormation and other vendor-provided options still aren’t nailing it.

When we solve complex problems, we usually split them into simpler ones. Can we use the "divide et impera" principle to tame the complexity of Serverless networks? Is it possible to decompose large networks into smaller ones? Can we build networks from reusable, more manageable units?

In a recent paper, Davide Taibi and colleagues have identified some common patterns for serverless networks used in industry [2].

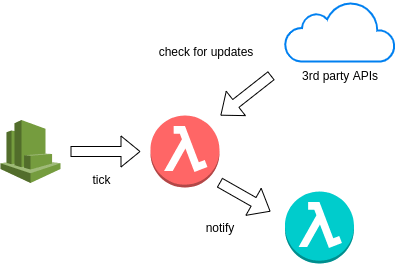

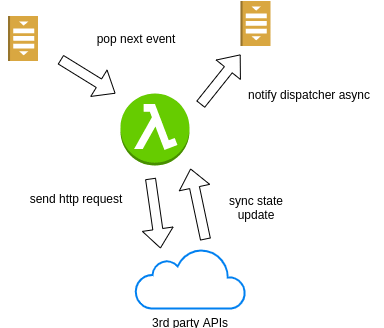

For example, this simple network is a Polling Event Processor (PEP) from [2]. It describes an architectural component for simulating real-time updates when an external system does not support them. The basic idea is the following. A clock periodically triggers a Lambda whose role is to check whether there are updates. If there are, a notification with the new state is sent to another Lambda.

We can think of the PEP as a Lego brick, that is, a unit of composition. Like a Lego brick, a PEP can be used in different contexts and more than once.

If we reason in terms of network patterns rather than individual links between lambdas and resources, as most tools do, a complex Serverless network can be seen as a composition of simpler networks. I believe that, in this way, working with large networks would not be that hard.

Here, following Taibi's approach, I would like to talk about some other patterns I came across while working with Alexa Home Skills. Then, I'll show how we can reuse patterns across different network configurations using a simple composition mechanism.
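The polling idea can be sketched in a few lines. This is only an illustration under stated assumptions: `make_poller`, `fetch_state` and `notify` are hypothetical names, and the last seen state is kept in memory, whereas a real Lambda would have to keep it in external storage because it does not retain state between invocations.

```python
from typing import Callable, Optional


def make_poller(fetch_state: Callable[[], dict],
                notify: Callable[[dict], None]) -> Callable[[], bool]:
    """Build the handler that the periodic trigger (the clock) invokes.

    fetch_state and notify are hypothetical callables standing in for
    the external system and for the downstream Lambda.
    """
    last_seen: Optional[dict] = None  # assumption: in-memory, for illustration only

    def poll() -> bool:
        nonlocal last_seen
        current = fetch_state()
        if current == last_seen:
            return False          # nothing changed, stay silent
        last_seen = current
        notify(current)           # forward the new state downstream
        return True

    return poll
```

Note that, in this sketch, the very first poll always notifies, since no previous state is known yet.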

A case study: Serverless patterns for Alexa

Let's introduce how Alexa works. When you utter a voice command, your utterance is interpreted by Amazon cloud services. Then, a structured event is sent to a custom AWS Lambda. In this Lambda, you implement the logic corresponding to the voice command. That's it.

There are two kinds of responses you can send back to Alexa from your Lambda: a Response or a DeferredResponse. Here, we consider only the latter because it allows us to build an interesting scenario.

A DeferredResponse is equivalent to an HTTP 202 response. We tell Alexa that we got the event, but we are not ready to return a response. We will notify Alexa (within 6 seconds) in an asynchronous way. More precisely, AlexaResponses are sent to an AlexaGateway, represented by the red gate in the picture below.

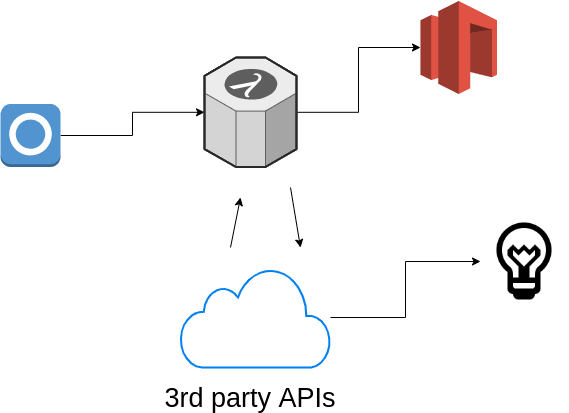

Typically, when you implement the custom logic for a Lambda, you interface with existing 3rd party APIs. For example, consider this simple use case.

Here, we want to map Alexa events to HTTP requests for the APIs of a bulb's manufacturer. So, when we receive a TurnOn directive, we switch on the lights.

By definition, we do not control 3rd party APIs. Hence, we need to adapt our infrastructure to different technical needs.

Here, we consider two kinds of APIs: sync and async. Sync APIs contain an updated state in their HTTP responses, e.g. when we send a "turn on" command to the APIs, we get back a response with the new state of the bulb (e.g. on) or an error. Async APIs, instead, respond with a 202 status code, i.e. the command has been received but the system state is not updated synchronously. Besides, we can have even more complex scenarios: some APIs have push notification mechanisms, others don't.

It is clear that we need to make different infrastructure choices depending on whether APIs are sync or async, whether they have push notifications, and so on. On top of that, we would like to reuse as much infrastructure as we can across different projects.

Functional patterns

A functional pattern describes the function or role of a subnetwork in the context of a larger network. What are the functional patterns for Alexa? Let's see.

The entrypoint

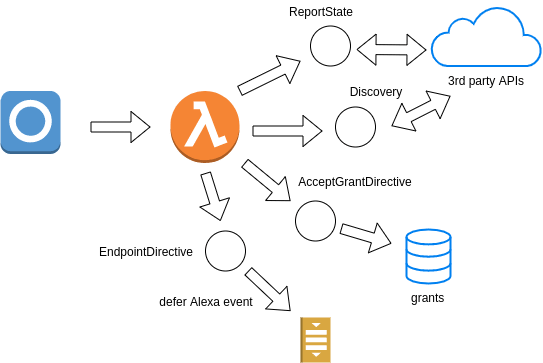

The entrypoint is a simple component whose aim is to accept Alexa events. It acts like a router from [2] in the sense that it "distribute[s] the execution based on payload".

- If the Alexa event is an AcceptGrantDirective, then it returns an AcceptGrantResponse, synchronously. We will see what it means later.
- If it is a ReportState, it returns a ReportState (i.e. the current state of a device), synchronously.
- If it is a Discover, it returns a DiscoverEvent (i.e. a list of available devices), synchronously.
- Otherwise, it returns a DeferredResponse and forwards the event to a queue so that it can be processed by another Lambda asynchronously.
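The routing rules above could be sketched as follows. This is a minimal illustration, not the actual skill code: the event shape is simplified to `directive.header.name`, the reply payloads are reduced to a `type` label using this post's names, and `enqueue` is a hypothetical callable standing in for the queue of deferred events.

```python
from typing import Callable

# Directives answered synchronously, mapped to the reply names used in this post.
SYNC_REPLIES = {
    "AcceptGrant": "AcceptGrantResponse",
    "ReportState": "ReportState",
    "Discover": "DiscoverEvent",
}


def entrypoint(event: dict, enqueue: Callable[[dict], None]) -> dict:
    """Route an Alexa event based on its payload."""
    name = event["directive"]["header"]["name"]
    if name in SYNC_REPLIES:
        # Handled right away; a real handler would build a full payload here.
        return {"type": SYNC_REPLIES[name]}
    # Everything else is deferred: queue it for asynchronous processing.
    enqueue(event)
    return {"type": "DeferredResponse"}
```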

The sync processor

The sync-processor takes an event from the queue of deferred events and builds an HTTP request for sync APIs. Since APIs are sync, an HTTP response contains the system's updated state and so the sync-processor can also build a proper AlexaResponse. The AlexaResponse will be sent over to the dispatcher. In other words, a sync-processor is a combination of proxy, fifo and router patterns described in [2].
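As a rough sketch of this flow, assuming a simplified event shape and hypothetical `call_api` and `dispatch` callables (the first stands in for the manufacturer's sync API, the second for the hand-off to the dispatcher):

```python
from typing import Callable


def sync_processor(event: dict,
                   call_api: Callable[[dict], dict],
                   dispatch: Callable[[dict], None]) -> dict:
    """Process one deferred event against a sync API."""
    # Hypothetical mapping from the Alexa directive to an API request.
    request = {"command": event["directive"]["header"]["name"]}
    try:
        # A sync API returns the updated state in its HTTP response.
        new_state = call_api(request)
        response = {"type": "AlexaResponse", "state": new_state}
    except Exception as error:
        # The API may also answer with an error, which we report back.
        response = {"type": "AlexaErrorResponse", "message": str(error)}
    dispatch(response)
    return response
```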

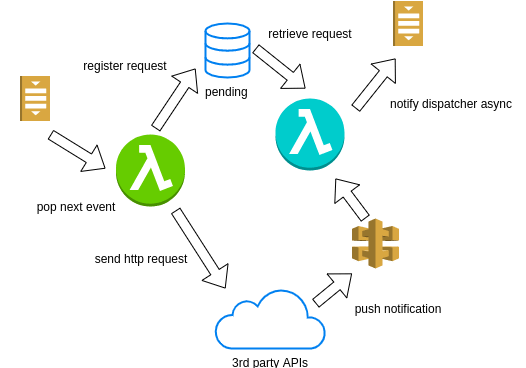

The async processor

The async-processor takes an event from the queue of deferred events and builds HTTP requests for async APIs. However, it cannot build an AlexaResponse because the HTTP response from the APIs is only a 202 response. We need to store the information that we are waiting for an async response from the APIs. So, the async-processor will store this information (i.e. Alexa event, HTTP requests and status) in a database. In this way, when the APIs notify the system of a state update, another Lambda will be able to build a proper AlexaResponse to be sent over. I do not see this pattern in [2], but it is a common pattern in distributed systems.
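The two halves of this pattern might look like the sketch below. It rests on assumptions that are not in the original design: the async API is supposed to return a correlation id with its 202 response, the notification is supposed to carry that id under `request_id`, and a plain dictionary (`pending`) stands in for the database.

```python
from typing import Callable, Optional


def async_processor(event: dict,
                    call_api: Callable[[dict], str],
                    pending: dict) -> str:
    """Send the command and remember that we are waiting for its outcome."""
    request = {"command": event["directive"]["header"]["name"]}
    request_id = call_api(request)  # assumption: the 202 response carries an id
    pending[request_id] = {"event": event, "request": request, "status": "PENDING"}
    return request_id


def on_state_update(notification: dict,
                    pending: dict,
                    dispatch: Callable[[dict], None]) -> Optional[dict]:
    """Second Lambda: turn a state update into an AlexaResponse."""
    record = pending.pop(notification["request_id"], None)
    if record is None:
        return None  # not something we were waiting for
    response = {
        "type": "AlexaResponse",
        "event": record["event"],
        "state": notification["state"],
    }
    dispatch(response)
    return response
```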

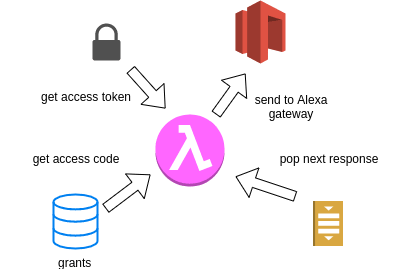

The dispatcher

When a DeferredResponse is sent back to Alexa, we have 6 seconds to return an AlexaResponse, asynchronously. Asynchronous responses are sent to the AlexaGateway.

So, when a sync-processor or an async-processor has built an AlexaResponse, the response should be sent to the AlexaGateway. However, it is not that simple. We need to be authorized by the Alexa user.

Explaining the details of how it works is out of scope for this post. If you are interested, you can read the Alexa documentation.

In a few words, the user must have already authorized push notifications by sending an AcceptGrantDirective. We need to keep the grant code somewhere (here, for simplicity's sake, we will store it in a table). Then, when we need to send an AlexaResponse to the AlexaGateway, we have to obtain an access token using the grant code we stored previously. Then, we can send the AlexaResponse with the access token to the AlexaGateway.

Does it sound complicated? Not that much. Fortunately, this is the job of the dispatcher pattern.
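The steps just described can be sketched as follows. This follows the simplified description in this post rather than the full authorization flow, and every name is hypothetical: `grants` stands in for the table of grant codes, `exchange_grant` for the call that obtains an access token, and `send_to_gateway` for the HTTP call to the AlexaGateway.

```python
from typing import Callable


def make_dispatcher(grants: dict,
                    exchange_grant: Callable[[str], str],
                    send_to_gateway: Callable[[dict, str], None]
                    ) -> Callable[[str, dict], None]:
    """Build a dispatcher bound to a grant table and two outbound calls."""

    def dispatch(user_id: str, alexa_response: dict) -> None:
        # 1. Look up the grant code stored when the user authorized us.
        grant_code = grants.get(user_id)
        if grant_code is None:
            raise PermissionError(f"no grant stored for user {user_id}")
        # 2. Obtain an access token using the grant code.
        access_token = exchange_grant(grant_code)
        # 3. Send the response, together with the token, to the gateway.
        send_to_gateway(alexa_response, access_token)

    return dispatch
```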

Polling Event Processor (from [2])

If APIs have push notifications, then we only need an HTTP gateway that invokes the second Lambda in the async-processor component. In this way, an AlexaResponse is built and sent over when the APIs notify a state update.

If APIs don't have push notifications, we need another component. The Polling Event Processor defined in [2] is exactly what we should use.

Composing sub-networks

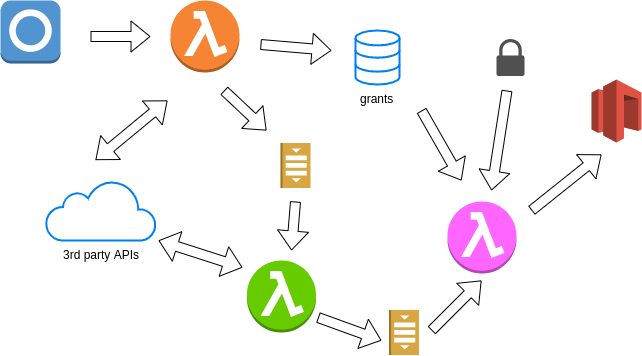

Now we have some reusable patterns. Let's see how we can glue them together in order to implement different use cases.

The composition mechanism is pretty simple: you compose two networks overlapping entities with the same names/colors. In this way, from the patterns we defined above, we can define different network configurations reusing the same components and code.
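To make the overlapping idea concrete, here is a minimal sketch in which a network is just a set of named nodes and a set of directed links, and composing two networks is the union of both. The node names (`deferred-events`, `responses`, and so on) are made up for the illustration and are not taken from the diagrams.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Network:
    nodes: frozenset
    edges: frozenset


def network(*edges: tuple) -> Network:
    """Build a network from (source, target) links between named entities."""
    nodes = frozenset(name for edge in edges for name in edge)
    return Network(nodes, frozenset(edges))


def overlay(left: Network, right: Network) -> Network:
    """Compose two networks: entities with the same name are merged."""
    return Network(left.nodes | right.nodes, left.edges | right.edges)


# Hypothetical patterns, sharing the names of the entities where they meet.
entrypoint = network(("alexa", "entrypoint"), ("entrypoint", "deferred-events"))
sync_processor = network(("deferred-events", "sync-processor"),
                         ("sync-processor", "responses"))
dispatcher = network(("responses", "dispatcher"), ("dispatcher", "alexa-gateway"))

sync_network = overlay(overlay(entrypoint, sync_processor), dispatcher)
```

Because the composition is a plain union, the order in which patterns are overlaid does not matter, and overlaying a pattern with itself changes nothing.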

First, if APIs are synchronous, we can use the sync-processor composed with the entrypoint and the dispatcher.

Instead, if APIs are asynchronous, we need the async-processor. In this version, we assume that APIs have push notifications, so we are updated about new state changes using an API gateway.

In the last example, APIs are asynchronous, but they do not have push notifications. As discussed above, we can simulate real-time updates using polling.

In an upcoming post, we will describe the composition mechanism in more detail. However, even without going too deep into the technicalities, you can see how simple it is. As an exercise, you can imagine more network configurations that reuse the same components and implement new ones.

Some suggestions:

- An optimistic-processor is a processor for async APIs that acts like the async-processor, assuming that the async APIs will update the state as we expect.
- What are the possible concerns with simulating push notifications using polling in a Serverless context? Can you imagine a better solution?
- What if we store access grants on a local hub within users' premises?

Conclusions

Building networks is a well-known problem in Serverless computing. Here, we show how we can build networks from smaller ones. In this way, we can share and reuse architectural components more easily.

Acknowledgements

Thanks to the Purepoint team for their criticism and comments. This material is an adaptation of an internal tech talk I gave a few weeks ago. Besides, if you want to check it out, I have an incomplete WIP PoC where I tried to explain these ideas with code.

Credits

Diagrams made with Drawio.

The idea of overlapping graphs comes from Algebraic Graphs.

References

[1] Yan Cui, "Yubl's Road to Serverless Architecture", 2016

[2] Davide Taibi, "Serverless Patterns", 2020 (via off-by-none)

[3] Tim Wagner, "The State of Serverless, circa 2019", 2019
