Getting Started with Lambda functions, SLS and Node

Mikhail Levkovsky

Posted on September 2, 2019

At work my team had an interesting task of processing certain files daily as they were uploaded to an S3 bucket.

Instead of having an application deployed and perpetually running in the background, we decided to try out AWS Lambda.

This article will give an overview of how we setup our Lambda from beginning to end. I'll review how we manage the cloud formation stack with SLS, explore ways to set it up for different environments, and finally go over its deployment.

First, you'll need a few things:

- An AWS account

- Node v8.x (at a minimum) installed on your machine

- AWS CLI (Command Line Interface) installed on your machine

- SLS CLI installed on your machine

In order to manage our Lambda deployment successfully, I decided to use the Serverless Library. This library is extremely powerful and allows us to essentially manage our entire stack with YAML files.

In our use case, we wanted to create an S3 bucket that would trigger a specific method in a Lambda function upon receiving a file. All of this is defined in a few lines of a YAML file. Let's take a look.

First, in our project we added a serverless.yml file with the following parameters:

pssst I tweet about code stuff all the time. If you have questions about how to level up your dev skills give me a follow @mlevkov

The first part of the serverless.yml file establishes some basic requirements (i.e. what region in AWS to deploy, your AWS profile, etc…), the second part is where the fun starts.

This file declares that the s3Handler function inside of the app file will be triggered when a .csv file is created in the mybucket S3 bucket.

The last part of the file declares the plug-ins, which allow us to use TypeScript and run our serverless deployment locally.

To give you an idea of the code that will be processing the files, here is a simplified set of snippets to demonstrate the flow:

app.ts

app/controllers/S3Controller

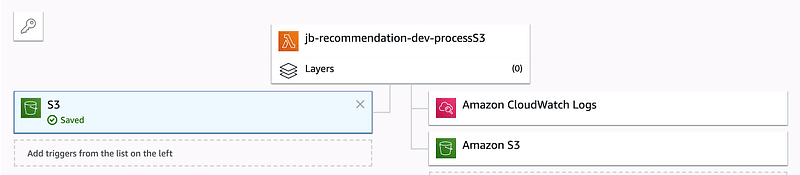

And what does this deployment look like once it's on AWS?

On the left you have the S3 trigger which is activated when .csv files are uploaded. In the middle you have the jb-recommendation Lambda, and on the right you have your Amazon CloudWatch Logs and the S3 bucket where your Lambda function will be uploaded to.

Deployment

SLS makes deployment easy as pie.

First, let's setup your local AWS profile:

aws configure - profile localdev

AWS Access Key ID [None]: <ENTER YOUR ACCESS KEY>

AWS Secret Access Key [None]: <ENTER YOUR SECRET KEY>

Default region name [None]: <ENTER 'us-east-1'>

Default output format [None]: <ENTER 'text'>

After which, you just run sls deploy and you're good to go.

Environment variables

What we did earlier will deploy your application, but chances are that you'd want some environment specific variables to isolate development, QA, and production environments.

Here's how I recommend introducing these:

The first step is to create a folder called configurations and create 3 separate YAML files:

- dev

- qa

- prod

We won't add anything too complicated to these files, just a change in

the Node environment to ensure that our environments work as expected.

/configuration/dev

NODE_ENV: 'development'

profile: 'localdev'

region: 'us-west-2'

stage: 'dev'

/configuration/qa

NODE_ENV:'qa'

profile: 'qa'

region: 'us-west-2'

stage: 'qa'

/configuration/prod

NODE_ENV:'prod'

profile: 'prod'

region: 'us-west-2'

stage: 'prod'

Now that we have separate environment variables, let's modify our serverless file to use them.

We changed our serverless file to also include custom variables such as stage and configuration. Now when we deploy, we can specify the stage which in turn will select the appropriate configuration file:

To toggle environments, all we have to do is add the -s [env] flag as follows:

sls deploy -s [dev/qa/prod]

The -s stands for the stage you want to deploy.

This will automagically create everything necessary for your entire CloudFormation infrastructure. It creates the S3 bucket, creates the S3 trigger events, deploys our Lambda function (hosted in a different s3 bucket) and adds the cloud formation logs.

With a few YAML files we were able to deploy our Node application, create our S3 buckets, and setup the right events for 3 separate environments. Hopefully this article helps to provide context around when and how to integrate Lambda into your stack.

If you want to level up your coding skills, I'm putting together a playbook that includes:

30+ common code smells & how to fix them

15+ design pattern practices & how to apply them

20+ common JS bugs & how to prevent them

Get early access to the Javascript playbook.

Posted on September 2, 2019

Join Our Newsletter. No Spam, Only the good stuff.

Sign up to receive the latest update from our blog.