Mariano Ramborger

Posted on May 3, 2021

One of the key features of cloud computing is scalability – the capability to scale a system according to demand.

The ability to add (or remove) the EC2 instances that contain and serve our applications is a powerful tool, but it can’t reach its full potential unless there is a way to distribute the incoming requests among them.

No sense in having 10 web servers if all the traffic ends up in server #1. Introducing a “Load Balancer” will help us avoid this scenario.

Elastic Load Balancer

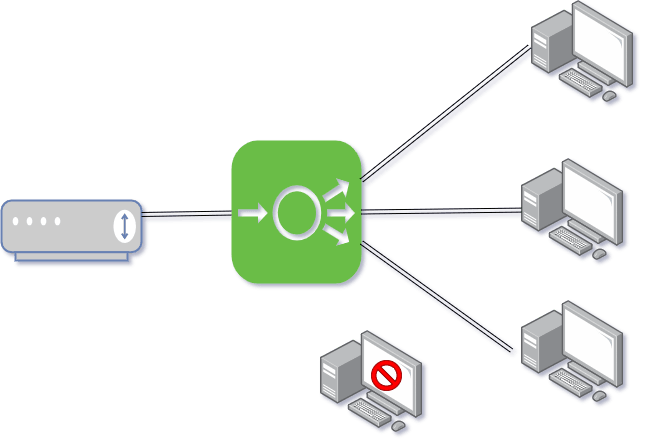

Load balancing is, simply put, the process of distributing the workload among your resources, to avoid overworking your infrastructure, and to maximize its efficiency.

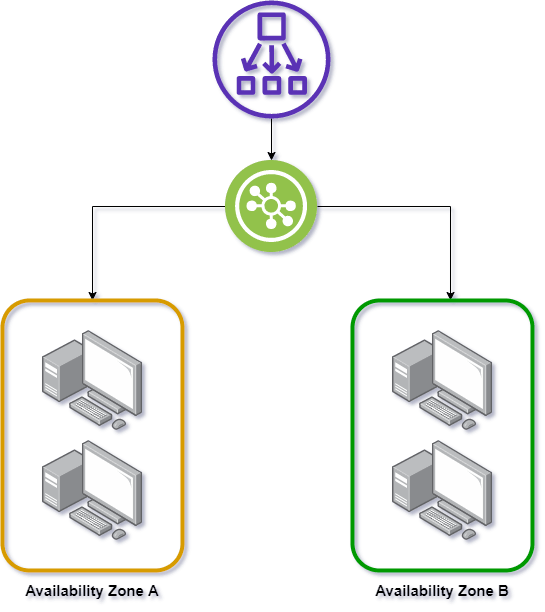
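To make the idea concrete, here is a minimal sketch of the simplest distribution strategy, round robin, in which each request goes to the next server in turn. The server names are hypothetical, and this is a toy illustration rather than how any AWS service is implemented.

```python
from collections import Counter
from itertools import cycle


def round_robin(servers, request_count):
    """Hand each request to the next server in turn; return per-server counts."""
    rotation = cycle(servers)
    return Counter(next(rotation) for _ in range(request_count))


# Hypothetical server names, for illustration only.
servers = ["web-1", "web-2", "web-3"]
print(round_robin(servers, 9))
```

With nine requests and three servers, each server ends up handling three of them instead of one server handling all nine.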

AWS offers us Elastic Load Balancer (ELB), a managed service that can automatically distribute traffic among our resources, even in different availability zones. Positioned as a single point of contact for clients, ELB can sort their requests and route them to the appropriate targets. ELB can even monitor the health of our resources, and avoid routing to unhealthy ones.

AWS gives us, however, a few options when it comes to setting up our Load Balancer.
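The health-aware part of that behavior can be sketched in a few lines. The `Target` and `ToyBalancer` classes below are hypothetical names invented for this example, and the `healthy` flag stands in for the result of a real health check. It is a simplified model, not the actual ELB logic.

```python
from dataclasses import dataclass


@dataclass
class Target:
    name: str
    zone: str             # availability zone label, illustrative only
    healthy: bool = True  # stands in for the outcome of a health check


class ToyBalancer:
    """Round-robin over targets, skipping any that are marked unhealthy."""

    def __init__(self, targets):
        self.targets = list(targets)
        self._next = 0

    def route(self):
        # Try each target at most once per call.
        for _ in range(len(self.targets)):
            target = self.targets[self._next % len(self.targets)]
            self._next += 1
            if target.healthy:
                return target
        raise RuntimeError("no healthy targets available")


targets = [
    Target("web-1", "zone-a"),
    Target("web-2", "zone-b"),
    Target("web-3", "zone-a"),
]
balancer = ToyBalancer(targets)
targets[1].healthy = False  # pretend web-2 failed its health check
print([balancer.route().name for _ in range(4)])
# prints ['web-1', 'web-3', 'web-1', 'web-3']
```

Traffic keeps flowing to the two healthy targets, and `web-2` would start receiving requests again as soon as its flag flips back.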

Application Load Balancer

This Load Balancer is used to manage HTTP and HTTPS traffic. It works on layer 7 of the OSI model, which means it’s application-aware. Thanks to this, it supports advanced request routing, letting us target web servers based on request attributes such as the host, the path, or the headers.

But how does it work?

An Application Load Balancer is composed of “Listeners” and “Target Groups”.

Listeners, well… listen for requests from clients on certain ports, which you can define. You can configure a series of rules for the listeners, which will define how they route those requests. Each rule is composed of a priority, conditions, and actions to be performed when those conditions are met.

Target groups are collections of registered possible destinations (EC2 instances, for example) to which requests can be routed. Targets can be dynamically added or removed without interrupting the workflow. Health checks can be configured at the group level.
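A rough way to picture listener rules is as a list that gets checked in priority order, lowest number first, with a default action when nothing matches. The `Rule` class, the request dictionary shape, and the target group names below are all assumptions made for this sketch, not the AWS API.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    priority: int                     # lower numbers are evaluated first
    condition: Callable[[dict], bool]
    action: str                       # here: name of the target group to forward to


def pick_target_group(rules, request, default):
    """Return the action of the first matching rule, or the default."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if rule.condition(request):
            return rule.action
    return default


# Hypothetical rules: one path-based, one header-based.
rules = [
    Rule(20, lambda req: "Mobile" in req["headers"].get("User-Agent", ""), "mobile-servers"),
    Rule(10, lambda req: req["path"].startswith("/api/"), "api-servers"),
]

request = {"path": "/api/orders", "headers": {"User-Agent": "Mobile"}}
print(pick_target_group(rules, request, default="web-servers"))
# prints api-servers
```

Both rules match this request, but the one with priority 10 wins because it is evaluated first.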
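As a toy model of a target group, the sketch below keeps a set of registered targets and applies a single health check to all of them. The `TargetGroup` class and the `status` dictionary are hypothetical. A real health check would probe each target over the network instead of reading a dictionary.

```python
class TargetGroup:
    """A named set of targets sharing one health check."""

    def __init__(self, name, health_check):
        self.name = name
        self.health_check = health_check  # one check for the whole group
        self.targets = set()

    def register(self, target):
        self.targets.add(target)

    def deregister(self, target):
        self.targets.discard(target)

    def healthy_targets(self):
        return sorted(t for t in self.targets if self.health_check(t))


# Stand-in for real probes: a lookup table of instance states.
status = {"i-aaa": True, "i-bbb": False, "i-ccc": True}

group = TargetGroup("web-servers", health_check=lambda t: status.get(t, False))
for instance_id in ("i-aaa", "i-bbb", "i-ccc"):
    group.register(instance_id)

print(group.healthy_targets())  # prints ['i-aaa', 'i-ccc']
group.deregister("i-ccc")       # targets can be removed at any time
print(group.healthy_targets())  # prints ['i-aaa']
```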

Network Load Balancer

Working on the 4th OSI layer (Transport), this balancer is a high-performance option for TCP and UDP connections.

The general workflow is similar to that of the Application Load Balancer. Listeners can be configured to check client requests and forward them to target groups, even across different availability zones.
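At layer 4 there are no paths or headers to inspect, so a balancer typically decides based on the connection's addressing fields. One common approach is to hash those fields so that every packet of the same connection lands on the same target. The sketch below illustrates that general idea with a hypothetical `pick_target` function. It is a simplification and should not be read as the exact algorithm AWS uses.

```python
import hashlib


def pick_target(flow, targets):
    """Map a connection tuple onto one target, deterministically."""
    key = "|".join(str(part) for part in flow).encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:8], "big") % len(targets)
    return targets[index]


targets = ["10.0.1.10", "10.0.2.10", "10.0.3.10"]  # illustrative private IPs

# (protocol, source IP, source port, destination IP, destination port)
flow = ("tcp", "203.0.113.7", 51544, "198.51.100.1", 443)

first = pick_target(flow, targets)
second = pick_target(flow, targets)
print(first == second)  # prints True
```

Because the choice depends only on the flow tuple, no per-connection state is needed to keep a connection pinned to its target.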

Classic Load Balancer

Neither the fastest nor the fanciest choice. In truth, it’s a legacy option, and one AWS advises you to move away from, if possible. It supports some layer-7 features, particularly the X-Forwarded-For header (which identifies the IP address of the original client, rather than the Load Balancer’s) and sticky sessions. It also supports layer-4 load balancing.
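On the application side, reading that header might look like the sketch below. By convention the header holds a comma-separated list with the original client first. The function name and the plain-dictionary headers are assumptions for this example, and real frameworks usually expose headers through their own case-insensitive objects.

```python
def original_client_ip(headers, peer_ip):
    """Return the first X-Forwarded-For entry, or the peer address if absent."""
    forwarded = headers.get("X-Forwarded-For", "")
    first = forwarded.split(",")[0].strip()
    return first or peer_ip


# The connection itself comes from the load balancer (10.0.0.5 here).
print(original_client_ip({"X-Forwarded-For": "203.0.113.7, 10.0.0.5"}, "10.0.0.5"))
# prints 203.0.113.7
print(original_client_ip({}, "10.0.0.5"))
# prints 10.0.0.5
```

Keep in mind that clients can send this header themselves, so it should only be trusted when the request is known to have come through the load balancer.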

In a future post, we’ll go over the steps required to implement an Application Load Balancer, and how to maximize the advantages it can bring us.
