AWS Lambda with Localstack

Mairon Costa

Posted on May 26, 2020


For Portuguese, click here.

Nowadays it's very common to see people using cloud platforms such as AWS, Azure, and Google Cloud, the best-known "clouds". As with any other development work, it's very important to test your code before putting it into production, and that raises questions such as "How can I test code that runs in production?" or "How can I update working code without breaking it?". This post was written for those cases. The main goal is to explain how to run an AWS Lambda function on a local machine using PyCharm, Python, and Localstack.

So, let's go!

Setup

- Ubuntu 20.04 LTS;

- IDE: PyCharm Professional;

- Python 3.8;

- Docker;

- AWS SAM CLI: required for the IDE to work with AWS services;

- AWS CLI: You can use real credentials (as described here), or dummy ones. Localstack requires that these values are present, but it doesn’t validate them;

- Serverless framework;
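Since Localstack only checks that credentials are present, placeholder values are enough. As a minimal sketch, the same setup can be done from Python by exporting the standard AWS environment variables before any client is created. The value "test" and the helper name `dummy_aws_env` are arbitrary choices made for this illustration, not something Localstack requires.

```python
import os


def dummy_aws_env(region="us-east-1"):
    """Build placeholder AWS settings; Localstack does not validate them."""
    return {
        "AWS_ACCESS_KEY_ID": "test",        # arbitrary placeholder
        "AWS_SECRET_ACCESS_KEY": "test",    # arbitrary placeholder
        "AWS_DEFAULT_REGION": region,
    }


# setdefault keeps any real credentials that are already exported.
for name, value in dummy_aws_env().items():
    os.environ.setdefault(name, value)
```

Using `setdefault` rather than plain assignment means real credentials that are already configured in the shell are left untouched.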

Configuration

AWS CLI

To install the AWS CLI, follow the official AWS material, click here.

AWS SAM CLI

The AWS docs say that the AWS SAM CLI should be installed with Homebrew, but in some cases Homebrew itself is hard to install. With that in mind, there is another way to install it: get the source code from the GitHub repository here.

$ git clone https://github.com/awslabs/aws-sam-cli.git

$ cd aws-sam-cli

$ sudo python3.8 ./setup.py build install

$ sam --version

The final output:

SAM CLI, version 0.47.0

Localstack Docker

At this point, the "docker-compose.yml" file has to be created at the root of the project. With it, Localstack can be run from a Docker container.

$ touch docker-compose.yml

docker-compose.yml

version: '2.1'
services:
  localstack:
    image: localstack/localstack
    ports:
      - "4567-4597:4567-4597"
      - "${PORT_WEB_UI-8080}:${PORT_WEB_UI-8080}"
    environment:
      - SERVICES=${SERVICES- }
      - DEBUG=${DEBUG- }
      - DATA_DIR=${DATA_DIR- }
      - PORT_WEB_UI=${PORT_WEB_UI- }
      - LAMBDA_EXECUTOR=${LAMBDA_EXECUTOR- }
      - KINESIS_ERROR_PROBABILITY=${KINESIS_ERROR_PROBABILITY- }
      - DOCKER_HOST=unix:///var/run/docker.sock
    volumes:
      - "${TMPDIR:-/tmp/localstack}:/tmp/localstack"
      - "/var/run/docker.sock:/var/run/docker.sock"

Now, let's start the containers with this command:

$ docker-compose up

Now it's possible to access different AWS services through different ports on your local machine. For now, let's test the AWS S3 service.

$ curl -v http://localhost:4572

Part of the output:

<ListAllMyBucketsResult xmlns="http://s3.amazonaws.com/doc/2006-03-01">
  <Owner>
    <ID>bcaf1ffd86f41161ca5fb16fd081034f</ID>
    <DisplayName>webfile</DisplayName>
  </Owner>
  <Buckets></Buckets>
</ListAllMyBucketsResult>

This test can be done in the web browser, too. Just copy the address and paste it into the browser's address bar, or click here.
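The containers can take a few seconds to start, so a request sent too early fails even though nothing is wrong. The sketch below is one way to script the check: it polls a TCP port until something accepts the connection. The function name `wait_for_port` and the timeout values are assumptions made for this example; it only confirms that the port is open, not that the service behind it is fully initialized.

```python
import socket
import time


def wait_for_port(host, port, timeout=30.0, interval=0.5):
    """Return True once host:port accepts TCP connections, False on timeout."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # create_connection raises OSError while nothing is listening yet.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)


# Usage, assuming Localstack is starting on this machine:
#   if wait_for_port("localhost", 4572):
#       print("S3 endpoint is accepting connections")
```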

Some AWS service ports in Localstack

- S3: 4572

- DynamoDB: 4570

- CloudFormation: 4581

- Elasticsearch: 4571

- ES: 4578

- SNS: 4575

- SQS: 4576

- Lambda: 4574

- Kinesis: 4568
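When several services are used from the same script, it can help to keep these ports in one place. The following is a small sketch, assuming the port numbers listed above; the names `LOCALSTACK_PORTS` and `endpoint_url` are made up for this example and are not part of Localstack or boto3.

```python
# Port numbers copied from the list above; they reflect the Localstack
# setup used in this article and may differ in other versions.
LOCALSTACK_PORTS = {
    "s3": 4572,
    "dynamodb": 4570,
    "cloudformation": 4581,
    "elasticsearch": 4571,
    "es": 4578,
    "sns": 4575,
    "sqs": 4576,
    "lambda": 4574,
    "kinesis": 4568,
}


def endpoint_url(service, host="localhost"):
    """Build the local endpoint URL for a service name such as "s3"."""
    try:
        port = LOCALSTACK_PORTS[service.lower()]
    except KeyError:
        raise ValueError("unknown service: {}".format(service)) from None
    return "http://{}:{}".format(host, port)


print(endpoint_url("s3"))   # http://localhost:4572
```

The returned string is the kind of value passed as `endpoint_url` to a boto3 client or as `--endpoint-url` to the AWS CLI.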

PyCharm with AWS Lambda

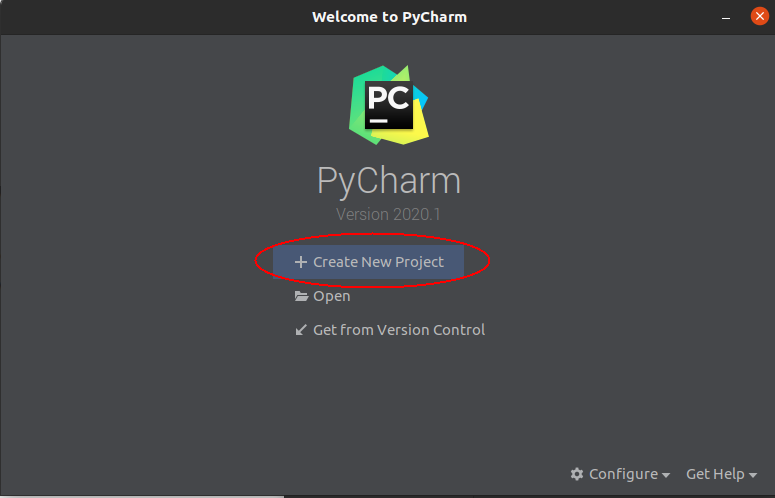

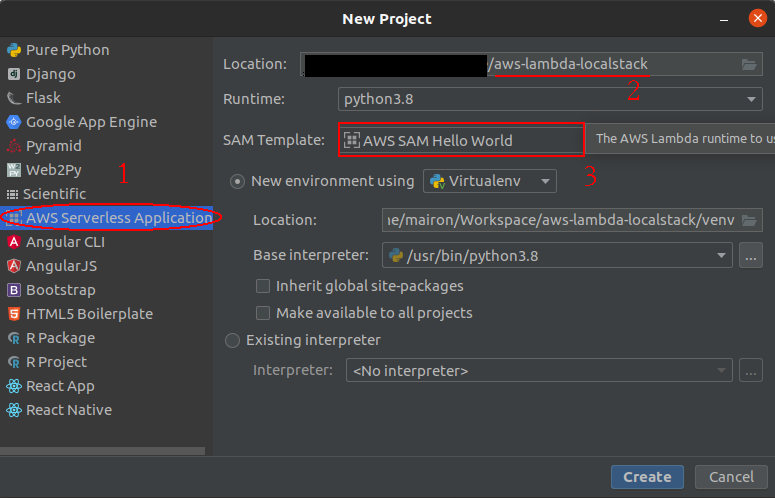

Once docker-compose is up and running, it's time to create the project. Let's create a new project in PyCharm:

- Creating a new project

In step 3, it's very important to check that the "SAM CLI" was automatically recognized.

- Creating the main file, lambda_function.py, in the root of the project.

import json
import urllib.parse

import boto3

# print('Loading function')

# Replace [YOUR_IP] with your machine's IP address.
HOST = "http://[YOUR_IP]"

# Create the service clients.
# In production, "endpoint_url" and "region_name" do not need to be set.
s3 = boto3.client('s3',
                  endpoint_url=HOST + ":4572",
                  region_name="us-east-1")
sqs = boto3.client('sqs',
                   endpoint_url=HOST + ":4576",
                   region_name="us-east-1")


def lambda_handler(event, context):
    # print("Received event: " + json.dumps(event, indent=2))

    # Get the bucket name and the object key from the event
    bucket = event['Records'][0]['s3']['bucket']['name']
    key = urllib.parse.unquote_plus(event['Records'][0]['s3']['object']['key'], encoding='utf-8')
    url_queue = HOST + ":4576/queue/lambda-tutorial"

    try:
        response = s3.get_object(Bucket=bucket, Key=key)

        deb = {
            "request_id": response['ResponseMetadata']['RequestId'],
            "queue_url": url_queue,
            "key": key,
            "bucket": bucket,
            "message": "aws lambda with localstack..."
        }

        print("#########################################################")
        print("Send Message")
        # Send message to SQS queue
        response = sqs.send_message(
            QueueUrl=deb["queue_url"],
            MessageBody=json.dumps(deb)
        )
        print("response: {}".format(response))

        print("#########################################################")
        print("Receive 10 Messages From SQS Queue")
        response = sqs.receive_message(
            QueueUrl=deb["queue_url"],
            MaxNumberOfMessages=10,
            VisibilityTimeout=0,
            WaitTimeSeconds=0
        )

        print("#########################################################")
        print("Read All Messages From Response")
        # "Messages" is missing from the response when the queue is empty
        messages = response.get('Messages', [])
        for message in messages:
            print("Message: {}".format(message))

        print("Final Output: {}".format(json.dumps(response)))
        return json.dumps(response)
    except Exception as e:
        print(e)
        raise
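The comment in the code notes that "endpoint_url" and "region_name" are not needed in production. One possible way to keep a single code base for both cases is to read the local host from an environment variable and pass nothing extra when it is unset. This is only a sketch under that assumption: `LOCAL_AWS_HOST` and `client_kwargs` are hypothetical names invented here, not settings that boto3, SAM, or Localstack define.

```python
import os


def client_kwargs(port, env=None):
    """Return extra keyword arguments for boto3.client().

    LOCAL_AWS_HOST is a hypothetical variable chosen for this sketch,
    for example "http://192.168.0.10". When it is unset, an empty dict is
    returned so that boto3 falls back to the real AWS endpoints.
    """
    env = os.environ if env is None else env
    host = env.get("LOCAL_AWS_HOST")
    if not host:
        return {}
    return {
        "endpoint_url": "{}:{}".format(host.rstrip("/"), port),
        "region_name": env.get("AWS_DEFAULT_REGION", "us-east-1"),
    }


# Usage (requires boto3):
#   s3 = boto3.client("s3", **client_kwargs(4572))
#   sqs = boto3.client("sqs", **client_kwargs(4576))
```

With this approach the handler body stays the same locally and in production; only the environment differs.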

If "boto3" is not installed, the IDE will show a warning. In that case, open a terminal and execute the following command:

$ pip3.8 install boto3

- Creating files that will support the project

At this point, some folders and files have to be created; the folders hold the test files. Let's create the folders "test/files" in the root of the project, and then create the files needed to run the project.

aws-lambda-localstack
|test/
|- files/
|- - test_file.log
|- - input-event-test.json
|requirements.txt

test_file.log: this file will be used as an example from the bucket. It could be just an empty file.

requirements.txt: this file is required by "SAM CLI".

input-event-test.json: this one is the input test that will be called when running the project.

{
  "Records": [
    {
      "eventVersion": "2.0",
      "eventSource": "aws:s3",
      "awsRegion": "us-east-1",
      "eventTime": "1970-01-01T00:00:00.000Z",
      "eventName": "ObjectCreated:Put",
      "userIdentity": {
        "principalId": "EXAMPLE"
      },
      "requestParameters": {
        "sourceIPAddress": "127.0.0.1"
      },
      "responseElements": {
        "x-amz-request-id": "EXAMPLE123456789",
        "x-amz-id-2": "EXAMPLE123/5678abcdefghijklambdaisawesome/mnopqrstuvwxyzABCDEFGH"
      },
      "s3": {
        "s3SchemaVersion": "1.0",
        "configurationId": "testConfigRule",
        "bucket": {
          "name": "tutorial",
          "ownerIdentity": {
            "principalId": "EXAMPLE"
          },
          "arn": "arn:aws:s3:::example-bucket"
        },
        "object": {
          "key": "lambda/test_file.log",
          "size": 1024,
          "eTag": "0123456789abcdef0123456789abcdef",
          "sequencer": "0A1B2C3D4E5F678901"
        }
      }
    }
  ]
}
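Only two fields of this event are actually read by the handler: the bucket name and the object key. As a rough sketch, that extraction can be isolated in a small function and checked without any AWS service running. The function name `s3_objects` and the trimmed-down `sample_event` are illustrative and not part of the original project.

```python
import urllib.parse


def s3_objects(event):
    """Return a list of (bucket, key) pairs from an S3 notification event."""
    pairs = []
    for record in event.get("Records", []):
        s3 = record["s3"]
        # Keys arrive URL-encoded (spaces as "+"), so decode them.
        key = urllib.parse.unquote_plus(s3["object"]["key"], encoding="utf-8")
        pairs.append((s3["bucket"]["name"], key))
    return pairs


# A reduced version of the event above, keeping only the fields that are read.
sample_event = {
    "Records": [
        {"s3": {"bucket": {"name": "tutorial"},
                "object": {"key": "lambda/test_file.log"}}}
    ]
}

print(s3_objects(sample_event))   # [('tutorial', 'lambda/test_file.log')]
```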

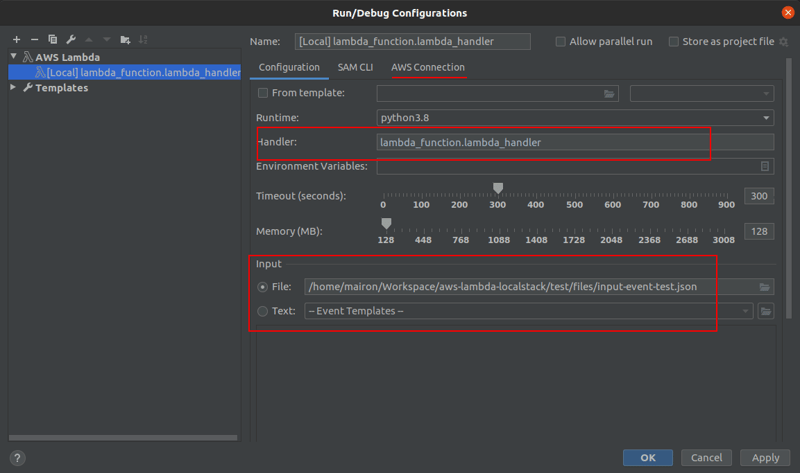

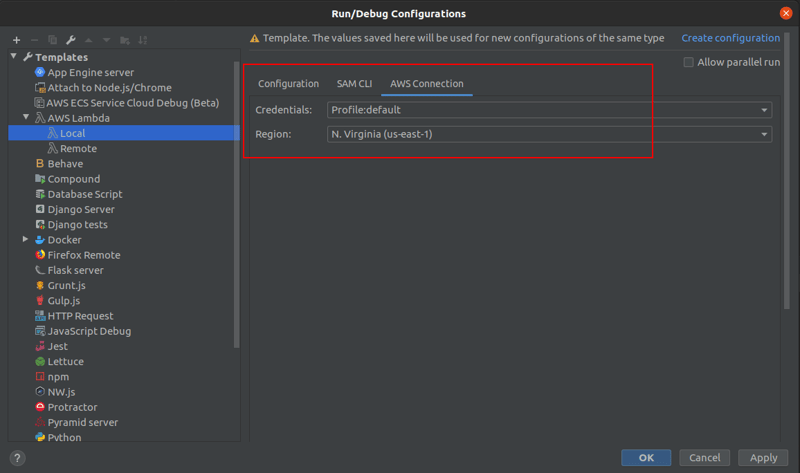

- Once the previous steps are done, the next step is to set up the "run configuration".

Now that the IDE configuration is done, let's configure the entries. To do so, some commands must be run to create the files on S3 and to create the SQS queue.

1 - Creating the bucket on S3: the bucket is named "tutorial".

$ aws --endpoint-url=http://localhost:4572 s3 mb s3://tutorial

2 - Creating a folder on S3: this creates a folder named "lambda".

$ aws --endpoint-url=http://localhost:4572 s3api put-object --bucket tutorial --key lambda

The response will be something like this:

{
  "ETag": "\"d41d8cd98f00b204e9800998ecf8427e\""
}

3 - Copying files to bucket: copying files from "./test/files" to "s3://tutorial/lambda/"

$ aws --endpoint-url=http://localhost:4572 s3 cp ./test/files/ s3://tutorial/lambda/ --recursive

To check that the bucket was created, take the value of "endpoint-url", append the bucket name, and either run "curl" or paste the address into the browser, for example:

$ curl -v http://localhost:4572/tutorial

The output will be something like:

<?xml version="1.0" encoding="UTF-8"?>
<ListBucketResult xmlns="http://s3.amazonaws.com/doc/2006-03-01/">
  <Name>tutorial</Name>
  <MaxKeys>1000</MaxKeys>
  <Delimiter>None</Delimiter>
  <IsTruncated>false</IsTruncated>
  <Contents>
    <Key>lambda</Key>
    <LastModified>2020-04-28T01:36:04.128Z</LastModified>
    <ETag>"d41d8cd98f00b204e9800998ecf8427e"</ETag>
    <Size>0</Size>
    <StorageClass>STANDARD</StorageClass>
    <Owner>
      <ID>75aa57f09aa0c8caeab4f8c24e99d10f8e7faeebf76c078efc7c6caea54ba06a</ID>
      <DisplayName>webfile</DisplayName>
    </Owner>
  </Contents>
  <Contents>
    <Key>lambda/input-event-test.json</Key>
    <LastModified>2020-04-28T01:40:27.882Z</LastModified>
    <ETag>"4e114da7aa17878f62bf4485a90a97a2"</ETag>
    <Size>1011</Size>
    <StorageClass>STANDARD</StorageClass>
    <Owner>
      <ID>75aa57f09aa0c8caeab4f8c24e99d10f8e7faeebf76c078efc7c6caea54ba06a</ID>
      <DisplayName>webfile</DisplayName>
    </Owner>
  </Contents>
  <Contents>
    <Key>lambda/test_file.log</Key>
    <LastModified>2020-04-28T01:40:27.883Z</LastModified>
    <ETag>"4ac646c9537443757aff7ebd0df4f448"</ETag>
    <Size>29</Size>
    <StorageClass>STANDARD</StorageClass>
    <Owner>
      <ID>75aa57f09aa0c8caeab4f8c24e99d10f8e7faeebf76c078efc7c6caea54ba06a</ID>
      <DisplayName>webfile</DisplayName>
    </Owner>
  </Contents>
</ListBucketResult>
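The bucket creation and the recursive copy from steps 1 and 3 can also be scripted with boto3, which is convenient when the Localstack container is recreated often. The sketch below takes the S3 client as a parameter so it can be exercised without a running service; `iter_uploads` and `prepare_bucket` are names invented for this example. It assumes the "us-east-1" region, where `create_bucket` needs no location configuration.

```python
import os


def iter_uploads(local_dir, prefix):
    """Yield (local_path, s3_key) pairs for every file under local_dir."""
    for root, _dirs, files in os.walk(local_dir):
        for name in sorted(files):
            path = os.path.join(root, name)
            relative = os.path.relpath(path, local_dir).replace(os.sep, "/")
            yield path, "{}/{}".format(prefix.strip("/"), relative)


def prepare_bucket(s3_client, bucket, local_dir, prefix):
    """Create the bucket and copy local_dir into it; return the uploaded keys."""
    s3_client.create_bucket(Bucket=bucket)
    keys = []
    for path, key in iter_uploads(local_dir, prefix):
        s3_client.upload_file(path, bucket, key)
        keys.append(key)
    return keys


# Usage, assuming boto3 is installed and Localstack is running:
#   import boto3
#   s3 = boto3.client("s3", endpoint_url="http://localhost:4572",
#                     region_name="us-east-1")
#   prepare_bucket(s3, "tutorial", "./test/files", "lambda")
```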

4 - Creating an SQS Queue

$ aws --endpoint-url=http://localhost:4576 sqs create-queue --queue-name lambda-tutorial

The output will be something like:

{
  "QueueUrl": "http://localhost:4576/queue/lambda-tutorial"
}
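The queue can be created from Python as well. In the sketch below, the SQS client is passed in as a parameter, and the helper names `ensure_queue` and `send_json` are assumptions made for illustration. On AWS, calling `create_queue` again with the same name and attributes returns the existing queue URL; whether Localstack mirrors that exactly is not verified here.

```python
import json


def ensure_queue(sqs_client, name):
    """Create the queue if needed and return its URL."""
    response = sqs_client.create_queue(QueueName=name)
    return response["QueueUrl"]


def send_json(sqs_client, queue_url, payload):
    """Serialize payload to JSON, send it, and return the message ID."""
    response = sqs_client.send_message(QueueUrl=queue_url,
                                       MessageBody=json.dumps(payload))
    return response["MessageId"]


# Usage, assuming boto3 is installed and Localstack is running:
#   import boto3
#   sqs = boto3.client("sqs", endpoint_url="http://localhost:4576",
#                      region_name="us-east-1")
#   url = ensure_queue(sqs, "lambda-tutorial")
#   send_json(sqs, url, {"message": "aws lambda with localstack..."})
```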

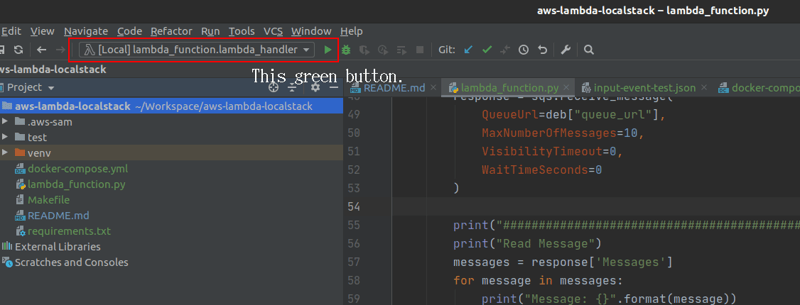

Once everything is done and all the output looks okay, it's time to run the project. Note that the code prints both the input and the output used in this post. To run it, click the "Run '[Local] lambda_function.lambda_handler'" button.

After this, the output of the process can be seen in the console. It shows the entries and, at the end, up to ten messages are retrieved from the queue and printed to the console.

GREAT!

It's Done!

This is just a starting point, and I hope this post helps you test your AWS services... I hope that all of you reach your goals.

Repository: aws-lambda-localstack.

Thank you for reading until the end!

References

Some references that I have used to complete this text.

- AWS SAM CLI;

- AWS CLI;

- Serverless;

- Localstack;

- Using Serverless Framework & Localstack to test your AWS applications locally;

Some Cloud Computing

- AWS;

- Azure;

- GoogleCloud;

- Openshift;

- Oracle;
