Object Detection and Tracking

Levin

Posted on July 7, 2022

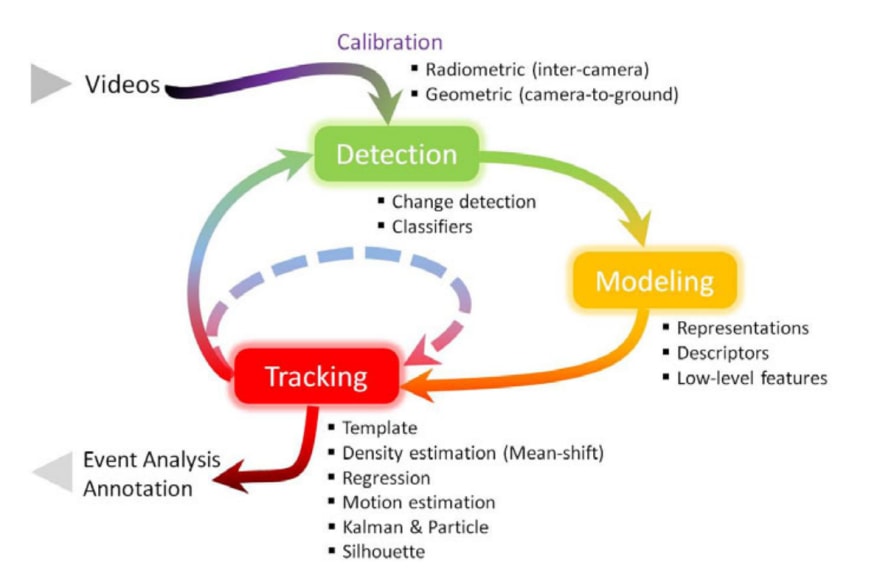

Detecting and tracking objects are among the most prevalent and challenging tasks that a surveillance system must perform in order to identify meaningful events and suspicious activities, and to automatically annotate and retrieve video content.

In this article, I give a brief description of each task and introduce common approaches to each.

Let’s delve into each task.

1. Object Detection

1.1 Change Detection

Change detection is the identification of changes in the state of a pixel through the examination of the appearance values between sets of video frames.

Following are the most common change detection techniques.

- Frame Differencing and Motion History Image

- Background Subtraction

- Motion Segmentation

- Matrix Decomposition
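
To make the first technique concrete, here is a minimal sketch of frame differencing. It assumes grayscale frames stored as plain nested lists; the function name `frame_difference` and the threshold value are my own illustrative choices. In practice you would likely use NumPy or OpenCV arrays instead.

```python
def frame_difference(prev_frame, curr_frame, threshold=25):
    """Return a binary change mask: 1 where |curr - prev| > threshold, else 0."""
    return [
        [1 if abs(c - p) > threshold else 0 for p, c in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev_frame, curr_frame)
    ]


# Two tiny 3x4 grayscale frames (values 0-255); a bright blob appears in the second.
prev_frame = [
    [10, 10, 10, 10],
    [10, 10, 10, 10],
    [10, 10, 10, 10],
]
curr_frame = [
    [10, 12, 10, 10],
    [10, 200, 210, 10],
    [10, 10, 10, 9],
]

mask = frame_difference(prev_frame, curr_frame)
for row in mask:
    print(row)
```

Small intensity fluctuations (such as 10 to 12) stay below the threshold and are treated as noise, while the two bright pixels are marked as changed.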

1.2 Classifiers

Object detection can be performed by learning a classification function that captures the variation in object appearances and views from a set of labeled training examples in supervised frameworks.

Following are the most common learning approaches.

- Boosting

- Support Vector Machines (SVM)

- Supervised Learning

2. Object Modeling

2.1 Model Representations

Object representations are usually chosen according to the application domain.

Following are the most used representation approaches.

- Point and Region

- Silhouette

- Connected Parts

- Graph and Skeletal

- Spatiotemporal

2.2 Model Descriptors

Descriptors are the mathematical embodiments of object regions. The size of the region, dynamic range, imaging noise and artifacts play a significant role in achieving discriminative descriptors.

Following are the most used descriptors.

- Template

- Histogram, HOG, SIFT

- Region Covariance

- Ensembles and Eigenspaces

- Appearance Models

2.3 Model Features

The choice of feature set can also greatly affect tracking performance. Generally, the features that best discriminate between multiple objects, and between the object and the background, are also the best for tracking the object.

Following are the most used features.

- Color

- Gradient

- Optical Flow

- Texture

- Corner Points

3. Object Tracking

3.1 Template Matching

Template matching is a brute-force method that searches the image for the region most similar to the object template defined in the previous frame.
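
The brute-force search can be sketched in a few lines. This is a simplified illustration, assuming grayscale images as nested lists and the sum of squared differences (SSD) as the similarity measure; the helper names `ssd` and `match_template` are hypothetical, and other measures such as normalized cross-correlation are equally common.

```python
def ssd(image, template, top, left):
    """Sum of squared differences between the template and one image window."""
    return sum(
        (image[top + r][left + c] - template[r][c]) ** 2
        for r in range(len(template))
        for c in range(len(template[0]))
    )


def match_template(image, template):
    """Slide the template over every valid position; return (row, col) of the best match."""
    th, tw = len(template), len(template[0])
    ih, iw = len(image), len(image[0])
    candidates = [
        (top, left)
        for top in range(ih - th + 1)
        for left in range(iw - tw + 1)
    ]
    return min(candidates, key=lambda pos: ssd(image, template, *pos))


image = [
    [0, 0, 0, 0, 0],
    [0, 0, 9, 8, 0],
    [0, 0, 7, 9, 0],
    [0, 0, 0, 0, 0],
]
template = [
    [9, 8],
    [7, 9],
]

best = match_template(image, template)
print(best)
```

Because every window is evaluated, the cost grows with both the image size and the template size, which is why trackers usually restrict the search to a neighborhood of the previous object location.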

3.2 Density Estimation: Mean-Shift

Mean-shift is a nonparametric density gradient estimator. In tracking, it is used to find the image window in the current frame that is most similar to the object’s color histogram.
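
Below is a rough sketch of the mean-shift iteration itself. It assumes the per-pixel weights have already been computed (for example, by comparing each pixel's color against the object's histogram), and it uses a flat square window for simplicity. The function name `mean_shift` and its parameters are my own choices for illustration.

```python
def mean_shift(weights, center, half_size, max_iter=20):
    """Move a square window to the weighted centroid of `weights` until it stops moving.

    weights:   2D list; weights[r][c] says how well pixel (r, c) matches the object model
    center:    (row, col) of the starting window center
    half_size: the window spans center +/- half_size in both directions
    """
    rows, cols = len(weights), len(weights[0])
    r0, c0 = center
    for _ in range(max_iter):
        total = sum_r = sum_c = 0.0
        for r in range(max(0, r0 - half_size), min(rows, r0 + half_size + 1)):
            for c in range(max(0, c0 - half_size), min(cols, c0 + half_size + 1)):
                w = weights[r][c]
                total += w
                sum_r += w * r
                sum_c += w * c
        if total == 0:
            break  # no evidence inside the window, so stay put
        new_r, new_c = round(sum_r / total), round(sum_c / total)
        if (new_r, new_c) == (r0, c0):
            break  # converged: the window is centered on the local mode
        r0, c0 = new_r, new_c
    return r0, c0


# A 7x7 weight map with a 3x3 blob peaking at (4, 4).
weights = [[0] * 7 for _ in range(7)]
for r in range(3, 6):
    for c in range(3, 6):
        weights[r][c] = 1
weights[4][4] = 4

print(mean_shift(weights, center=(2, 2), half_size=2))
```

Starting from (2, 2), the window only partially overlaps the blob, yet each step pulls it toward the region of higher weight until it settles on the peak.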

3.3 Regression

Regression refers to modeling the relationship between multiple variables. In tracking, this typically means relating observed image features to the object's motion or location.

3.4 Motion Estimation

Motion estimation is the process of determining motion vectors that describe the transformation between adjacent frames in the video sequence.
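
One simple way to obtain such motion vectors is block matching, sketched below. This is only one of several motion estimation approaches; it assumes grayscale frames as nested lists and uses the sum of absolute differences (SAD) as the cost. The names `sad` and `block_motion_vector` are hypothetical.

```python
def sad(prev, curr, top, left, dy, dx, size):
    """Sum of absolute differences between a block in `prev` and its shifted copy in `curr`."""
    return sum(
        abs(prev[top + r][left + c] - curr[top + dy + r][left + dx + c])
        for r in range(size)
        for c in range(size)
    )


def block_motion_vector(prev, curr, top, left, size, search=1):
    """Return the (dy, dx) within +/- search that best maps the block from prev to curr."""
    rows, cols = len(curr), len(curr[0])
    best, best_cost = (0, 0), float("inf")
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            # Skip displacements that would push the block outside the frame.
            if not (0 <= top + dy and top + dy + size <= rows):
                continue
            if not (0 <= left + dx and left + dx + size <= cols):
                continue
            cost = sad(prev, curr, top, left, dy, dx, size)
            if cost < best_cost:
                best, best_cost = (dy, dx), cost
    return best


prev = [
    [0, 0, 0, 0],
    [0, 5, 6, 0],
    [0, 7, 8, 0],
    [0, 0, 0, 0],
]
curr = [
    [0, 0, 0, 0],
    [0, 0, 5, 6],
    [0, 0, 7, 8],
    [0, 0, 0, 0],
]

# The 2x2 block at (1, 1) in `prev` moved one pixel to the right in `curr`.
print(block_motion_vector(prev, curr, top=1, left=1, size=2))
```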

3.5 Kalman Filtering

Kalman filtering is used to optimally estimate the variables of interest when they can’t be measured directly, but an indirect or noisy measurement is available.
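
To show the predict/update cycle, here is a deliberately minimal scalar Kalman filter. It assumes a random-walk state model and a direct but noisy measurement of the state; the function name `kalman_1d` and the variance values are illustrative assumptions. A real tracker would normally use a multi-dimensional state such as position and velocity.

```python
def kalman_1d(measurements, process_var=1e-3, meas_var=1.0, x0=0.0, p0=1.0):
    """Scalar Kalman filter: returns the state estimate after each measurement.

    x is the state estimate and p is its variance (our uncertainty about x).
    """
    x, p = x0, p0
    estimates = []
    for z in measurements:
        # Predict: under a random-walk model the state stays put, uncertainty grows.
        p = p + process_var
        # Update: blend prediction and measurement according to their uncertainties.
        k = p / (p + meas_var)  # Kalman gain, between 0 and 1
        x = x + k * (z - x)
        p = (1 - k) * p
        estimates.append(x)
    return estimates


# Ten identical readings of 5.0; the filter starts at 0.0 and moves toward them.
estimates = kalman_1d([5.0] * 10)
print([round(e, 3) for e in estimates])
```

The gain `k` shrinks as the filter becomes more confident, so later measurements move the estimate less than early ones.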

3.6 Particle Filtering

One limitation of the Kalman filter is the assumption that the state variables are Gaussian. Particle filtering overcomes this limitation by representing the state distribution with a set of weighted samples (particles).
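
The following is a small sketch of a bootstrap particle filter for a one-dimensional state. It assumes a random-walk motion model, and for simplicity it uses a Gaussian measurement likelihood, although any likelihood function could be substituted at the weighting step. The function name `particle_filter_1d` and all parameter values are hypothetical.

```python
import math
import random


def particle_filter_1d(measurements, n_particles=500, motion_std=1.0,
                       meas_std=1.0, seed=0):
    """Bootstrap particle filter: returns the weighted-mean estimate after each measurement."""
    rng = random.Random(seed)
    # Start with particles spread uniformly over an assumed range of plausible states.
    particles = [rng.uniform(-10.0, 10.0) for _ in range(n_particles)]
    estimates = []
    for z in measurements:
        # Predict: diffuse each particle with random-walk motion noise.
        particles = [p + rng.gauss(0.0, motion_std) for p in particles]
        # Weight: likelihood of the measurement given each particle.
        weights = [math.exp(-0.5 * ((z - p) / meas_std) ** 2) for p in particles]
        total = sum(weights)
        if total == 0.0:
            # Every particle is implausible; fall back to uniform weights.
            weights = [1.0] * n_particles
            total = float(n_particles)
        # Estimate: weighted mean of the particles.
        estimates.append(sum(w * p for w, p in zip(weights, particles)) / total)
        # Resample: draw a new particle set in proportion to the weights.
        particles = rng.choices(particles, weights=weights, k=n_particles)
    return estimates


estimates = particle_filter_1d([3.0] * 10)
print(round(estimates[-1], 2))
```

Because the distribution is carried by the samples themselves, nothing in the loop requires it to be Gaussian or even unimodal.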

3.7 Silhouette Tracking

The goal of a silhouette-based object tracker is to find the object region in each frame by means of an object model generated from the previous frames.

Thanks for reading my article.

To encourage me to continue this work, please follow me on Dev.to and GitHub.
