Lankinen

Posted on June 16, 2020

My Twitter feed has been full of tweets about Snap Partner Summit, where Snapchat released some interesting AR features. I wanted to figure out what actually happened at the event, because it felt like there were a lot of small announcements.

New Snapchat update: Every major feature announced at Summit

First of all, the app's UI is changing: there will be a navigation bar at the bottom of the screen, similar to Instagram's. The front page will feature Snap Originals, which are similar to YouTube Originals. There were a few other small changes like these, but I'm not interested in those. I'm interested in the AR part.

SnapML

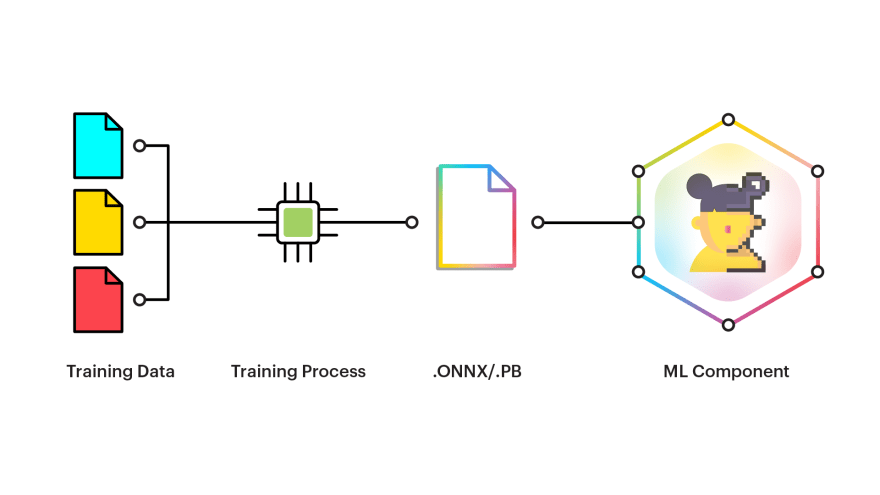

SnapML is a new feature in Lens Studio (Snapchat's lens development tool) that allows developers to use their own machine learning models. At first I didn't understand what this meant. I wasn't sure whether it literally means what it says, or whether it's more like training existing models on your own data (which makes them kind of your own, even though the architecture stays the same).

It seems they really do support custom models created with PyTorch, TensorFlow, and other frameworks that can export to ONNX.

(Source: https://lensstudio.snapchat.com/guides/machine-learning/ml-overview/)

There seem to be two main use cases for ML in Snapchat lenses: computer vision tasks such as object recognition, and style transfer.

Local Lenses

If I understand correctly, Snapchat already supported lenses for a limited set of landmarks such as the Flatiron Building. Now they are making it possible to add AR to any place. This works by building a point cloud of the real world, which basically means that Snapchat creates a digital 3D version of it.

Scan

This might not be very interesting for some people, but for me it was the most interesting announcement. The idea is that Snapchat can recognize plants, trees, dogs, and other things and then give you information about them. I'm so interested in this because if Snapchat ever creates AR glasses, this will probably be a heavily used feature. It would be so cool to get information about everything. I could play the smart person by telling others what breed a dog is or when a certain building was built.

Lens Voice Scan

You can use your voice to choose filters, e.g. "make my hair pink". This is a pretty useful feature because there are so many filters that it's hard to find the right one. Maybe in the future we'll be able to say basically anything and Snapchat will do it.

Snap Minis

Minis are kind of like apps, but inside Snapchat. They are built with HTML5, which means they work on both iOS and Android. The presenters even argued that Minis are easier to develop, and that the platform is newer and less crowded, which makes it better for app developers. One interesting point was that the network effect is easier to achieve on Snapchat because people share Minis with each other very easily. One interviewee made a really good comment: users shouldn't be able to tell what's Adam (the product they were building) and what's Snapchat.

Camera Kit

Snapchat is offering app developers the ability to use its AR system in their own apps. This is another really interesting move that makes Snapchat even stronger in the AR space.

Squad is an app where users can video call and watch things together. It used Camera Kit so that a user can send a Snap to another Snapchat user saying they're in the app and ready to video call, and the other user can then join them via Snapchat. Squad also added a feature for sharing Stories to Squad, so people can watch them together there. With Camera Kit, they were also able to add lenses they had created in Lens Studio.
