Every second matters - the hidden costs of unoptimized developer workflows

Kaspar Von Grünberg

Posted on July 20, 2020

We tend to underestimate how inefficient workflows impact developer productivity and distract from the task at hand. In this article we explain the first- and second-order effects of inefficient developer workflows. We share real-life examples and analyse at what point you should invest in automation vs. doing things manually.

"We've already automated our setup so much, there is nothing left to do." We hear this sentence frequently when talking to DevOps practitioners and engineering managers. In our experience, no team is ever automated enough. This article shares best practices and shows how they relate to the first- and second-order costs of non-automated tasks.

Seconds pile up

Unlocking a smartphone using a 5-digit PIN takes 2.21 seconds, including failed attempts. It gets more interesting if you multiply this by the number of times the average adult (US data) unlocks their phone every day (79 times). Multiply this by 365 and you end up with 60,553 seconds, which equals 1,009 minutes or nearly 17 hours a year spent unlocking your freaking phone. That's an entire day (awake) that you spend unlocking your phone!

You buy the new iPhone with FaceID. All of a sudden you have almost one day more for leisure - every year.

Now apply the mobile phone scenario to a 10-person engineering team with an average cost per headcount per hour of $70. You end up with annual costs of nearly $14,000. Beware of seconds, they matter.
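The arithmetic behind this scenario can be sketched in a few lines. This is a minimal illustration, assuming every team member loses the same time as in the phone example; the function names are made up for this sketch. With the inputs stated above it lands in the low five figures per year, the same order of magnitude as the figure quoted in the text.

```python
SECONDS_PER_HOUR = 3600


def annual_hours_lost(seconds_per_event: float, events_per_day: int,
                      days_per_year: int = 365) -> float:
    """Hours one person spends per year on a small, repeated task."""
    return seconds_per_event * events_per_day * days_per_year / SECONDS_PER_HOUR


def annual_team_cost(hours_per_person: float, team_size: int,
                     hourly_rate: float) -> float:
    """Yearly cost if every team member loses the same number of hours."""
    return hours_per_person * team_size * hourly_rate


# Inputs taken from the text: 2.21 s per unlock, 79 unlocks a day,
# 10 engineers at $70 per hour.
hours = annual_hours_lost(seconds_per_event=2.21, events_per_day=79)
cost = annual_team_cost(hours, team_size=10, hourly_rate=70)
print(f"{hours:.1f} hours per person, ${cost:,.0f} per team per year")
```

Changing a single input, for example the number of events per day, shows how quickly a task of a few seconds grows into a noticeable line item.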

The first-order effect is known, but the follow-on effects are ignored

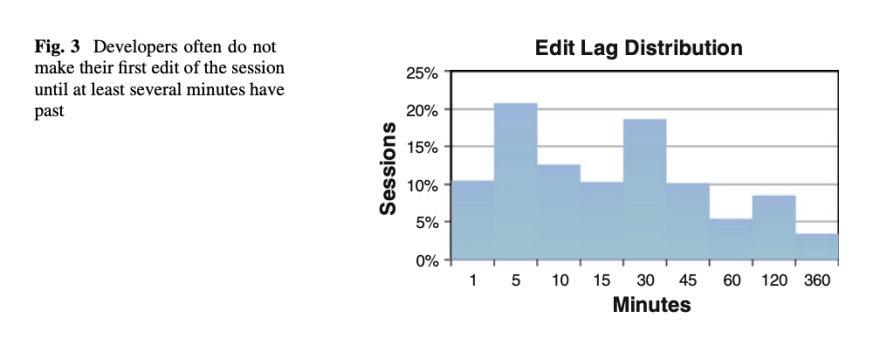

In the above scenario we looked at the direct time lost to inefficiency. An often overlooked component is distraction. Whatever takes you out of deep focus is fatal to your productivity. Even small distractions mean it takes a long time to resume the interrupted task, according to a study from the Georgia Institute of Technology. The top 10% of people managed to get back into focus after 1 minute, but the average person needs 15 minutes.

Source: Parnin, C. & Rugaber, S. (2010)

If you apply this to our calculation above, you end up with a 6-digit cost for an engineering team of 10 using PINs rather than FaceID. It's worth looking for this kind of wasted time in your developer workflow.
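A rough sketch of how a 6-digit figure can come about. It assumes that every one of the 79 daily unlocks counts as an interruption and that each one costs only the 1-minute recovery time of the fastest 10% (applying the 15-minute average to all 79 events would exceed a working day). The function name and that mapping are assumptions of this sketch, not something the study prescribes.

```python
def annual_refocus_cost(interruptions_per_day: int, refocus_minutes: float,
                        team_size: int, hourly_rate: float,
                        days_per_year: int = 365) -> float:
    """Yearly cost of the time a team needs to get back into focus."""
    hours_per_person = interruptions_per_day * refocus_minutes * days_per_year / 60
    return hours_per_person * team_size * hourly_rate


# Best case from the study: 1 minute to refocus after each interruption.
best_case = annual_refocus_cost(interruptions_per_day=79, refocus_minutes=1,
                                team_size=10, hourly_rate=70)
print(f"${best_case:,.0f} per year")
```

Even under this optimistic assumption, the refocus time costs far more than the seconds spent on the task itself.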

Reasons for losing time in the engineering workflow

Insufficient test-automation

You open a pull request. There is no automated integration test, and the service goes into production after a manual review fails to identify an edge case. It fails in one specific scenario that surfaces in a user complaint a week later. By that time you have already moved on to another feature, which you now need to interrupt to get back into the task from a week ago, and on goes the wheel.

The first-order effect in this case is the time spent fixing the feature a second time. The second-order effect is the time spent getting back into the code. Research by IBM demonstrates how the cost of fixing a bug increases the later in the lifecycle it is found. The more defects your QA automation catches early, the lower these follow-up costs.
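To make this concrete, here is a minimal sketch of an automated test that pins down an edge case before the code ships. The `split_bill` function and its rounding rule are hypothetical and only stand in for whatever logic your pull request touches.

```python
import unittest


def split_bill(total_cents: int, people: int) -> list[int]:
    """Split a bill so that the shares always add up to the total."""
    if people <= 0:
        raise ValueError("people must be positive")
    base, remainder = divmod(total_cents, people)
    # The first `remainder` people pay one extra cent.
    return [base + 1 if i < remainder else base for i in range(people)]


class SplitBillTest(unittest.TestCase):
    def test_even_split(self):
        self.assertEqual(split_bill(900, 3), [300, 300, 300])

    def test_uneven_split_keeps_total(self):
        # Edge case that is easy to miss in a manual review.
        shares = split_bill(1000, 3)
        self.assertEqual(sum(shares), 1000)
        self.assertEqual(shares, [334, 333, 333])

    def test_zero_people_is_rejected(self):
        with self.assertRaises(ValueError):
            split_bill(1000, 0)
```

Saved as a test module and run with `python -m unittest` in the CI pipeline, a check like this fails the build within minutes instead of surfacing as a user complaint a week later.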

Vulnerability scans before commit vs. after

Nigel Simpson is the Director of Enterprise Technology at a Fortune 100 company. He's laser-focused on reaching the best possible developer experience for his teams. His article The Self-Aware Software Lifecycle is definitely worth a read, as are all of his other articles. Before his intervention, teams would ship a feature, then colleagues in security would analyse the shipped packages for open source vulnerabilities. They'd reject the package due to its risk profile, meaning the developers had to fix the package and ship it once more. Nigel introduced Snyk, which allows developers to get these screenings in real time while committing. Nigel explained the first- and second-order effects to me in his own words:

"Developers didn't have a sense of ownership over the security of the applications they were developing. Since a security organization had established security analysis as a service, and were subject matter experts in vulnerability remediation, developers didn't focus on security until late in the development process. As a result, the findings of the security review generated re-engineering disruption shortly before applications were due to be released. By introducing a developer-oriented security analysis tool, Snyk, developers gained actionable intelligence about vulnerabilities, enabling them to take action sooner with less disruption to the development schedule."

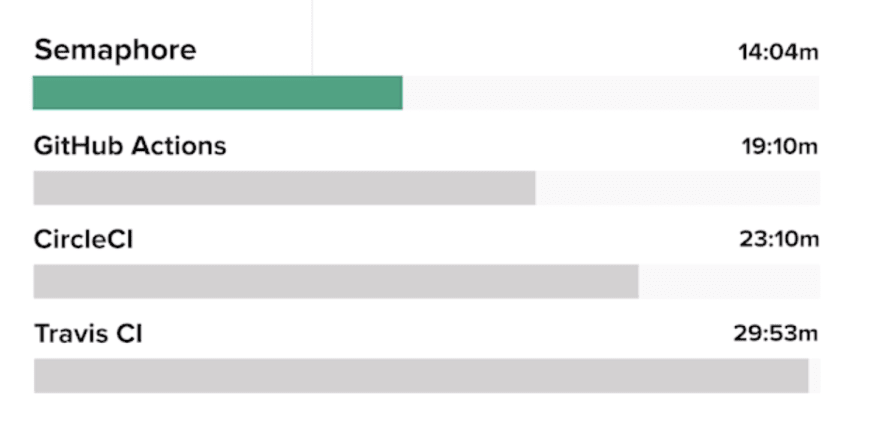

Time spent waiting for container builds

Travis CI takes twice as long as Semaphore to build an average web app (tested with a containerized 7-service PHP ecommerce app). We have 10 developers at Humanitec and run around 110 builds a month, which take 743 minutes in total on average. We are using GitHub Actions. If we switched to a provider optimized for lower container build times, such as Semaphore, we would save 196 minutes every month. These are the first-order effects. You could of course work on another task in the meantime, but once you factor in the cost of distraction you end up somewhere in this range.

Source: Parnin, C. & Rugaber, S. (2010)
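The build numbers above can be put into perspective with a short calculation. This sketch assumes, as an upper bound, that every build triggers one context switch costing the 15-minute average recovery time; the function name and that assumption are illustrative only.

```python
def build_wait_summary(builds_per_month: int, build_minutes_per_month: float,
                       minutes_saved_per_month: float,
                       refocus_minutes: float = 15) -> dict:
    """Summarize first-order build time and an upper bound for refocus time."""
    return {
        "avg_build_minutes": build_minutes_per_month / builds_per_month,
        "share_saved": minutes_saved_per_month / build_minutes_per_month,
        # Assumption: one context switch per build.
        "refocus_minutes_upper_bound": builds_per_month * refocus_minutes,
    }


summary = build_wait_summary(builds_per_month=110,
                             build_minutes_per_month=743,
                             minutes_saved_per_month=196)
for key, value in summary.items():
    print(f"{key}: {value:.2f}")
```

Under this assumption, the time spent getting back into focus is a multiple of the build time itself, which is why shorter builds matter more than the raw minutes suggest.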

Static vs. Dynamic environment setups

When developing a web application, the defined development environment consists of:

- Application configuration (e.g. environment variables, access keys to 3rd party APIs).

- Infrastructure configuration (e.g. a K8s cluster, DNS configuration, SSL certificate, databases).

- Containerized code as build artefacts from your CI pipeline

When someone is asked to spin up a new environment, the first one is usually set up manually or semi-manually. The second time, when you set up your staging environment, you start over again. Someone needs a feature branch? Same config, same work. This is what we call a static environment setup. It comes with several negative first- and second-order effects.

Conversely, dynamic environments allow you to create any environments with any configuration for as long as needed. Afterwards, they can be torn down to minimize any running costs.

This is only possible if:

- The environment setup is scripted

- All dependencies are extracted into configuration variables

- Resources like K8s clusters and databases can be provisioned on demand and tailored to your application.

As a first-order effect, it suddenly becomes possible to create and use additional environments on demand. This enables feature development and feature testing without blocking the static environment for others.
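The idea of a scripted setup with all dependencies extracted into configuration can be sketched as follows. Everything here is hypothetical: the config keys, the example.com hostnames and the in-memory `EnvironmentRegistry`, which merely stands in for whatever tooling actually provisions clusters and databases.

```python
from dataclasses import dataclass


@dataclass
class EnvironmentSpec:
    name: str
    image_tag: str        # build artefact from the CI pipeline
    app_config: dict      # environment variables, API endpoints, ...
    infra_config: dict    # namespace, DNS name, ...


# Shared defaults; only environment-specific values are derived per branch.
BASE_APP_CONFIG = {"LOG_LEVEL": "info",
                   "PAYMENT_API_URL": "https://payments.example.com"}


def spec_for_branch(branch: str, image_tag: str) -> EnvironmentSpec:
    """Derive a complete environment description from a branch name."""
    slug = branch.lower().replace("/", "-").replace("_", "-")
    return EnvironmentSpec(
        name=f"env-{slug}",
        image_tag=image_tag,
        app_config={**BASE_APP_CONFIG,
                    "DATABASE_NAME": "app_" + slug.replace("-", "_")},
        infra_config={"namespace": f"env-{slug}",
                      "hostname": f"{slug}.dev.example.com"},
    )


class EnvironmentRegistry:
    """In-memory stand-in for the system that provisions real resources."""

    def __init__(self) -> None:
        self._active: dict[str, EnvironmentSpec] = {}

    def create(self, spec: EnvironmentSpec) -> EnvironmentSpec:
        self._active[spec.name] = spec
        return spec

    def tear_down(self, name: str) -> None:
        self._active.pop(name, None)

    def active(self) -> list[str]:
        return sorted(self._active)


registry = EnvironmentRegistry()
spec = registry.create(spec_for_branch("checkout/guest-orders", image_tag="a1b2c3"))
print(registry.active())       # the environment exists while it is needed
registry.tear_down(spec.name)  # and is removed afterwards to save running costs
```

Because the whole environment is derived from a branch name and an image tag, a second or third environment costs one function call rather than another round of manual setup.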

Second-order effects:

- Less time seeking specific DevOps and infrastructure knowledge

- No waiting for someone else to build a new environment for you

- No need to ask colleagues for an environment, which lets you test sub-feature branches and reduce your error rate.

- No need to pay for a permanent second static development environment to be able to develop and test two features at the same time.

Scripting Hell

A lot of the teams we see script everything and tell us "it's stable, and if something comes up we go in and change it." If you go down the microservice alley, the .yml files will start piling up. Add Kubernetes on top and the pile keeps growing. Say you are running on top of GCP and they roll out an enforced update to their cluster configs. So you go into every file and change the settings and dependencies. Picture a really small, simple app with only 5 microservices. That is already 10 places you have to touch.

Your first order effects are easy to calculate:

- Understand the problem (30 minutes),

- Fix all the files (30 minutes),

- Get back into the other task (15 minutes).

The second order effects are even more significant:

- Key-person dependency: Our calculation above assumes you know exactly which files you have to update. If you don't, you end up in a badly documented setup trying to find your way through scripts.

- Increased security incidents: Our favourite example is database back-up scripts that you forgot to update. (The second-order effects of a hacked MongoDB are something I probably don't have to calculate for you.)

- It's very hard to achieve true Continuous Delivery with scripting. Even ecommerce giant Zalando has a platform team of 110 people full-time scripting their workflows.
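One way to reduce the number of places you have to touch is to keep shared settings in a single place and generate the per-service files from them. The sketch below uses Python's standard `string.Template`; the service names, setting keys and version values are made up for illustration and are not real Kubernetes or GCP fields.

```python
from string import Template

# Single source of truth for settings shared by all services (hypothetical values).
SHARED = {"cluster_version": "1.27", "region": "europe-west1"}
SERVICES = ["cart", "catalog", "checkout", "payments", "search"]

MANIFEST = Template(
    "service: $service\n"
    "region: $region\n"
    'cluster_version: "$cluster_version"\n'
)


def render_manifests(shared: dict, services: list[str]) -> dict[str, str]:
    """Render one config file per service from the shared settings."""
    return {f"{name}.yml": MANIFEST.substitute(service=name, **shared)
            for name in services}


# An enforced platform update now means changing one value, not every file.
updated = render_manifests({**SHARED, "cluster_version": "1.28"}, SERVICES)
print(updated["cart.yml"])
```

This does not remove the scripting, but it removes the need to know every file that carries a copy of the setting, which is where the key-person dependency comes from.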

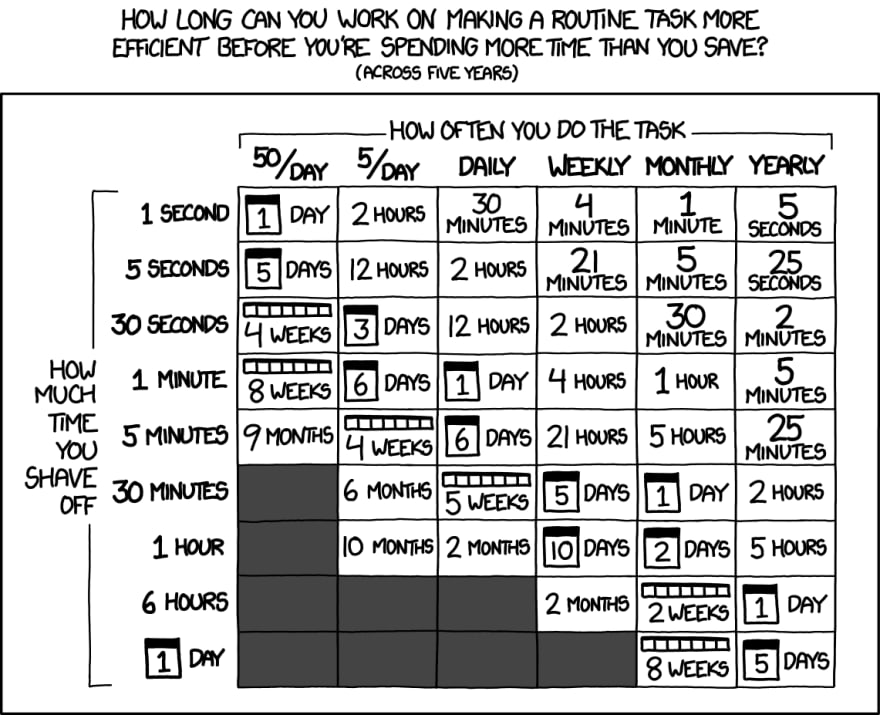

When to start investing in further automation?

The graphic explores whether automation is worth the effort, based on two questions:

- How often do you repeat the task in a given time-frame?

- How long does every run-through take?

The result is the most time you should spend automating the task. Keep the second-order effects in mind and it really pays off: you'll be working not only faster but also more effectively.

Source: xkcd.com; (CC BY-NC 2.5)
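The break-even logic behind the graphic fits into one function. This is a minimal sketch: the five-year default horizon is an assumption you should replace with your own planning time frame, and the example reuses the 196 minutes per month from the container build section.

```python
def max_automation_minutes(minutes_saved_per_run: float, runs_per_year: float,
                           horizon_years: float = 5.0) -> float:
    """Upper bound on time worth investing before automation costs more than it saves."""
    return minutes_saved_per_run * runs_per_year * horizon_years


# Example: saving 196 build minutes per month (12 "runs" a year).
budget = max_automation_minutes(minutes_saved_per_run=196, runs_per_year=12)
print(f"{budget:.0f} minutes, about {budget / 60:.0f} hours")
```

This only counts first-order effects. Adding the refocus time saved per run to `minutes_saved_per_run` raises the budget accordingly.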

Conclusion

Even for my personal day-to-day work, I set aside an hour a month to look at how I can optimize. I sort the applications on my devices for fast access depending on usage, and I look into my project management setup or simply the way I organize my inbox.

I very much encourage every team to regularly (maybe once a quarter) spend an afternoon together reflecting on its workflow. We do groomings for everything, right? Take the time to think about how you want to work together as a team. It will pay off faster than you think.

If you liked the article or if you want to discuss developer workflows in more detail, feel free to register for one of our free webinars.
