Scaling GitHub Actions Runners on AWS: A Cost-Effective and Scalable Approach

Omolayo Victor

Posted on November 14, 2023

In the realm of software development, continuous integration (CI) and continuous delivery (CD) have become indispensable practices for ensuring the quality and timely release of software applications. GitHub Actions, a cloud-based CI/CD platform, has emerged as a popular choice among developers for its ease of use and flexibility. However, as the number of repositories and workflows under management grows, the need for scalable and cost-effective runner infrastructure becomes increasingly important.

To address this challenge, we have developed a self-hosted on-demand runner infrastructure on AWS that utilizes a combination of GitHub, Amazon Web Services (AWS), and other tools. This infrastructure enables us to scale our runner capacity up or down based on demand, ensuring that we have enough runners to handle the workload without incurring unnecessary costs.

Key Design Considerations

In designing the self-hosted on-demand runner infrastructure, we focused on several key considerations:

Cost-effectiveness: The infrastructure should minimize cloud resource consumption and avoid unnecessary costs when not in use.

Scalability: The infrastructure should be able to handle fluctuating workloads by scaling up or down the number of runners dynamically.

Reliability: The infrastructure should be highly available and ensure consistent execution of workflows.

Ease of management: The infrastructure should be easy to deploy, manage, and maintain.

Key Components of the Infrastructure

The key components of our self-hosted on-demand runner infrastructure include:

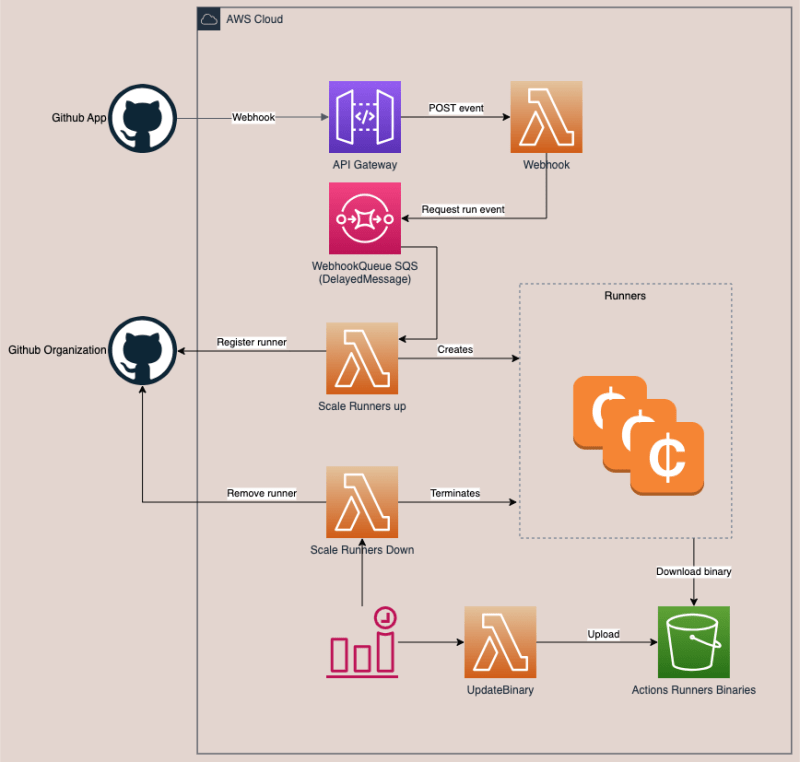

GitHub App: The GitHub App acts as a bridge between GitHub and AWS. It subscribes to workflow events in GitHub repositories and sends them as webhook events to AWS, where they trigger the creation or removal of EC2 instances.

API Gateway: API Gateway serves as an HTTP endpoint for the webhook events sent by the GitHub App, providing a secure and reliable channel for communication.

Lambda Functions: Lambda functions are the workhorses of the infrastructure, handling the incoming webhook events, verifying their authenticity, and triggering the scaling up or scaling down of EC2 instances.

SQS (Simple Queue Service): SQS acts as a message queue, decoupling the receipt of webhook events from their processing. This ensures that events are not lost if there are temporary delays in processing.

S3 (Simple Storage Service): S3 stores the runner binaries that are downloaded from GitHub. This allows EC2 instances to fetch the runner binaries from a bucket inside AWS instead of downloading them from the internet on every launch, which speeds up instance startup.

EC2 (Elastic Compute Cloud): EC2 instances provide the computational resources for running GitHub Actions workflows. The number of EC2 instances is dynamically scaled up or down based on the demand for runners.

SSM Parameters: SSM Parameters store configuration information for the runners, registration tokens, and secrets for the Lambdas. This centralized approach simplifies management and access control.

Amazon EventBridge: Amazon EventBridge schedules Lambda functions to execute at regular intervals, ensuring that idle runners are detected and terminated when no longer needed.

CloudWatch: CloudWatch provides real-time monitoring of the resources and applications in the AWS environment, enabling us to collect and track metrics for debugging and performance optimization.

Workflow and Scalability

The self-hosted on-demand runner infrastructure scales runners in response to workflow demand. When a workflow is triggered, for example by a pull request, the GitHub App sends a webhook event to the API Gateway, which in turn invokes the webhook Lambda function. The Lambda function verifies the event's authenticity, processes it, and posts it to an SQS queue.
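The authenticity check in the webhook Lambda can be sketched as follows. This is a minimal illustration, not the actual Lambda code: it assumes the GitHub App is configured with a webhook secret and that the function receives the raw request body together with the `X-Hub-Signature-256` header, which GitHub fills with `sha256=` followed by the HMAC-SHA256 hex digest of the body. The function name `is_valid_signature` is hypothetical.

```python
import hashlib
import hmac
from typing import Optional


def is_valid_signature(secret: str, body: bytes, signature_header: Optional[str]) -> bool:
    """Return True if signature_header matches the HMAC-SHA256 of the raw body.

    Hypothetical helper: `secret` is the webhook secret configured on the
    GitHub App, `signature_header` is the X-Hub-Signature-256 header value.
    """
    if not signature_header or not signature_header.startswith("sha256="):
        return False
    digest = hmac.new(secret.encode("utf-8"), body, hashlib.sha256).hexdigest()
    expected = "sha256=" + digest
    # compare_digest runs in constant time, so the comparison does not leak
    # how many leading characters matched.
    return hmac.compare_digest(expected.encode("utf-8"), signature_header.encode("utf-8"))
```

The signature must be computed over the exact bytes that were received; re-serializing the parsed JSON can change whitespace or key order and make a valid event fail the check.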

The scale-up Lambda function consumes events from the SQS queue and evaluates various conditions to determine whether a new EC2 spot instance needs to be created. If a new instance is required, the Lambda function requests a JIT configuration or registration token from GitHub and creates an EC2 spot instance using the launch template and user data script. The instance then fetches the runner binary from the S3 bucket and installs it. Once it is fully configured, the runner registers with GitHub and starts executing workflows.
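The scale-up conditions are not spelled out here, so the following is only a sketch of what such a decision could look like. It assumes the queued message carries a GitHub `workflow_job` event, and it checks three illustrative conditions: the job is newly queued, it asks for the labels our runners serve, and the pool is below a configured maximum. `ScaleUpConfig`, `should_scale_up`, and the default values are hypothetical names chosen for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScaleUpConfig:
    """Hypothetical settings; names and defaults are illustrative only."""
    max_runners: int = 10
    required_labels: frozenset = frozenset({"self-hosted"})


def should_scale_up(event: dict, active_runners: int, config: ScaleUpConfig) -> bool:
    """Decide whether one more runner instance should be launched."""
    # Only newly queued jobs need a runner; in_progress and completed
    # events are ignored.
    if event.get("action") != "queued":
        return False
    # The job must request every label this runner pool serves.
    labels = set(event.get("workflow_job", {}).get("labels", []))
    if not config.required_labels.issubset(labels):
        return False
    # Respect the upper bound on concurrently running instances.
    return active_runners < config.max_runners
```

Keeping the decision in a pure function like this makes it easy to unit test without touching AWS; the call that actually launches the instance would live in the Lambda handler around it.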

In contrast, the scale-down Lambda function is triggered by Amazon EventBridge at regular intervals to check for idle runners. If a runner is not busy, the Lambda function removes it from GitHub and terminates the corresponding EC2 instance, ensuring efficient resource utilization and cost savings.
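A scheduled scale-down pass could select its candidates roughly like this. The sketch assumes the Lambda has already combined GitHub's view of each runner (busy or not) with the EC2 launch time of its instance. The `Runner` type, the `runners_to_terminate` function, and the five-minute grace period are assumptions added for illustration; the grace period is there so that an instance that has just booted is not removed before it picks up its first job.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Iterable, List


@dataclass(frozen=True)
class Runner:
    """Hypothetical merged view of a GitHub runner and its EC2 instance."""
    instance_id: str
    busy: bool
    launched_at: datetime


def runners_to_terminate(
    runners: Iterable[Runner],
    now: datetime,
    min_age: timedelta = timedelta(minutes=5),  # assumed grace period
) -> List[str]:
    """Return instance IDs of runners that are idle and past the grace period."""
    return [
        r.instance_id
        for r in runners
        if not r.busy and now - r.launched_at >= min_age
    ]


if __name__ == "__main__":
    now = datetime(2023, 11, 14, 12, 0, tzinfo=timezone.utc)
    pool = [
        Runner("i-busy", True, now - timedelta(hours=1)),
        Runner("i-idle", False, now - timedelta(minutes=30)),
        Runner("i-new", False, now - timedelta(minutes=1)),
    ]
    print(runners_to_terminate(pool, now))
```

For each returned ID, the Lambda would first deregister the runner from GitHub and then terminate the instance, in that order, so GitHub never assigns a job to a machine that is already shutting down.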

What's Next?

So far, we've defined a solid foundation of components that are crucial for building a cost-effective and scalable solution for GitHub runners. These components include the GitHub App, API Gateway, Lambda functions, SQS, S3, EC2, SSM Parameters, Amazon EventBridge, and CloudWatch. These components work together to provide a robust and dynamic infrastructure that can seamlessly handle fluctuating workloads.

Next, we'll embark on the practical implementation of this infrastructure using Terraform, an infrastructure as code (IaC) tool. Terraform will enable us to automate the provisioning of AWS resources, ensuring consistency and repeatability in our infrastructure setup. We'll delve into the process of creating the necessary AWS resources, including EC2 instances, VPCs, and IAM roles.

We'll also configure the GitHub App to act as a bridge between GitHub and AWS, triggering the creation or removal of EC2 instances based on webhook events. This will ensure that we always have the right number of runners available to handle the current workload.

Finally, we'll set up Lambda functions to orchestrate the scaling of runner instances. Lambda functions will be responsible for verifying the authenticity of incoming webhook events, processing them, and triggering the scaling up or scaling down of EC2 instances based on demand. This will ensure that our infrastructure is always optimized for cost and performance.

By the end of this series, you'll have a comprehensive understanding of how to build and deploy a scalable and cost-effective runner infrastructure on AWS using Terraform. You'll be able to leverage this infrastructure to improve your CI/CD performance and reduce your infrastructure costs.

Stay tuned for Part 2: Building the Self-Hosted On-Demand Runner Infrastructure with Terraform
