Kane Hooper

Posted on January 18, 2023

In previous articles I have covered how you can use OpenAI in your Ruby application to take advantage of the GPT-3 AI model. While it is powerful, it can be complicated to use, especially when it comes to getting the specific responses you want. There are two key components to achieving success with GPT-3: the prompt and the temperature parameter.

In this article, we’ll look at how to master the temperature parameter when using OpenAI with Ruby. If you need an introduction to working with Ruby and OpenAI check out this article:

How GPT generates its output

GPT-3 was trained on a massive dataset drawn from over 45TB of text, including books, articles, and webpages. This data was used to train the AI to recognise patterns and respond appropriately. Later models in the family, such as text-davinci-003, were further tuned with reinforcement learning from human feedback so that their responses better match what people ask for.

When you provide a prompt to the AI model as a developer, it generates output one word at a time, choosing each next word according to probabilities learned from its training data.
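To make the idea of choosing the next word by probability concrete, here is a minimal sketch of weighted random sampling in plain Ruby. The prompt, the candidate words and their probabilities are invented for illustration, and `sample_next_word` and `most_likely_word` are hypothetical helper names; this is not how OpenAI implements generation internally, only the general idea.

```ruby
# Hypothetical next-word probabilities for the prompt "The sky is"
# (illustrative values only, not taken from any real model).
NEXT_WORD_PROBS = {
  "blue"    => 0.90,
  "clear"   => 0.07,
  "falling" => 0.03
}.freeze

# Pick one word at random, weighted by its probability.
def sample_next_word(probs, rng: Random.new)
  threshold = rng.rand * probs.values.sum
  cumulative = 0.0
  probs.each do |word, prob|
    cumulative += prob
    return word if threshold < cumulative
  end
  probs.keys.last # guard against floating point rounding
end

# The single most likely word (the "greedy" choice).
def most_likely_word(probs)
  probs.max_by { |_word, prob| prob }.first
end

# Sample many times to see how often each word is chosen.
rng = Random.new(42)
counts = Hash.new(0)
10_000.times { counts[sample_next_word(NEXT_WORD_PROBS, rng: rng)] += 1 }

puts counts.inspect
puts most_likely_word(NEXT_WORD_PROBS)
```

Over many samples the likely word dominates, but the unlikely words still appear now and then, which is why the same prompt can produce different output.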

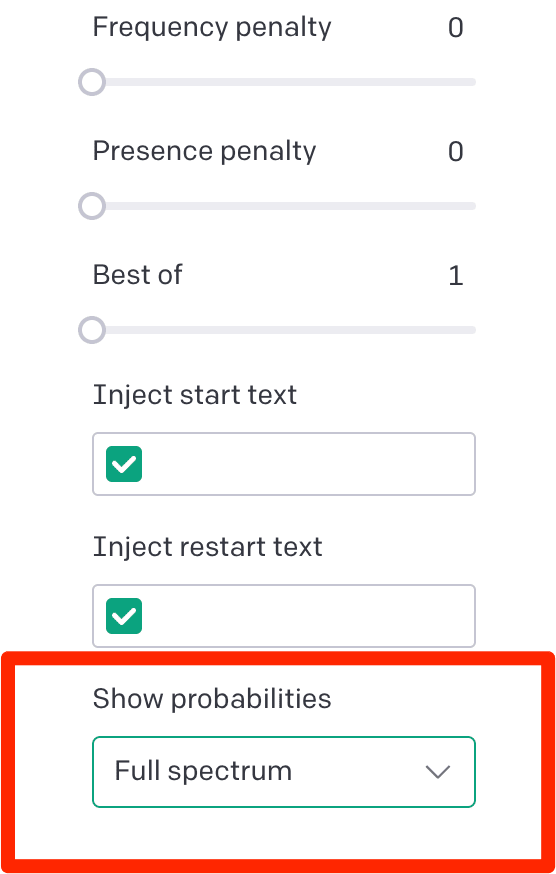

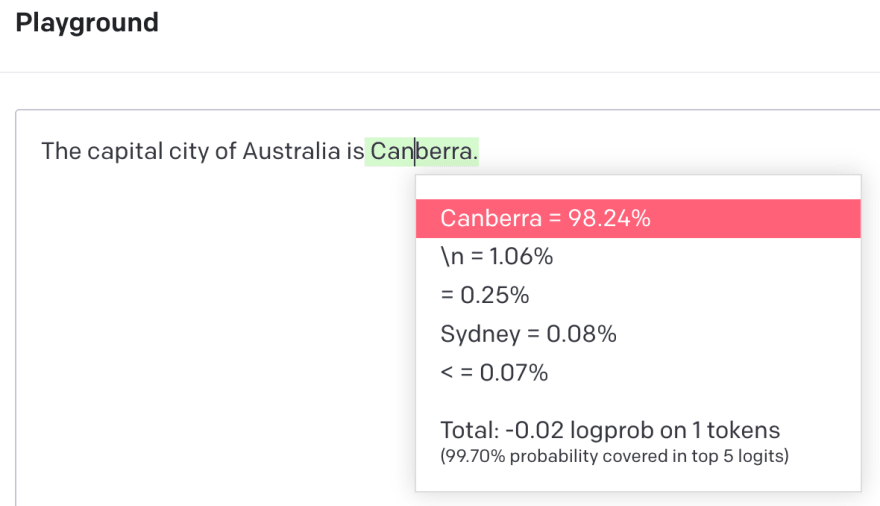

The OpenAI playground’s “show probabilities” setting allows developers to see the probabilities of each word in the output. This can help you understand why the AI chose a particular word or phrase. It displays a visual representation of how the temperature parameter is likely to affect the output. Developers can use this knowledge to fine-tune their applications to produce the desired results.

Here is an example using the OpenAI playground.

I provided the prompt:

The capital city of Australia is

With the temperature set low I received the following output:

Canberra.

This, by the way, is the correct answer. But using the “show probabilities” tool I was able to see the other candidate words that GPT-3 might have drawn on.

Based on GPT-3’s training data, there is a 0.08% probability of the next word being ‘Sydney’. Whether GPT actually responds with ‘Sydney’ rather than ‘Canberra’ depends on the temperature setting: the higher the temperature, the more likely a low-probability word like this is to be selected.

GPT-3 is not infallible. Its accuracy is ultimately determined by the data it was trained on. The 0.08% figure suggests that, in a small share of its training text, the phrase “The capital city of Australia is” was followed by ‘Sydney’. Be very aware of this when working with the AI model: it is only as good as the accuracy of its training data.
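Outside the playground, the Completions API can return similar information when the `logprobs` parameter is set: each generated token then comes with a hash of candidate tokens and their natural-log probabilities. The sketch below converts such a hash into percentages. The log-probability values are hypothetical, chosen only so that ‘Sydney’ lands near the 0.08% mentioned above, and `logprobs_to_percentages` is an illustrative helper, not part of any gem.

```ruby
# Hypothetical `top_logprobs` entry for one generated token, shaped like the
# token => log-probability hash returned when `logprobs` is requested.
top_logprobs = {
  " Sydney"   => -7.13,
  " Canberra" => -0.0021
}

# Convert natural-log probabilities into percentages, most likely first.
def logprobs_to_percentages(top_logprobs)
  top_logprobs
    .transform_values { |logprob| (Math.exp(logprob) * 100).round(2) }
    .sort_by { |_token, pct| -pct }
    .to_h
end

logprobs_to_percentages(top_logprobs).each do |token, pct|
  puts "#{token.strip}: #{pct}%"
end
```

Logging these percentages alongside the model's answer is one way to spot prompts where a wrong answer has an uncomfortably high probability.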

What is the Temperature Parameter?

The temperature parameter is the setting in OpenAI that affects the randomness of the output. A lower temperature results in more predictable output, while a higher temperature results in more random output. In this article the temperature is set between 0 and 1, with 0 being the most predictable and 1 being the most random; the API also accepts values up to 2, which make the output more random still.

With a temperature of 0, GPT-3 will select the most probable response each time. When the temperature parameter is set to 1, the randomness of the output is increased. This means that the AI model will produce more unpredictable results and is less likely to repeat the same output for a given prompt. As the temperature is increased, the probabilities are spread more evenly across the candidate words, so the output becomes more random. As the temperature is decreased, the probabilities concentrate on the most likely words, so the output becomes more predictable.
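The effect of temperature on the probabilities can be sketched with the commonly used softmax-with-temperature formulation, in which each probability is raised to the power 1/T and the results are renormalised. This is an assumption about the general technique, not a description of OpenAI's internal implementation; the word probabilities are hypothetical and `apply_temperature` is an illustrative helper name.

```ruby
# Rescale a probability distribution with a temperature:
# raise each probability to the power 1/T, then renormalise.
def apply_temperature(probs, temperature)
  # Temperature 0 is treated as "always pick the most likely word".
  if temperature.zero?
    best = probs.max_by { |_word, prob| prob }.first
    return probs.to_h { |word, _prob| [word, word == best ? 1.0 : 0.0] }
  end

  scaled = probs.transform_values { |prob| prob**(1.0 / temperature) }
  total = scaled.values.sum
  scaled.transform_values { |prob| prob / total }
end

# Hypothetical next-word probabilities (illustrative values only).
probs = { "Canberra" => 0.90, "Sydney" => 0.07, "Melbourne" => 0.03 }

[0, 0.2, 0.5, 1.0].each do |t|
  rescaled = apply_temperature(probs, t)
  formatted = rescaled.map { |word, prob| format("%s %.4f", word, prob) }.join(", ")
  puts "temperature #{t}: #{formatted}"
end
```

Under this formulation a temperature of 1 leaves the distribution unchanged, while lower values push almost all of the probability onto the most likely word.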

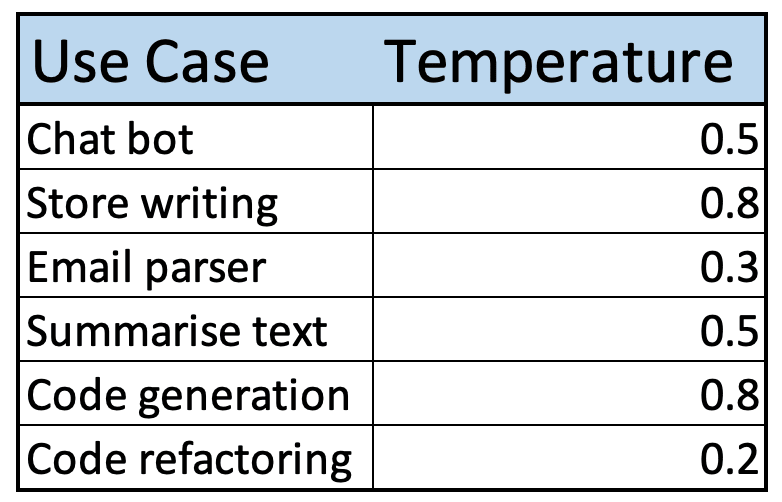

Suggested Temperature Settings

Here are some recommended temperature settings for different use cases. Note that the best way to achieve the desired output is to test the temperature setting within the playground and use the “show probabilities” tool to debug your output.

Note the difference between code generation and code refactoring. When using OpenAI for code generation, use a higher temperature setting, such as 0.8 or higher. This allows the AI to generate more varied and creative code, which can be particularly useful for generating complex programs.

For code refactoring use a lower temperature setting, such as 0.2 or 0.3. This will ensure that the AI produces more accurate responses and is less likely to make mistakes.
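One way to keep these suggestions in a single place in a Ruby application is a small lookup table. This is only a sketch: the constant and method names are hypothetical, the values are the ones mentioned in this article (including the 0.5 used for sentiment analysis in the next section), and the fallback default is an assumption.

```ruby
# Temperatures suggested in this article, keyed by use case.
SUGGESTED_TEMPERATURES = {
  code_generation: 0.8,    # "0.8 or higher"
  code_refactoring: 0.2,   # "0.2 or 0.3"
  sentiment_analysis: 0.5
}.freeze

# Look up the suggested temperature; the 0.5 fallback is an assumption.
def temperature_for(use_case, default: 0.5)
  SUGGESTED_TEMPERATURES.fetch(use_case, default)
end

puts temperature_for(:code_refactoring)
puts temperature_for(:chatbot) # not in the table, falls back to the default
```

Treat these numbers as starting points and adjust them after testing your own prompts.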

Using the Temperature Parameter in Ruby

Here is an example of how to use the temperature parameter in Ruby. This code returns the sentiment of an email as positive, negative or neutral.

A temperature setting of around 0.5 is recommended for sentiment analysis. This ensures that the AI can correctly interpret the sentiment of the text and deliver the desired results.

require "ruby/openai"

# Prints the sentiment of an email as positive, neutral or negative.
def analyse_email(email)
  client = OpenAI::Client.new(access_token: 'YOUR_API_TOKEN')

  prompt = "Provide a sentiment analysis of the following email. " \
           "Your response should be positive, neutral or negative." \
           "\n\nEmail: #{email}"

  response = client.completions(
    parameters: {
      model: "text-davinci-003",
      prompt: prompt,
      temperature: 0.5, # moderate randomness, as recommended above
      max_tokens: 10    # a one-word answer needs very few tokens
    }
  )

  # Strip the leading whitespace that precedes the model's answer
  puts response['choices'][0]['text'].lstrip
end

email = "I am happy with the service received by your team. Even though it " \
        "was late, they provided a very high quality result which I am " \
        "grateful for."

analyse_email(email)

Output:

Positive

Conclusion

The temperature parameter in OpenAI is a critical setting that can be used to control the randomness of the AI’s output. Using the temperature parameter in Ruby is straightforward and requires only a few lines of code. With this knowledge, Ruby developers can take advantage of the temperature parameter when developing AI applications with OpenAI.

Kane Hooper is the CEO of reinteractive, the longest running dedicated Ruby on Rails development firm in the world.

You can contact Kane directly for any help with your Ruby on Rails application.
