Operating System

limjy

Posted on May 9, 2021

My summary of Operating system self study

TOC

Memory

Memory

- CPU-virtualization

- Time Sharing

- Address Space

- Stack VS heap Memory

- Virtualization

- Address Translation: MMU

- Segmentation

- Fragmentation

- Paging-Intro

- Page Table

- Page Table contents

- TLB

- Virtual Memory: Secondary memory

- Swap Space

- Page Fault

- Page Replacement + Caching

- Paging: conclusion

Processes & threads

- Process

- Context Switching

- Thread

- User level VS kernel level thread

- MultiThreading

- MultiThreading models

- Process VS Threads

Caching

- Cache Memory

- caching

- LRU

Concurrency

- Deadlock

- Semaphore

Memory Basics

https://www.geeksforgeeks.org/introduction-to-memory-and-memory-units/

Memory is made up of registers. Each register in memory is one storage (memory) location, identified by an address.

word = a group of bits in which a memory unit stores binary information

byte = a word made of a group of 8 bits

Operating System

An operating system is a program that controls the execution of application programs and acts as an interface between the user of a computer and the computer hardware.

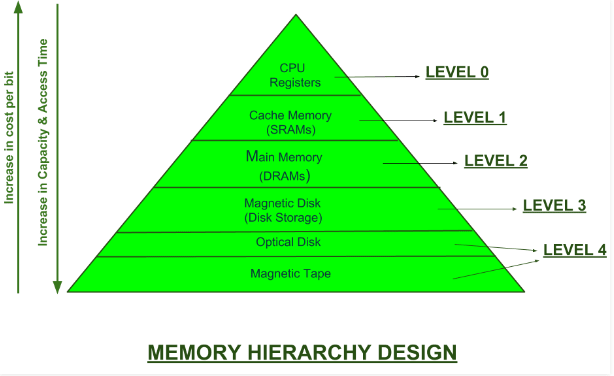

Memory Hierarchy Design

This section is taken from GeeksforGeeks.

Memory hierarchy is an enhancement to the organization of memory that minimizes access time.

There are 2 main types of memory:

- External / Secondary memory

- magnetic / optical disk, magnetic tape; accessible by the processor via an I/O module

- Internal / Primary memory

- main / cache memory & CPU registers; directly accessible by the processor

RAM Memory

RAM is part of the computer's main memory and is directly accessible by the CPU.

RAM is volatile: if the power is off, the stored information is lost.

RAM is used to store the data currently being processed by the CPU.

It comes in 2 forms:

- Static RAM (SRAM)

- used to build cache memory

- consists of circuits capable of retaining their state as long as power is on

- fast

- Dynamic RAM (DRAM)

- used for main memory (where currently opened programs / processes reside); variables, state data and the OS all live in DRAM

- the stored charge leaks away over time, so the capacitors must be periodically refreshed (recharged) to retain the data

- less expensive than SRAM

Further reading

https://www.quora.com/What-is-the-difference-between-DRAM-cache-memory

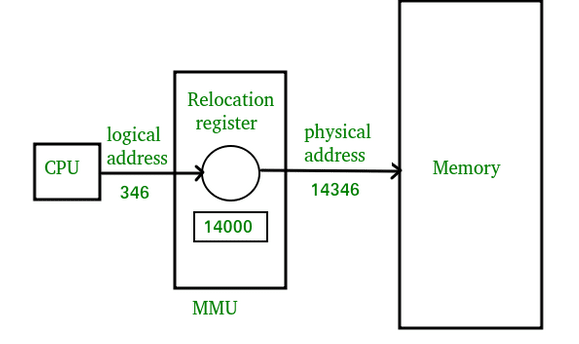

OS: Logical VS Physical Address

logical address: generated by the CPU while a program is running. It does not exist physically; the CPU uses it as a reference to access a physical memory location.

physical address: the physical location of the required data in memory. The user never deals with physical addresses directly but accesses them through logical addresses.

Logical addresses are mapped to physical addresses by the MMU (memory management unit).

WHY

- isolation & protection

- Logical / virtual addresses are needed for secure memory management. If processes could directly modify physical memory, they could disrupt other processes. (see SO answer)

- ease of use

- The OS gives each program the view that it has a large contiguous address space to put its code and data in, so programmers need not worry about memory space / addresses.

Taken from GeeksforGeeks.

CPU Virtualization

Each process accesses its own private virtual address space, which the OS maps to the physical memory of the machine.

As far as the running program is concerned, it has physical memory all to itself. In reality, however, the physical memory is shared among all processes and managed by the OS.

Users often run more than one program at once. A user is never concerned with whether the CPU is available, only with running programs --> the illusion of many CPUs.

The OS creates this illusion by virtualizing the CPU.

- Time sharing

- run 1 process, stop it & run another (implemented via context-switching)

The OS also has policies:

- Scheduling policy

- given a number of possible programs to run on the CPU, the policy decides which program the OS should run.

Time Sharing

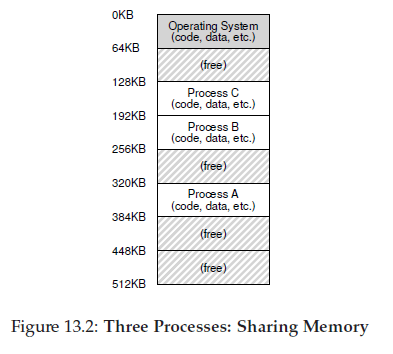

In multiprogramming, multiple processes are ready to run at a given time, and the OS switches between them.

Time-sharing

- run 1 process for a short while

- give it full access to all memory, then stop it, save all its state to some kind of disk, load another process's state, and run that for a while

- very slow

- saving the entire contents of memory to disk is very slow

- instead, leave processes in memory while switching between them

- this lets the OS implement time sharing efficiently

- with multiple programs residing in memory concurrently, protection becomes important: a process should not be able to read or write another process's memory.
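Round robin is one common scheduling policy for time sharing (it is not named in the sources above). The Python sketch below simulates it on a single CPU, assuming hypothetical burst times and a fixed time slice (`quantum`); it only records which process runs when, not real process state.

```python
from collections import deque


def round_robin(burst_times: dict[str, int], quantum: int) -> list[tuple[str, int, int]]:
    """Simulate time sharing on one CPU; return (process, start, end) slices."""
    ready = deque(burst_times.items())  # ready queue of (name, remaining time)
    timeline: list[tuple[str, int, int]] = []
    clock = 0
    while ready:
        name, remaining = ready.popleft()
        run = min(quantum, remaining)  # run for a short while (one time slice)
        timeline.append((name, clock, clock + run))
        clock += run
        if remaining > run:
            # Not finished: context switch and put it at the back of the queue.
            ready.append((name, remaining - run))
    return timeline


for name, start, end in round_robin({"A": 5, "B": 2, "C": 4}, quantum=2):
    print(f"{name}: {start}-{end}")
```

A smaller quantum makes the processes look more "simultaneous" to the user, at the cost of more context switches.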

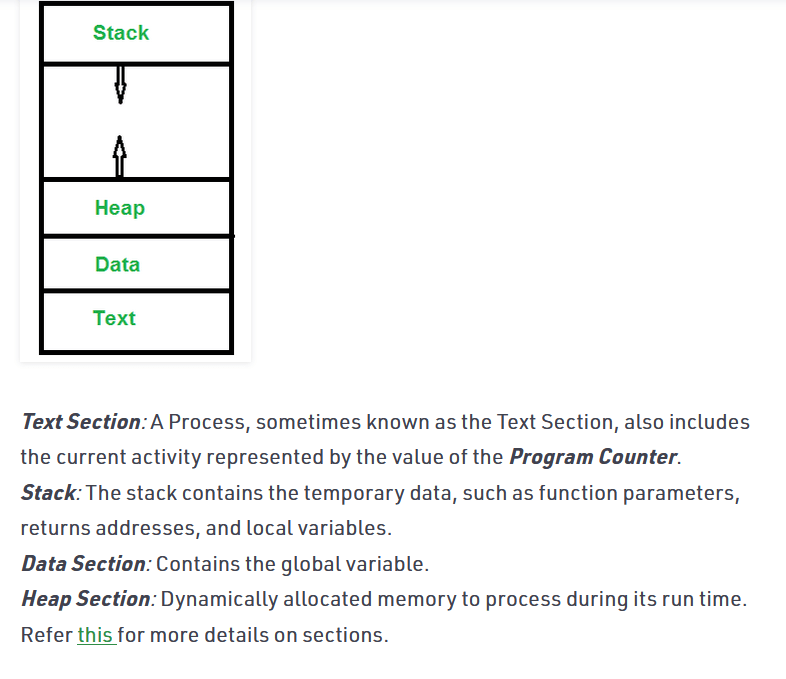

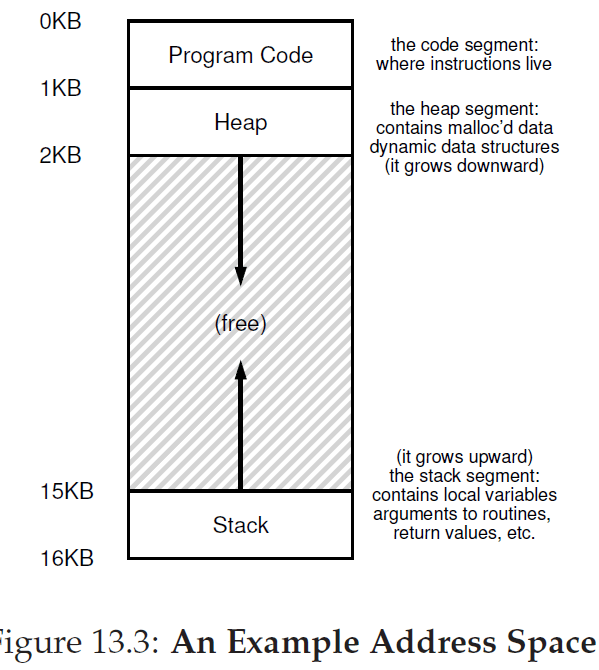

Address Space

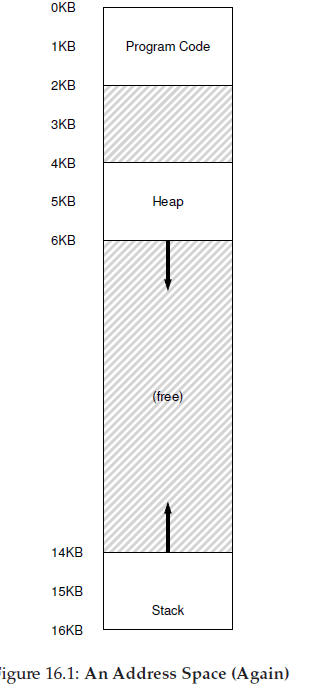

The address space is the OS's easy-to-use abstraction of physical memory.

The address space of a process contains all the memory state of the running program:

- code

- the program's instructions live in memory

- stack

- keeps track of where the program is in its chain of function calls

- allocates local variables

- passes parameters

- heap

- dynamically-allocated, user-managed memory

- e.g. creating a new object in Java

Stack VS heap memory

Stack

- stores temporary values created by functions

- when the function (task) completes, its memory is automatically freed

- contains method call frames, local variables and reference variables

Heap

- stores dynamically allocated objects (in Java, static variables live here too)

The heap memory region has nothing to do with the heap data structure.

Stack

- accessed only from the top (push onto and pop off the top)

- quick access speed

Heap

- data can be taken out from anywhere --> fragmentation

https://icarus.cs.weber.edu/~dab/cs1410/textbook/4.Pointers/memory.html

https://www.guru99.com/stack-vs-heap.html

Virtualization

virtualizing memory is when OS provides layer of abstraction to running program.

The running program thinks it is loaded into memory at particular address and has potentially very large address space but the reality if quite different.

Address Traslation: MMU

Address tarnslation: converting hardware address to virtual address to program is done by *memory management unit (MMU)

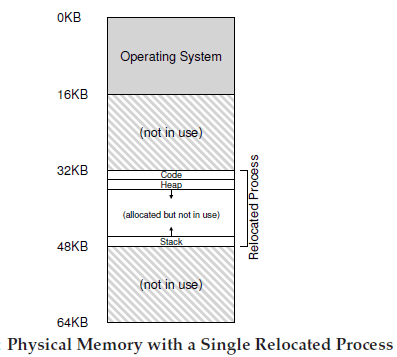

Technique is dynamic relocation There are two hardware registers. one base & one bounds / limits.

The base-and-bounds register will allow OS to place address space anywhere in physical memory.

In virutal memory there is 0-16KB memory. base and bounds register will put it in 32KB-48KB of physical address.
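The dynamic-relocation check can be sketched in Python. `BaseBoundsMMU` is a hypothetical toy class, not a real API; the base of 32KB and bounds of 16KB come from the example, and raising `MemoryError` stands in for the exception real hardware would raise on an out-of-bounds access.

```python
KB = 1024


class BaseBoundsMMU:
    """Toy MMU with one base and one bounds register (dynamic relocation)."""

    def __init__(self, base: int, bounds: int) -> None:
        self.base = base      # physical address where the address space starts
        self.bounds = bounds  # size of the address space in bytes

    def translate(self, virtual_addr: int) -> int:
        # Hardware checks the bounds first, then adds the base.
        if not 0 <= virtual_addr < self.bounds:
            raise MemoryError(f"out-of-bounds access at virtual address {virtual_addr}")
        return self.base + virtual_addr


mmu = BaseBoundsMMU(base=32 * KB, bounds=16 * KB)
print(mmu.translate(0))       # 32768 (32KB)
print(mmu.translate(1 * KB))  # 33792
```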

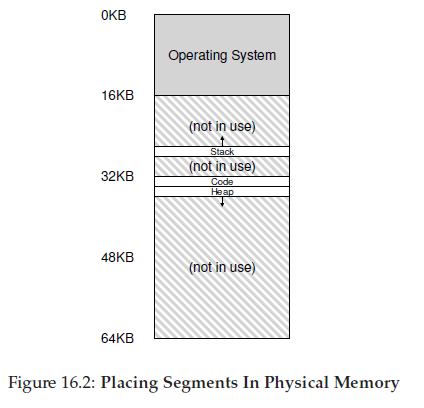

Segmentation: Generalized Base / bounds

Instead of having 1 base/bounds pair, have a base/bounds pair per logical segment of the address space (code, stack & heap).

The hardware must keep track of each segment and the direction it grows in (the stack grows backwards).
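A minimal sketch of per-segment base/bounds translation. It assumes an OSTEP-style layout that is not in the text above: 14-bit virtual addresses whose top 2 bits select the segment, a 4KB maximum segment size, and made-up base/size values. Because the stack grows backwards, its offset is turned into a negative offset from the base before the bounds check.

```python
from dataclasses import dataclass

KB = 1024
SEG_SHIFT = 12                     # assumption: top 2 bits of a 14-bit address pick the segment
OFFSET_MASK = (1 << SEG_SHIFT) - 1
MAX_SEG_SIZE = 1 << SEG_SHIFT      # 4KB per segment


@dataclass
class Segment:
    base: int                      # physical start (for the stack: the top it grows down from)
    size: int                      # current size in bytes (the bounds)
    grows_positive: bool = True


# Hypothetical segment table; selector 0b10 is unused.
segments = {
    0b00: Segment(base=32 * KB, size=2 * KB),                        # code
    0b01: Segment(base=34 * KB, size=3 * KB),                        # heap
    0b11: Segment(base=28 * KB, size=2 * KB, grows_positive=False),  # stack
}


def translate(vaddr: int) -> int:
    seg = segments.get(vaddr >> SEG_SHIFT)
    offset = vaddr & OFFSET_MASK
    if seg is None:
        raise MemoryError("segmentation fault: no such segment")
    if seg.grows_positive:
        if offset >= seg.size:
            raise MemoryError("segmentation fault: offset past bounds")
        return seg.base + offset
    neg_offset = offset - MAX_SEG_SIZE  # backwards-growing segment
    if -neg_offset > seg.size:
        raise MemoryError("segmentation fault: offset past bounds")
    return seg.base + neg_offset


print(translate(100))      # code:  32868
print(translate(4200))     # heap:  34920
print(translate(15 * KB))  # stack: 27648
```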

Fragmentation

Physical memory becomes full of little holes of free space, making it difficult to allocate new segments or grow existing ones.

This problem is caused by segmentation:

- In the simple base-and-bounds MMU, each address space is the same size, so physical memory is just a bunch of slots that processes fit into

- With segmentation, there are many segments per process and each segment can be a different size

- Physical memory can become full of little holes

Solutions

- Compact physical memory

- compact physical memory by rearranging the existing segments

- Management algorithm

- an algorithm that tries to keep large extents of memory available for allocation

- e.g. best-fit / worst-fit / buddy algorithm

External fragmentation will always exist; these algorithms only minimize it.
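A sketch of how a management algorithm picks a free hole, assuming the free list is a simple Python list of `(start, size)` pairs. Best-fit and worst-fit come from the text; first-fit is added here only for comparison, and the buddy algorithm is not shown. The last call illustrates external fragmentation: 60 bytes are free in total, but no single hole can hold 35.

```python
from typing import Optional

Hole = tuple[int, int]  # (start address, size)


def pick_hole(free_list: list[Hole], request: int, strategy: str) -> Optional[int]:
    """Return the index of the hole the strategy chooses, or None if nothing fits."""
    candidates = [(i, size) for i, (_, size) in enumerate(free_list) if size >= request]
    if not candidates:
        return None
    if strategy == "first-fit":
        return candidates[0][0]
    if strategy == "best-fit":   # smallest hole that is big enough
        return min(candidates, key=lambda c: c[1])[0]
    if strategy == "worst-fit":  # largest hole, leaving the biggest leftover
        return max(candidates, key=lambda c: c[1])[0]
    raise ValueError(f"unknown strategy: {strategy}")


def allocate(free_list: list[Hole], request: int, strategy: str) -> Optional[int]:
    i = pick_hole(free_list, request, strategy)
    if i is None:
        return None  # no single hole is large enough
    start, size = free_list[i]
    if size == request:
        free_list.pop(i)  # exact fit: the hole disappears
    else:
        free_list[i] = (start + request, size - request)  # shrink the hole
    return start


holes = [(0, 10), (20, 30), (60, 20)]
print(allocate(list(holes), 15, "best-fit"))   # 60
print(allocate(list(holes), 15, "worst-fit"))  # 20
print(allocate(list(holes), 35, "first-fit"))  # None
```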

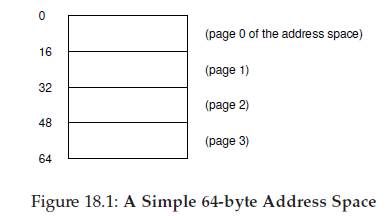

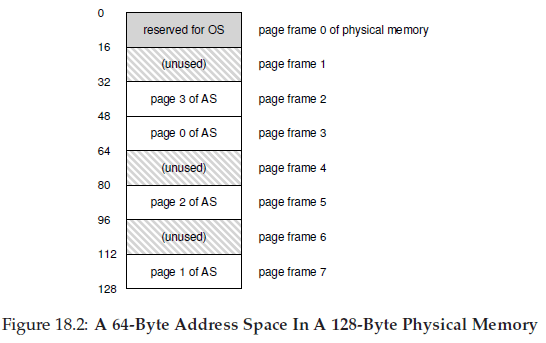

Paging

Virtual memory: the address space is divided into fixed-size pieces called pages,

as opposed to dividing it into some number of variable-sized logical segments.

Physical memory: physical memory is viewed as an array of fixed-size slots called page frames; each frame can contain a single virtual-memory page.

This helps prevent the fragmentation that occurs when dividing space into different-size chunks (as in segmentation).

Pages of the virtual address space can be placed at different locations throughout physical memory.

Advantages

- flexibility

- can effectively support the abstraction of an address space; no need to make assumptions about the direction in which the heap / stack grow

- simplicity

- if the OS needs to place a 4-page address space in an 8-page physical memory, it just finds 4 free pages

Disadvantages

Paging can be slow, because on every memory access the hardware must:

- know where the page table for the currently running process is

- fetch the proper page table entry from the process's page table

- perform the translation from virtual to physical address

- load the data from physical memory

Page Table

Used for address translation.

It is a per-process data structure that records where each virtual page of the address space is placed in physical memory.

It's per-process: if another process were to run, the OS would have to manage a different page table for it, since its virtual pages map to different physical pages (isolation --> no sharing of memory).

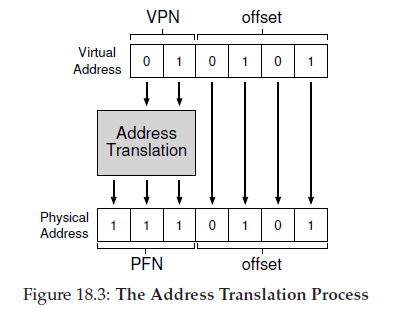

Virtual Address -> Physical Address

The address is split into 2 parts:

- VPN: Virtual Page Number

- offset: offset within the page (which byte within the page)

When converting:

- VPN: converted to a PFN (physical frame number)

- offset: unchanged
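The VPN/offset split comes down to bit operations. This sketch assumes 4KB pages (so the low 12 bits are the offset) and a hypothetical four-entry linear page table; only the VPN is looked up, and the offset is copied unchanged into the physical address.

```python
PAGE_SIZE = 4096                          # assumption: 4KB pages
OFFSET_BITS = PAGE_SIZE.bit_length() - 1  # 12
OFFSET_MASK = PAGE_SIZE - 1

# Hypothetical linear page table: index = VPN, value = PFN
page_table = [3, 7, 5, 2]


def split(vaddr: int) -> tuple[int, int]:
    """Return (VPN, offset) for a virtual address."""
    return vaddr >> OFFSET_BITS, vaddr & OFFSET_MASK


def translate(vaddr: int) -> int:
    vpn, offset = split(vaddr)
    pfn = page_table[vpn]                 # VPN -> PFN via the page table
    return (pfn << OFFSET_BITS) | offset  # offset is not changed


vaddr = 0x1ABC
print(split(vaddr))           # (1, 2748), i.e. VPN 1, offset 0xABC
print(hex(translate(vaddr)))  # 0x7abc
```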

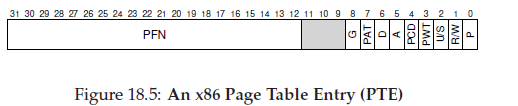

Page Table Contents

The page table is just a data structure mapping virtual addresses to physical addresses. The simplest one is a linear page table (an array indexed by VPN).

Each page table entry (PTE) can include:

- protection bits

- whether the page can be read / written / executed

- present bit

- whether the page is in physical memory or on disk (i.e. it's swapped out)

- dirty bit

- whether the page has been modified since it was brought into memory

- reference bit

- tracks whether the page was accessed, to determine which pages are popular and should be kept in memory --> useful for page replacement
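One way to pack these bits into a single PTE is as flags stored next to the PFN. The bit positions and `PFN_SHIFT` below are assumptions chosen for illustration; real architectures define their own layouts.

```python
# Hypothetical flag layout: real hardware (e.g. x86) defines its own bit positions.
PRESENT = 1 << 0     # page is in physical memory (0 = swapped out to disk)
WRITABLE = 1 << 1    # protection: page may be written
EXECUTABLE = 1 << 2  # protection: page may be executed
DIRTY = 1 << 3       # page modified since it was brought into memory
REFERENCED = 1 << 4  # page accessed recently (input to page replacement)

PFN_SHIFT = 12       # assumption: flags in the low 12 bits, PFN in the bits above


def make_pte(pfn: int, flags: int) -> int:
    return (pfn << PFN_SHIFT) | flags


def pte_pfn(pte: int) -> int:
    return pte >> PFN_SHIFT


def has_flag(pte: int, flag: int) -> bool:
    return (pte & flag) != 0


pte = make_pte(7, PRESENT | WRITABLE)
pte |= DIRTY | REFERENCED         # what the hardware would set on a write access
print(pte_pfn(pte))               # 7
print(has_flag(pte, DIRTY))       # True
print(has_flag(pte, EXECUTABLE))  # False
```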

TLB

The translation lookaside buffer (TLB) is part of the chip's MMU. It is an address-translation cache:

a hardware cache for popular virtual-to-physical address translations.

It speeds up address translation & paging.

Context Switch

Translations are only valid for the process that owns them. To handle this,

- the OS flushes the TLB on a context switch, or

- hardware support

- provide an address space identifier (ASID, something like a process identifier (PID)) in each TLB entry
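A sketch of a TLB tagged with address space identifiers, so translations from different processes can coexist without a flush. The `TLB` class, its capacity of 4, its LRU eviction and the `page_tables` dictionary are all hypothetical choices for illustration; `flush()` shows the alternative of clearing everything on a context switch.

```python
from collections import OrderedDict
from typing import Optional


class TLB:
    """Toy TLB: a small cache of (ASID, VPN) -> PFN translations with LRU eviction."""

    def __init__(self, capacity: int = 4) -> None:
        self.capacity = capacity
        self.entries: OrderedDict[tuple[int, int], int] = OrderedDict()
        self.hits = 0
        self.misses = 0

    def lookup(self, asid: int, vpn: int) -> Optional[int]:
        key = (asid, vpn)
        if key in self.entries:
            self.entries.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self.entries[key]
        self.misses += 1
        return None

    def insert(self, asid: int, vpn: int, pfn: int) -> None:
        self.entries[(asid, vpn)] = pfn
        self.entries.move_to_end((asid, vpn))
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used entry

    def flush(self) -> None:
        """The no-ASID alternative: drop every entry on a context switch."""
        self.entries.clear()


# Hypothetical per-process page tables: ASID -> {VPN: PFN}
page_tables = {1: {0: 5, 1: 9}, 2: {0: 3}}
tlb = TLB()


def translate_vpn(asid: int, vpn: int) -> int:
    pfn = tlb.lookup(asid, vpn)
    if pfn is None:  # TLB miss: walk the page table, then cache the result
        pfn = page_tables[asid][vpn]
        tlb.insert(asid, vpn, pfn)
    return pfn


print(translate_vpn(1, 0))   # 5 (miss)
print(translate_vpn(2, 0))   # 3 (miss: same VPN, different ASID, no clash)
print(translate_vpn(1, 0))   # 5 (hit)
print(tlb.hits, tlb.misses)  # 1 2
```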

Virtual Memory: Secondary memory

https://www.geeksforgeeks.org/virtual-memory-in-operating-system/

Virtual Memory is a storage allocation scheme in which secondary memory can be addressed as though it were part of main memory

To support many concurrently running large address spaces, the OS can use secondary memory (hard disk). The OS makes use of this larger, slower device to provide the illusion of a large virtual address space.

Further Reading:

https://www.guru99.com/virtual-memory-in-operating-system.html

https://www.geeksforgeeks.org/virtual-memory-in-operating-system/

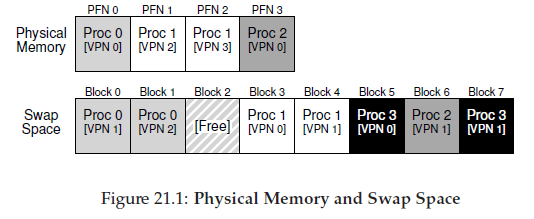

Swap Space

Swap space is space reserved on the disk for moving pages back and forth.

The OS needs to remember the disk address of a given page.

Proc 0-2 are actively sharing physical memory; only some of their valid pages are in memory, the others are in swap space on disk.

Proc 4 has all its pages swapped out to disk and isn't currently running.

Page Fault

A page fault occurs when a process accesses a memory page that is mapped into its virtual address space but not loaded in physical memory.

Remember that the page table entry (PTE) has a present bit, which records whether the page is present in physical memory.

The OS handles the page fault (in the page-fault handler).

If the page is not present and has been swapped to disk, the OS needs to swap the page into memory to service the page fault.

Page Replacement

https://www.geeksforgeeks.org/page-replacement-algorithms-in-operating-systems/

Page replacement policy: when physical memory is full, the OS must pick a page to kick out (replace) to make room for a page being brought in from swap space.

- FIFO (first in, first out)

- kick out the page that was brought into memory earliest

- Optimal Page Replacement

- kick out the page that will not be used for the longest period of time in the future

- LRU (least recently used)

- kick out the page that has been least recently used
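The three policies can be compared by counting page faults on a reference string. This is a simulation sketch: the reference string and the frame count of 4 are arbitrary choices, and the optimal policy needs the future references, which a real OS does not have.

```python
from collections import OrderedDict, deque


def fifo_faults(refs: list[int], frames: int) -> int:
    resident: set[int] = set()
    queue: deque[int] = deque()
    faults = 0
    for page in refs:
        if page in resident:
            continue
        faults += 1
        if len(resident) == frames:
            resident.remove(queue.popleft())  # evict the page that arrived first
        resident.add(page)
        queue.append(page)
    return faults


def lru_faults(refs: list[int], frames: int) -> int:
    cache: OrderedDict[int, None] = OrderedDict()
    faults = 0
    for page in refs:
        if page in cache:
            cache.move_to_end(page)  # most recently used goes to the end
            continue
        faults += 1
        if len(cache) == frames:
            cache.popitem(last=False)  # evict the least recently used page
        cache[page] = None
    return faults


def optimal_faults(refs: list[int], frames: int) -> int:
    resident: set[int] = set()
    faults = 0
    for i, page in enumerate(refs):
        if page in resident:
            continue
        faults += 1
        if len(resident) == frames:
            future = refs[i + 1:]
            # Evict the page whose next use is farthest away (or never comes).
            victim = max(resident, key=lambda p: future.index(p) if p in future else len(future))
            resident.remove(victim)
        resident.add(page)
    return faults


refs = [7, 0, 1, 2, 0, 3, 0, 4, 2, 3, 0, 3, 2]
for name, fn in [("FIFO", fifo_faults), ("LRU", lru_faults), ("OPT", optimal_faults)]:
    print(name, fn(refs, 4))
```

With 4 frames this prints 7, 6 and 6 faults. The optimal policy is mainly useful as a baseline for judging the others.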

Paging: Putting it all together

What happens when a program fetches some data from memory?

- Find the VPN from the virtual address

- Check the TLB (cache) to translate the VPN to a PPN

- TLB hit (cache hit)

- physical address = PPN combined with the offset (PPN × page size + offset)

- TLB miss

- check the page table entry (PTE) in the page table

- PTE is present

- grab the PPN from the PTE, insert it into the TLB and retry

- PTE is absent (page fault)

- the OS page-fault handler runs

- find a physical frame for the page to be in

- No free frame

- wait for the replacement algorithm to kick out a page

- Read the page in from swap space (then retry)
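These steps can be traced in a toy simulator. `ToyVM` is hypothetical: it assumes 4KB pages, a single process, only 2 physical frames (so faults happen quickly) and LRU replacement, and it skips the actual disk I/O of reading pages from, or writing dirty pages to, swap space.

```python
from collections import OrderedDict
from dataclasses import dataclass
from typing import Optional

PAGE_SIZE = 4096  # assumption: 4KB pages
NUM_FRAMES = 2    # assumption: tiny physical memory so page faults happen quickly


@dataclass
class PTE:
    present: bool = False
    pfn: Optional[int] = None


class ToyVM:
    """Hypothetical single-process model of the lookup steps listed above."""

    def __init__(self, num_pages: int) -> None:
        self.page_table = [PTE() for _ in range(num_pages)]
        self.tlb: dict[int, int] = {}  # VPN -> PFN cache
        self.free_frames = list(range(NUM_FRAMES))
        self.resident: OrderedDict[int, None] = OrderedDict()  # VPNs in memory, LRU first
        self.log: list[str] = []

    def access(self, vaddr: int) -> int:
        vpn, offset = divmod(vaddr, PAGE_SIZE)  # find VPN and offset
        while True:
            if vpn in self.tlb:  # TLB hit
                self.log.append("tlb-hit")
                self.resident.move_to_end(vpn)  # record the use for LRU
                return self.tlb[vpn] * PAGE_SIZE + offset
            pte = self.page_table[vpn]  # TLB miss: check the PTE
            if pte.present:
                self.log.append("tlb-miss")
                self.tlb[vpn] = pte.pfn  # cache the PFN, then retry
            else:
                self.log.append("page-fault")
                self._handle_page_fault(vpn)  # bring the page in, then retry

    def _handle_page_fault(self, vpn: int) -> None:
        if not self.free_frames:  # no free frame: evict the LRU page
            victim, _ = self.resident.popitem(last=False)
            victim_pte = self.page_table[victim]
            self.free_frames.append(victim_pte.pfn)
            victim_pte.present, victim_pte.pfn = False, None
            self.tlb.pop(victim, None)  # its translation is now stale
        pfn = self.free_frames.pop(0)
        # A real OS would read the page in from swap space here.
        pte = self.page_table[vpn]
        pte.present, pte.pfn = True, pfn
        self.resident[vpn] = None


vm = ToyVM(num_pages=4)
print([vm.access(a) for a in (0x10, 0x20, 0x1005, 0x2000, 0x10)])  # [16, 32, 4101, 0, 4112]
print(vm.log.count("page-fault"))  # 4
```

The last access to `0x10` faults again because page 0 was evicted to make room for page 2.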

Process

Process is a program in execution.

When you write a program in Java and compile it, the compiler creates binary code. The original code & binary code make up the program. When you run the binary code, it becomes a process.

A process is 'active'; a program is 'passive'.

Context Switching

The process of saving the context of one process and loading the context of another process.

In other words, unloading a process from the running state to the ready state and loading another one to run in its place.

Occurs when:

- a process with higher priority than the running process enters the ready state

- an interrupt occurs

- CPU scheduling

Thread

A thread is a unit of execution within a process.

On a single processor, threads are executed one after another but give the illusion that they are executing in parallel.

Threads are not independent of each other: they share code, data and OS resources.

Lightweight: each thread has its own stack space but can access shared data.

Each thread has:

- a program counter

- a register set

- a stack space

Two types of thread:

- User level thread

- Kernel level thread

https://www.geeksforgeeks.org/threads-and-its-types-in-operating-system/
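A minimal Python sketch of threads sharing one process's data. The names are illustrative: `counter` is shared by every thread, while `local_total` lives in each thread's own stack frame. The lock is needed precisely because the data is shared. In standard CPython the global interpreter lock makes these threads interleave (concurrency) rather than run in parallel, so this shows sharing, not speedup.

```python
import threading

counter = 0              # shared: lives in the process's memory, visible to all threads
lock = threading.Lock()


def worker(n: int) -> None:
    global counter
    local_total = 0      # private: each thread has its own stack frame for worker()
    for _ in range(n):
        local_total += 1
    with lock:           # shared data must be protected against concurrent updates
        counter += local_total


threads = [threading.Thread(target=worker, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(counter)  # 40000
```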

Multithreading

A thread is also known as a lightweight process.

Parallelism is achieved by dividing a process into multiple threads.

2 types of threads

- User level thread

- Kernel level thread

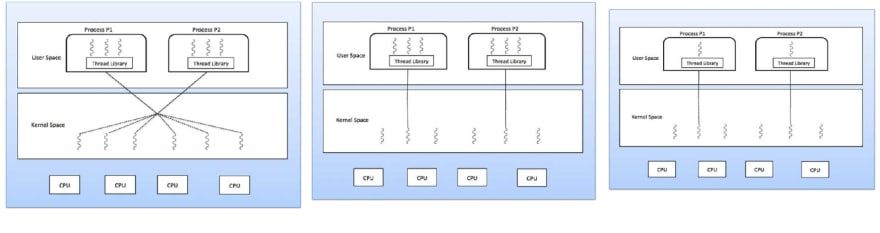

User VS Kernel level thread

user level: threads managed by the user

kernel level: threads managed by the OS, acting on the kernel (the OS core).

https://www.tutorialspoint.com/operating_system/os_multi_threading.htm

MultiThreading

concurrency

Multithreaded process on a single processor

The processor switches execution resources between threads, resulting in concurrent execution.

Concurrency means more than one thread is making progress, but the threads are not actually running simultaneously. Switching happens quickly enough that the threads may appear to run simultaneously.

Multithreaded process in a shared-memory multiprocessor environment

Each thread in the process can run on a separate processor at the same time, resulting in parallel execution (when the number of threads in the process <= the number of processors available).

concurrency: interleaving threads in time to give appearance of simultaneous execution

parallelism: genuine simultaneous execution

https://docs.oracle.com/cd/E19253-01/816-5137/mtintro-25092/index.html

MultiThreading models

Models for how a system supports user and kernel threads in combination:

- many to many model

- multiple user threads -> the same or a smaller number of kernel threads

- when a user thread blocks, another user thread can be scheduled onto another kernel thread

- many to one model

- multiple user threads -> one kernel thread

- if a user thread makes a blocking system call, the entire process blocks

- there is only one kernel thread, so only 1 user thread can access the kernel at a time -> multiple threads cannot run on multiple processors at the same time

- one to one model

- 1-1 relationship between user and kernel threads

- multiple threads can run on multiple processors

- creating a user thread requires creating the corresponding kernel thread

Process VS Threads

Threads within the same process run in a shared memory space.

Processes run in separate memory spaces.

Threads are not independent of one another the way processes are: threads share OS resources with other threads.

Like processes, threads have their own program counter (PC), register set & stack space.

Reference

Backblaze has a really comprehensive read and is where the pictures in this section are from.
