Deploying and Managing Your Machine Learning Pipeline with Terraform and Doppler

Jammie sandy

Posted on November 23, 2021

Introduction to ML pipeline

As a machine learning engineer or data scientist, repeating the same steps on every project quickly becomes tedious, and you will probably want to automate them. That is where machine learning pipelines come in. A machine learning pipeline automates your whole ML workflow by carrying out each step in sequence, from data extraction to model deployment.
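To make the idea of sequential steps concrete, here is a minimal sketch of a pipeline runner. The step functions (`extract`, `train`, `deploy`) are hypothetical stand-ins rather than part of any library, and the "model" is just an average so that the example stays small.

```python
from typing import Any, Callable, List, Tuple

# A step is a name plus a function that takes the previous step's output.
Step = Tuple[str, Callable[[Any], Any]]


def run_pipeline(steps: List[Step], data: Any) -> Any:
    """Run each step in order, feeding the output of one into the next."""
    for name, step in steps:
        print(f"running step: {name}")
        data = step(data)
    return data


# Hypothetical stand-ins for real extraction, training and deployment code.
def extract(_: Any) -> List[float]:
    return [1.0, 2.0, 3.0, 4.0]


def train(rows: List[float]) -> dict:
    # The "model" is just the mean of the data, to keep the sketch tiny.
    return {"mean": sum(rows) / len(rows)}


def deploy(model: dict) -> str:
    return f"deployed model with mean={model['mean']}"


PIPELINE: List[Step] = [("extract", extract), ("train", train), ("deploy", deploy)]

if __name__ == "__main__":
    print(run_pipeline(PIPELINE, None))
```

In a real project each step would call your own data loading, training, and deployment code, and the rest of this tutorial covers the infrastructure that the deployment step relies on.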

In this tutorial, we are going to see how to manage an ML pipeline using Terraform and Doppler. We will use Homebrew to install the required packages. You can find more information about Homebrew at https://docs.brew.sh/Homebrew-on-Linux

Brief intro to Terraform and Doppler

Infrastructure as Code (IaC) has grown rapidly on the big public cloud providers such as Google Cloud, AWS, and Azure. It means managing a group of resources the same way developers manage their application code. Terraform is one of the most popular tools developers use to automate their infrastructure. It is an open-source Infrastructure as Code tool made by HashiCorp that lets developers describe the infrastructure of a running application in a high-level configuration language called HCL (HashiCorp Configuration Language).

Doppler is a tool that helps an organization manage, sync, and organize its secret keys efficiently. Instead of sharing important keys carelessly, it is safer to let Doppler handle the sharing.

Let's assume you are building a model that predicts a person's age, using either PyTorch or TensorFlow. Once you have trained and evaluated the model, visualized the loss and accuracy, and are satisfied with the outcome, the next step is to deploy the model so that it can serve predictions to users.

If you are deploying the model as an API, and you have built the API with a framework of your choice, the next thing to consider is running the code on a cloud service so that it is accessible. For this tutorial we will use AWS (Amazon Web Services) because of its variety of services, although Terraform works with all major cloud providers.

Setting up Doppler

We want to use Doppler to manage our secret keys on AWS. To get started, go to https://www.doppler.com/register and create an account. Then, on your dashboard, create a workspace and give it a name, and create a new project. Now install the Doppler CLI with the command below.

See https://docs.doppler.com/docs/cli#installation for other operating systems

brew install dopplerhq/cli/doppler

Confirm that the installation was successful by checking the version

doppler --version

Then log in with your credentials by running the command below in your terminal

doppler login

Installing Terraform and the AWS CLI to Set Up Model Infrastructure

First, let’s install Terraform, and to do that on your machine simply go to your terminal and use the command below

brew install tfenv && tfenv install latest

Now check which version of Terraform you have installed by running the command below in your terminal. Make sure you have version 0.15 or later, because the nonsensitive function used later in this tutorial was added in 0.15.

terraform version

The next step is to install the AWS CLI. Then set up your AWS account by getting your access key ID and secret access key from the AWS IAM page and running the command below

aws configure

Now test the setup by running the command below

aws s3 ls # lists all S3 buckets in your account

Setting up an S3 Bucket on AWS

To deploy with Terraform on AWS, you can either split your code into multiple files or put it all in one single file. For this tutorial we will use one file for simplicity. Whichever method you choose, it is advisable to keep your main code in the main.tf file.

terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 3.0"
    }
  }
}

provider "aws" {
  region = "us-east-1" # you can change if you are in a different country/region
}

resource "aws_s3_bucket" "bucket" {
  bucket = "my-super-cool-tf-bucket"
  acl    = "private"

  tags = {
    Name        = "machine-learning" # tags are important for cost tracking
    Environment = "prod"
  }
}

There are three main commands for running Terraform from your terminal: terraform validate, terraform plan, and terraform apply. terraform validate checks that your configuration is syntactically valid, terraform plan shows a preview of the changes Terraform intends to make, and terraform apply runs your code and creates the resources you specified. Run terraform init once in the folder first so that Terraform can download the AWS provider. After terraform apply finishes, confirm that the bucket exists:

aws s3 ls | grep my-super-cool-tf-bucket

2021-09-05 66:66:66 my-super-cool-tf-bucket

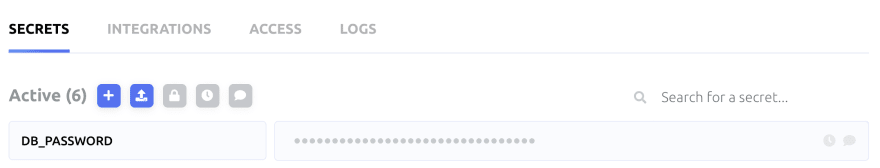
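If you would rather script the Terraform commands than type them by hand, the sketch below runs them in order from Python. It is only an illustration: the `run_terraform` helper and the `tfplan` file name are assumptions made for this example, while `terraform init`, `validate`, `plan -out=...`, and `apply <planfile>` are standard Terraform CLI commands.

```python
import subprocess
from typing import Callable, List

# Order matters: init downloads providers, validate checks the syntax,
# plan previews the changes, and apply creates the resources.
TERRAFORM_STEPS: List[List[str]] = [
    ["terraform", "init"],
    ["terraform", "validate"],
    ["terraform", "plan", "-out=tfplan"],  # save the plan to a file
    ["terraform", "apply", "tfplan"],      # apply exactly the saved plan
]


def run_terraform(
    runner: Callable[..., object] = subprocess.run, workdir: str = "."
) -> List[List[str]]:
    """Run each Terraform command in order and stop at the first failure."""
    executed = []
    for cmd in TERRAFORM_STEPS:
        # With subprocess.run, check=True raises CalledProcessError
        # when a command exits with a non-zero status.
        runner(cmd, cwd=workdir, check=True)
        executed.append(cmd)
    return executed
```

Calling `run_terraform(workdir="path/to/your/config")` would execute the four commands in that folder. The `runner` parameter exists only so the function can be exercised without Terraform installed.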

Managing secrets with Doppler

Now we want to create secrets using the doppler_secret resource in our Terraform config so that other developers on the team can easily access them. Make sure you get your service token and pass it to the code below. A service token grants read-only access to the secrets of a specific config within a project. You can get more info at https://docs.doppler.com/docs/enclave-service-tokens. To get this token, go to the project and select a config, click on the Access tab, and then click on Generate. Copy the service token right away, because it is displayed only once.

# Install the Doppler provider
terraform {
  required_providers {
    doppler = {
      source  = "DopplerHQ/doppler"
      version = "1.0.0" # Always specify the latest version
    }
  }
}

# Define a variable so we can pass in our token
variable "doppler_token" {
  type        = string
  description = "A token to authenticate with Doppler"
}

# Configure the Doppler provider with the token
provider "doppler" {
  doppler_token = var.doppler_token
}

# Generate a random password
resource "random_password" "db_password" {
  length  = 32
  special = true
}

# Save the random password to Doppler
resource "doppler_secret" "db_password" {
  project = "rocket"
  config  = "dev"
  name    = "DB_PASSWORD"
  value   = random_password.db_password.result
}

# Access the secret value
output "resource_value" {
  # nonsensitive used for demo purposes only (requires Terraform 0.15 or later)
  value = nonsensitive(doppler_secret.db_password.value)
}

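Then run the following in your terminal. Terraform will prompt for doppler_token unless you supply it another way, for example through the TF_VAR_doppler_token environment variable.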

terraform init

And after that

terraform apply

Terraform will now create a new password and save it in our Doppler config as DB_PASSWORD.
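Once the secret is stored, application code can read it from the environment. The sketch below assumes the app is started through the Doppler CLI (for example `doppler run -- python app.py`), which injects the config's secrets as environment variables. The `get_db_password` helper and the `app.py` file name are hypothetical names used only for illustration.

```python
import os
from typing import Mapping


def get_db_password(env: Mapping[str, str] = os.environ) -> str:
    """Return DB_PASSWORD from the environment, failing loudly if it is missing."""
    password = env.get("DB_PASSWORD")
    if not password:
        raise RuntimeError(
            "DB_PASSWORD is not set; start the app through `doppler run`."
        )
    return password
```

Failing early with a clear message makes a missing or misnamed secret easier to diagnose than a database connection error later on. Avoid printing the returned value, so the secret never ends up in logs.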

Deploying model using Terraform

To deploy the model we will use AWS Lambda to keep costs down. Lambda is an easy and fast way to get started, but if you expect to run a large number of predictions it is not recommended, and a more scalable service is a better fit. First, store your model's weights in the S3 bucket you created above by running

aws s3 cp model.pt s3://my-super-cool-tf-bucket

Other files the project needs, such as your tokenizer, can be uploaded the same way. Because some models are too large for Lambda's deployment package size limit, we will store the model on EFS and mount it into the function.
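As a rough check of whether your files would fit into a Lambda .zip deployment package, the sketch below compares their combined size with the documented 250 MB unzipped limit. The helper names are hypothetical, and the check ignores the size of your code and dependencies, so treat it as an estimate only.

```python
import os
from typing import Iterable

# AWS documents a 250 MB limit for the unzipped contents of a .zip package.
LAMBDA_UNZIPPED_LIMIT_BYTES = 250 * 1024 * 1024


def total_size_bytes(paths: Iterable[str]) -> int:
    """Sum the on-disk size of the given files."""
    return sum(os.path.getsize(p) for p in paths)


def needs_external_storage(
    paths: Iterable[str], limit_bytes: int = LAMBDA_UNZIPPED_LIMIT_BYTES
) -> bool:
    """Return True when the files alone already exceed the package limit."""
    return total_size_bytes(paths) > limit_bytes
```

For example, `needs_external_storage(["model.pt"])` returning True suggests the model belongs on EFS rather than inside the function package.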

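Create a file and call it providers.tf. If main.tf from the earlier step lives in the same folder, keep only one copy of the terraform and provider blocks, because Terraform rejects duplicate provider configurations within a module.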

terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 3.0"
    }
  }
}

provider "aws" {
  region = "us-east-1" # you can change if you are in a different country/region
}

The next step is to create the EFS in a file and save it as efs.tf

resource "random_pet" "vpc_name" {
  length = 2
}

module "vpc" {
  source = "terraform-aws-modules/vpc/aws"

  name = random_pet.vpc_name.id
  cidr = "10.10.0.0/16"

  azs           = ["us-east-1a"] # an availability zone, not a region
  intra_subnets = ["10.10.101.0/24"]

  tags = {
    Name        = "machine-learning" # tags are important for cost tracking
    Environment = "prod"
  }
}

resource "aws_efs_file_system" "model_efs" {}

# Mount targets do not accept tags, so none are set here
resource "aws_efs_mount_target" "model_target" {
  file_system_id  = aws_efs_file_system.model_efs.id
  subnet_id       = module.vpc.intra_subnets[0]
  security_groups = [module.vpc.default_security_group_id]
}

resource "aws_efs_access_point" "lambda_ap" {
  file_system_id = aws_efs_file_system.model_efs.id

  posix_user {
    gid = 1000
    uid = 1000
  }

  root_directory {
    path = "/lambda"

    creation_info {
      owner_gid   = 1000
      owner_uid   = 1000
      permissions = "0777"
    }
  }

  tags = {
    Name        = "machine-learning" # tags are important for cost tracking
    Environment = "prod"
  }
}

Run terraform apply to create the VPC and EFS resources on AWS.

Next, we need a DataSync task that copies the model from the S3 bucket to the EFS volume. Save it in a file called datasync.tf

resource "aws_datasync_location_s3" "s3_loc" {
  # Replace with the ARN of the bucket you created earlier,
  # for example "arn:aws:s3:::my-super-cool-tf-bucket"
  s3_bucket_arn = "arn"
  # Note: the AWS provider also expects a subdirectory and an s3_config block
  # (with an IAM role ARN) here; they are omitted in this tutorial
}

resource "aws_datasync_location_efs" "efs_loc" {
  efs_file_system_arn = aws_efs_mount_target.model_target.file_system_arn

  ec2_config {
    # DataSync expects security group ARNs here, not IDs; adjust if apply rejects this value
    security_group_arns = [module.vpc.default_security_group_id]
    subnet_arn          = module.vpc.intra_subnet_arns[0]
  }
}

resource "aws_datasync_task" "model_sync" {
  name = "named-entity-model-sync-job"

  # Copy from the S3 location to the EFS location
  source_location_arn      = aws_datasync_location_s3.s3_loc.arn
  destination_location_arn = aws_datasync_location_efs.efs_loc.arn

  options {
    bytes_per_second = -1
  }

  tags = {
    Name        = "machine-learning" # tags are important for cost tracking
    Environment = "prod"
  }
}

Now let's create the Lambda function that will run the predictions. Make sure your requirements are installed and any other files needed for prediction are available, then create a lambda.tf file. Your code needs to follow the Lambda handler signature and load the model. The BERT Base pretrained model is one option for trying this out.

def predict(event, ctx):
    ...
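The handler body depends on your model, but a minimal sketch of what a model.py file might contain looks like the following. Several things here are assumptions rather than part of the original code: the `model.pt` file name under the EFS mount path, the `MODEL_PATH` environment variable, the API Gateway style event with a JSON `body`, and the placeholder `load_model`, which you would swap for your framework's real loading call.

```python
import json
import os
from functools import lru_cache

# Matches file_system_local_mount_path in lambda.tf; the file name is an assumption.
MODEL_PATH = os.environ.get("MODEL_PATH", "/mnt/shared-storage/model.pt")


@lru_cache(maxsize=1)
def load_model(path: str = MODEL_PATH):
    """Load the model once per container so warm invocations reuse it.

    Placeholder only: replace the body with your framework's loader.
    """
    def model(text: str) -> dict:
        # Stand-in "prediction" so the sketch runs without an ML framework.
        return {"text": text, "length": len(text)}

    return model


def predict(event, ctx):
    """Lambda entry point, referenced as "model.predict" in lambda.tf."""
    body = event.get("body") or "{}"
    payload = json.loads(body) if isinstance(body, str) else body
    text = payload.get("text")
    if text is None:
        return {"statusCode": 400, "body": json.dumps({"error": "missing 'text'"})}
    result = load_model()(text)
    return {"statusCode": 200, "body": json.dumps(result)}
```

Caching the loaded model matters on Lambda because reading a large file from EFS on every invocation would add noticeable latency.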

To deploy your model, place all the files in the same folder. The lambda.tf file looks like this:

resource "random_pet" "lambda_name" {
  length = 2
}

module "lambda" {
  source = "terraform-aws-modules/lambda/aws"

  function_name = random_pet.lambda_name.id
  description   = "Named Entity Recognition Model"
  handler       = "model.predict"
  runtime       = "python3.8"
  source_path   = "${path.module}"

  vpc_subnet_ids         = module.vpc.intra_subnets
  vpc_security_group_ids = [module.vpc.default_security_group_id]
  attach_network_policy  = true

  # Reference the access point defined in efs.tf
  file_system_arn              = aws_efs_access_point.lambda_ap.arn
  file_system_local_mount_path = "/mnt/shared-storage"

  tags = {
    Name        = "machine-learning" # tags are important for cost tracking
    Environment = "prod"
  }

  depends_on = [aws_efs_mount_target.model_target]
}

Now run everything from your terminal by typing terraform apply

And that is how simple it is to deploy using Terraform on AWS. When you are done, use the command below in your terminal to clean up and remove all resources

terraform destroy

Conclusion

This tutorial should give you a head start on deploying your model with Terraform on AWS. There are other great ways to deploy your models, and you can also use Doppler for team collaboration. The code for this tutorial can be found in this GitHub repo
