Ismail Egilmez

Posted on September 12, 2022

Cloud-native applications provide benefits such as rapid deployment, scalability, and reduced costs. The downside is that testing, debugging, and troubleshooting these apps are more difficult than they are for traditional applications.

In recent years, software testing has matured from manual testing to automated testing. Many testing tools, strategies, and patterns are available, and they play an important role in keeping faults out of cloud-native applications. In this article, we explain the basics of testing cloud-native applications.

Here is a quick overview of what this article covers:

Why do we test applications?

Performance

Operativeness

Resilience

What Are the Testing Concepts?

TDD (Test-Driven Development)

BDD (Behavior-Driven Development)

What Are the Testing Patterns?

A/B Testing

Test Doubles

What Are the Testing Types?

Unit Testing

Integration Testing

Load Testing

Regression Testing

Testing Never Ends

Why do we test applications?

In traditional software development, testing happens after developers finish the code and hand it over to test engineers. The test engineers then run their tests and, if everything works well, the code is deployed to production.

Errors can emerge at any phase of a software project's development life cycle, and while there are many types of software bugs, only a few are known to be irreparable. It is therefore crucial to test software so that bugs are caught before they reach critical environments such as production.

Quality assurance plays a big role in minimizing the chance that production code contains functional or design errors. In the cloud-native era, testing has become an integral part of the development cycle and is the main way software quality is assured. Although testing has a cost, it is well worth it, because production failures cost far more and have a much bigger impact.

Any development team that owns an application needs to ensure service quality in terms of the following aspects:

- Performance

- Operativeness

- Resilience

These are the bare minimum requirements to ensure software quality. Depending on the requirements of your business logic or your market-specific requirements, you might want to consider testing for security, availability, usability, UX/UI, etc.

Performance

The performance of your application as perceived by the end user is affected by complex combinations of environmental effects, access patterns, and, most importantly, the data being processed. Performance is a vital quality aspect, yet many software teams test for it far less than they could.

Many test concepts, patterns, and types can improve the performance of cloud-native applications, but it's crucial to build and follow a testing strategy that aligns with the development life cycle. Benchmark testing, for example, is one of the most common and economical ways to collect performance data about application code.
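As a minimal sketch of benchmark testing, the snippet below uses Python's standard `timeit` module to time two implementations of the same operation. The functions `join_concat` and `join_builtin` are hypothetical examples chosen for illustration; the pattern (run many calls, repeat several times, keep the best average) is the part that carries over.

```python
import timeit


def join_concat(parts):
    # Hypothetical implementation A: repeated string concatenation.
    out = ""
    for p in parts:
        out += p
    return out


def join_builtin(parts):
    # Hypothetical implementation B: str.join.
    return "".join(parts)


def benchmark(func, parts, number=1_000, repeat=5):
    """Return the best average time per call, in seconds.

    Each of the `repeat` runs executes `func` `number` times. Taking the
    minimum run reduces noise from other processes on the machine.
    """
    timer = timeit.Timer(lambda: func(parts))
    runs = timer.repeat(repeat=repeat, number=number)
    return min(runs) / number


if __name__ == "__main__":
    data = ["x"] * 1_000
    for fn in (join_concat, join_builtin):
        print(f"{fn.__name__}: {benchmark(fn, data) * 1e6:.2f} us per call")
```

Absolute numbers depend on the machine, so benchmarks like this are most useful when their results are tracked over time (for example, in CI), where a slowdown shows up as a change relative to earlier runs.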

If you don’t test your application in production, then you can’t be sure that performance regressions won’t occur. Even if you heavily invest in testing in pre-production environments, you should still expand testing practices to the entire software life cycle to ensure quality with less cost and effort.

For instance, using debugging and performance management tooling in production is an excellent way to detect and understand issues in distributed cloud applications.

Operativeness

Running unit tests and integration tests helps you ensure that your service behaves the way you want it to, without defects. In these tests, we generally define a set of expected responses from the service to confirm that it operates correctly. Unit and integration tests aren't the only options, though: mutation tests, fuzz tests, and many others can be used to check the correctness of application behavior more broadly.
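Fuzz testing feeds large numbers of random or malformed inputs to code and checks that it never breaks its contract. The sketch below assumes a hypothetical `parse_port` function and uses a hand-rolled random-input loop built on Python's `random` module; dedicated fuzzing tools explore inputs far more thoroughly, so treat this only as an illustration of the idea.

```python
import random
import string


def parse_port(text):
    """Hypothetical function under test: parse a TCP port number."""
    value = int(text.strip())  # raises ValueError for non-numeric input
    if not 1 <= value <= 65535:
        raise ValueError(f"port out of range: {value}")
    return value


def fuzz_parse_port(iterations=10_000, seed=42):
    """Feed random strings to parse_port and check its contract.

    Contract: return an int in 1..65535 or raise ValueError. Any other
    exception, or an out-of-range return value, counts as a bug.
    """
    rng = random.Random(seed)  # fixed seed so any failure is reproducible
    alphabet = string.digits + string.ascii_letters + " -+_."
    for _ in range(iterations):
        text = "".join(rng.choice(alphabet) for _ in range(rng.randint(0, 8)))
        try:
            port = parse_port(text)
        except ValueError:
            continue  # rejecting malformed input is acceptable
        assert isinstance(port, int) and 1 <= port <= 65535, (text, port)
    return iterations
```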

As mentioned before, you should extend your testing strategy to production to make the operativeness and accuracy of your service as good as they can be. You should also be able to receive and act on error feedback quickly, with functional debugging and troubleshooting capabilities. Even so, there is no guarantee that an application running in the cloud will behave flawlessly in production, so your end users will ultimately be your best testers.

Resilience

Before the cloud-native era, testing for robustness and resilience was not a priority: monolithic applications mostly had fewer dependencies, and the types of errors they could encounter were well known. Microservice architectures in the cloud era, however, introduced many more dependencies between services, which makes applications more vulnerable and fragile. As a result, if your application is not thoroughly tested for resilience, your end users are likely to be affected.

With a cloud-native microservice application, you need to carefully manage the interactions between your service and its dependencies. Dependencies can return errors or respond slowly, and either one can degrade the end user's experience.

Having testing procedures and standards in place is imperative to make sure the service stays resilient even when its dependencies fail. For example, automated tests that simulate known failures should run frequently in pre-production environments. For errors and failures that cannot be simulated in pre-production, adopting chaos engineering practices lets you test for them in production.
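A minimal sketch of an automated test that simulates a known dependency failure. The hypothetical `get_recommendations` function calls a dependency, retries a bounded number of times, and falls back to a default list when the dependency keeps failing. It assumes the dependency is injected as a plain callable, which is what lets the test swap in one that always fails.

```python
DEFAULT_RECOMMENDATIONS = ["bestsellers"]


class DependencyError(Exception):
    """Raised by a dependency that is down or timing out."""


def get_recommendations(user_id, fetch, retries=2):
    """Call the hypothetical `fetch` dependency with retries and a fallback.

    `fetch(user_id)` returns a list or raises DependencyError. After
    1 + `retries` failed attempts, degrade gracefully instead of
    propagating the error to the end user.
    """
    for _ in range(1 + retries):
        try:
            return fetch(user_id)
        except DependencyError:
            continue
    return list(DEFAULT_RECOMMENDATIONS)


def test_falls_back_when_dependency_is_down():
    calls = []

    def broken_fetch(user_id):
        calls.append(user_id)
        raise DependencyError("simulated outage")

    assert get_recommendations("u1", broken_fetch) == DEFAULT_RECOMMENDATIONS
    assert len(calls) == 3  # one attempt plus two retries


def test_recovers_after_transient_failure():
    outcomes = iter([DependencyError("blip"), ["item-42"]])

    def flaky_fetch(user_id):
        outcome = next(outcomes)
        if isinstance(outcome, Exception):
            raise outcome
        return outcome

    assert get_recommendations("u1", flaky_fetch) == ["item-42"]
```

A production version would usually add timeouts and backoff between retries; they are left out here to keep the failure injection easy to follow.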

What Are the Testing Concepts?

TDD: Test-Driven Development

The idea of test-driven development stands in opposition to Glenford Myers' ’70s publication “Software Reliability,” which asserted the “axiom” that “a developer should never test their own code.” Test-driven development (TDD) is a style of programming built around a three-step cycle: coding, testing, and design. Testing in TDD consists mainly of writing unit tests, while design consists of the refactoring activities that improve the code once the tests pass.

A TDD project normally begins by defining and understanding the requirements of the problem to be solved. Next, we write test cases that express those requirements; at first they fail, because the code they exercise does not exist yet. Finally, we write the code that makes the tests pass. This cycle repeats, test by test, until the required business functionality is fully implemented.

TDD is also called “Test-First Development,” because the developer writes and runs automated tests before writing the application's actual code.

The image above shows the difference between the waterfall and TDD model processes.
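The TDD cycle can be illustrated with a small, hypothetical requirement: "a slug is the lowercased title with its words joined by single hyphens." The tests are written first and fail (the "red" step) because `slugify` does not exist yet; then the simplest implementation that makes them pass is added. The example uses Python's built-in `unittest`, and all names are illustrative.

```python
import unittest


# Step 1 (red): write tests from the requirement before any code exists.
class SlugifyTest(unittest.TestCase):
    def test_lowercases_and_hyphenates(self):
        self.assertEqual(slugify("Testing Never Ends"), "testing-never-ends")

    def test_collapses_repeated_whitespace(self):
        # Added in a later iteration, once the first test was green.
        self.assertEqual(slugify("  Cloud   Native "), "cloud-native")


# Step 2 (green): the simplest code that makes the tests pass.
# Step 3 (refactor): tidy the code while keeping every test passing.
def slugify(title):
    """Lowercase the title and join its words with single hyphens."""
    return "-".join(title.lower().split())
```

Running the tests with `python -m unittest` before `slugify` is defined fails with a `NameError`, which is the expected red step; once the function is added, both tests pass.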

The benefits of TDD are:

- Test coverage increases. Almost every line of code is exercised by at least one test.

- Errors are localized with unit tests, increasing the trustworthiness of the code.

- Tests serve as documentation describing the way the code works. Developers can understand how the code works in a short period of time by analyzing these tests.

- The need to debug code is minimized.

- Development follows a design-first point of view.

- Over-engineering is more easily avoided.

BDD: Behavior-Driven Development

Behavior-driven development (BDD) is an offshoot of test-driven development that draws on agile development principles and user stories. It presents development as a set of scenarios, each describing how the application should behave in a certain situation.

It reduces the chance of mistakes and brings more clarity to development, because scenarios, actions, and conditions are expressed in simple, common terminology shared by the software development and business teams.

In other words, BDD enhances communication and collaboration between product management and software development teams as it helps to describe requirements in the form of scenarios, making it easier for all parties involved to stay on the same page.
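BDD scenarios are usually written in a Given/When/Then form. Tools such as Cucumber or behave parse plain-language scenario files, but the same structure can be sketched directly inside a test. The shopping-cart scenario and the `Cart` class below are hypothetical, chosen only to show how a business-readable scenario maps onto test steps.

```python
class Cart:
    """Hypothetical domain object used by the scenario."""

    def __init__(self):
        self.items = {}

    def add(self, sku, quantity=1):
        if quantity < 1:
            raise ValueError("quantity must be positive")
        self.items[sku] = self.items.get(sku, 0) + quantity

    def total_quantity(self):
        return sum(self.items.values())


def test_scenario_adding_the_same_item_twice():
    """
    Scenario: Customer adds the same item twice
      Given an empty cart
      When the customer adds 1 "coffee"
      And the customer adds 2 "coffee"
      Then the cart holds 3 items
      And "coffee" appears only once in the cart
    """
    # Given
    cart = Cart()
    # When
    cart.add("coffee", 1)
    cart.add("coffee", 2)
    # Then
    assert cart.total_quantity() == 3
    assert list(cart.items) == ["coffee"]
```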

What Are the Testing Patterns?

A few testing patterns for cloud applications make it easier to meet quality standards.

A/B Testing

A/B testing, also known as split testing, was originally intended to discover how users respond to two different web pages that have the same functionality. Essentially, a small group of users is selected to see version A of the web page and another small group is selected to see version B. If users respond more favorably to one version, that version is the one taken forward.

This approach can be applied to the introduction of new features in an application in a phased way. A new service or feature is introduced to a selected set of users and their responses are measured. The results are compared to the responses of the other selected groups who used the original version of the application.
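For the comparison to be fair, each user should consistently see the same version. A common way to achieve that is to hash a stable user identifier into buckets. The sketch below is a minimal illustration; the function name `assign_variant`, the experiment name, and the default 50% split are assumptions, and real experimentation platforms add targeting, exposure logging, and statistical analysis on top.

```python
import hashlib


def assign_variant(user_id, experiment, percent_b=50):
    """Deterministically assign a user to variant "A" or "B".

    Hashing experiment + user_id gives each user a stable bucket in
    0..99, so the same user always sees the same version, and different
    experiments split users independently of each other.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % 100
    return "B" if bucket < percent_b else "A"


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(10_000)]
    share_b = sum(assign_variant(u, "new-checkout") == "B" for u in users) / len(users)
    print(f"share of users in B: {share_b:.1%}")  # close to 50%, not exact
```

Setting `percent_b` to a small value, such as 5, exposes a new feature to a small group first, which matches the phased introduction described above.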

Test Doubles

Often, the behavior of the code under test depends on its interactions with other components of the application, which may be built by other developers. A test double replaces the actual component and mimics its behavior. Unlike the real dependency, which may be an external third-party service, a test double is typically a lightweight stand-in that the development team fully controls.

There are many types of test doubles, such as dummies, fakes, test stubs, and mocks.

Test doubles help avoid several problems that come with using real dependencies in tests:

- The real dependencies may not be available to provide the data that tests need for different cases.

- The functionality of the real dependencies may not yet be ready for testing at the time of development.

- Using the actual dependencies during tests may slow the tests down.

Test Stubs

A test stub holds predefined data and uses it to answer the calls it receives during tests. When we don't want to use real dependencies in our tests (or simply can't), we use test stubs to avoid the side effects of calling them.

Test stubs are useful when a dependency's response alters the system's behavior during tests. For example, if our service takes input from an external service, that input determines our service's response. A test stub lets us mimic the various inputs that change the behavior of the service under test.
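A minimal sketch of a test stub, assuming a hypothetical `shipping_quote` function whose answer depends on an external exchange-rate service. The stub returns canned rates, so each test can force a specific scenario (including the dependency having no rate at all) without calling the real service.

```python
class ExchangeRateStub:
    """Test stub: answers every call with predefined data."""

    def __init__(self, rates):
        self._rates = rates  # e.g. {"EUR": 0.5}

    def rate(self, currency):
        return self._rates.get(currency)


def shipping_quote(amount_usd, currency, rates):
    """Hypothetical service logic whose behavior depends on the dependency."""
    rate = rates.rate(currency)
    if rate is None:
        return {"status": "unsupported_currency"}
    return {"status": "ok", "amount": round(amount_usd * rate, 2)}


def test_converts_with_stubbed_rate():
    stub = ExchangeRateStub({"EUR": 0.5})
    assert shipping_quote(10.0, "EUR", stub) == {"status": "ok", "amount": 5.0}


def test_handles_missing_rate():
    stub = ExchangeRateStub({})
    assert shipping_quote(10.0, "JPY", stub) == {"status": "unsupported_currency"}
```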

Mock Objects

Mock objects, on the other hand, record the calls they receive so that we can inspect them afterwards. Once the test has run, we check whether all the expected interactions took place.

We use mocks when there is no easy way to verify the outcome of our code through a return value or the system's state, for example when the code only triggers an action in another component, or when we simply cannot invoke the real production dependency in tests.

As an example, take the SMS sending service in an incident management tool like OpsGenie. You don't want to send real SMS messages every time you test your code, and it's not always possible or easy to verify that the correct SMS was delivered. What a test can verify is the behavior of your own code. To do that, you can replace the SMS sending service with a mock in tests and check that it was called with the expected message.
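Following the SMS example, the sketch below uses Python's `unittest.mock.Mock` as a stand-in for the SMS sender. The `notify_on_call` function and the `send_sms(to=..., body=...)` interface are hypothetical and are not OpsGenie's actual API; the point is that the test checks the recorded call instead of a return value.

```python
from unittest.mock import Mock


def notify_on_call(alert, on_call_phone, sms_sender):
    """Hypothetical alerting logic: text the on-call engineer for P1 alerts."""
    if alert["priority"] == "P1":
        sms_sender.send_sms(to=on_call_phone, body=f"[P1] {alert['title']}")


def test_p1_alert_sends_one_sms():
    sms_sender = Mock()  # records every call instead of sending anything
    notify_on_call({"priority": "P1", "title": "DB down"}, "+15550100", sms_sender)
    # Verify the interaction: exactly one SMS with the expected content.
    sms_sender.send_sms.assert_called_once_with(to="+15550100", body="[P1] DB down")


def test_low_priority_alert_sends_nothing():
    sms_sender = Mock()
    notify_on_call({"priority": "P4", "title": "Disk 70%"}, "+15550100", sms_sender)
    sms_sender.send_sms.assert_not_called()
```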

Fakes

Fakes are working objects with limited capabilities: they behave like the real object but use a simplified implementation instead of the production code. We generally use fakes to simplify test setup without changing the system's behavior.

A typical example of a fake is an in-memory database. It's a lightweight database that isn't used in production but is perfectly adequate as a database in a test environment.
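As a sketch of that idea, Python's built-in `sqlite3` module can open a database that lives entirely in memory via the special name `":memory:"`. The `UserRepository` class is hypothetical and is written against the `sqlite3` connection interface; it assumes the connection is passed in from outside, so a test can hand it the in-memory database. If production uses a different database engine, the SQL dialects will differ in places, which is the usual trade-off of this kind of fake.

```python
import sqlite3


class UserRepository:
    """Hypothetical data-access class that receives its connection from outside."""

    def __init__(self, conn):
        self.conn = conn
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS users (id INTEGER PRIMARY KEY, email TEXT UNIQUE)"
        )

    def add(self, email):
        cur = self.conn.execute("INSERT INTO users (email) VALUES (?)", (email,))
        self.conn.commit()
        return cur.lastrowid

    def find_by_email(self, email):
        row = self.conn.execute(
            "SELECT id, email FROM users WHERE email = ?", (email,)
        ).fetchone()
        return None if row is None else {"id": row[0], "email": row[1]}


def test_repository_with_in_memory_fake():
    conn = sqlite3.connect(":memory:")  # exists only while this connection is open
    repo = UserRepository(conn)
    user_id = repo.add("dev@example.com")
    assert repo.find_by_email("dev@example.com") == {"id": user_id, "email": "dev@example.com"}
    assert repo.find_by_email("nobody@example.com") is None
    conn.close()
```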

What Are the Testing Types?

Software testing types were known and applied long before cloud computing became popular. CI/CD pipelines, used in DevOps and Agile methodologies, made it possible to automate these testing types so that they run on every build or whenever a check is required.

Unit Testing

Unit tests target individual units and functions of an application's code. In cloud-native applications, the essential purpose of unit tests is to test the smallest possible parts of the application. With this testing type, you can isolate the dependencies of the tested service using mock objects or test stubs, so the test focuses solely on the code under test.

Unit testing aims to test each class or code component to make sure it performs as expected. If you work in Java, JUnit is one of the most popular testing frameworks.
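For comparison with JUnit, the sketch below uses Python's built-in `unittest`, an xUnit-style framework originally modeled on JUnit. It tests one small, hypothetical unit (`apply_discount`) in isolation, with no network or database involved.

```python
import unittest


def apply_discount(price_cents, percent):
    """Hypothetical unit under test: discounted price, rounded down to whole cents."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price_cents * (100 - percent) // 100


class ApplyDiscountTest(unittest.TestCase):
    def test_regular_discount(self):
        self.assertEqual(apply_discount(1000, 15), 850)

    def test_rounds_down_to_whole_cents(self):
        self.assertEqual(apply_discount(999, 10), 899)  # 899.1 -> 899

    def test_rejects_invalid_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(1000, 120)
```

Saved to a file, the tests can be run with `python -m unittest`.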

Integration Testing

The purpose of integration testing is to check whether the components of your application, such as different services, work correctly together. Microservice cloud applications consist of many software modules written by different developers, and this level of testing aims to expose defects in the interactions between those modules once they are integrated. Because it focuses on how well data is communicated between modules, integration testing is sometimes called “string testing” or “thread testing.”

Although each piece of code is unit tested, errors can still exist for multiple reasons. These include:

- Different developers contributing to the project may apply different programming logic, so their modules need to be verified to work well together.

- Exception handling is not always done correctly.

- Database connections of the software modules may be faulty.

- The project's requirements may change while development is in progress.
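A minimal sketch of an integration test between two hypothetical modules: a tiny "price service" served over HTTP with Python's standard `http.server`, and an "order" module that calls it with `urllib`. Unlike the unit-level examples, nothing is replaced by a test double. The test starts the real server on a free local port and exercises the actual request/response path, so mismatches in URLs, status codes, or payload format would surface here.

```python
import json
import threading
import unittest
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class PriceHandler(BaseHTTPRequestHandler):
    """Hypothetical price service: GET /price/<sku> returns JSON."""

    PRICES = {"sku-1": 250, "sku-2": 100}

    def do_GET(self):
        sku = self.path.rsplit("/", 1)[-1]
        if sku not in self.PRICES:
            self.send_response(404)
            self.end_headers()
            return
        body = json.dumps({"sku": sku, "cents": self.PRICES[sku]}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep test output quiet


def order_total(base_url, skus):
    """Hypothetical order module: sums prices fetched over HTTP."""
    total = 0
    for sku in skus:
        with urllib.request.urlopen(f"{base_url}/price/{sku}", timeout=5) as resp:
            total += json.load(resp)["cents"]
    return total


class OrderPriceIntegrationTest(unittest.TestCase):
    @classmethod
    def setUpClass(cls):
        # Port 0 lets the OS pick any free port.
        cls.server = HTTPServer(("127.0.0.1", 0), PriceHandler)
        cls.base_url = f"http://127.0.0.1:{cls.server.server_port}"
        threading.Thread(target=cls.server.serve_forever, daemon=True).start()

    @classmethod
    def tearDownClass(cls):
        cls.server.shutdown()
        cls.server.server_close()

    def test_total_uses_real_http_calls(self):
        self.assertEqual(order_total(self.base_url, ["sku-1", "sku-2"]), 350)

    def test_unknown_sku_surfaces_as_http_error(self):
        with self.assertRaises(urllib.error.HTTPError):
            order_total(self.base_url, ["missing"])
```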

Load Testing

Load testing is the process of simulating demand on your system over a specific period of time and then measuring the effect of that load on the system's performance (for example, error rates and response times). It is closely related to performance testing and stress testing, and the terms are sometimes used interchangeably; all of them are forms of non-functional testing.

Load testing is a crucial part of cloud-native application development and DevOps, because it lets application teams build and deploy their applications with confidence in both pre-production and production environments. The business-critical parts of your application must be able to scale to meet demand, and without load testing, your end users may be affected whenever demand spikes.
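A minimal load-test sketch using only the standard library: it sends a fixed number of requests to a target through a pool of concurrent workers and reports the error rate and latency percentiles, the measurements mentioned above. The target here, `fake_handler`, is a hypothetical in-process function with simulated latency and occasional failures; in practice you would point a dedicated load-testing tool at a deployed environment instead.

```python
import random
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def timed_call(target):
    """Run one request; return (latency_seconds, succeeded)."""
    start = time.perf_counter()
    try:
        target()
        ok = True
    except Exception:
        ok = False  # any exception counts as a failed request
    return time.perf_counter() - start, ok


def run_load_test(target, total_requests=500, concurrency=20):
    """Send `total_requests` calls using `concurrency` parallel workers."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(lambda _: timed_call(target), range(total_requests)))
    latencies = [lat for lat, _ in results]
    errors = sum(1 for _, ok in results if not ok)
    # 99 cut points; index 49 approximates p50 and index 94 approximates p95.
    cuts = statistics.quantiles(latencies, n=100, method="inclusive")
    return {
        "requests": total_requests,
        "error_rate": errors / total_requests,
        "p50_ms": cuts[49] * 1000,
        "p95_ms": cuts[94] * 1000,
    }


def fake_handler():
    """Hypothetical target: ~5 ms of simulated work, ~2% simulated failures."""
    time.sleep(0.005)
    if random.random() < 0.02:
        raise RuntimeError("simulated HTTP 500")


if __name__ == "__main__":
    print(run_load_test(fake_handler))
```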

Regression Testing

The existing functionality of applications should not break when new functionality is introduced. Regression testing ensures that a code change has not negatively affected an application's existing features. Simply put, regression testing is a full or partial selection of previously executed test cases (functional or non-functional) that are re-executed to confirm that existing functionality still works. When previously working functionality stops working after a change, that is called a regression.

Whenever we fix a bug, change a configuration, or refactor or enhance code, we may need to run the regression tests.
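One common practice is to turn every fixed bug into a permanent test, so the regression suite grows with each fix and reruns on every change. The sketch below assumes a hypothetical bug report in which `normalize_email` failed to strip surrounding whitespace; the function and the bug are illustrative, not taken from a real project.

```python
def normalize_email(raw):
    """Hypothetical function: canonical form used for login lookups.

    The fix for the (hypothetical) bug added .strip(); before it,
    " Dev@Example.com " was stored with spaces and logins failed.
    """
    return raw.strip().lower()


# An existing test for behavior that already worked...
def test_lowercases_address():
    assert normalize_email("Dev@Example.com") == "dev@example.com"


# ...plus a regression test pinned to the fixed bug. It stays in the suite
# so a later refactor cannot silently reintroduce the old behavior.
def test_regression_strips_surrounding_whitespace():
    assert normalize_email("  Dev@Example.com\n") == "dev@example.com"
```

In a CI pipeline, a runner such as pytest collects both tests automatically because their names start with `test_`; running only the tests related to the changed code corresponds to the "partial selection" described above.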

Testing Never Ends

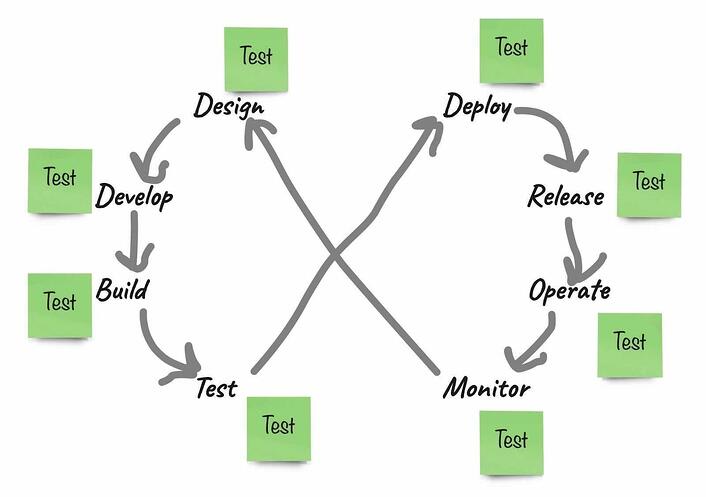

The DevOps software development cycle has evolved to include tests at all phases of development. Testing never ends, and never should, in order to keep end-users happy.

Back in the monolith age and at the beginning of the cloud age, developers used to finish development and then hand the application over to quality assurance engineers before deployment. Now that cloud applications are split into many small services and have become more complex, distributed, and asynchronous, that old testing routine has changed for good. Deployments are also far more frequent than before and are automated through CI/CD pipelines.

Meanwhile, monitoring and observability are widely used in production environments, but most observability solutions leave pre-production environments out in the cold. To ease the load on developers, observability should apply to software tests for cloud applications by default.

You run unit tests every time before you push your code, and CI runs integration tests automatically. You test continuously for errors so that your service reaches end users without faults.

Testing helps us build more reliable, resilient, well-performing, and maintainable applications – if we have the ability to troubleshoot our tests quickly via observability. Instrumentation that enables us to troubleshoot our tests in CI environments is a valuable asset to have.

In large projects, tens of thousands of tests can run automatically in a CI pipeline before a change reaches production, and keeping track of them is not as easy as it sounds. Foresight is a product that addresses visibility problems in CI environments.

Foresight is a comprehensive solution that provides insights and analytics for both CI/CD pipelines and tests. It also automatically assesses the risk level of software changes and suggests how to optimize and prioritize automated tests in CI pipelines.

Explore how Foresight works with your projects now.

Save the date! 🕊️

On the 28th of September, we are launching Foresight on Product Hunt 🎉🍻

Take your seat before we launch on Product Hunt 💜
