AI Fundamentals Series: Algorithms

Infinite Waves

Posted on March 11, 2021

Continuing on from the first instalment in the AI Fundamentals Series I want to provide some basic definitions of terms I have, and will continue, to use. In the spirit of trying to assume no prior knowledge, the aim is to keep everything as simple as possible to pave the way for more in-depth understanding at a later point.

Algorithm

In computer science a broad definition is:

"Any well-defined computational procedure that takes some value, or set of values, as input and produces some value, or set of values, as output. An algorithm is thus a sequence of computational steps that transform the input into the output.

We can also view an algorithm as a tool for solving a well-specified computational problem. The statement of the problem specifies in general terms the desired input/output relationship. The algorithm describes a specific computational procedure for achieving that input/output relationship."

(Introduction to Algorithms, Second Edition)

In this way we can think of an algorithm, metaphorically, as a list of directions that takes you from your start point to your destination if you had to go on a journey. A list of steps. But it also defines the relationship between the input and the output. A set of instructions, to put it another way. A recipe is often given as a common example of an algorithm.

Artificial Intelligence Algorithms

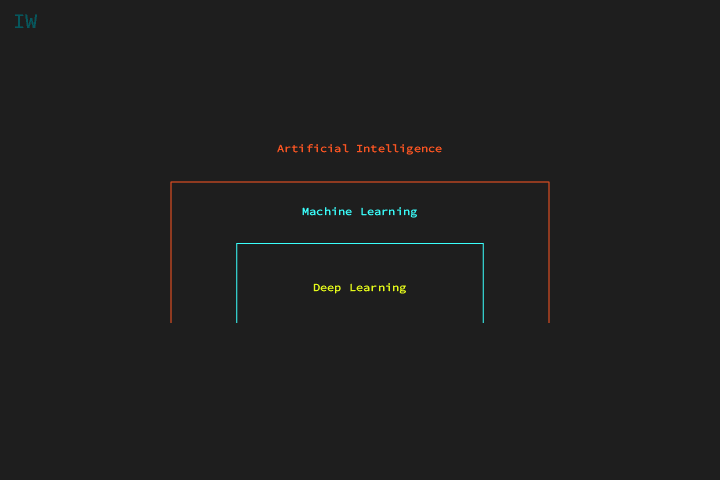

Artificial Intelligence is the broadest definition of what we are trying to do when augmenting human intelligence via computers. Under this umbrella there are subsets. Machine Learning (ML) is a subset of AI, and Deep Learning is a subset of Machine Learning. Although AI and ML are often used interchangebly.

Machine Learning:

"The study of computer algorithms that improve automatically through experience and by the use of data."

Deep Learning:

"Is representation learning: the automated formation of useful representations from data."

All Deep Learning is Machine Learning, and all Machine Learning is AI.

There are different techniques and algorithms at the different layers, and this categorization is not exhaustive. In the next issue of this AI Fundamentals series these topics, definitions, and their utilities will be broken down further.

The progression of AI algorithms

The AI algorithms that are used today are more sophisticated than was used in the 1970s and 1980s. The algorithms were mostly hand-coded in what is referred to as Good Old Fashioned AI (GOFAI), as Nick Bostrum points out in his book 'Superintelligence'. Whilst effective in very narrow domains (playing chess for example) they struggled to map onto other problems.

They were also fragile and would not return expected results if the programmers made even slight errors in their assumptions (P.9, Bostrum).

"One reason for this is the 'combinatorial explosion' of possibilities that must be explored by methods that rely on something like exhaustive search."

(P. 7, Bostrum)

Failures occurred when complexity increased beyond simple instances. Now, with increased compute power, we are able to implement algorithms that, effectively, write themselves. The initial algorithm is programmed but then it updates istself as it learns from data.

In some sense the first wave of AI, classical AI, worked by identifying a problem, a possible solution, and implementing the solution, a program that plays chess [for example]. Right now we are in the second wave of AI. So instead of writing the algorithm that implements the solution, we write an algorithm that automatically searches for an algorithm that implements the solution. So the learning system in some sense is an algorithm that iself discovers the algorithm that solves the problem.

Today some Machine Learning algorithms behave more like the brain in the form of 'Artificial Neural Networks' (ANNs), artificial 'neurons', links in a chain, that can be more finely tuned or produce degrees of inaccuracy and still function, rather than a full-system crash.

This is, after all, one of the aims of AI. To reproduce, synthetically, what the brain can do, what we can do.

If you're not already signed up subscribe by clicking the button below to get the next issue in the AI Fundamentals series:

Sources:

Introduction to Algorithms, Second Edition. Thomas H. Cormen Charles E. Leiserson Ronald L. Rives.

MIT Deep Learning Basics: Introduction and Overview with TensorFlow

Superintelligence: Paths, Dangers, Strategies

Joscha Bach: Artificial Consciousness and the Nature of Reality | Lex Fridman Podcast

Posted on March 11, 2021

Join Our Newsletter. No Spam, Only the good stuff.

Sign up to receive the latest update from our blog.