DALL-E vs. Midjourney AI vs. Stable Diffusion: A Comparison of AI Models that Can Generate Images from Text

JerryMaoMao

Posted on May 12, 2023

Artificial intelligence (AI) is one of the most exciting and impactful fields of technology today. AI is enabling new applications and possibilities across various domains and industries, such as healthcare, education, entertainment, and more. AI is also advancing the frontiers of human creativity and expression, by allowing us to generate novel and realistic content from data or prompts.

One of the most fascinating and challenging tasks in AI is text-to-image generation, the task of creating an image from a written description. It builds on the same language-understanding techniques used for summarizing information, writing captions, and generating headlines, but the output is a picture rather than text. Imagine being able to create any image you can think of, just by typing in a few words. For example, you could type in “a cat wearing a hat” and get an image of a cat wearing a hat.

This may sound like science fiction, but it is actually possible thanks to three groundbreaking AI models: DALL-E, Midjourney AI and Stable Diffusion. These models can generate realistic and diverse images from text descriptions, demonstrating a high degree of creativity and flexibility. But how do these models work, and which one is better? In this blog, we will compare DALL-E, Midjourney AI and Stable Diffusion, and show you how you can use them to create your own images with ILLA CLOUD.

What is DALL-E?

DALL-E is an AI model that OpenAI introduced in January 2021, followed by DALL-E 2 in April 2022. The original DALL-E is a 12-billion-parameter variant of GPT-3, a large-scale language model that can generate texts on almost any topic given a prompt. DALL-E extends the capabilities of GPT-3 by enabling it to generate images from text descriptions, such as “an armchair in the shape of an avocado” or “a snail made of a harp”. DALL-E can produce multiple images for each prompt, demonstrating a high degree of creativity and flexibility. DALL-E 2 replaced this design with a diffusion-based approach.

The original DALL-E uses a neural network architecture called Transformer, which consists of layers of attention mechanisms that learn to model sequences of data. It uses a single Transformer that treats the text tokens and the image tokens as one combined sequence and predicts the image tokens one at a time. To turn images into tokens, DALL-E uses a discrete variational autoencoder (dVAE), a close relative of VQ-VAE, which compresses each 256x256 image into a 32x32 grid of discrete tokens that can be fed into the Transformer.
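To make the idea of image tokens concrete, here is a minimal sketch of nearest-neighbour codebook lookup, the quantization step used in VQ-VAE-style tokenizers, followed by joining text and image tokens into one sequence. The codebook values, the two-dimensional "patches", the vocabulary size, and the helper names `quantize` and `build_sequence` are all made up for illustration; a real tokenizer learns its encoder and codebook with a neural network, and this is not OpenAI's code.

```python
def quantize(patches, codebook):
    """Map each patch vector to the index of its nearest codebook vector."""
    tokens = []
    for patch in patches:
        # Squared Euclidean distance from this patch to every codebook entry
        distances = [
            sum((p - c) ** 2 for p, c in zip(patch, code)) for code in codebook
        ]
        tokens.append(distances.index(min(distances)))
    return tokens


def build_sequence(text_tokens, image_tokens, text_vocab_size):
    """Concatenate text and image tokens into one sequence.

    Image token ids are offset so they do not collide with text ids
    in a single shared vocabulary.
    """
    return list(text_tokens) + [text_vocab_size + t for t in image_tokens]


# Hypothetical toy data: a 3-entry codebook and 4 image patches
codebook = [(0.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
patches = [(0.1, 0.1), (0.9, 0.8), (0.2, 0.9), (1.0, 1.0)]

image_tokens = quantize(patches, codebook)
sequence = build_sequence([5, 7], image_tokens, text_vocab_size=10)
print(image_tokens)  # [0, 1, 2, 1]
print(sequence)      # [5, 7, 10, 11, 12, 11]
```

A Transformer trained on such sequences can be asked to continue a text prefix with image tokens, which a decoder then turns back into pixels.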

The original DALL-E generates images at 256x256 pixels resolution, and DALL-E 2 raised this to 1024x1024 pixels. DALL-E can produce impressive results that capture the essence and details of the text descriptions. DALL-E can also handle complex and abstract concepts, such as “a painting of a capybara sitting in a field at sunrise” or “a diagram explaining how DALL-E works”.

What is Midjourney AI?

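Midjourney AI is an image generation service developed by Midjourney, Inc., a self-described independent research lab, and it is typically used through a Discord bot. Unlike DALL-E, Midjourney has not published its model architecture, and there is no public evidence that it is a variant of GPT-3. The description in this section should therefore be read as an account of publicly known techniques that a system like Midjourney could use, not as confirmed detail. One such technique is CLIP guidance, in which a model called CLIP steers the image generation process.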

CLIP is another AI model from OpenAI, introduced in January 2021 alongside the original DALL-E. CLIP stands for Contrastive Language-Image Pre-training, and it is a model that learns to associate texts and images by scoring how well a given text matches a given image. CLIP can perform various image-related tasks, such as zero-shot classification, image-text retrieval, ranking candidate captions, and more.

In CLIP-guided generation, CLIP serves as a feedback mechanism to optimize the image generation process. The system starts with a random noise image and then iteratively modifies it until it matches the text description as closely as possible according to CLIP’s evaluation. Whether Midjourney AI works this way has not been confirmed, and the same applies to the claim that it uses StyleGAN2 to inject style and diversity into the generated images.
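The score-and-refine loop described above can be illustrated with a toy sketch. Here a cosine-similarity function stands in for CLIP, a short list of numbers stands in for the image, and random search stands in for the gradient-based updates that real CLIP-guided systems use. The embedding values and the function names `cosine` and `guided_search` are hypothetical, and nothing here reflects Midjourney's actual implementation.

```python
import math
import random


def cosine(a, b):
    """Cosine similarity between two vectors (the stand-in 'scorer')."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def guided_search(target, steps=500, step_size=0.1, seed=0):
    """Start from noise and keep only the changes the scorer prefers."""
    rng = random.Random(seed)
    image = [rng.gauss(0.0, 1.0) for _ in target]  # random noise start
    initial_score = cosine(image, target)
    best_score = initial_score
    for _ in range(steps):
        # Propose a small random modification of the current image
        candidate = [x + rng.gauss(0.0, step_size) for x in image]
        score = cosine(candidate, target)
        if score > best_score:
            image, best_score = candidate, score
    return image, initial_score, best_score


# Hypothetical "text embedding" that the image should match
text_embedding = [0.2, -0.5, 0.9, 0.1]
image, initial_score, best_score = guided_search(text_embedding)
print(f"score before: {initial_score:.3f}, after: {best_score:.3f}")
```

The score can only stay the same or improve from step to step, which is the essential property of any feedback-guided loop of this kind.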

Midjourney AI's output resolution depends on the model version and the upscaling options you choose; recent versions produce images of roughly 1024x1024 pixels, which is well above the original DALL-E’s resolution. Midjourney AI can also produce realistic and diverse images that reflect the style and mood of the text descriptions. Midjourney AI excels at generating artistic and expressive images, such as “a portrait of Frida Kahlo with flowers in her hair” or “a surreal landscape with floating islands”.

What is Stable Diffusion?

Stable Diffusion is an AI model that was released in August 2022 by researchers from the CompVis group at LMU Munich and Runway, with compute and support from Stability AI, a startup that focuses on open generative AI models. Its weights are openly available, so you can run it on your own hardware. Stable Diffusion does not build on GPT-3 or DALL-E. It is a latent diffusion model: it generates images from text descriptions by running a diffusion model in the compressed latent space of an autoencoder instead of directly on pixels.

Diffusion models are a class of generative models that learn to reverse a stochastic process that gradually corrupts an image or a text until it becomes pure noise. By reversing this process, diffusion models can generate high-quality samples from noise by applying a series of learned denoising steps. Diffusion models have been shown to outperform other generative models such as GANs and VAEs in terms of fidelity and diversity.
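The corruption process described above has a simple closed form in the standard formulation of diffusion models, which the sketch below implements for a tiny list of "pixel" values. The linear noise schedule and its endpoints are a common choice from the research literature, used here as an assumption rather than Stable Diffusion's exact settings, and the function names are made up for illustration.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, increasing linearly (assumed schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = product of (1 - beta_s) for s <= t: the signal kept at step t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0, t, alpha_bars, rng):
    """Sample x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps."""
    signal = math.sqrt(alpha_bars[t])
    noise = math.sqrt(1.0 - alpha_bars[t])
    return [signal * x + noise * rng.gauss(0.0, 1.0) for x in x0]


betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alphas(betas)

rng = random.Random(0)
pixels = [0.5, -0.25, 1.0]
slightly_noisy = add_noise(pixels, 10, alpha_bars, rng)   # still close to the input
almost_noise = add_noise(pixels, 999, alpha_bars, rng)    # nearly pure noise
print(f"signal kept at step 10: {alpha_bars[10]:.4f}")
print(f"signal kept at step 999: {alpha_bars[999]:.6f}")
```

A diffusion model is trained to predict the noise that was added at each step, which is what lets it run the process in reverse and turn noise into an image.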

Stable Diffusion uses a diffusion model that is trained on a large dataset of images and their corresponding text descriptions, drawn from subsets of the LAION-5B collection of image-text pairs gathered from the web. Stable Diffusion v1 uses a frozen CLIP text encoder to encode the text descriptions into embeddings, which are then used to condition the diffusion model through cross-attention. Stable Diffusion also uses a technique called classifier-free guidance to improve how closely the generated images follow the prompt.
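The effect of text conditioning at sampling time can be sketched with classifier-free guidance, which Stable Diffusion pipelines commonly use. At each denoising step the model predicts the noise twice, once with the prompt and once without, and the final prediction is pushed further in the direction the prompt suggests. The numbers below are made-up stand-ins for real model outputs, and the function name is hypothetical; 7.5 is a commonly used guidance scale, not a required value.

```python
def classifier_free_guidance(noise_uncond, noise_cond, guidance_scale):
    """Combine unconditional and text-conditioned noise predictions.

    guidance_scale = 0 ignores the prompt, 1 uses the conditioned
    prediction as-is, and larger values follow the prompt more strongly.
    """
    return [
        u + guidance_scale * (c - u) for u, c in zip(noise_uncond, noise_cond)
    ]


# Made-up noise predictions for three latent values
uncond = [0.10, -0.20, 0.30]
cond = [0.20, -0.10, 0.10]

guided = classifier_free_guidance(uncond, cond, guidance_scale=7.5)
print([round(g, 2) for g in guided])  # [0.85, 0.55, -1.2]
```

Higher scales tend to trade diversity for prompt adherence, which is why this value is usually exposed as a user-facing setting.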

Stable Diffusion v1 generates images at 512x512 pixels resolution by default, which is higher than the original DALL-E’s resolution, and version 2 adds a 768x768 variant. Stable Diffusion can also generate diverse and realistic images by leveraging the strengths of diffusion models. Stable Diffusion can handle various types of text descriptions, such as “a photo of a dog wearing sunglasses” or “a sketch of a dragon breathing fire”.

How to compare DALL-E, Midjourney AI and Stable Diffusion?

Now that we have introduced the three models, how can we compare them and decide which one is better? Well, there is no definitive answer to this question, as each model has its own strengths and weaknesses, and different use cases and preferences. However, we can use some criteria to evaluate and compare them, such as:

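Resolution: This refers to the size and quality of the generated images. Higher resolution means more details and clarity. Among the three, DALL-E 2 and recent Midjourney AI versions produce images of roughly 1024x1024 pixels, while Stable Diffusion v1 defaults to 512x512 but can be run at other sizes or paired with an upscaler.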

Diversity: This refers to the variety and uniqueness of the generated images. Higher diversity means more options and creativity. Among the three models, Stable Diffusion and Midjourney AI have higher diversity than DALL-E.

Realism: This refers to the degree of similarity between the generated images and real-world images. Higher realism means more accuracy and plausibility. Among the three models, Midjourney AI and DALL-E have higher realism than Stable Diffusion.

Style: This refers to the aesthetic and artistic aspects of the generated images. A stronger style means more expression and mood. Among the three models, Midjourney AI is generally regarded as having the most distinctive artistic style out of the box.

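Speed: This refers to the time and resources required to generate images. Higher speed means more efficiency and convenience. DALL-E and Midjourney AI run on their providers' servers, so speed depends on the service and its current load, while Stable Diffusion can also run locally, where speed depends on your own GPU and settings such as the number of denoising steps.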

Based on these criteria, we can see that each model has its own advantages and disadvantages, depending on what you are looking for. For example, if you want diverse images, you might prefer Midjourney AI or Stable Diffusion. If you want fast and simple image generation through a hosted service, you might prefer DALL-E. If you want artistic and expressive images, you might prefer Midjourney AI over DALL-E or Stable Diffusion.

Of course, these criteria are not exhaustive or objective, and you might have other factors or preferences that influence your choice. The best way to compare these models is to try them out yourself and see which one suits your needs and tastes better.

How to use DALL-E, Midjourney AI and Stable Diffusion with ILLA CLOUD?

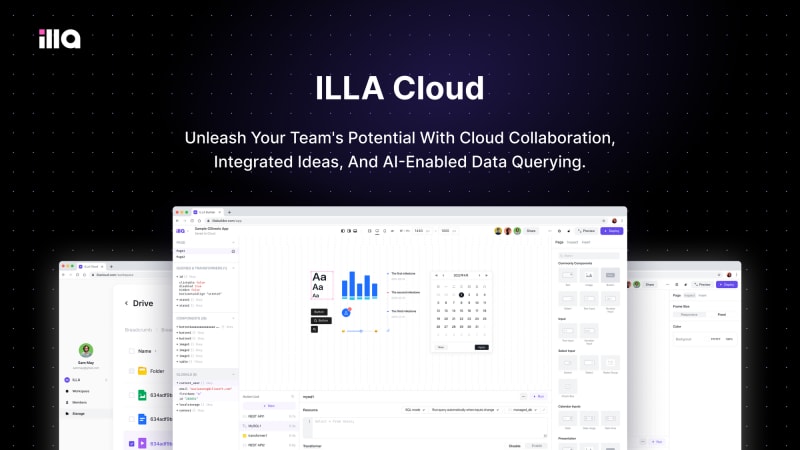

If you are interested in using DALL-E, Midjourney AI or Stable Diffusion to generate your own images from text descriptions, you might be wondering how you can access and use them easily and affordably. The answer is ILLA CLOUD.

ILLA CLOUD is a cloud-based platform that provides easy and affordable access to the latest AI technologies and tools. ILLA CLOUD allows you to use DALL-E, Midjourney AI or Stable Diffusion without any coding or installation required. You can simply type in your text description and get your image generated in seconds. You can also customize your image by changing the style, color, size, and other parameters.

ILLA CLOUD is not only a platform for using AI models, but also for building and sharing them. ILLA CLOUD enables you to create your own custom AI models using Hugging Face Transformers and other frameworks. You can also upload your own data and fine-tune existing models to suit your specific needs and preferences. You can then share your models with other users or deploy them to your own applications.

ILLA CLOUD is also developing a lot of new AI features that will make your experience even better and more enjoyable. Some of these features include:

A collaborative editor that allows you to work on your AI projects with your team members or friends in real time.

A gallery that showcases the best and most popular AI creations made by other users.

A community that connects you with other AI enthusiasts and experts, where you can exchange ideas, feedback, and support.

ILLA CLOUD is more than just a platform. It is a vision of democratizing AI and making it accessible and fun for everyone. ILLA CLOUD believes that AI is not only a technology, but also a form of art and expression. ILLA CLOUD wants to empower you to unleash your creativity and imagination with AI.

That’s why ILLA CLOUD has partnered with Hugging Face, the AI company that hosts Stable Diffusion and many other open models. Together, they aim to provide you with the best and most advanced AI tools and resources. They also share a common mission of building a friendly and collaborative AI community, where everyone can learn, grow, and create together.

If you are interested in using DALL-E, Midjourney AI or Stable Diffusion or other Hugging Face models, or if you want to create your own AI models and projects, you can sign up for ILLA CLOUD today. You will get a free trial and a generous credit to start your AI journey. You will also get access to exclusive tutorials, guides, and tips from Hugging Face and ILLA CLOUD experts.

Don’t miss this opportunity to join the AI revolution and become an AI creator. Join ILLA CLOUD and Hugging Face today and discover the endless possibilities of AI.

Join our Discord Community: discord.com/invite/illacloud

Try ILLA Cloud for free: cloud.illacloud.com

ILLA Home Page: illacloud.com

GitHub page: github.com/illacloud/illa-builder
