Idris Rampurawala

Posted on May 24, 2023

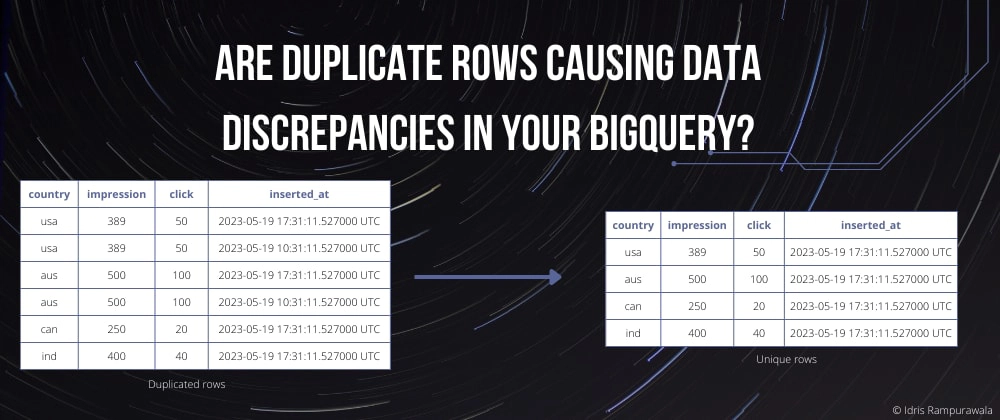

BigQuery is a fully managed enterprise data warehouse that helps us manage and analyze our data. It is a prominent product offered by Google Cloud Platform (GCP). BigQuery's scalable, distributed analysis engine lets us query terabytes and petabytes in minutes. Having duplicate rows of data can cause incorrect aggregates or query results. There are various methods to deal with data duplication in BigQuery. We will explore a couple of them in this post.

Consider a scenario where we schedule a job that runs once a day to load data from a data source in BigQuery. That is, each day's data is unique. If for any reason, this job fails in the middle of the processing, then we will have to re-run the job. Now, re-running it could add duplicate rows which would have been inserted before this failure. This duplication of rows may hamper our analytics, reports, etc. that depends on this dataset. How do we handle these duplicate rows? 🤔 Let's take a look.

1. Deleting the duplicates before a re-run

The simplest way out here is to implement the processing in a way that makes sure the current day's data do not exist before inserting. That is, we first delete the current day's data before re-running the job. Now, this may sound like an easy task, but in the world of concurrency, it becomes difficult to scale in the future.

2. Selecting the unique records

Since BigQuery can handle terabytes of data, we can allow data duplication in INSERTs and remove (skip) duplicates in SELECT queries. Oftentimes, various non-technical teams use BigQuery to generate different reports and this duplication may generate unwanted results. Guess what? We have Views to the rescue 😎. Views can abstract the complexity of fetching unique rows on top of the actual dataset.

In general, there are two types of views - Logical and Materialized. See the comparison chart below to select an appropriate option based on your use case.

🔹 Logical Views vs Materialized Views

| Feature | Logical Views | Materialized Views |

|---|---|---|

| Definition | The query that defines the view is run each time the view is queried. | Views are precomputed views that periodically cache the results of a query for increased performance and efficiency. It stores results in a separate table for caching the results. |

| Cost | Queries are billed according to the total amount of data in all table fields referenced directly or indirectly by the top-level query. | Cost associated with these views are - Querying materialized view - Maintaining materialized views (refreshing records) - Storing materialized view tables |

| Storage | No extra storage required. | Requires its own storage. |

| Maintenance | No maintenance required. | Refreshing the records of materialized views can be either manual or automatic. If manual, then we have to maintain it. Automatic refreshes have cost associated with them. |

👉 If you are not generating aggregations, then go for the Logical view. For this post, I will focus on the Logical view that projects the unique rows on top of the dataset.

🔹 Logical View Syntax

CREATE OR REPLACE VIEW

`table_name_view` OPTIONS ( friendly_name="table_name_view",

description="A view that fetches latest unique entries processed from table_name" ) AS

SELECT

*

FROM

`dataset.table_name`

WHERE

TRUE QUALIFY ROW_NUMBER() OVER(PARTITION BY column_1, column_2 ORDER BY inserted_at DESC ) = 1;

🔍 In the above query, we are addressing two major issues:

- finding unique rows based on multiple key columns using the

PARTITION BY - selecting the latest record using

inserted_atcolumn that records the row insertion timestamp

💪 Our team has been relying on this View syntax for quite some time now and it has proven to be a valuable asset for our production process.

📑 References

Posted on May 24, 2023

Join Our Newsletter. No Spam, Only the good stuff.

Sign up to receive the latest update from our blog.