Tuning Neural Networks

hoganbyun

Posted on June 8, 2021

When modeling a neural network, you most likely won’t get satisfactory results immediately. Whether the model is underfitting or overfitting, there are usually small tuning changes that can improve on the initial model. For overfit models, the main techniques you can use are normalization, regularization, and optimization.

Dealing with Overfitting

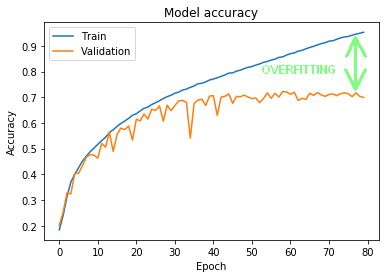

Here is an example of what an overfit model would look like:

Here we can see that as the training accuracy keeps increasing, the validation accuracy stagnates at a certain point. This means that the model is getting so good at recognizing the training data specifically that it fails to learn general patterns.
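The stagnation described above can also be checked numerically instead of by eye. Here is a minimal sketch, assuming you have the per-epoch validation accuracy as a plain list; the function name `first_stagnant_epoch`, the `patience` and `min_delta` parameters, and the accuracy values are all made up for illustration.

```python
def first_stagnant_epoch(val_acc, patience=3, min_delta=0.001):
    """Return the 0-based index of the best epoch once validation accuracy
    has failed to improve by min_delta for `patience` epochs in a row.
    Returns None if it never stalls for that long."""
    best = float("-inf")
    best_epoch = None
    waited = 0
    for epoch, acc in enumerate(val_acc):
        if acc > best + min_delta:
            best, best_epoch, waited = acc, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                return best_epoch
    return None


# Made-up accuracy curves, for illustration only.
train_acc = [0.60, 0.70, 0.78, 0.85, 0.90, 0.94, 0.97, 0.99]
val_acc = [0.58, 0.67, 0.72, 0.74, 0.74, 0.73, 0.74, 0.73]

stalled_at = first_stagnant_epoch(val_acc)  # last epoch that still improved
gap = train_acc[-1] - val_acc[-1]           # train/validation gap at the end
```

A large final gap together with an early stall is the pattern in the graph: training accuracy keeps climbing while validation accuracy has stopped improving.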

Regularization

Regularization is often used when the initial model is overfit. In general, you have three types to choose from: L1, L2, and dropout.

L1 and L2 regularization penalize weights that grow too large: a penalty term based on the size of the weights is added to the loss, so backpropagation pushes the weights toward smaller values. An example of L2 being used:

model.add(Dense(128, activation='relu',
                kernel_regularizer=regularizers.l2(0.005)))
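To make the penalty concrete, here is a small sketch in plain Python of how L1 and L2 terms can be computed and added to a loss. The helper names, the toy weight matrix, and the `data_loss` value are hypothetical; only the factor 0.005 comes from the example above.

```python
def l1_penalty(weights, lam):
    """lam times the sum of absolute weight values."""
    return lam * sum(abs(w) for row in weights for w in row)


def l2_penalty(weights, lam):
    """lam times the sum of squared weight values."""
    return lam * sum(w * w for row in weights for w in row)


weights = [[0.5, -1.0], [2.0, 0.0]]  # toy 2x2 weight matrix (made up)
lam = 0.005                          # same factor as in the example above

data_loss = 0.30                     # hypothetical unregularized loss
total_loss = data_loss + l2_penalty(weights, lam)
```

Because the L2 term squares each weight, the single large weight (2.0) accounts for most of the penalty, which is why this kind of regularization discourages large weights in particular.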

Dropout, on the other hand, sets the outputs of randomly chosen nodes to 0 during training. This is also an effective countermeasure to overfitting. The number passed to the dropout function is the rate, that is, the fraction of nodes dropped on each training step. An example:

model.add(Dropout(.2))
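What the dropout rate means can be sketched in a few lines of plain Python. This is an illustrative version of "inverted" dropout, where surviving values are scaled up by 1/(1 - rate) so the expected sum stays the same; the function name and the toy activations are assumptions for the example, not the library's internals.

```python
import random


def dropout(activations, rate, rng):
    """Zero each activation with probability `rate` and scale the
    survivors by 1 / (1 - rate). Meant for training time only."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    keep_scale = 1.0 / (1.0 - rate)
    return [0.0 if rng.random() < rate else a * keep_scale
            for a in activations]


rng = random.Random(0)            # fixed seed so the sketch is repeatable
activations = [1.0] * 10_000      # toy layer output (made up)
dropped = dropout(activations, rate=0.2, rng=rng)

zero_fraction = dropped.count(0.0) / len(dropped)  # close to the rate, 0.2
mean_after = sum(dropped) / len(dropped)           # close to the original mean, 1.0
```

At prediction time dropout is switched off and the activations pass through unchanged.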

Normalization

Another countermeasure to overfitting is to normalize the input data. The easiest approach is to scale the data to be between 0 and 1. This can cut down training time and stabilize convergence. You could also control the scale of a layer's starting weights with an initializer, such as the random normal:

model.add(Dense(64, activation='relu',
                kernel_initializer=initializers.RandomNormal()))
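Going back to the input scaling mentioned above, scaling to the range 0 to 1 is usually done with min-max scaling. A minimal sketch, assuming the feature is a flat list of numbers; the function name and the pixel values are made up for illustration.

```python
def min_max_scale(values):
    """Scale values linearly so the smallest maps to 0 and the largest to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant feature carries no information; map it to 0.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


pixels = [0, 64, 128, 255]        # e.g. raw 8-bit image intensities (made up)
scaled = min_max_scale(pixels)    # every value now lies in [0, 1]
```

For image data whose range is already known to be 0 to 255, simply dividing by 255 gives the same result.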

Optimization

Lastly, you could try different optimization functions. The three most used are probably Adam, SGD, and RMSprop.

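Adam (“Adaptive Moment Estimation”) is one of the most popular and often works well without much tuning. It adapts the step size for each parameter using running averages of the gradient and of the squared gradient.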
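To give a feel for what Adam does, here is a rough single-parameter sketch of its update rule in plain Python. The function name, the toy loss (w - 3)^2, and the learning rate of 0.01 are assumptions for this example; the beta and epsilon values are commonly quoted defaults, and library implementations may differ in detail.

```python
import math


def adam_step(w, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a single parameter; t is the 1-based step count."""
    m = beta1 * m + (1 - beta1) * grad          # running average of gradients
    v = beta2 * v + (1 - beta2) * grad * grad   # running average of squared gradients
    m_hat = m / (1 - beta1 ** t)                # correct the bias from starting at 0
    v_hat = v / (1 - beta2 ** t)
    w = w - lr * m_hat / (math.sqrt(v_hat) + eps)
    return w, m, v


# Toy problem (made up): minimize (w - 3)^2, whose gradient is 2 * (w - 3).
w, m, v = 0.0, 0.0, 0.0
for t in range(1, 2001):
    grad = 2 * (w - 3.0)
    w, m, v = adam_step(w, grad, m, v, t, lr=0.01)
```

Dividing by the square root of the squared-gradient average is what makes the step size adapt per parameter: parameters with consistently large gradients take proportionally smaller steps.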

Dealing with Underfitting

An underfit model looks different from the graph above: the training accuracy/loss itself fails to improve. There are a few ways to deal with this.

Add Complexity

A likely reason that a model is underfit is that it is not complex enough. That is, it isn't able to identify abstract patterns. A way to fix this is to add complexity to the model by 1) adding more layers or 2) increasing the number of neurons per layer.
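One rough way to see how much complexity each option adds is to count trainable parameters. The sketch below does this for a stack of fully connected layers; the function name, the input size of 784, and the layer sizes are hypothetical choices for illustration.

```python
def dense_param_count(input_dim, layer_sizes):
    """Total weights and biases in a stack of fully connected layers."""
    total = 0
    prev = input_dim
    for units in layer_sizes:
        total += prev * units + units   # weight matrix plus one bias per unit
        prev = units
    return total


# Hypothetical networks on a 784-feature input (e.g. a flattened 28x28 image).
small = dense_param_count(784, [32, 10])         # 25450 parameters
wider = dense_param_count(784, [128, 10])        # 101770 parameters
deeper = dense_param_count(784, [128, 64, 10])   # 109386 parameters
```

Parameter count is only a proxy for capacity, but it shows that widening the first layer adds far more parameters here than inserting an extra hidden layer does.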

Training Time

Another reason that a model may be underfit is the training time. By giving a model more time and iterations to train, you give it more chances to converge to a more ideal solution.
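The effect of training longer can be seen even on a one-parameter toy model. Here is a minimal sketch, assuming plain gradient descent on a mean squared error; the data, the learning rate, and the function name are made up for the example.

```python
def train_slope(xs, ys, epochs, lr=0.05):
    """Fit y = w * x by gradient descent on mean squared error.
    Returns the final weight and the final loss."""
    w = 0.0
    n = len(xs)
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        w -= lr * grad
    loss = sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / n
    return w, loss


xs = [1.0, 2.0, 3.0]   # toy inputs (made up)
ys = [2.0, 4.0, 6.0]   # targets follow y = 2x exactly

w_short, loss_short = train_slope(xs, ys, epochs=2)    # stopped too early
w_long, loss_long = train_slope(xs, ys, epochs=20)     # given time to converge
```

After 2 epochs the weight is still well short of the true slope of 2, while after 20 epochs it has essentially converged, which is the behavior the paragraph above describes.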

Summary

To summarize, overfit models call for regularization, normalization, and optimization, while underfit models call for more complexity and training time. Tuning neural networks is all about making small, incremental changes until you reach a good balance. These tips should help you move in the right direction when you inevitably need to tune a model.
