How to use machine learning to recognize a cat's meow or a dog's bark?

Jackson

Posted on December 27, 2021

Sound detection is widely used in daily life. For example, users with impaired hearing may miss sound events such as an alarm, a car horn, or a doorbell. A sound detection service can pick up these surrounding sounds and remind the user to respond in time when an emergency occurs. Likewise, parents who are away from their baby may not learn about the baby's status in time. Sound detection lets them catch key sounds, such as crying, and attend to the baby as soon as possible.

Some apps need a sound detection function that can recognize sounds such as a knock on the door, a doorbell ring, or a car horn. Developing such a function from scratch can be costly for small- and medium-sized developers, so what should they do? In this article, we'll show how to quickly implement sound detection on Android devices with machine learning, using the sound detection service of HUAWEI ML Kit.

Preparations

Configuring the Development Environment

1. Create an app in AppGallery Connect.

For details, see Getting Started with Android.

2. Enable ML Kit.

Click here to get more information.

3. After the app is created, an agconnect-services.json file will be automatically generated. Download it and copy it to the app-level root directory (the app module) of your project.

4. Configure the Huawei Maven repository address.

To learn more, click here.

5. Integrate the sound detection SDK.

- It is recommended to integrate the SDK in full SDK mode. Add build dependencies for the SDK in the app-level build.gradle file.

dependencies {
    // Import the sound detection package.
    implementation 'com.huawei.hms:ml-speech-semantics-sounddect-sdk:2.1.0.300'
    implementation 'com.huawei.hms:ml-speech-semantics-sounddect-model:2.1.0.300'
}

- Add the AppGallery Connect plugin configuration as needed using either of the following methods:

Method 1: Add the following information under the declaration in the file header:

apply plugin: 'com.android.application'
apply plugin: 'com.huawei.agconnect'

Method 2: Add the plugin configuration in the plugins block:

plugins {
    id 'com.android.application'
    id 'com.huawei.agconnect'
}

- Automatically update the machine learning model. Add the following statements to the AndroidManifest.xml file. After a user installs your app from HUAWEI AppGallery, the machine learning model is automatically updated to the user's device.

<meta-data
    android:name="com.huawei.hms.ml.DEPENDENCY"
    android:value="sounddect" />

- For details, go to Integrating the Sound Detection SDK.

Development Procedure

1. Obtain the microphone permission. If the app does not have this permission, error 12203 will be reported.

(Mandatory) Declare the permission statically in the AndroidManifest.xml file.

<uses-permission android:name="android.permission.RECORD_AUDIO" />

(Mandatory) Request the permission dynamically at runtime.

ActivityCompat.requestPermissions(
        this, new String[]{Manifest.permission.RECORD_AUDIO}, 1);
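The dynamic request above only asks for the permission; the user's answer arrives later in the Activity's onRequestPermissionsResult callback. The following is a minimal sketch of the check that callback could run before detection is started. The class MicPermissionCheck and its method are hypothetical names introduced for illustration, and the two constants simply mirror the values of Manifest.permission.RECORD_AUDIO and PackageManager.PERMISSION_GRANTED so that the logic can be run off-device.

```java
/** Hypothetical helper: decides whether the microphone permission was granted. */
final class MicPermissionCheck {
    // Mirrors Manifest.permission.RECORD_AUDIO.
    static final String RECORD_AUDIO = "android.permission.RECORD_AUDIO";
    // Mirrors PackageManager.PERMISSION_GRANTED.
    static final int PERMISSION_GRANTED = 0;
    // Same request code as the one passed to ActivityCompat.requestPermissions above.
    static final int REQUEST_CODE_MIC = 1;

    private MicPermissionCheck() {}

    /**
     * Takes the three arguments of onRequestPermissionsResult and returns true
     * only if this is our request and RECORD_AUDIO was granted.
     */
    static boolean isMicGranted(int requestCode, String[] permissions, int[] grantResults) {
        if (requestCode != REQUEST_CODE_MIC || permissions == null || grantResults == null) {
            return false;
        }
        int n = Math.min(permissions.length, grantResults.length);
        for (int i = 0; i < n; i++) {
            if (RECORD_AUDIO.equals(permissions[i])) {
                return grantResults[i] == PERMISSION_GRANTED;
            }
        }
        // Empty arrays mean the request was interrupted or cancelled.
        return false;
    }
}
```

In an Activity, the result of isMicGranted(requestCode, permissions, grantResults) could decide whether to create and start the detector or to explain to the user why the microphone is needed.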

2. Create an MLSoundDector object.

private static final String TAG = "MLSoundDectorDemo";

// Object of sound detection.
private MLSoundDector mlSoundDector;

// Create an MLSoundDector object and configure the callback.
private void initMLSoundDector() {
    mlSoundDector = MLSoundDector.createSoundDector();
    mlSoundDector.setSoundDectListener(listener);
}

3.Create a sound detection result callback to obtain the detection result and pass the callback to the sound detection instance.

// Create a sound detection result callback to obtain the detection result and pass the callback to the sound detection instance.

private MLSoundDectListener listener = new MLSoundDectListener() {

@Override

public void onSoundSuccessResult(Bundle result) {

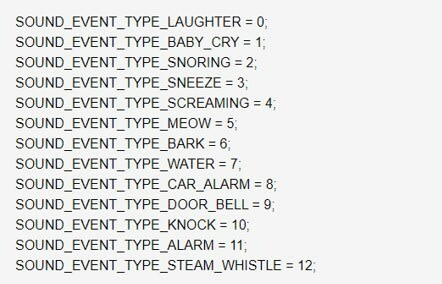

// Processing logic when the detection is successful. The detection result ranges from 0 to 12, corresponding to the 13 sound types whose names start with SOUND_EVENT_TYPE. The types are defined in MLSoundDectConstants.java.

int soundType = result.getInt(MLSoundDector.RESULTS_RECOGNIZED);

Log.d(TAG,"Detection success:"+soundType);

}

@Override

public void onSoundFailResult(int errCode) {

// Processing logic for detection failure. The possible cause is that your app does not have the microphone permission (Manifest.permission.RECORD_AUDIO).

Log.d(TAG,"Detection failure"+errCode);

}

};

Note: The code above prints the type of the detected sound as an integer. In a real app, you can convert the integer into a description that users can understand.

Definition for the types of detected sounds:

<string-array name="sound_dect_voice_type">
    <item>laughter</item>
    <item>baby crying sound</item>
    <item>snore</item>
    <item>sneeze</item>
    <item>shout</item>
    <item>cat's meow</item>
    <item>dog's bark</item>
    <item>running water</item>
    <item>car horn sound</item>
    <item>doorbell sound</item>
    <item>knock</item>
    <item>fire alarm sound</item>
    <item>alarm sound</item>
</string-array>
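To turn the integer result into readable text, the array above can be indexed with the detected sound type. The sketch below does this in plain Java so it can be tried outside an app. It assumes that the order of the items matches the result values 0 to 12 (consistent with a knock being reported as 10), which puts the cat's meow at 5 and the dog's bark at 6. The class SoundTypeLabels and its members are hypothetical names, not part of the SDK.

```java
/** Hypothetical helper: maps a detection result (0 to 12) to a readable label. */
final class SoundTypeLabels {
    // Labels copied from the sound_dect_voice_type array; index = detection result.
    private static final String[] LABELS = {
        "laughter", "baby crying sound", "snore", "sneeze", "shout",
        "cat's meow", "dog's bark", "running water", "car horn sound",
        "doorbell sound", "knock", "fire alarm sound", "alarm sound"
    };

    // Assumed indices, derived from the order of the array above.
    static final int CAT_MEOW = 5;
    static final int DOG_BARK = 6;

    private SoundTypeLabels() {}

    /** Returns the label for a result, or a fallback text for out-of-range values. */
    static String labelFor(int soundType) {
        if (soundType < 0 || soundType >= LABELS.length) {
            return "unknown sound (" + soundType + ")";
        }
        return LABELS[soundType];
    }

    /** True if the result is a cat's meow or a dog's bark. */
    static boolean isPetSound(int soundType) {
        return soundType == CAT_MEOW || soundType == DOG_BARK;
    }
}
```

Inside onSoundSuccessResult, SoundTypeLabels.labelFor(soundType) could replace the raw integer in the log or in a message shown to the user. In an app, reading the labels with getResources().getStringArray(R.array.sound_dect_voice_type) would serve the same purpose and keep them translatable.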

4. Start and stop sound detection.

@Override
public void onClick(View v) {
    switch (v.getId()) {
        case R.id.btn_start_detect:
            if (mlSoundDector != null) {
                // start() takes a Context. If isStarted is true, the detection is successfully started. If isStarted is false, the detection fails to be started. (A possible cause is that the microphone is occupied by the system or another app.)
                boolean isStarted = mlSoundDector.start(this);
                if (isStarted) {
                    Toast.makeText(this, "The detection is successfully started.", Toast.LENGTH_SHORT).show();
                }
            }
            break;
        case R.id.btn_stop_detect:
            if (mlSoundDector != null) {
                mlSoundDector.stop();
            }
            break;
    }
}
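The click handler above does nothing when start() returns false. Because a busy microphone is often a temporary condition, one option is to try again a few times before giving up. This is a sketch of that idea only, not behavior provided by the SDK: DetectionStarter and startWithRetry are hypothetical names, and the start call is passed in as a BooleanSupplier so the retry logic does not depend on Android classes.

```java
import java.util.function.BooleanSupplier;

/** Hypothetical helper: retries a start call that reports success as a boolean. */
final class DetectionStarter {
    private DetectionStarter() {}

    /**
     * Calls startCall up to maxAttempts times, waiting delayMillis between attempts.
     * Returns the 1-based attempt number that succeeded, or -1 if every attempt
     * failed or the waiting thread was interrupted.
     */
    static int startWithRetry(BooleanSupplier startCall, int maxAttempts, long delayMillis) {
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            if (startCall.getAsBoolean()) {
                return attempt;
            }
            if (attempt < maxAttempts && delayMillis > 0) {
                try {
                    Thread.sleep(delayMillis);
                } catch (InterruptedException e) {
                    // Preserve the interrupt flag and stop retrying.
                    Thread.currentThread().interrupt();
                    return -1;
                }
            }
        }
        return -1;
    }
}
```

With the detector from step 2, a call could look like DetectionStarter.startWithRetry(() -> mlSoundDector.start(context), 3, 500L). Since the helper sleeps between attempts, it should not run on the main thread with a non-zero delay.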

5. Call destroy() to release resources when the sound detection page is closed.

@Override
protected void onDestroy() {
    super.onDestroy();
    if (mlSoundDector != null) {
        mlSoundDector.destroy();
    }
}

Testing the App

1. Using a knock as an example, the expected output of sound detection is 10.

2. Tap Start detecting and simulate a knock on the door. If a log such as Detection success:10 (the message printed in onSoundSuccessResult) appears in Android Studio's Logcat, the sound detection SDK has been integrated successfully.
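If no success log shows up during testing, the error code passed to onSoundFailResult is the first thing to check. The sketch below turns that code into a readable message. Only error 12203 (missing microphone permission) is taken from this article; other codes are reported as they are. SoundDetectErrors and its constant name are hypothetical and not part of the SDK.

```java
/** Hypothetical helper: describes the error code received in onSoundFailResult. */
final class SoundDetectErrors {
    // Error reported when the app lacks the microphone permission (see step 1).
    static final int ERR_NO_MIC_PERMISSION = 12203;

    private SoundDetectErrors() {}

    static String describe(int errCode) {
        if (errCode == ERR_NO_MIC_PERMISSION) {
            return "Microphone permission missing (error 12203); grant RECORD_AUDIO and start again.";
        }
        // Codes not covered by this article are passed through unchanged.
        return "Sound detection failed with error code " + errCode + ".";
    }
}
```

Logging SoundDetectErrors.describe(errCode) in the failure callback makes a missing permission easy to spot in Logcat.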

More Information

1. Sound detection is one of the capabilities of ML Kit, whose services fall into six categories: text, language/voice, image, face/body, natural language processing, and custom model.

2. Sound detection is a service in the language/voice category.

3. Interested in other categories? Feel free to have a look at the HUAWEI ML Kit documentation.
