Democratizing ML: Rise of the Teachable Machines

Shuvam Manna

Posted on May 11, 2021

Late in 2018, Google Creative Lab came out with the concept of Teachable Machines: a web-based demo that allowed anyone to train a neural net to recognize and distinguish between three things and bring up suitable responses. It was a fun example to play around with, and it taught many people the fundamentals of how Machine Learning works at a fairly high level of abstraction. Recently, they released Teachable Machine v2, a full-fledged web-based dashboard for playing around with models that can be retrained on your own data and then exported to work with different projects and frameworks, thus letting them out into the wild.

The models you make with Teachable Machine are real TensorFlow.js models that work anywhere JavaScript runs, so they play nice with tools like Glitch, p5.js, Node.js & more. This led me to think about how this tool makes some really powerful ML capabilities available to everyone and, in the process, democratizes the idea that anyone, from the noob to the pro, can use it to prototype their vision or even put things into production at scale. With these Teachable Machines now available, let’s take a peek under the hood.

Holy Grail of Machine Learning

The idea of Machine Learning is pretty simple: a machine that learns on its own, similar to how humans learn. But these machines are governed by a representation of the primal human instinct: *Algorithms*. The voice in your head saying “Do this; no, don’t jump off a cliff, you’re not Superman, nor do you have a parachute”, or the very act of learning why an Apple looks like an Apple, is governed by these small instincts.

Hundreds of learning algorithms are invented every year, but they’re all based on the same few ideas and the same repeating questions. Far from being eccentric or exotic, and besides their use in building these algorithms, these are questions that matter to all of us: How do we learn? Can this be optimized? Can we trust what we’ve learned? Rival schools of thought within Machine Learning have different answers to these questions.

Symbolists view learning as the inverse of deduction and take ideas from philosophy, psychology, and logic.

Connectionists reverse engineer the brain and are inspired by neuroscience and physics.

Evolutionaries simulate the environment on a computer and draw on genetics and evolutionary biology.

Bayesians believe learning is a form of Probabilistic inference and have their roots in statistics.

Analogizers learn by extrapolating from similarity judgments and are influenced by psychology and mathematical optimization.

Each of the five tribes of Machine Learning has its own general-purpose learner that you can, in principle, use to discover knowledge from data in any domain. The Symbolists’ is Inverse Deduction, the Connectionists’ is Backpropagation, the Evolutionaries’ is Genetic Programming, the Bayesians’ is Bayesian Inference, and the Analogizers’ is the Support Vector Machine. In practice, however, each of these algorithms is good for some things and not for others. What we ideally want is a single Master Algorithm that combines the best of all of them.

Enter the Neuron

The buzz around Neural Networks was pioneered by the Connectionists in their quest to reverse engineer the brain. Such systems “learn” to perform tasks by considering examples, generally without being programmed with task-specific rules. For example, in image recognition, they might learn to identify images that contain donuts by analyzing example images that have been manually labeled as “donut” or “not donut” and using the results to identify donuts in other images.

An Artificial Neural Net or ANN is based on a collection of connected units or nodes called artificial neurons, which loosely model the neurons in a biological brain. Each connection, like the synapses in a biological brain, can transmit a signal to other neurons. An artificial neuron that receives a signal then processes it and can signal neurons connected to it.

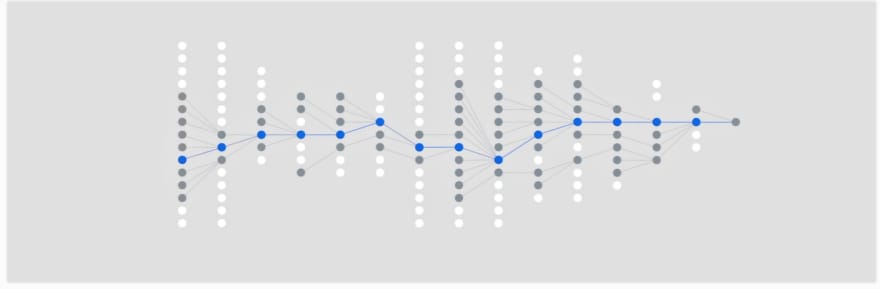

The very first neural networks had only one neuron, which isn’t useful for much. We had to wait for computers to get more powerful before we could do more useful and complex things with them, hence the recent rise of neural networks. Today’s neural nets consist of multiple neurons arranged in multiple layers.

In the figure, the leftmost layer is known as the Input Layer and, as you might expect, the rightmost is the Output Layer.

TL;DR: Neural networks consist of neurons arranged in layers where, in the simplest fully connected case, every neuron in a layer is connected to every neuron in the next layer. A neuron multiplies each value passed into it by a number called a weight, sums the results, and adds a number called a bias to produce a single number as output (in practice, this sum is usually passed through an activation function as well). The weights and biases of each neuron are adjusted incrementally to try to decrease the loss (roughly, the average amount the network is wrong by across all the training data).
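To make the TL;DR concrete, here is a minimal sketch of a single neuron being trained with plain gradient descent. Everything in it is an assumption made for illustration: the function names, the tiny dataset, the learning rate, and the choice of mean squared error as the loss. It also leaves out the activation function to keep the arithmetic easy to follow.

```python
# Toy sketch: one neuron trained by gradient descent on made-up data.
# All names and numbers here are hypothetical, chosen only for illustration.


def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias (no activation function)."""
    return sum(x * w for x, w in zip(inputs, weights)) + bias


def mean_squared_loss(data, weights, bias):
    """Average squared error of the neuron across all training examples."""
    return sum((neuron(x, weights, bias) - y) ** 2 for x, y in data) / len(data)


def train_step(data, weights, bias, learning_rate=0.05):
    """Move the weights and bias one small step in the direction that lowers the loss."""
    n = len(data)
    grad_w = [0.0] * len(weights)
    grad_b = 0.0
    for x, y in data:
        error = neuron(x, weights, bias) - y
        for i, xi in enumerate(x):
            grad_w[i] += 2 * error * xi / n  # d(loss)/d(weight i)
        grad_b += 2 * error / n              # d(loss)/d(bias)
    new_weights = [w - learning_rate * g for w, g in zip(weights, grad_w)]
    new_bias = bias - learning_rate * grad_b
    return new_weights, new_bias


# Made-up training data generated from y = 2*x1 - 1*x2 + 0.5
DATA = [
    ((0.0, 0.0), 0.5),
    ((1.0, 0.0), 2.5),
    ((0.0, 1.0), -0.5),
    ((1.0, 1.0), 1.5),
]


def train(steps=2000):
    """Start from all-zero parameters and repeat the small adjustment many times."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(steps):
        weights, bias = train_step(DATA, weights, bias)
    return weights, bias
```

Calling `train()` should leave the weights close to 2 and -1 and the bias close to 0.5, the values the made-up data was generated from. Real networks do the same thing with many neurons at once and let a framework compute the gradients.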

A great website if you wish to learn more is machinelearningmastery.com

Teachable Machine

The Teachable Machine relies on a pre-trained image recognition network called MobileNet. This network has been trained to recognize 1,000 objects (such as cats, dogs, cars, fruit, and birds). During the learning process, the network has developed a semantic representation of each image that is maximally useful in distinguishing among classes. This internal representation can be used to quickly learn how to identify a class (an object) the network has never seen before — this is essentially a form of transfer learning.

The Teachable Machine uses a “headless” MobileNet, in which the last layer (which makes the final decision on the 1,000 training classes) has been removed, exposing the output vector of the layer before. The Teachable Machine treats this output vector as a generic descriptor for a given camera image, called an embedding vector. This approach is based on the idea that semantically similar images also give similar embedding vectors. Therefore, to make a classification, the Teachable Machine can simply find the closest embedding vector of something it’s previously seen, and use that to determine what the image is showing now.
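The “headless” idea can be sketched with a toy network. This is not MobileNet or the Teachable Machine code: the layer sizes, the weights, and the function names below are all made up for illustration. The point is only that stopping before the final layer hands back an intermediate vector instead of class scores.

```python
# Toy stand-in for a pre-trained network: one hidden layer plus a classification head.
# The weights are arbitrary made-up numbers, not taken from any real model.

HIDDEN_WEIGHTS = [  # 3 inputs -> 2 hidden units
    [0.5, -0.2, 0.1],
    [0.3, 0.8, -0.5],
]
HEAD_WEIGHTS = [    # 2 hidden units -> 3 class scores
    [1.0, -1.0],
    [-1.0, 1.0],
    [0.5, 0.5],
]


def dense(inputs, weights):
    """One fully connected layer without biases: each row yields one output."""
    return [sum(x * w for x, w in zip(inputs, row)) for row in weights]


def relu(values):
    """Common activation function: negative values become zero."""
    return [max(0.0, v) for v in values]


def embed(pixels):
    """The 'headless' network: stop before the head and return the embedding."""
    return relu(dense(pixels, HIDDEN_WEIGHTS))


def full_network(pixels):
    """The original network: embedding followed by the classification head."""
    return dense(embed(pixels), HEAD_WEIGHTS)
```

Here `full_network([1.0, 0.0, 0.0])` returns three class scores, while `embed([1.0, 0.0, 0.0])` returns the two-number vector that fed them. That second vector is the kind of descriptor that gets compared against previously seen examples.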

This approach is called k-nearest neighbors.

Let’s say we want to distinguish between images of different kinds of objects we hold up to the camera. Our process will be to collect a number of images for each class, compare new images to this dataset, and find the most similar class.

The particular algorithm we’re going to use to find similar images from our collected dataset is called *k-nearest neighbors*. We’ll use the semantic information represented in the logits from MobileNet to do our comparison. In k-nearest neighbors, we look for the k examples most similar to the input we’re making a prediction on and choose the class with the highest representation in that set.

TL;DR: The **k-nearest neighbors** (KNN) algorithm is a simple, supervised machine learning algorithm that can be used to solve both classification and regression problems. It’s easy to implement and understand, but it has a major drawback: it becomes significantly slower as the amount of data in use grows.
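A minimal sketch of k-nearest neighbors classification might look like the following. The two-number “embeddings”, the labels, and the function names are made up for illustration, and Euclidean distance is an assumption: the text above does not say which similarity measure Teachable Machine uses.

```python
import math
from collections import Counter


def euclidean_distance(a, b):
    """Straight-line distance between two vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_classify(examples, query, k=3):
    """Return the most common label among the k stored examples closest to the query.

    `examples` is a list of (embedding, label) pairs.
    """
    # Every prediction measures the distance to every stored example,
    # which is why the algorithm slows down as the dataset grows.
    nearest = sorted(examples, key=lambda ex: euclidean_distance(ex[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical embeddings collected for two classes
EXAMPLES = [
    ((0.90, 0.10), "banana"),
    ((0.80, 0.20), "banana"),
    ((0.85, 0.15), "banana"),
    ((0.10, 0.90), "umbrella"),
    ((0.20, 0.80), "umbrella"),
    ((0.15, 0.95), "umbrella"),
]
```

With this data, `knn_classify(EXAMPLES, (0.7, 0.3))` picks "banana", because the three closest stored examples all carry that label. Choosing an odd `k` for a two-class problem avoids tied votes.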

Read more here.

What can you do with TM? (Yellow Umbrella, anyone?)

Teachable Machine is flexible — you can use files or capture examples live. The entire pathway of using and building depends on your use case. You can even choose to use it entirely on-device, without any webcam or microphone data leaving your computer.

The subsequent steps for using these in your projects/use cases are pretty simple. You open a project and train the model on your custom data, either by uploading images/audio or by capturing data with your webcam or microphone.

This model can then be exported and used in your projects just like any other TensorFlow.js model.

Barron Webster, from the Google Creative Lab, has put together some really amazing walkthroughs to get started with TM. Check out how to build a Bananameter with TM here.

The demo is also out in the wild as a *Glitch* app at https://tm-image-demo.glitch.me/

Happy Questing!

If you want to talk about Communities, Tech, Web & Star Wars, hit me up at @shuvam360 on Twitter.

Originally Published on Medium in 2019
