How to build a simple Machine Learning Classification Model.

EzeanaMichael

Posted on June 24, 2023

When you hear the word classify, what comes to mind is a group of things based on their differences. The same is so when it comes to machine learning, based on features and data gathered, the machine learning model can learn to distinguish between different classes through different patterns found by the machine learning model. In this article, you’ll learn how to build a supervised classification machine-learning model.

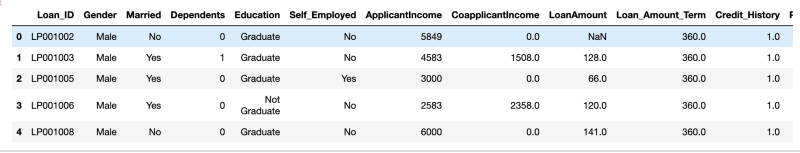

The dataset that would be used is a loaded dataset obtained from kaggle. The model we train in this article would be able to predict the possibility of a person repaying his/her loan based on their circumstances, Y is for loan approval and N is for loan declined.

Training the model.

Import useful libraries.

import numpy as np

import pandas as pd

import matplotlib as plt

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestClassifier

from sklearn.metrics import ConfusionMatrixDisplay, confusion_matrix, classification_report

import seaborn as sns

We import Numpy, a Python based library used for numerical calculations.

Pandas: To read the dataset and store it in data frame format in order to perform analysis, cleaning, and some calculations in the data frame.

Sklearn: Also known as Scikit-learn, which is one of the most popular libraries which contains several classes and some functions for analysis and machine learning models, One of which we’ll use in the article is the Random Forest Classifier. The Random forest classifier model is a very powerful classification model which can achieve high accuracy with little or no hyperparameter tuning.

Reading the data.

Using the pandas' library which has been imported as ‘pd’, we import the dataset using the datasets file path.

df=pd.read_csv('/Users/user/Documents/loan_sanction_train.csv')

To check if you’ve imported the file correctly type:

df.head()

Output:

Expository Data Analysis

Let's find out more about our data before we start training the model.

df.info()

Output:

From above we deduce the presence of 614 rows and 13 columns. We can also see some values are missing in them, to see the number of values missing in each column use,

df.isnull().sum()

Output:

We’ll discuss how to handle missing values in later articles, for now, let's drop all rows with missing values.

df=df.dropna()

We can get the summary of statistical values which include, mean, minimum value, maximum value, standard deviation, 25th percentile, 50th percentile(mid), 75th percentile, and number of the numerical columns in the distribution.

df.describe()

Output:

Data Cleaning And Preparation

Machine learning models only accept numerical and boolean (true or false) values, therefore in our features or columns, we’ll need to convert all strings or objects to integers, floats, or boolean values.

We can check the various categories in the feature with the code.

Example:

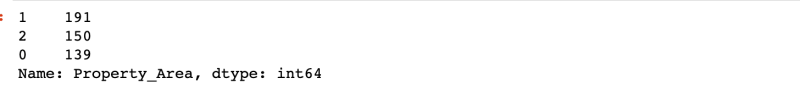

df['Property_Area'].value_counts()

Output:

We can then convert all the features that aren’t numerical or boolean by creating a function, calling the df.astype(“category”).cat.codes, and passing the dataframe and columns through it.

def category_val(df,col):

df[col]=df[col].astype('category')

df[col]=df[col].cat.codes

return df[col]

df['Gender']=category_val(df,'Gender')

df['Married']=category_val(df,'Married')

df['Education']=category_val(df,'Education')

df['Property_Area']=category_val(df,'Property_Area')

df['Self_Employed']=category_val(df,'Self_Employed')

df['Dependents']=category_val(df,'Dependents')

You can confirm by either the value_counts function to see your new categorical values.

df['Property_Area'].value_counts()

Output:

Model Building

Now that we’ve cleaned our data a bit, let’s split the features and target variable, lets's say x are our features and y is our target.

x= df.drop(['Loan_ID','Loan_Status'], axis =1)

y=df['Loan_Status']

We use the dataframe.drop function to remove the feature that won’t be used and the target feature, then we collect the target feature into y.

Next, we would split our data into a training set and a test set. To check how our model would perform on some real-world data, we use a test split, we divide our dataset into a training set and test set. We train our model with the training set and evaluate how it performs on the test set.

To split our model, we use the train_test_split function setting our test size to 30%(generally it's advisable to use 20%-30% of our dataset for the test set, so as not to lose too much data when training the model).

x_train,x_test,y_train,y_test=train_test_split(x,y,test_size=0.3,random_state=True)

Next, we load our classification model to be used, which in this case, is the random forest classifier.

The random forest classifier is built off multiple decision trees, which is a model that branches to a particular result based on certain parameters.

The random forest model is then made of multiple decision trees, which makes thousands of branches on features and evaluates outcomes.

rf=RandomForestClassifier()

After loading our model, we fit the training set into our model.

rf.fit(x_train,y_train)

Model Evaluation

There are several ways to evaluate our machine learning in this article, we’ll consider one.

Accuracy

Accuracy determines how well your model performed when it predicts the test target data compared with the actual target results.

We can check our model accuracy after fitting the model with the simple code.

rf.score(x_test,y_test)

This gives the score of 0.743 which when converted is 74.3%.

That's it, you’ve successfully trained your first machine-learning model. In future articles, we’ll consider how to train unsupervised machine learning models, how to deploy them, and other ways of evaluating machine learning models.

Give a like and feel free to comment your thoughts down below.

Posted on June 24, 2023

Join Our Newsletter. No Spam, Only the good stuff.

Sign up to receive the latest update from our blog.

Related

August 22, 2023