Fine Tuning LLMs to Process Massive Amounts of Data 50x Cheaper than GPT-4

Jamesb

Posted on January 8, 2024

In this article I'll share how I used OpenPipe to effortlessly fine tune Mistral 7B, reducing the cost of one of my prompts by 50x. I've included tips and recommendations for anyone doing this for the first time, because I definitely left some performance gains on the table. Skip to the Fine Tuning Open Recommender section if you're specifically interested in what the fine tuning process looks like. You can always DM me on Twitter (@experilearning) or leave a comment if you have questions!

Background

Over the past month I have been working on Open Recommender, an open source YouTube video recommendation system that takes your Twitter feed as input and recommends relevant YouTube Shorts-style video clips.

I've successfully iterated on the prompts, raising the share of clip recommendations that are interesting and relevant from 50% to over 80%. See my article on the prompt engineering techniques I used. I also implemented a basic web UI you can try out. Open Recommender even has 3 paying users (not my Mum, Dad and sister lol 😃).

So we are one step closer to scaling to millions of users.

But let's not get ahead of ourselves. There are a couple of problems I need to solve before I can even consider scaling things up. Each run is currently very expensive, costing $10-15 or more 😲, with the bulk of the cost coming from pouring huge numbers of video transcript tokens into GPT-4. I expect the cost to get even worse when we add more input sources like blogs and articles!
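To get a feel for why long transcripts dominate the bill, here's a back-of-the-envelope cost estimator. It's a minimal sketch: the per-1K-token prices are assumptions (roughly GPT-4's 8K-context list prices in early 2024), and the token counts in the example are made-up illustrative numbers, not measurements from Open Recommender.

```python
from dataclasses import dataclass

# Assumed prices in USD per 1K tokens (roughly GPT-4 8K-context list prices in early 2024).
# Swap in current prices for whichever model you actually call.
GPT4_INPUT_PER_1K = 0.03
GPT4_OUTPUT_PER_1K = 0.06


@dataclass
class Call:
    """Token usage for a single LLM call."""
    input_tokens: int
    output_tokens: int


def call_cost(call: Call,
              input_per_1k: float = GPT4_INPUT_PER_1K,
              output_per_1k: float = GPT4_OUTPUT_PER_1K) -> float:
    """Cost of one call in USD: input and output tokens are billed separately."""
    return (call.input_tokens / 1000) * input_per_1k + (call.output_tokens / 1000) * output_per_1k


def run_cost(calls: list[Call], **prices: float) -> float:
    """Total cost of one pipeline run, i.e. the sum over all of its calls."""
    return sum(call_cost(c, **prices) for c in calls)


if __name__ == "__main__":
    # Hypothetical run: 50 transcript chunks of ~6K tokens in, ~500 tokens out each.
    run = [Call(input_tokens=6000, output_tokens=500) for _ in range(50)]
    print(f"${run_cost(run):.2f}")  # $10.50
```

In this made-up example, $9.00 of the $10.50 comes from input tokens, which is the pattern described above: the transcripts going in, not the clips coming out, drive the cost.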

It also takes 5-10 minutes to run the entire pipeline for a single user 🫣. There are still some things unrelated to model inference that can be improved here, so I'm not massively concerned, but it would be great if we could speed things up.

Fine Tuning

Fine tuning provides a solution to both the cost and speed issues I'm experiencing. The most powerful LLMs like GPT-4 are trained on a vast amount of internet text so that they understand language in general and can perform a wide range of tasks at a high level. But most applications, outside of highly general AI agents, only need the LLM to be good at one specific task, like summarising documents, filtering search results or reranking recommendations. Instead of a generalist Swiss Army knife LLM, we'd rather have a highly specialised natural language processing function.

Fine-tuning involves taking an LLM with a general understanding of language and training it further on a specific type of task. It turns out that if we only need proficiency at one specific language-based task, we can get away with a much smaller model. For instance, we could fine tune a 7 billion parameter model like Llama or Mistral and achieve performance as good as or better than GPT-4 on our specific task, while being 10x cheaper and faster.

Traditional fine tuning is a massive pain, though. It requires a bunch of setup, manual dataset curation, buying or renting GPUs, and potential frustration if you set something up incorrectly and your fine tuning run blows up halfway through. Then, once you have your fine tuned model, you have to figure out how to host it and manage the inference server, scaling, LLM ops, etc. That's where OpenPipe comes in.

OpenPipe

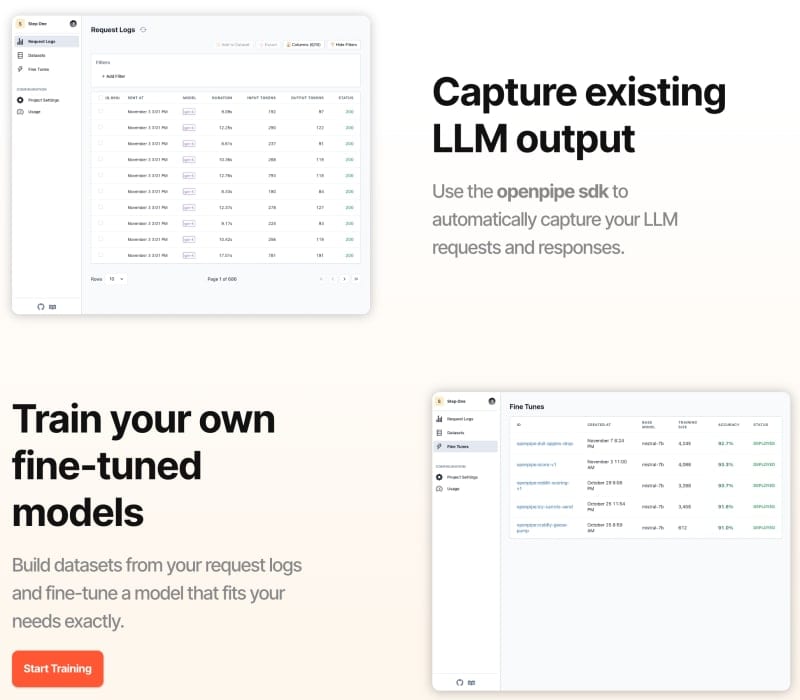

OpenPipe is an open source project that specialises in helping companies incrementally replace expensive OpenAI GPT-4 prompts with faster, cheaper fine-tuned models.

They provide a simple drop-in replacement for OpenAI's library that records your requests in a web interface, where you can curate a dataset from your GPT-4 calls and use it to fine tune a smaller, faster, cheaper open source model.

Effectively OpenPipe helps you use GPT-4 to "teach" a smaller model how to perform at a high level on your specific task through fine tuning.

The OpenAI Wrapper Playbook

Before you can fine tune these models, you need a dataset of examples, because out of the box the smaller models can't compete with GPT-4. So we start out with GPT-4 to collect synthetic training data, then fine tune an open source model once we have enough examples.

So at a high level the playbook for LLM startups looks like this:

- Use the most powerful model available and iterate on your prompts until you get something working reasonably well.

- Record each request, building up a dataset of input and output pairs created with GPT-4.

- Use the dataset to train a smaller, faster, cheaper open source model.
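OpenPipe builds the dataset from your request logs for you, but it helps to know what a training example boils down to. Here's a minimal sketch that turns logged calls into a JSONL file in OpenAI's chat fine-tuning format (one `{"messages": [...]}` object per line). The shape of the log records (`messages` plus a plain-text `response`) is a hypothetical assumption, and function-call responses would need extra handling.

```python
import json
from typing import Iterable


def to_finetune_example(request_messages: list[dict], gpt4_reply: str) -> dict:
    """Turn one logged GPT-4 call into a chat-format training example.

    The prompt messages stay as they were, and GPT-4's reply becomes the final
    assistant turn, which is what the smaller model learns to imitate.
    """
    return {"messages": [*request_messages, {"role": "assistant", "content": gpt4_reply}]}


def write_jsonl(logs: Iterable[dict], path: str) -> int:
    """Write one training example per line and return how many were written.

    Each log record is assumed (hypothetically) to look like
    {"messages": [...], "response": "..."}.
    """
    count = 0
    with open(path, "w", encoding="utf-8") as f:
        for log in logs:
            example = to_finetune_example(log["messages"], log["response"])
            f.write(json.dumps(example) + "\n")
            count += 1
    return count
```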

✨ When exactly you decide to fine tune will vary depending on your use case. If you use LLMs for heavy amounts of text processing, you might need to do it before you scale, to avoid huge OpenAI bills. But if you only make a moderate number of calls, you can focus more on prompt iteration and finding product-market fit before fine tuning. It all depends on your revenue, model usage and costs.

Fine Tuning Open Recommender

Here's what the process looked like in practice for Open Recommender.

Set Up OpenPipe

Sign up for an OpenPipe API key.

```
OPENPIPE_API_KEY="<your key>"
```

Then replace the OpenAI library with OpenPipe's wrapper. Replace this line:

```python
from openai import OpenAI
```

with this one:

```python
from openpipe import OpenAI
```

✨ Keep prompt logging turned on. It's enabled by default, but I disabled it during the prompt iteration phase, thinking I'd bloat the request logs with a bunch of data I'd need to filter out later. In hindsight, it's better to keep it on from the start and use OpenPipe's request tagging feature to save the prompt_id on each request log. Later, when you want to create a dataset for fine tuning, you can easily filter your dataset down to a particular version of your prompt.
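Here's a minimal sketch of tagging requests with a prompt_id. The `openpipe={"tags": {...}}` argument is based on my reading of the OpenPipe Python SDK README at the time of writing, so check the current docs before relying on it; the usage comment also assumes the client picks up both API keys from the environment, and `recommend_clips_v3` is a hypothetical prompt_id. The helper only builds the keyword arguments, so it runs without network access or API keys.

```python
def logged_completion_kwargs(prompt_id: str, messages: list[dict], model: str = "gpt-4") -> dict:
    """Build keyword arguments for client.chat.completions.create(...).

    The "openpipe" entry attaches tags to the logged request, following the
    OpenPipe Python SDK README as I understand it; verify against current docs.
    """
    return {
        "model": model,
        "messages": messages,
        # Tag every request with the prompt version so the logs can be filtered later.
        "openpipe": {"tags": {"prompt_id": prompt_id}},
    }


# Usage (assumes `pip install openpipe` and OPENAI_API_KEY / OPENPIPE_API_KEY set):
#
#   from openpipe import OpenAI
#   client = OpenAI()
#   messages = [{"role": "user", "content": "..."}]
#   completion = client.chat.completions.create(
#       **logged_completion_kwargs("recommend_clips_v3", messages)  # hypothetical id
#   )
```

Bumping the prompt_id whenever you change the prompt (v1, v2, v3, ...) is what makes it easy to filter down to a single prompt version later.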

Creating a Dataset

The next step is to run your prompts a bunch to create some data!

After running my prompts a bunch on my initial set of users, I had a moderately sized dataset. I decided to do a quick and dirty fine tune to see what kind of performance I could get out of Mistral 7B without any dataset filtering or augmentation.

✨ I exported all of my request logs and did some quick analysis with a script to figure out which prompts in my pipeline account for the bulk of the latency and cost. It makes sense to prioritise fine tuning for the costliest and slowest prompts. For Open Recommender, the "Recommend Clips" prompt, which is responsible for cutting long YouTube transcripts into clips, is very slow and costly, so I started there.
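A minimal sketch of that kind of analysis is below. It assumes each exported log record has `prompt_id`, `cost_usd` and `latency_ms` fields; those names are hypothetical, so map them to whatever your export actually contains. The numbers in the usage example are made up.

```python
from collections import defaultdict


def summarise_by_prompt(logs: list[dict]) -> list[tuple[str, float, float]]:
    """Return (prompt_id, total_cost_usd, total_latency_s) per prompt, costliest first.

    The field names are hypothetical; map them to whatever your export contains.
    Requests without a prompt_id are grouped under "untagged".
    """
    cost = defaultdict(float)
    latency_ms = defaultdict(float)
    for log in logs:
        pid = log.get("prompt_id", "untagged")
        cost[pid] += log["cost_usd"]
        latency_ms[pid] += log["latency_ms"]
    rows = [(pid, cost[pid], latency_ms[pid] / 1000) for pid in cost]
    return sorted(rows, key=lambda row: row[1], reverse=True)


if __name__ == "__main__":
    # Made-up log records, just to show the output format.
    logs = [
        {"prompt_id": "recommend_clips", "cost_usd": 0.21, "latency_ms": 40_000},
        {"prompt_id": "recommend_clips", "cost_usd": 0.19, "latency_ms": 35_000},
        {"prompt_id": "rerank_clips", "cost_usd": 0.05, "latency_ms": 8_000},
    ]
    for pid, usd, secs in summarise_by_prompt(logs):
        print(f"{pid:<16} ${usd:.2f}  {secs:.0f}s")
```

Summing latency per prompt (rather than averaging) shows which prompt actually eats the most wall-clock time across a run, which is what matters for the 5-10 minute pipeline.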

In the OpenPipe request log UI you can add lots of different filters to create your dataset. I just did a simple filter for all requests with a particular prompt name.

Then you can create the dataset and get to fine tuning!

Which Model?

Before you start a fine tuning job, you need to pick which model to fine tune. Since this is my first time doing this, I can't give very specialised advice, but from what I've read, larger models generally have more capacity and perform better across a wider range of tasks, but they require more compute and time for both fine tuning and inference. Smaller models are more efficient but may not capture features as complex as larger models can.

I decided to go with Mistral 7B because my prompt is quite simple: it's a single task with a single function call response. I also want to speed up the pipeline. If the outcome with a smaller model is good enough, I can avoid having to optimise the dataset or switch to a larger, slower model.

A few hours later, the fine tuned model had been trained and automatically deployed!

Evaluation

OpenPipe's evaluation page shows a comparison between GPT-4's output and the fine tuned model's output on the test set (OpenPipe automatically holds out 20% of your dataset for testing).
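OpenPipe handles the split automatically, but if you want the same kind of hold-out set for your own evaluations on an exported dataset, a seeded random split is enough. This is a minimal sketch and not necessarily how OpenPipe chooses its test rows.

```python
import random


def train_test_split(examples: list, test_fraction: float = 0.2, seed: int = 0) -> tuple[list, list]:
    """Shuffle a copy of `examples` with a fixed seed and hold out `test_fraction` of it.

    Returns (train, test). The input list is left untouched.
    """
    if not 0 < test_fraction < 1:
        raise ValueError("test_fraction must be between 0 and 1")
    shuffled = examples[:]
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]
```

Fixing the seed keeps the test set stable across runs, so different fine tunes get compared on the same examples.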

From a quick scan it looks promising...

But the only way to know for sure is to play with it. I decided to run it on three test cases:

| Video | Tweets | Relatedness |
|---|---|---|
| Lex Fridman Podcast with Jeff Bezos | Three tweets about LLM recommender systems and LLM data processing pipelines | Medium |
| Advanced RAG Tutorial | One tweet about AI therapists with advanced RAG | Strong |
| Podcast about plants | Three tweets about LLM recommender systems and LLM data processing pipelines | Unrelated |

Medium

- ❌ 2 clips hallucinated that the user's tweets indicated interests in Amazon / startups.

- ✅ 2 clips correctly linked moderately related parts of the transcript to the user's tweets about data pipelines.

Strong

- ✅ 1 clip correctly extracted the most relevant section of the transcript.

Unrelated

- ❌ 1 clip hallucinated that the user's tweets indicated interests in plants.

So there was definitely some drop in performance compared to GPT-4. I suspect this was largely due to my dataset: I realised it contained very few cases where the transcript was unrelated to the tweets, because I was collecting data from the end of the pipeline, after it had already been through a bunch of filter steps.

✨ Because these smaller models have weaker reasoning abilities than GPT-4, you need to make sure your training dataset covers every kind of input the model will see, including the unrelated and edge cases.
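One cheap way to catch this before training is to label each example with the kind of input it represents and check the distribution. A minimal sketch, assuming you've already labelled examples by relatedness (e.g. "strong", "medium", "unrelated", mirroring the test cases above); the labels, the 10% threshold and the counts in the usage example are all hypothetical.

```python
from collections import Counter


def underrepresented(labels: list[str], expected: set[str], min_share: float = 0.1) -> dict[str, float]:
    """Return {category: share} for each expected category below `min_share` of the dataset.

    Categories that never appear get a share of 0.0, so missing cases are flagged too.
    """
    counts = Counter(labels)
    total = len(labels)
    shares = {cat: (counts[cat] / total if total else 0.0) for cat in expected}
    return {cat: share for cat, share in sorted(shares.items()) if share < min_share}


if __name__ == "__main__":
    # Made-up counts: lots of related examples, almost no unrelated ones.
    labels = ["strong"] * 70 + ["medium"] * 28 + ["unrelated"] * 2
    print(underrepresented(labels, {"strong", "medium", "unrelated"}))
    # {'unrelated': 0.02}
```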

That said, the fine tuned model is still very usable given its performance on strongly related transcripts. Additionally, since my pipeline has a re-ranking step that filters and orders the clips after the clip creation stage, any unwanted clips should get filtered out. Couple that with the 50x price decrease compared to GPT-4, and it's a no brainer!

Future Improvements

We can actually add a step to the LLM startup playbook above. After collecting our dataset from GPT-4, we can improve its quality by filtering out or editing failed cases. A higher-quality dataset can even help the fine tuned model outperform GPT-4 on our task.

I've been thinking about ways to improve the dataset filtering and curation workflow. OpenPipe already lets you attach data to your request logs, which is useful for tagging each request with the name of the prompt. The OpenPipe team is also working on letting you add extra data to a request after it has been logged. Then you could use user feedback to filter down the dataset, making dataset curation a lot less work.

For example, in Open Recommender, users' likes and dislikes on clips could be used to filter the request log.
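Until that feature lands, you could do the same thing on exported logs. A minimal sketch, assuming you can join each logged request to the likes and dislikes its clips received via a hypothetical `request_id`; the net-likes threshold is also an assumption.

```python
def filter_by_feedback(logs: list[dict], feedback: dict[str, tuple[int, int]],
                       min_net_likes: int = 1) -> list[dict]:
    """Keep logged requests whose output users liked more than they disliked.

    `feedback` maps a (hypothetical) request id to (likes, dislikes) collected
    from the app. Requests with no feedback count as (0, 0), so with the
    default threshold they are dropped: we can't tell whether they were good.
    """
    kept = []
    for log in logs:
        likes, dislikes = feedback.get(log["request_id"], (0, 0))
        if likes - dislikes >= min_net_likes:
            kept.append(log)
    return kept
```

Raising `min_net_likes` trades dataset size for quality; with a small dataset you may prefer to only drop clearly disliked outputs.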

Finally, OpenPipe supports a couple of cool additional features like GPT-4-powered LLM evals and token pruning. I'll do a walkthrough of those when I do more intensive fine tunes later.

Next Steps

That's it for now! Thanks for reading. If you want to try out the Open Recommender Beta or have questions about how to use OpenPipe, please DM me on Twitter @experilearning.
