Eden AI

Posted on April 26, 2023

In today's digital era, search engines have become an indispensable tool for individuals to access information on almost any topic effortlessly and promptly.

This article aims to provide a step-by-step guide to build a search engine in Python using text embeddings. Word embeddings encode text into a numerical format in order to measure the similarity between two text pieces.

By following this tutorial, you will be able to construct your own search API using Eden AI embeddings and subsequently deploy it to Flask with ease.

Prerequisites:

- Basic knowledge of Python

- Familiarity with APIs

- Eden AI Account (API key)

Step 1. Prepare the Dataset

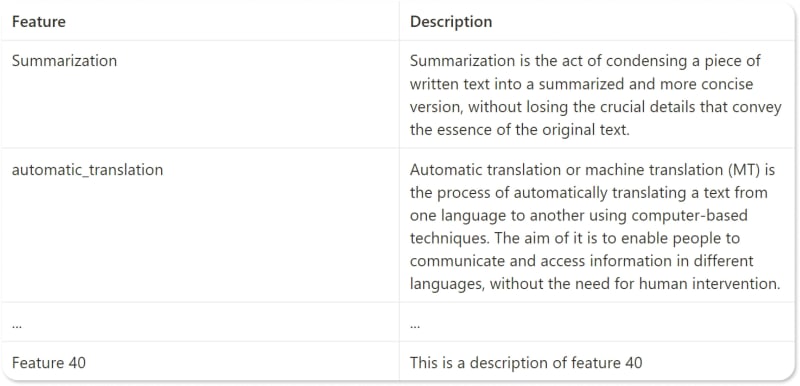

First, prepare your dataset. For the purpose of this tutorial, we'll use a dataset of 40 AI features, where each feature is described by a short text description. The dataset can be in any format, but for simplicity, we'll use a CSV file. Here's a sample of what the dataset might look like:

edenai_dataset = pd.read_csv('edenai_dataset.csv')

Step 2. Use Eden AI API to Generate Embeddings.

Eden AI offers an extensive collection of APIs, encompassing pre-trained embeddings among others. For this tutorial, we will use Open AI to transform the text descriptions into numerical representations. This tutorial applies to other providers, such as Cohere (also available on Eden AI). However, bear in mind that when representing your text as embeddings, you should only use a single provider and not merge embeddings from multiple providers.

# Load your dataset

edenai_dataset = pd.read_csv('edenai_dataset.csv')

# Apply embeddings to your dataset

subfeatures_description['description-embeddings'] = subfeatures_description.apply(lambda x: edenai_embeddings(x['Description'], “openai”), axis=1)

In the above code, we are applying Eden AI's embeddings API to each row of the "Description" column within the dataset, and saving the resulting embeddings in a new column called "description-embeddings".

Here's the code to generate embeddings using the Eden AI API:

def edenai_embeddings(text : str, provider: str):

"""

This function sends a request to the Eden AI API to generate embeddings for a given text using a specified provider.

"""

url = "https://api.edenai.run/v2/text/embeddings"

headers = {

"accept": "application/json",

"content-type": "application/json",

"authorization": "Bearer YOUR_API_KEY"

}

payload = {

"response_as_dict": True,

"attributes_as_list": False,

"texts": [text],

"providers": provider

}

response = requests.post(url, json=payload, headers=headers).json()

try:

return response[provider]['items'][0]['embedding']

except:

return None

NOTE: Don’t forget to replace with your actual Eden AI API key.

Step 3. Build your search API with Flask

Now that we have the embeddings for each description of our dataset we can build a REST API with Flask that allows users to search for features in the dataset based on their query.

Create a virtual environments for your Flask project

First, you'll need to create a virtual environment for your Flask project and install the required dependencies. Complete the following steps to do so:

Open up your command line interface and navigate to the directory where you want to create your Flask project.

Create a new virtual environment using the command python3 -m venv . Replace with a name of your choice, this will create a new virtual environment with its own Python interpreter and installed packages, separate from your system's Python installation.

Activate the virtual environment by running the command source /bin/activate.

Install the required dependencies for the project by running the following commands:

pip install flask

pip install pandas

pip install requests

pip install numpy

pip install flask-api

5. Import the dataset in your project and create two python file search.py that’s going to have the logic for our search and app.py for the REST API. This is the structure of our project:

6. In the file "search.py", we will implement the process of calling the Eden AI embeddings API (which was demonstrated earlier), as well as calculating the cosine similarity.

def cosine_similarity(embedding1: list, embedding2: list):

"""

Computes the cosine similarity between two vectors.

"""

vect_product = np.dot(embedding1, embedding2)

norm1 = np.linalg.norm(embedding1)

norm2 = np.linalg.norm(embedding2)

cosine_similarity = vect_product / (norm1 * norm2)

return cosine_similarity

- Our objective is to convert the user's search query into embeddings, and subsequently read the dataset to measure the cosine similarity between the query embeddings and the subfeature description embeddings. Our output will be a sorted list of the subfeatures based on their similarity scores, starting with the most similar:

def search_subfeature(description: str):

"""

This function searches a dataset of subfeature descriptions and returns a list of the subfeatures

with the highest cosine similarity to the input description.

"""

# load the subfeatures dataset into a pandas dataframe

subfeatures = pd.read_csv('subfeatures_dataset.csv')

# generate an embedding for the input description using the OpenAI provider

embed_description = edenai_embeddings(description, 'openai')

results = []

# iterate over each row in the subfeatures dataset

for subfeature_index, subfeature_row in subfeatures.iterrows():

similarity = cosine_similarity(

ast.literal_eval(subfeature_row['Description Embeddings']),

embed_description)

# compute the cosine similarity between the query and all the rows in the corpus

results.append({

"score": similarity,

"subfeature": subfeature_row['Name'],

})

results = sorted(results, key=lambda x: x['score'], reverse=True)

return results[:5]

- In app.py, we will create an instance of our Flask project and define an endpoint for searching sub-features in our dataset. This endpoint will call the search_subfeature() function, which takes a description (query) as input.

from search import search_subfeature

from flask import Flask, request, jsonify

from flask_api import status

app = Flask(__name__)

@app.route('/search', methods=['GET'])

def search_engine():

search_result = search_subfeature(request.args.get('description'))

return jsonify(search_result), status.HTTP_200_OK

if __name__ == "__main__":

app.run(debug=True)

- Finally, start the Flask app by running the command flask --app app.py --debug run.

If everything runs successfully, you should see a message in your command line interface that says "Running on http://127.0.0.1:5000/".

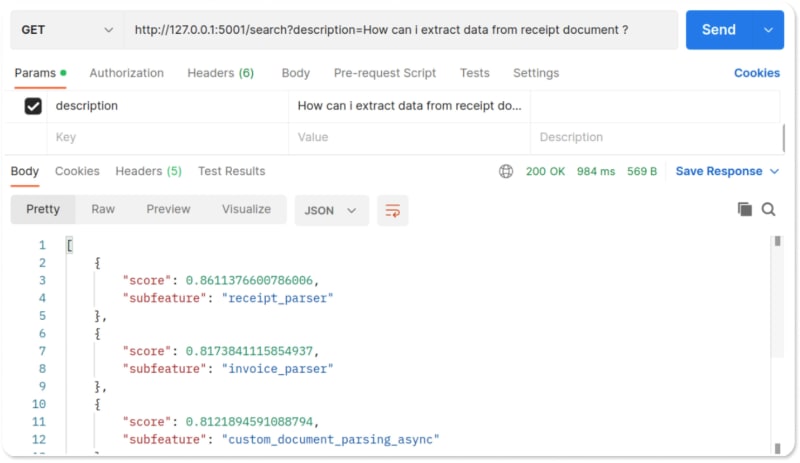

To test our Search Engin API, we can send a GET request to the http://127.0.0.1:5000/search endpoint with the query as the payload. To do this, we can use a tool like Postman, which allows us to easily send HTTP requests and view the responses.

The most relevant result for the query "How can I extract data from a receipt document?" is indeed the receipt_parser subfeature.

You can access to the full code in this github repository : https://github.com/Daggx/embedding-search-engine

Posted on April 26, 2023

Join Our Newsletter. No Spam, Only the good stuff.

Sign up to receive the latest update from our blog.