Understanding the Brain Inspired Approach to AI

EdemGold

Posted on May 7, 2023

"Our Intelligence is what makes us human and AI is an extension of that Quality" - Yann LeCun

Since the advent of Neural Networks (also known as artificial neural networks), the AI industry has enjoyed unparalleled success. Neural Networks are the driving force behind modern AI systems and they are modelled after the human brain. Modern AI research involves the creation and implementation of algorithms that mimic the neural processes of the human brain, with the aim of creating systems that learn and act in ways similar to human beings. In this article, we will attempt to understand the brain inspired approach to building AI systems.

How I hope to approach this

This article will begin by providing background history on how researchers began to model AI to mimic the human brain, and end by discussing the challenges currently being faced by researchers attempting to imitate the human brain. Below is a description of what to expect from each section.

It is worth noting that while this topic is an inherently broad one, I will be as brief and succinct as possible so as to maintain interest while still providing a broad overview. I plan to treat sub-topics which have more intricate sub-branches as standalone articles, and I will, of course, leave references at the end of the article.

History of the brain inspired approach to AI:

Here we'll discuss how scientists Norbert Wiener and Warren McCulloch brought about the convergence of neuroscience and computer science, how Frank Rosenblatt's Perceptron was the first real attempt to mimic human intelligence, and how its failure brought about groundbreaking work which would serve as the platform for Neural Networks.

How the human brain works and how it relates to AI systems:

In this section we'll dive into the biological basis for the brain inspired approach to AI. We will discuss the basic structure and functions of the human brain, understand its core building block, the neuron, and see how neurons work together to process information and enable complex actions.

The Core Principles behind the brain inspired approach to AI:

Here we will discuss the fundamental concepts behind the brain inspired approach to AI. We will explain concepts such as neural networks, hierarchical processing, and plasticity, and how techniques such as parallel processing, distributed representations, and recurrent feedback aid AI in mimicking the brain's functioning.

Challenges in building AI systems modelled after the human brain:

Here we will talk about the challenges and limitations inherent in attempting to build systems that mimic the human brain, such as the complexity of the brain and the lack of a unified theory of cognition, and explore the ways these challenges and limitations are being addressed.

Let us begin!

The History of the brain inspired approach to AI

The drive to build machines that are capable of intelligent behaviour owes much of its inspiration to MIT professor Norbert Wiener. Wiener was a child prodigy who could read by the age of three. He had a broad knowledge of various fields such as mathematics, neurophysiology, medicine, and physics. Wiener believed that the main opportunities in science lay in exploring what he termed Boundary Regions: areas of study that are not clearly within a certain discipline but are rather a mixture of disciplines, like the study of medicine and engineering coming together to create the field of Medical Engineering. He is quoted as saying:

"If the difficulty of a physiological problem is mathematical in nature, ten physiologists ignorant of mathematics will get precisely as far as one physiologist ignorant of mathematics"

In the year 1934, Wiener and a couple of other academics began gathering monthly to discuss papers involving boundary region science. Wiener was quoted as saying:

"It was a perfect catharsis for half baked ideas, insufficient self-criticism, exaggerated self confidence and pomposity"

From these sessions and from his own personal research, Wiener learned about new research on biological nervous systems as well as about pioneering work on electronic computers, and his natural inclination was to blend these two fields. And so a relationship between neuroscience and computer science was formed, and this relationship became the cornerstone for the creation of artificial intelligence as we know it.

After World War II, Wiener began forming theories about intelligence in both humans and machines, and this new field was named Cybernetics. Wiener had successfully gotten scientists talking about the possibility of biology fusing with engineering, and one of those scientists was a neurophysiologist named Warren McCulloch. McCulloch had earlier left Haverford College for Yale to study philosophy and psychology. While attending a scientific conference in New York, he came in contact with papers written by colleagues on biological feedback mechanisms. The following year, in collaboration with his brilliant 18-year-old protégé Walter Pitts, McCulloch proposed a theory about how the brain works, a theory that would help foster the widespread perception that computers and brains function essentially in the same way.

They based their conclusions on research by McCulloch on the possibility of neurons processing binary numbers (for those unfamiliar, computers communicate via binary numbers). This theory became the foundation for the first model of an artificial neural network, which was named the McCulloch-Pitts Neuron (MCP).

The MCP served as the foundation for the creation of the first ever neural network, which came to be known as the Perceptron. The Perceptron was created by psychologist Frank Rosenblatt who, inspired by the synapses in the brain, decided that if the human brain could process and classify information through synapses (communication between neurons), then perhaps a digital computer could do the same via a neural network. The Perceptron essentially scaled the MCP neuron from one artificial neuron into a network of neurons. Unfortunately, the Perceptron had some technical challenges which limited its practical application. The most notable of its limitations was its inability to perform complex operations: a single-layer perceptron can only separate two classes with a straight line, so it could not, for example, classify between a cat, a dog, and a bird, or learn a simple non-linear function such as XOR.
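To make the idea concrete, here is a minimal sketch of a McCulloch-Pitts style threshold unit and a single perceptron trained with the classic perceptron learning rule. The function names, learning rate, and epoch count are my own illustrative choices, not taken from the original papers. The perceptron learns the AND function, which is linearly separable, but can never get all four XOR cases right.

```python
def mcp_neuron(inputs, weights, threshold):
    """McCulloch-Pitts style unit: fire (1) if the weighted sum reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


def predict(inputs, weights, bias):
    """A single perceptron: weighted sum plus bias, followed by a hard threshold."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if total >= 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    """Perceptron learning rule: nudge the weights only when a prediction is wrong."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(inputs, weights, bias)
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias


AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

and_w, and_b = train_perceptron(AND_DATA)
xor_w, xor_b = train_perceptron(XOR_DATA)

and_correct = sum(predict(x, and_w, and_b) == t for x, t in AND_DATA)
xor_correct = sum(predict(x, xor_w, xor_b) == t for x, t in XOR_DATA)

print("AND cases classified correctly:", and_correct, "of 4")
print("XOR cases classified correctly:", xor_correct, "of 4")
```

No matter how long the XOR run trains, a single straight-line boundary cannot separate its two classes, which is the kind of limitation that stalled early neural network research.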

In the year 1969, a book published by Marvin Minsky and Seymour Papert titled Perceptrons laid out in detail the flaws of the Perceptron, and due to that, research on Artificial Neural Networks stagnated until the proposal of Back Propagation by Paul Werbos. Back Propagation hoped to solve the issue of classifying complex data that hindered the industrial application of Neural Networks at the time. It was inspired by synaptic plasticity, the way the brain modifies the strengths of connections between neurons and, as such, improves performance. Back Propagation was designed to mimic the way the brain strengthens connections between neurons, via a process called weight adjustment.

Despite the early proposal by Paul Werbos, the concept of back propagation only gained widespread adoption when researchers such as David Rumelhart, Geoffrey Hinton, and Ronald Williams published papers that demonstrated the effectiveness of back propagation for training neural networks. The implementation of back propagation led to the creation of Deep Learning, which powers most of the AI systems available in the world.
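As a rough illustration of weight adjustment, the sketch below trains a tiny network (two inputs, two hidden units, one output) on XOR using back propagation and plain gradient descent. The network size, learning rate, step count, and random seed are assumptions chosen for brevity, and it is written in pure Python rather than with a deep learning library. It only demonstrates that the error shrinks as the weights are adjusted; it does not promise a perfect fit.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(net, x):
    """Forward pass: two inputs -> two hidden units -> one output."""
    hidden = [
        sigmoid(net["w_h"][j][0] * x[0] + net["w_h"][j][1] * x[1] + net["b_h"][j])
        for j in range(2)
    ]
    output = sigmoid(net["w_o"][0] * hidden[0] + net["w_o"][1] * hidden[1] + net["b_o"])
    return hidden, output


def total_loss(net, data):
    """Sum of squared errors (halved) over the whole data set."""
    return sum(0.5 * (forward(net, x)[1] - t) ** 2 for x, t in data)


def backprop_step(net, data, lr):
    """One full-batch update: accumulate gradients via the chain rule, then adjust weights."""
    grad_w_h = [[0.0, 0.0], [0.0, 0.0]]
    grad_b_h = [0.0, 0.0]
    grad_w_o = [0.0, 0.0]
    grad_b_o = 0.0
    for x, t in data:
        hidden, output = forward(net, x)
        # Error signal at the output unit.
        delta_o = (output - t) * output * (1.0 - output)
        grad_b_o += delta_o
        for j in range(2):
            grad_w_o[j] += delta_o * hidden[j]
            # Error signal passed backwards to hidden unit j.
            delta_h = delta_o * net["w_o"][j] * hidden[j] * (1.0 - hidden[j])
            grad_b_h[j] += delta_h
            for i in range(2):
                grad_w_h[j][i] += delta_h * x[i]
    # Weight adjustment: move every weight a small step against its gradient.
    for j in range(2):
        net["w_o"][j] -= lr * grad_w_o[j]
        net["b_h"][j] -= lr * grad_b_h[j]
        for i in range(2):
            net["w_h"][j][i] -= lr * grad_w_h[j][i]
    net["b_o"] -= lr * grad_b_o


XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

rng = random.Random(0)  # fixed seed so the run is repeatable
net = {
    "w_h": [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(2)],
    "b_h": [rng.uniform(-1, 1) for _ in range(2)],
    "w_o": [rng.uniform(-1, 1) for _ in range(2)],
    "b_o": rng.uniform(-1, 1),
}

loss_before = total_loss(net, XOR_DATA)
for _ in range(2000):
    backprop_step(net, XOR_DATA, lr=0.5)
loss_after = total_loss(net, XOR_DATA)

print(f"loss before training: {loss_before:.4f}")
print(f"loss after training:  {loss_after:.4f}")
```

The hidden layer is what a single perceptron lacks: the error at the output is passed backwards so that the hidden weights can be adjusted too.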

"People are smarter than today's computers because the brain employs a basic computational architecture that is more suited to deal with a central aspect of the natural information processing tasks that people are so good at." - Parallel Distributed Processing

How the human brain works and how it relates to AI systems

We have discussed how researchers began to model AI to mimic the human brain. Let us now look at how the brain works and define the relationship between the brain and AI systems.

How the brain works: A simplified description

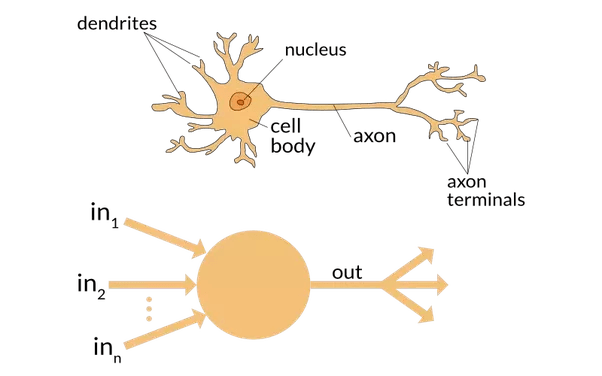

The human brain essentially processes thoughts via the use of neurons. A neuron is made up of three core sections: the Dendrite, the Soma, and the Axon. The Dendrite is responsible for receiving signals from other neurons, the Soma processes information received from the Dendrite, and the Axon is responsible for transferring the processed information to the next Dendrite in the sequence.

To grasp how the brain processes thought, imagine you see a car coming towards you. Your eyes immediately send electrical signals to your brain through the optic nerve, and the brain forms a chain of neurons to make sense of the incoming signal. The first neuron in the chain collects the signal through its Dendrites and sends it to the Soma for processing. After the Soma finishes its task, it sends the signal to the Axon, which then sends it to the Dendrite of the next neuron in the chain. The connection between Axons and Dendrites when passing on information is called a Synapse. The entire process continues until the brain finds a Spatiotemporal Synaptic Input (that's scientific lingo for "the brain continues processing until it finds an optimal response to the signal sent to it"), and then it sends signals to the necessary effectors, e.g. your legs, telling them to run away from the oncoming car.

The relationship between the brain and AI systems

The relationship between the brain and AI is largely mutually beneficial, with the brain being the main source of inspiration behind the design of AI systems and advances in AI leading to a better understanding of the brain and how it works.

There is a reciprocal exchange of knowledge and ideas when it comes to the brain and AI, and there are several examples that attest to the positively symbiotic nature of this relationship:

Neural Networks: Arguably the most significant impact made by the human brain on the field of Artificial Intelligence is the creation of Neural Networks. In essence, Neural Networks are computational models that mimic the function and structure of biological neurons. The architecture of neural networks and their learning algorithms are largely inspired by the way neurons in the brain interact and adapt.

Brain Simulations: AI systems have been used to simulate the human brain and study its interactions with the physical world. For example, researchers have used Machine Learning techniques to simulate the activity of biological neurons involved in visual processing, and the results have provided insight into how the brain handles visual information.

Insights into the brain: Researchers have begun using Machine Learning algorithms to analyse and gain insights from brain data such as fMRI scans. These insights serve to identify patterns and relationships which would otherwise have remained hidden. The insights gained can help in the understanding of internal cognitive functions, memory, and decision making, and also aid in the treatment of brain-related illnesses such as Alzheimer's.

Core Principles behind the brain inspired approach to AI

Here we will discuss several concepts which aid AI in imitating the way the human brain functions. These concepts have helped AI researchers create more powerful and intelligent systems which are capable of performing complex tasks.

Neural Networks

As discussed earlier, neural networks are arguably the most significant impact made by the human brain on the field of Artificial Intelligence. In essence, Neural Networks are computational models that mimic the function and structure of biological neurons. The networks are made up of various layers of interconnected nodes, called artificial neurons, which aid in the processing and transmitting of information, similar to what is done by dendrites, somas, and axons in biological neural networks. Neural Networks are architected to learn from past experiences the same way the brain does.

Distributed Representations

Distributed representations are simply a way of encoding concepts or ideas in a neural network as a pattern of activity spread across several nodes. For example, the concept of smoking could be represented (encoded) using a certain set of nodes in a neural network, so if that network comes across an image of a man smoking, it uses those selected nodes to make sense of the image (it's a lot more complex than that, but this will do for the sake of simplicity). This technique aids AI systems in remembering complex concepts or relationships between concepts, the same way the brain recognizes and remembers complex stimuli.
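Here is a minimal sketch of the idea, assuming each concept is stored as a pattern of activity over four nodes. The concepts and activation values are invented purely for illustration. An incoming, slightly noisy activation is matched to the stored pattern it overlaps with the most, using cosine similarity.

```python
import math

# Hypothetical activation patterns over four nodes (values invented for illustration).
PATTERNS = {
    "smoking": [0.9, 0.8, 0.1, 0.0],
    "vaping": [0.8, 0.9, 0.2, 0.0],
    "swimming": [0.0, 0.1, 0.9, 0.8],
}


def cosine(a, b):
    """Cosine similarity: 1.0 means the two patterns point in the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def closest_concept(activation):
    """Return the stored concept whose pattern best matches the given activation."""
    return max(PATTERNS, key=lambda name: cosine(activation, PATTERNS[name]))


noisy_input = [1.0, 0.7, 0.0, 0.1]  # an imperfect version of the "smoking" pattern
print("best match:", closest_concept(noisy_input))
print("smoking vs vaping:  ", round(cosine(PATTERNS["smoking"], PATTERNS["vaping"]), 3))
print("smoking vs swimming:", round(cosine(PATTERNS["smoking"], PATTERNS["swimming"]), 3))
```

Because related concepts share active nodes, their patterns end up similar, which is one reason distributed codes cope well with noisy or partial input.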

Recurrent Feedback

This is a technique where the output of a neural network is fed back in as input, allowing the network to integrate its previous output as extra input data. This is similar to how the brain makes use of feedback loops in order to adjust its model based on previous experiences.
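A minimal sketch of the feedback loop, assuming a single recurrent unit with hand-picked weights (`w_in` and `w_rec` are illustrative values, not learned ones): at every step the unit's previous output is mixed back in with the new input, so earlier inputs keep influencing later outputs.

```python
import math


def run_recurrent(inputs, w_in=0.5, w_rec=0.8):
    """Feed a sequence through one recurrent unit and return its state after each step."""
    state = 0.0
    states = []
    for x in inputs:
        # The previous state is fed back in alongside the current input.
        state = math.tanh(w_in * x + w_rec * state)
        states.append(state)
    return states


with_pulse = run_recurrent([1.0, 0.0, 0.0])
without_pulse = run_recurrent([0.0, 0.0, 0.0])

print("state after an early pulse:", [round(s, 3) for s in with_pulse])
print("state with no input at all:", without_pulse)
```

The last two inputs are identical in both runs, yet the final states differ, because the first run still carries a trace of the early pulse.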

Parallel Processing

Parallel processing involves breaking up complex computational tasks into smaller pieces and processing those pieces on separate processors in an attempt to improve speed. This approach enables AI systems to process more input data faster, similar to how the brain is able to perform different tasks at the same time (multi-tasking).
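The sketch below shows the split-then-combine pattern using Python's standard `concurrent.futures` module. The helper names are hypothetical, and a thread pool is used only to keep the example short and portable; for CPU-heavy Python work a process pool or a GPU would normally be the better fit.

```python
from concurrent.futures import ThreadPoolExecutor


def chunked(values, n_chunks):
    """Split a list into at most n_chunks contiguous pieces."""
    size = max(1, (len(values) + n_chunks - 1) // n_chunks)
    return [values[i:i + size] for i in range(0, len(values), size)]


def sum_of_squares(chunk):
    """The small task each worker performs on its own piece of the data."""
    return sum(v * v for v in chunk)


def parallel_sum_of_squares(values, workers=4):
    """Process the pieces concurrently, then combine the partial results."""
    chunks = chunked(values, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(sum_of_squares, chunks))
    return sum(partials)


data = list(range(1000))
print("parallel result:  ", parallel_sum_of_squares(data))
print("sequential result:", sum_of_squares(data))
```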

Attention Mechanisms

This is a technique which enables AI models to focus on specific parts of the input data. It is commonly employed in fields such as Natural Language Processing, which contain complex and cumbersome data. It is inspired by the brain's ability to attend to only specific parts of a largely distracting environment, like your ability to tune into and interact in one conversation out of a cacophony of conversations.
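One common form of this idea is scaled dot-product attention. The sketch below is a simplified, single-query version in pure Python, with made-up keys and values: each key is scored against the query, the scores are turned into weights that sum to one, and the output is the weighted mix of the values.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Score each key against the query, then blend the values by those scores."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return weights, output


# Illustrative data: the query lines up with the first key and opposes the third.
query = [3.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
values = [[10.0, 0.0], [0.0, 10.0], [-10.0, 0.0]]

weights, output = attend(query, keys, values)
print("attention weights:", [round(w, 3) for w in weights])
print("blended output:   ", [round(o, 3) for o in output])
```

Most of the weight lands on the first item, which is the model's way of "tuning into one conversation" while mostly ignoring the rest.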

Reinforcement Learning

Reinforcement Learning is a technique used to train AI systems which was inspired by how human beings learn skills through trial and error. It involves an AI agent receiving rewards or punishments based on its actions. This enables the agent to learn from its mistakes and be more efficient in its future actions (this technique is commonly used to train game-playing agents).
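As a small sketch of trial-and-error learning, the example below uses tabular Q-learning, one of several reinforcement learning algorithms, on a made-up corridor of five cells where only the right-most cell gives a reward. The environment, the reward, and the hyperparameters are all assumptions for illustration. To keep the code short, the agent explores by acting completely at random; real agents usually balance exploring with exploiting what they have already learned.

```python
import random

N_STATES = 5        # cells 0..4 in a corridor
GOAL = N_STATES - 1  # reaching cell 4 ends the episode with a reward
ACTIONS = (-1, +1)   # index 0 = step left, index 1 = step right


def step(state, action):
    """Move within the corridor; reward 1.0 only when the goal is reached."""
    next_state = min(max(state + action, 0), GOAL)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward


def q_learning(episodes=200, alpha=0.5, gamma=0.9, seed=0):
    """Learn action values from randomly explored episodes."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        while state != GOAL:
            a = rng.randrange(2)  # trial and error: try a random action
            next_state, reward = step(state, ACTIONS[a])
            # Move the estimate towards reward plus discounted future value.
            target = reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


q_table = q_learning()
policy = ["left" if left > right else "right" for left, right in q_table[:GOAL]]

print("learned action values:", [[round(v, 3) for v in row] for row in q_table])
print("greedy policy for cells 0 to 3:", policy)
```

Even though the agent only ever stumbled around at random, the values it learned tell it which direction pays off from every cell.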

Unsupervised Learning

The brain is constantly receiving new streams of data in the form of sounds, visual content, sensations on the skin, etc., and it has to make sense of it all and attempt to form a coherent and logical understanding of how all these seemingly disparate events affect its physical state.

Take this analogy as an example. You feel water drip on your skin, you hear the sound of water droplets dropping quickly on rooftops, you feel your clothes getting heavy, and in that instant you know rain is falling. You then search your memory to ascertain whether you carried an umbrella. If you did, you are fine; if not, you check the distance from your current location to your home. If it is close, you are fine; otherwise, you try to gauge how intense the rain is going to become. If it is a light drizzle, you can attempt to continue the journey back to your home, but if it is priming to become a downpour, then you have to find shelter.

The ability to make sense of seemingly disparate data points (water, sound, feeling, distance) is implemented in Artificial Intelligence in the form of a technique called Unsupervised Learning. It is an AI training technique where AI systems are taught to make sense of raw, unstructured data without explicit labelling (no one tells you rain is falling when it is falling, do they?).
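Clustering is one of the simplest examples of this. The sketch below runs a bare-bones one-dimensional k-means with two clusters on a handful of invented sensor readings. Nothing in the data says which reading belongs to which group; the algorithm finds the grouping on its own. Starting the two centroids at the smallest and largest reading is a simplification I chose to keep the run deterministic.

```python
def kmeans_1d(points, iterations=10):
    """Group numbers into two clusters by repeatedly re-centering on the cluster means."""
    centroids = [min(points), max(points)]  # simple deterministic starting guess
    clusters = [[], []]
    for _ in range(iterations):
        clusters = [[], []]
        # Assignment step: each point joins its nearest centroid.
        for p in points:
            nearest = 0 if abs(p - centroids[0]) <= abs(p - centroids[1]) else 1
            clusters[nearest].append(p)
        # Update step: each centroid moves to the mean of its cluster.
        centroids = [
            sum(c) / len(c) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
    return centroids, clusters


# Unlabelled, made-up readings that happen to fall into two groups.
readings = [1.0, 1.2, 0.8, 5.0, 5.3, 4.9]
centroids, clusters = kmeans_1d(readings)

print("cluster centres:", [round(c, 3) for c in centroids])
print("clusters:", clusters)
```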

Challenges in Building Brain Inspired AI systems

We have spoken about how the approach for using the brain as inspiration for AI systems came about, how the brain relates to AI, and the core principles behind brain inspired AI. In this section, we are going to talk about some of the technical and conceptual challenges inherent in building AI systems modelled after the human brain.

Complexity

This is a pretty daunting challenge. The brain inspired approach to AI is based on modelling the brain and building AI systems after that model, but the human brain is an inherently complex system with roughly 100 billion neurons and approximately 600 trillion synaptic connections (each neuron has, on average, several thousand synaptic connections with other neurons), and these synapses are constantly interacting in dynamic and unpredictable ways. Building AI systems that aim to mimic, and hopefully exceed, that complexity is in itself a challenge and requires equally complex statistical models.

Data Requirements for training Large Models

OpenAI's GPT-4, which is presumably at the cutting edge of AI models, reportedly required 47 gigabytes of data, while its predecessor GPT-3 was reportedly trained on 17 gigabytes. That is close to three times as much data; imagine how much GPT-5 will be trained on. As has been shown, in order to get acceptable results, brain inspired AI systems require vast amounts of data, especially for auditory and visual tasks, and this places a lot of emphasis on the creation of data collection pipelines. For instance, Tesla has 780 million miles of driving data and its data collection pipeline adds another million every 10 hours.

Energy Efficiency

Building brain inspired AI systems that emulate the brain's energy efficiency is a huge challenge. The human brain consumes approximately 20 watts of power. In comparison, Tesla's Autopilot, on specialized chips, consumes about 2,500 watts, and it takes an estimated 7.5 megawatt hours (MWh) to train an AI model the size of ChatGPT.
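To put those numbers side by side, here is a quick back-of-the-envelope calculation. It takes the figures quoted above at face value (they are estimates, and published estimates vary widely) and simply converts them into comparable units.

```python
# Figures as quoted in this article; treat them as rough estimates.
BRAIN_WATTS = 20.0
AUTOPILOT_WATTS = 2_500.0
TRAINING_MWH = 7.5

# How many brains' worth of power the Autopilot hardware draws.
power_ratio = AUTOPILOT_WATTS / BRAIN_WATTS

# Energy the brain uses in one day: watts x hours, converted to kilowatt hours.
brain_kwh_per_day = BRAIN_WATTS * 24 / 1000

# How long a brain could run on the energy of one training run.
training_kwh = TRAINING_MWH * 1000
brain_days = training_kwh / brain_kwh_per_day
brain_years = brain_days / 365

print(f"Autopilot draws about {power_ratio:.0f}x the power of a brain")
print(f"A brain uses about {brain_kwh_per_day:.2f} kWh per day")
print(f"One training run could power a brain for about {brain_years:.1f} years")
```

On these figures, a single training run uses as much energy as a human brain does in roughly four decades.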

The Explainability Problem

Developing brain inspired AI systems that can be trusted by users is crucial to the growth and adoption of AI, but therein lies the problem: the brain, which AI systems are meant to be modelled after, is essentially a black box. The inner workings of the brain are not easy to understand, due to the lack of information surrounding how the brain processes thought. There is no lack of research on the biological structure of the human brain, but there is a certain lack of empirical information on the functional qualities of the brain, that is, how thought is formed, how deja vu occurs, etc., and this leads to a problem in the building of brain inspired AI systems.

The Interdisciplinary Requirements

The act of building brain inspired AI systems requires the knowledge of experts from different fields, such as Neuroscience, Computer Science, Engineering, Philosophy, and Psychology. But therein lies a challenge, both logistical and foundational: getting experts from different fields together is very financially tasking, and there is also the problem of knowledge conflict. It is really difficult to get an engineer to care about the psychological effects of what they are building, not to talk of the problem of egos colliding.

Summary

In conclusion, while the brain inspired approach is the obvious route to building AI systems (we have discussed why), it is fraught with challenges, but we can look to the future with hope that efforts are being made to solve these problems.

If you enjoyed the article, you can subscribe to my newsletter.

References

- FreeCode Camp Machine Learning

- Tesla's Vehicle Safety Report

- Basic Neural Units of the Brain: Neurons, Synapses and Action Potential

- When Brain inspired AI meets AGI

- Perceptron: The artificial Neuron (An Essential Upgrade To The McCulloch-Pitts Neuron)

- McCulloch-Pitts Neuron — Mankind’s First Mathematical Model Of A Biological Neuron

- BackPropagation through time: What it does and how to do it

- The history of AI

- BrainOS: A Novel Artificial Brain-Alike Automatic Machine Learning Framework
