Introduction to Containerization and Docker

Ednah

Posted on March 11, 2024

In today's fast-paced world of software development, efficiency and agility are key. Developers are constantly seeking ways to streamline their workflows, simplify dependency management, and improve the portability of their applications. This is where Docker comes in. Docker is a powerful tool that allows developers to package their applications and dependencies into lightweight, portable containers that run consistently across different environments. In this post, we will explore the fundamentals of containerization and Docker, and how Docker has revolutionized the way we build, ship, and run applications.

What is a Container?

A container is a way to package an application together with all the dependencies and configuration it needs. This package is highly portable and can easily be shared and moved between people, devices, and teams. That portability, combined with having everything bundled into one compact, isolated environment, is what makes containers so effective at streamlining development and deployment.

Where do containers live?

As mentioned, containers are portable. That raises a question: where are they stored so that they can be shared and moved around?

Strictly speaking, what gets stored and shared is a container image, the package from which containers are started (covered in more detail below). Images are kept in a container registry, a dedicated storage service organized into repositories of images. Many companies run their own private registries to host their images. There are also public registries such as Docker Hub, where you can browse and download images for a wide range of applications.

Image above: Docker Hub, a cloud-based registry service that allows you to store and share Docker container images. Docker Hub hosts millions of container images, including Docker's curated official images and user-contributed images. Users can search for images, pull them to their local environment, and push their own images to share with others.
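An image's name itself records which registry it lives in. As an illustration, here is how the FROM line of a Dockerfile (Dockerfiles are covered later in this post) might refer to a public image on Docker Hub versus one in a private company registry. The private registry host and repository names are hypothetical:

```dockerfile
# Short form: the official "node" image, tag 14, pulled from Docker Hub
FROM node:14

# The same image written out in full: registry host / namespace / repository : tag
# FROM docker.io/library/node:14

# An image from a private company registry (hypothetical host and repository)
# FROM registry.example.com/my-team/web-app-base:1.0
```

When no registry host is given, Docker assumes Docker Hub, which is why the short and fully qualified forms point to the same image.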

How do containers improve the development process?

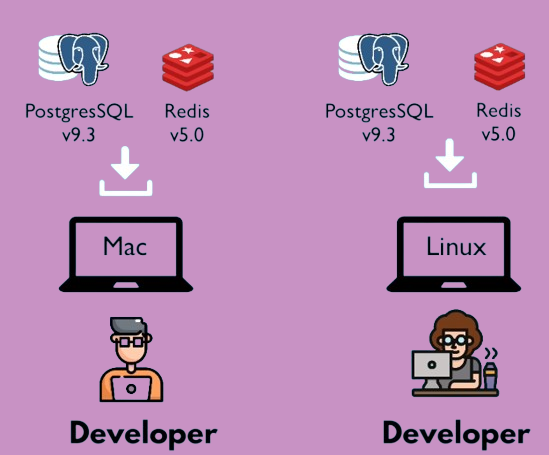

Before containers, teams building applications faced a cumbersome process. Each developer on the team had to install the services the application needed directly on their own operating system. Suppose the team is building a web application that needs PostgreSQL and Redis: every developer would have to install and configure both technologies in their local development environment.

Doing this individually is tedious and error-prone. Team members may have different local configurations, or even different operating systems, so a setup that works on one machine does not necessarily carry over to another, which often leads to errors when the application is shared between developers.

With containers, those services don’t have to be installed directly on a developer's operating system. A container provides a self-contained environment that encapsulates the application and its dependencies, including libraries, binaries, and configuration files. This means developers can work in a consistent environment regardless of their underlying operating system: each developer only needs to fetch the prepared container image and run it on their local machine.
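For the PostgreSQL and Redis example above, Redis can often be run straight from its official image, while a team might share a lightly customized PostgreSQL image. A minimal sketch, assuming the official postgres image from Docker Hub; the appdb database name and the schema.sql file are hypothetical:

```dockerfile
# Official PostgreSQL image from Docker Hub (version tag chosen for illustration)
FROM postgres:16

# Database that the image's entrypoint creates on first start (hypothetical name)
ENV POSTGRES_DB=appdb

# *.sql files in this directory run once, when the database is first initialized
# (schema.sql is a hypothetical file in the build context)
COPY schema.sql /docker-entrypoint-initdb.d/

# No password is set here: supply POSTGRES_PASSWORD when starting the container
```

Because no password is baked into the image, the official postgres image expects a POSTGRES_PASSWORD environment variable to be supplied when the container is started. Every developer then gets the same database setup from one shared image instead of a manual installation.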

How do containers improve the deployment process?

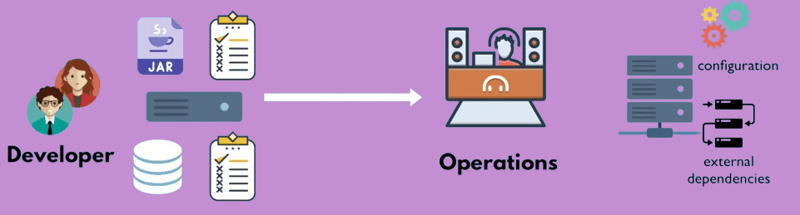

In the traditional deployment process, the development team produces artifacts, such as a JAR file for the application, along with instructions on how to install and configure these artifacts on the server. Additionally, other services, like databases, are included with their own set of installation and configuration instructions. These artifacts and instructions are then handed over to the operations team, which is responsible for setting up the environment for deployment.

However, this approach has several drawbacks. Firstly, configuring and installing everything directly on the operating system can lead to conflicts with dependency versions and issues when multiple services are running on the same host. Secondly, relying on textual guides for instructions can result in misunderstandings between the development and operations teams. Developers may forget to mention important configuration details, or the operations team may misinterpret the instructions, leading to deployment failures.

With containers, this process is simpler because the development and operations teams work together to package the entire application, including its configuration and dependencies, into a single container image. This encapsulation removes the need to configure anything directly on the server. Instead, deploying the application comes down to running Docker commands to pull the image from a registry and start a container from it. This is a simplified picture, but it addresses the challenges discussed above by making environment configuration on the server much easier. The only initial setup required is installing the Docker runtime on the server, which is a one-time effort.
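As a sketch of what packaging the application together with its run instructions can look like for the JAR example above, assume a Java 17 application built to a hypothetical target/app.jar that listens on port 8080, using the official eclipse-temurin runtime image from Docker Hub:

```dockerfile
# Java runtime base image (official Eclipse Temurin JRE image on Docker Hub)
FROM eclipse-temurin:17-jre

# Directory inside the image where the application lives
WORKDIR /opt/app

# The artifact produced by the development team (hypothetical path)
COPY target/app.jar app.jar

# Port the application is assumed to listen on
EXPOSE 8080

# The start-up command that would otherwise live in a written guide
CMD ["java", "-jar", "app.jar"]
```

The installation steps that used to be handed over as text are now part of the image, so the operations team only needs Docker on the server.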

Containers vs Virtual Machines

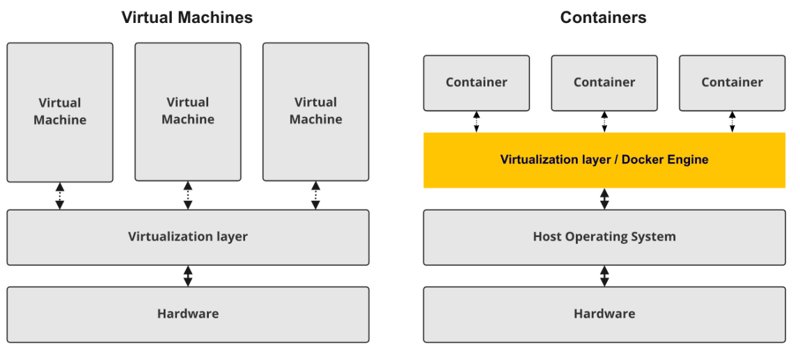

Containers and virtual machines (VMs) are both used to isolate applications and their dependencies, but they differ in several key ways.

The following are the key differences:

Architecture: VMs virtualize the hardware, creating a full-fledged virtual machine with its own operating system (OS), kernel, and libraries. Containers, on the other hand, virtualize the OS, sharing the host OS kernel but providing isolated user spaces for applications.

Resource Usage: VMs are typically heavier in terms of resource usage because they require a full OS to be installed and run for each VM. Containers are lightweight, as they share the host OS kernel and only include the necessary libraries and dependencies for the application.

Isolation: VMs provide stronger isolation between applications since each VM has its own OS and kernel. Containers share the host OS kernel but provide process-level isolation using namespaces and control groups (cgroups).

Startup Time: Containers have faster startup times compared to VMs, as they do not need to boot an entire OS. VMs can take longer to start as they need to boot a full OS.

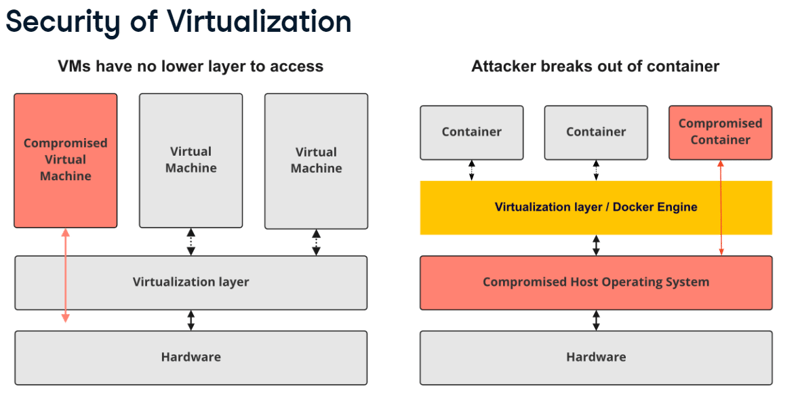

Security: While both containers and VMs provide isolation, VMs are often considered more secure due to the stronger isolation provided by virtualizing the hardware. Containers share the host OS kernel, which can potentially introduce security vulnerabilities if not properly configured.

Docker

Now that we have an idea of what containers are, let’s delve into what Docker is.

Docker is an open-source platform that facilitates the development, deployment, and management of applications using containers. It applies the containerization concepts discussed above to package an application and its dependencies into a single unit, which can then be shared and deployed across different environments without modification, ensuring consistency.

Here are some key concepts to know with regard to Docker:

Docker Engine:

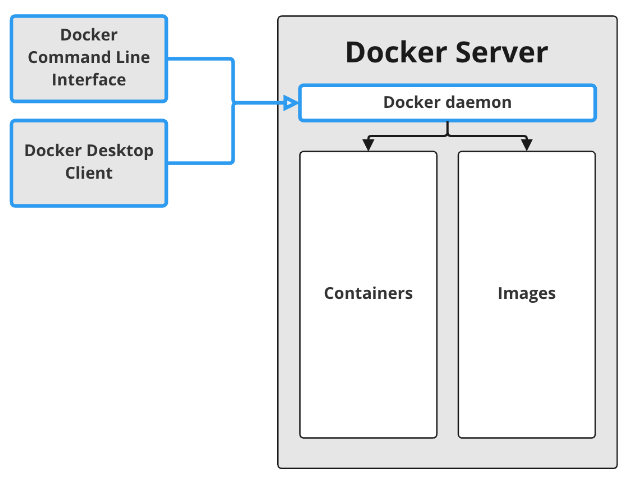

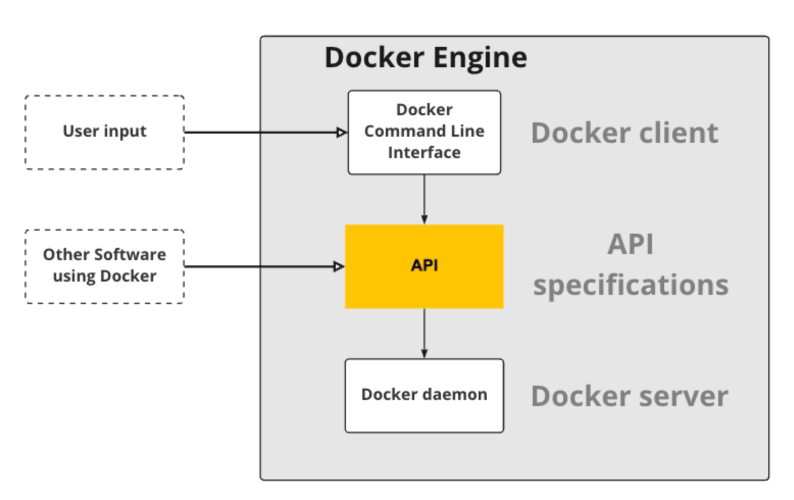

Docker Engine is an open source containerization technology for building and containerizing your applications. Simply put, it is the workhorse behind the scenes, managing the entire container lifecycle.

It consists of three main components:

Docker Daemon (dockerd): This is a background service that runs continuously on your system. It's responsible for:

- Creating and managing containers: The daemon listens for requests from the CLI (and other API clients) and carries out instructions to create, run, stop, and manage container instances.

- Image management: It handles tasks like pulling images from registries, building images from Dockerfiles, and managing storage for container images and layers.

- Network configuration: The daemon sets up networking for containers, allowing them to communicate with each other and the outside world, following configurations you define.

- Resource allocation: The daemon allocates resources such as CPU, memory, and storage to containers and enforces any limits you define.

REST API: This built-in API allows programmatic interaction with Docker Engine; it is also the interface the Docker CLI uses to talk to the daemon. Developers can use tools and scripts to automate tasks like container deployment and management through the API.

Docker CLI (docker): This is the user interface most people interact with. It's a command-line tool that communicates with the Docker daemon to execute various commands related to container operations.

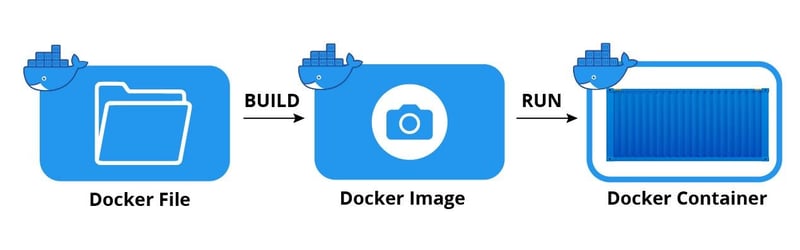

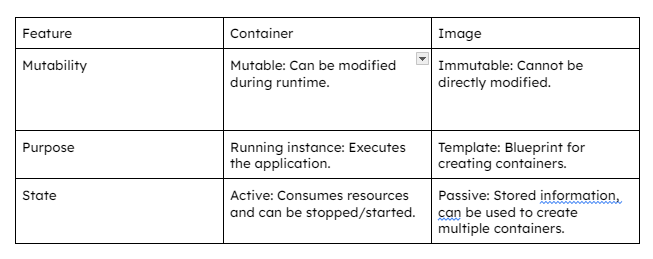

Docker Images

An image is the artefact, or application package (application code, runtime, libraries, dependencies, etc.), required to set up a fully operational container environment. This is the artefact that can be moved from device to device or shared between developers on a team. It is a read-only template containing a set of instructions for creating a container that can run on the Docker platform.

You can create a Docker image by using one of two methods:

Dockerfile: This is the most common method used to create Docker images. A Dockerfile is a text file that contains a series of instructions for building a Docker image: you specify the base image, add any dependencies, copy the application code, and configure the container. Once you have written the Dockerfile, you can build the image using the docker build command. Here’s an example Dockerfile for a Node.js application:

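```dockerfile
# Use the official Node.js 14 image as the base image
# (Node.js 14 has reached end-of-life; a current LTS tag is a better choice for new projects)
FROM node:14

# Set the working directory in the container
WORKDIR /app

# Copy package.json and package-lock.json to the working directory first,
# so Docker can reuse the cached dependency layer when only application code changes
COPY package*.json ./

# Install npm dependencies
RUN npm install

# Copy the rest of the application code to the working directory
COPY . .

# Document that the application listens on port 3000 (publish it with docker run -p)
EXPOSE 3000

# Command to run the application
CMD ["node", "app.js"]
```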

From Existing Container: In this method, you start a container from an existing Docker image, make changes to the container interactively (e.g., installing software, updating configurations), and then save the modified container as a new Docker image using the docker commit command.

While the latter method is possible, it is generally not recommended for production use: the manual changes are not recorded anywhere, which makes the resulting image hard to reproduce, review, or update consistently. Using a Dockerfile to define the image's configuration and dependencies is the more standard and reproducible approach.
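For comparison, suppose the interactive change had been installing curl inside an Ubuntu container before running docker commit. A sketch of the same change captured in a Dockerfile instead (ubuntu:22.04 and curl are illustrative choices, not from the original example):

```dockerfile
# The same base image the interactive container would have started from
FROM ubuntu:22.04

# The change that would otherwise be made by hand inside the running container,
# written down so the image can be rebuilt and reviewed at any time
RUN apt-get update \
    && apt-get install -y --no-install-recommends curl \
    && rm -rf /var/lib/apt/lists/*
```

Because every step lives in a text file, it can be version-controlled alongside the application code, which is what makes this approach easier to manage than a committed container.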

Docker Containers

Think of a container as a running instance of an image, with its own isolated environment. It is a lightweight, isolated, and portable environment in which an application can run. Containers are created from images using the docker run command and can be started, stopped, deleted, and managed using other Docker commands. Any changes made inside a container (e.g., modifying files, installing software) are lost when the container is deleted unless those changes are committed to a new image.

Conclusion

Docker has revolutionized the way we develop, package, and deploy applications. Its lightweight containers provide a flexible and efficient way to manage dependencies and isolate applications, leading to faster development cycles, improved scalability, and greater portability. By simplifying the deployment process and enabling consistency across environments, Docker has become an essential tool for modern software development, and it is likely to remain central to how developers and organizations build and deliver high-quality software.

Stay tuned for more articles on Docker.

Happy Dockering!
