How We Built Our Own Prerenderer (And Why) - Part 1: Why

Eco Web Hosting

Posted on February 12, 2020

Context: We built a Vue site

Recently, we built ourselves a lovely new site using Vue.js. We wanted to be able to easily build reusable components and generally provide a speedier experience for users navigating the site.

As with almost any design decision, there were trade-offs. The main one was the kind we like to call “find-a-solution-that-seems-easy-on-StackOverflow-and-then-spend-a-week-trying-to-iron-out-the-bits-that-don’t-work”. Namely, the site needed a prerenderer in order to be read by some search engines.

Looked at objectively, this was just a case of methodically breaking the problems down and working through them one by one.

But really, it was more a case of sinking into a minor existential crisis, questioning my career choices and deciding whether I should, in fact, pack it all in, buy a trawler and spend the rest of my days getting lashed by briny mist in the North Sea.

A cup of tea and a Hobnob later, I considered the possibility that I was being a little dramatic and got back to the drawing board.

What is prerendering?

Web terminology sometimes feels deliberately ambiguous. Is prerendering something that happens before rendering, or rendering that happens before something else? What is being rendered? Markup? DOM nodes?

When we talk about prerendering websites, we’re talking about generating the static page source that is served to the browser. The browser builds the Document Object Model (DOM) from that source, then paints it to make the web page users see.

If your website has just a few static HTML files, where no content changes when served, there is no prerendering to do. The pages are already prepared for service.

So say you have an HTML file containing the following:

<!DOCTYPE html>
<html>
  <head>
    <title>Prerenderer test</title>
  </head>
  <body>
    <h1>Prerenderer test</h1>
    <section id="static">
      <h2>Static bit</h2>
      <p>Nothing dynamic here…</p>
    </section>
  </body>
</html>

A browser would render this HTML something like this:

Thrilling stuff.

Then say you add some JavaScript that appends some elements to the page, so your file now looks like this:

<!DOCTYPE html>
<html>
  <head>
    <title>Prerenderer test</title>
  </head>
  <body>
    <h1>Prerenderer test</h1>
    <section id="static">
      <h2>Static bit</h2>
      <p>Nothing dynamic here…</p>
    </section>
    <script>
      window.onload = () => {
        const body = document.querySelector('body');
        const section = document.createElement('section');
        const h2 = document.createElement('h2');
        const p = document.createElement('p');
        section.setAttribute('id', 'dynamic');
        h2.innerHTML = 'Dynamic bit';
        p.innerHTML = `But here, everything is generated dynamically.`;
        body.append(section);
        section.append(h2);
        section.append(p);
      };
    </script>
  </body>
</html>

Your page would render like this:

Ooo-ee. That’s the stuff I got into web development for.

This is a pretty basic example. Single Page Application frameworks, like Vue.js, React.js and Angular, take dynamic rendering and do something much more useful with it.

Vue.js apps are dynamically rendered

Our old website was a fairly traditional affair. You’d go to ecowebhosting.co.uk, a PHP page would be requested, assembled, and the fully-fledged markup would be returned.

Our new site doesn’t do that. Instead, it serves a small HTML file that acts as a mounting point for other DOM nodes.

It also loads JavaScript that contains the entire remainder of the site, delivered along with that first request (save for static assets like images).

When you navigate around the new site, bits of that JavaScript are being executed, updating and re-rendering the markup of the page in the browser. This is why it feels fairly speedy. The browser doesn’t need to send new requests for pages each time the URL changes, as it already holds most of the site locally.
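To make that a bit more concrete, here's a minimal sketch of the idea in plain JavaScript rather than Vue. The `views` table, the `shell` string and the `renderInBrowser` function are all made up for illustration; a real framework does far more, and works against the live DOM rather than a string.

```javascript
// Hypothetical route table: each path maps to a function that returns markup.
const views = new Map([
  ['/', () => '<h1>Home</h1>'],
  ['/hosting', () => '<h1>Hosting</h1>'],
]);

// The one document the server sends, whatever URL was requested (simplified).
const shell = '<body><div id="app"></div></body>';

// Stand-in for the framework: pick the view for the current path and place
// it inside the mounting point. No request goes back to the server.
function renderInBrowser(path) {
  const view = views.get(path) || (() => '<h1>Not found</h1>');
  return shell.replace(
    '<div id="app"></div>',
    () => `<div id="app">${view()}</div>`
  );
}

console.log(renderInBrowser('/hosting'));
// → <body><div id="app"><h1>Hosting</h1></div></body>
```

Navigating from `/` to `/hosting` changes what `renderInBrowser` produces, but `shell`, the thing the server actually sent, stays exactly the same.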

This means the source for each page looked the same. Something like this:

<!DOCTYPE html>
<html lang="en">
  <head>
    <meta charset="utf-8">
    <meta http-equiv="X-UA-Compatible" content="IE=edge">
    <meta name="viewport" content="width=device-width,initial-scale=1.0">
    <meta name="theme-color" content="#577e5e">
    <link rel="manifest" href="/manifest.json" />
    <link rel="apple-touch-icon" href="/logo_192px.png">
    <link rel="icon" href="/favicon.ico">
    <link href="/0.js" rel="prefetch"><link href="/1.js" rel="prefetch">
    <link href="/10.js" rel="prefetch"><link href="/11.js" rel="prefetch">
    <link href="/12.js" rel="prefetch"><link href="/13.js" rel="prefetch">
    <link href="/14.js" rel="prefetch"><link href="/15.js" rel="prefetch">
    <link href="/16.js" rel="prefetch"><link href="/17.js" rel="prefetch">
    <link href="/18.js" rel="prefetch"><link href="/19.js" rel="prefetch">
    <link href="/2.js" rel="prefetch"><link href="/20.js" rel="prefetch">
    <link href="/21.js" rel="prefetch"><link href="/3.js" rel="prefetch">
    <link href="/4.js" rel="prefetch"><link href="/5.js" rel="prefetch">
    <link href="/6.js" rel="prefetch"><link href="/7.js" rel="prefetch">
    <link href="/8.js" rel="prefetch"><link href="/9.js" rel="prefetch">
    <link href="/app.js" rel="preload" as="script">
  </head>
  <body>
    <noscript>
      <strong>
        We're sorry but the Eco Web Hosting site doesn't work
        properly without JavaScript enabled. Please enable it to continue.
      </strong>
    </noscript>
    <div id="app"></div>
    <!-- built files will be auto injected -->
    <!--JavaScript at end of body for optimized loading-->
    <script src="https://cdnjs.cloudflare.com/ajax/libs/materialize/1.0.0/js/materialize.min.js"></script>
    <script>
      document.addEventListener('DOMContentLoaded', function() {
        const sideNav = document.querySelector('.sidenav');
        M.Sidenav.init(sideNav, {});
      });
    </script>
    <script type="text/javascript" src="/app.js"></script>
  </body>
</html>

Yet a browser’s Inspect tool would show the dynamically generated markup:

All’s well that ends well, right? The browser runs the JavaScript, the JavaScript constructs the view and the user is shown that view. What’s the problem? Well…

Most search engines don’t run JavaScript

Moz.com did some research in 2017 to see which search engines correctly index JavaScript, and found that just Google and Ask did. At the time of writing, this was the most recent evidence I could find. Bing does index synchronous JavaScript, but it does not wait for asynchronous JavaScript to finish loading.

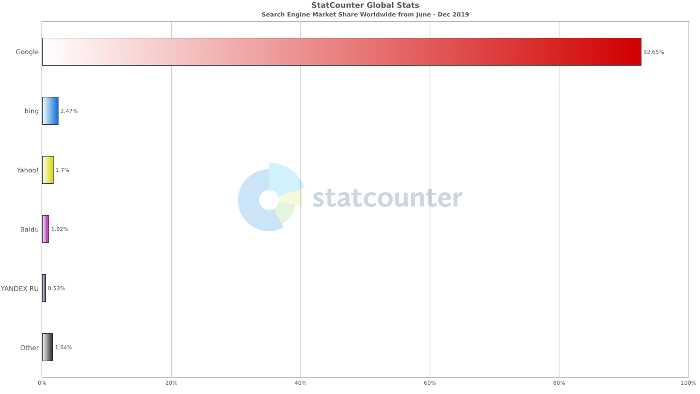
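As a rough illustration of what that means for a site like ours, here's a sketch of a crawler that reads the markup but never runs the scripts. `textSeenWithoutJavaScript` is a hypothetical helper, and stripping tags with regular expressions is a crude stand-in for a real HTML parser, so treat it as a toy rather than a description of how any search engine actually works.

```javascript
// Toy model of a crawler that does not execute JavaScript: drop the scripts,
// strip the remaining tags and keep whatever text is left.
function textSeenWithoutJavaScript(html) {
  return html
    .replace(/<script\b[\s\S]*?<\/script>/gi, ' ') // script bodies are not content
    .replace(/<[^>]+>/g, ' ')                      // remove remaining tags
    .replace(/\s+/g, ' ')                          // collapse whitespace
    .trim();
}

// A static page: the content is right there in the source.
const staticSource =
  '<body><h1>Prerenderer test</h1><p>Nothing dynamic here…</p></body>';

// A simplified single page app shell: just a mounting point and a script.
const spaSource = `
<body>
  <div id="app"></div>
  <script src="/app.js"></script>
</body>`;

console.log(textSeenWithoutJavaScript(staticSource));
// → Prerenderer test Nothing dynamic here…
console.log(textSeenWithoutJavaScript(spaSource) === '');
// → true
```

With nothing inside the mounting point until the scripts run, there's simply no text for that kind of crawler to index.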

It’s tempting to discount users of other search engines, ‘cause everyone uses Google now anyway, right? And what kind of maniac uses Bing, anyway? Unfathomable though it is, it seems that people do actually use other search engines. StatCounter reported that in the second half of 2019, Google had 92.65% of the global search engine market share. 92.65% is a high number, but 100% it is not.

Since Ask would appear to be included in “Other” in this graph, I’m going to round down the “not Google or Ask” share to an estimated 7%.

That’s 7% of your potential customers who will never see your beautifully crafted new site, let alone convert into sales. So yeah. Looks like we can’t neglect other search engines. Not even Bing.

Two ways of indexing dynamic pages

What’s the answer then? There are two common solutions to this problem. Both involve rendering the site before it is served from the server. One is Server-Side Rendering (SSR) and the other is prerendering.

On server-side rendered sites, the HTML is rendered (you guessed it) on the server and sent back to the client. The general idea is much the same as a PHP site assembling the HTML to serve, only it’s JavaScript that does it. But once the site has loaded in the browser, further navigation is handled client-side.

SSR, therefore, allows a speedier first load, with search engines reading the requested content as if it were a static page. Dynamic data is readied in advance, so the site retains the reusability and snappier user experience that SPAs have after the first load completes.

But it can be a bit of work to implement, and it can also be overkill if dynamic data doesn’t need to be prepared in advance for a particular route in a Single Page App.

Prerendering, on the other hand, generates a static HTML page for each route of an SPA when the app is initially built, rather than whenever that route is requested.

This is easier to implement than SSR, and the static page is ready to be served whenever the page is requested, but it does also mean that there’s no ability to dynamically prepare markup in advance within the same route.

Since none of our varying content needed to be dynamically prepared in advance, prerendering was our answer.
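The difference between the two approaches comes down to when the rendering happens. Here's a small sketch of that, under heavy assumptions: `renderRoute` is a hypothetical stand-in for whatever the framework does to turn a route into markup, and the routes and user name are invented. It counts its own calls so you can see when rendering actually takes place.

```javascript
let renderCount = 0;

// Hypothetical renderer: route (and optional per-request data) in, markup out.
function renderRoute(route, data = {}) {
  renderCount += 1;
  const greeting = data.user ? `Hello, ${data.user}` : 'Hello';
  return `<div id="app"><h1>${greeting} from ${route}</h1></div>`;
}

// Prerendering: render every known route once, at build time.
function prerender(routes) {
  const pages = new Map();
  for (const route of routes) {
    pages.set(route, renderRoute(route));
  }
  return pages;
}

// Serving a prerendered page is just a lookup; nothing is rendered.
function servePrerendered(pages, route) {
  return pages.get(route);
}

// SSR: render on every request, so per-request data can be baked in.
function serveWithSsr(route, requestData) {
  return renderRoute(route, requestData);
}

const pages = prerender(['/', '/about']); // two renders, at build time
servePrerendered(pages, '/about');        // no render
servePrerendered(pages, '/about');        // still no render
serveWithSsr('/about', { user: 'Sam' });  // one render, for this request
console.log(renderCount);                 // 3
```

The prerendered pages can never say "Hello, Sam", because nobody knew about Sam at build time. For a site whose routes don't need that, the lookup is all you want.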

Prerendering one’s woes away

To our collective delight, it seemed prerendering was a problem for which many solutions had already been provided. Not being fans of reinventing the wheel for the sake of it, we were happy to go along with what the Vue.js documentation recommended — the prerender-spa-plugin.

Integrating it was to be a fairly simple task, in theory. It could be installed via npm and then configured via our Vue.js app’s Webpack configuration file. We just had to provide the site’s docroot directory and an array of the routes to prerender.
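Roughly, that configuration boils down to the two things mentioned above. The sketch below only builds the options object, so it runs without the plugin installed. `staticDir` and `routes` are the option names as I understand them from the plugin's README, so check them against the version you install. The `dist` directory and the route list are placeholders, not our real setup.

```javascript
const path = require('path');

// Builds the two options described above: the directory the built site
// lives in (resolved to an absolute path) and the routes to prerender.
function prerenderOptions(docroot, routes) {
  return {
    staticDir: path.resolve(docroot),
    routes,
  };
}

// Placeholder values for illustration only.
const options = prerenderOptions('dist', ['/', '/about']);
console.log(options);

// In a Webpack config, this object would then be handed to the plugin:
//   const PrerenderSPAPlugin = require('prerender-spa-plugin');
//   module.exports = { plugins: [new PrerenderSPAPlugin(options)] };
```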

We got the prerenderer working, and all was good until we noticed something we could not ignore.

Curse of the mixed content warning

There was a blight in the browser console, and it was a mixed content warning.

Yet the element in question loaded just fine.

And the inspector showed it loading over https, just like the rest of the site:

<iframe
  style="position: relative; height: 240px; width: 100%; border-style: none; display: block; overflow: hidden;" scrolling="no"
  title="Customer reviews powered by Trustpilot"
  src="https://widget.trustpilot.com/trustboxes/54ad5defc6454f065c28af8b/index.html?templateId=54ad5defc6454f065c28af8b&businessunitId=582d86750000ff000597a398#v-6df015a4=&vD20690f8=&tags=ewh-gc&locale=en-GB&styleHeight=240px&styleWidth=100%25&theme=light&stars=5"
  frameborder="0">
</iframe>

The source told another story though:

<iframe
  frameborder="0" scrolling="no" title="Customer reviews powered by Trustpilot" loading="auto"
  src="http://widget.trustpilot.com/trustboxes/54ad5defc6454f065c28af8b/index.html?templateId=54ad5defc6454f065c28af8b&businessunitId=582d86750000ff000597a398#v-6df015a4=&vD20690f8=&tags=ewh-gc&locale=en-GB&styleHeight=240px&styleWidth=100%25&theme=light&stars=5"
  style="position: relative; height: 240px; width: 100%; border-style: none; display: block; overflow: hidden;">
</iframe>

The prerendered markup’s source URL for the widget was http, but once all the scripts on the page executed, the DOM was ‘hydrated’ with the correct https:// source URL.

Besides looking unprofessional to any console adventurers, it could incur an SEO penalty, as Chrome’s Lighthouse pointed out to us.

It seems that Trustpilot’s TrustBox widget script itself created the iframe element with a source relative to the protocol the site was being served on, and that the prerenderer served the site over http on a local server during the build process.
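A protocol-relative source would explain how the same widget can end up with two different URLs. Here's a small sketch using the standard `URL` constructor, which resolves a URL against a base much as a browser resolves it against the page. The widget path and the local server address are placeholders I've made up, not values taken from the widget script or the plugin.

```javascript
// Hypothetical protocol-relative source, of the kind a widget script might
// generate. The path is a placeholder, not the real widget URL.
const widgetSrc = '//widget.trustpilot.com/trustboxes/example/index.html';

// Resolve the source against the URL of the page it appears on.
function resolveAgainst(pageUrl) {
  return new URL(widgetSrc, pageUrl).href;
}

// Page served by a local server during a build (address is an assumption).
console.log(resolveAgainst('http://localhost:8000/'));
// → http://widget.trustpilot.com/trustboxes/example/index.html

// Same page served from the live site.
console.log(resolveAgainst('https://ecowebhosting.co.uk/'));
// → https://widget.trustpilot.com/trustboxes/example/index.html
```

Whatever protocol the page has at the moment the script runs is the protocol that ends up in the markup.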

To fix it, we had a few options, though some felt rather hacky (post-build search and replace), while others relied on adding an https-served prerender, which there didn’t seem to be much appetite for on the original project.
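For the curious, the search-and-replace option might look something like the sketch below. `forceHttpsForHost` is a hypothetical helper written for this post, and the markup is a cut-down placeholder rather than our real prerendered output.

```javascript
// Rewrites http:// references to one specific host as https://, leaving
// every other URL in the markup alone.
function forceHttpsForHost(html, host) {
  return html.split(`http://${host}`).join(`https://${host}`);
}

// Placeholder markup standing in for a prerendered page.
const prerendered =
  '<iframe src="http://widget.trustpilot.com/trustboxes/example/index.html"></iframe>' +
  '<a href="http://example.com/">Elsewhere</a>';

console.log(forceHttpsForHost(prerendered, 'widget.trustpilot.com'));
```

It does the job, but it has to run after every build and quietly depends on the widget's hostname never changing, which may be part of why it feels hacky.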

I began to get ideas.

Next: Andy gets into the details of how he built our own prerenderer, and the issues he faced...

Written 5 February 2020 by Andy Dunn, our Senior Technical Lead, originally on the Eco Web Hosting blog.

Cover image by Fatos Bytyqi on Unsplash.
