How to set up Vault with Kubernetes

Souvik Dey

Posted on February 18, 2020

In our not-so-ideal world, we tend to leave application secrets (passwords, API tokens) exposed in our source code. Storing secrets in plain sight isn't a good idea, is it? At DeepSource, we have tackled this issue by incorporating a robust secrets management system into our infrastructure from day one. This post explains how to set up secrets management in Kubernetes with HashiCorp Vault.

What is Vault?

Vault acts as a centrally managed service that handles the encryption and storage of your entire infrastructure's secrets. Vault manages secrets through secrets engines. It has a suite of secrets engines at its disposal, but for the sake of brevity, we will stick to the kv (key-value) secrets engine.

Overview

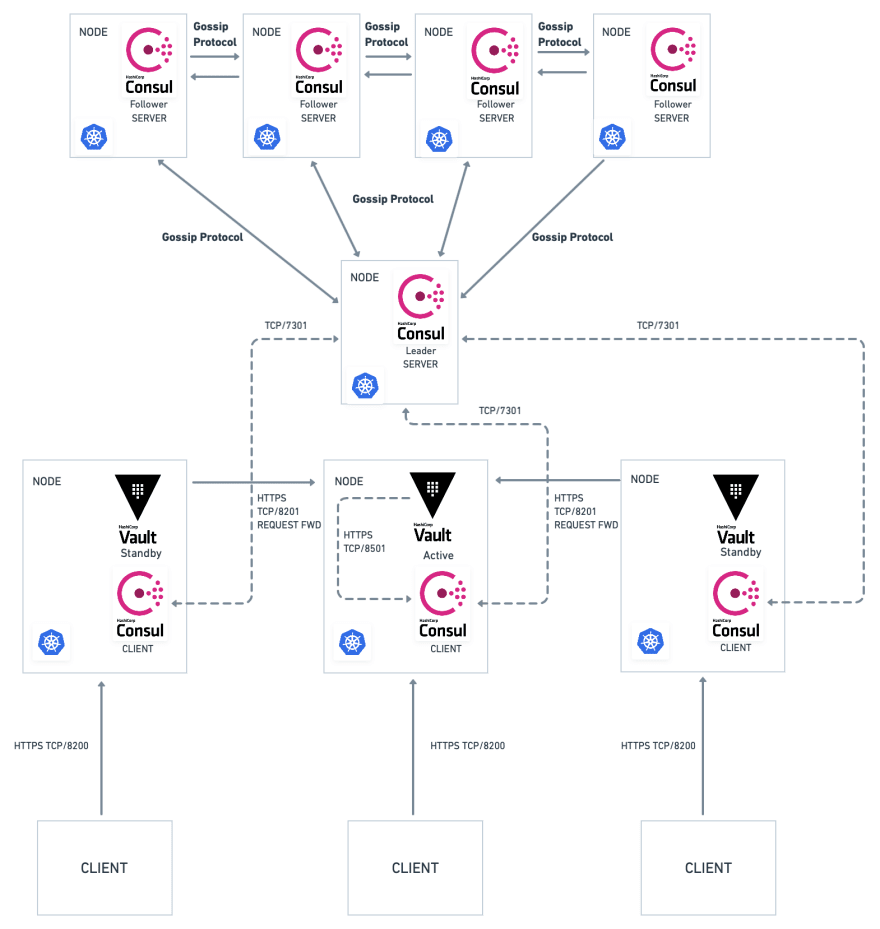

The above design depicts a three-node Vault cluster with one active node and two standby nodes. Each Vault node has a Consul agent sidecar that talks to the five-node Consul server cluster on its behalf. The architecture can also be extended across multiple availability zones, making the cluster highly fault-tolerant.

You might be wondering why we are using a Consul cluster when the architecture is already complex enough to wrap your head around. Vault requires a storage backend to hold all of its encrypted data at rest. This can be the filesystem, a cloud provider's storage, a database or a Consul cluster.

The strength of Consul is that it is fault-tolerant and highly scalable. By using Consul as a backend to Vault, you get the best of both. Consul is used for durable storage of encrypted data at rest and provides coordination so that Vault can be highly available and fault-tolerant. Vault provides higher-level policy management, secret leasing, audit logging, and automatic revocation.

The client talks to the Vault server over HTTPS; the Vault server processes each request and forwards it to the Consul agent on a loopback address. The Consul client agents serve as an interface to the Consul servers; they are lightweight and maintain very little state of their own. The Consul servers store the secrets, encrypted at rest.
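To make the request path concrete, here is a minimal sketch of a Vault server configuration for this topology, written out with a shell heredoc. It assumes the Consul client agent listens on its default HTTP port, 8500, on the loopback address, and that TLS certificate files are mounted inside the container. The file name vault-server.hcl, the vault/ storage path and the certificate paths are illustrative assumptions, not DeepSource's actual configuration.

```sh
#!/bin/sh
# Sketch only: the file name, storage path and TLS paths are assumptions.
cat > vault-server.hcl <<'EOF'
# Encrypted data is stored in Consul, reached through the local
# Consul client agent (the sidecar) on the loopback address.
storage "consul" {
  address = "127.0.0.1:8500"
  path    = "vault/"
}

# Clients talk to Vault over HTTPS on port 8200.
listener "tcp" {
  address       = "0.0.0.0:8200"
  tls_cert_file = "/vault/tls/tls.crt"
  tls_key_file  = "/vault/tls/tls.key"
}
EOF
```

Vault would then be started with vault server -config=vault-server.hcl. Pointing the storage stanza at the local agent, rather than at a specific Consul server, leaves it to the agent to reach a healthy server.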

The Consul server cluster has an odd number of servers because it maintains consistency and fault tolerance through a consensus protocol. Consul's consensus protocol is based on Raft, described in the paper "In Search of an Understandable Consensus Algorithm". For a visual explanation of Raft, you can refer to The Secret Lives of Data.
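The odd-numbered sizing follows from Raft's majority rule: a write commits only when a quorum of floor(N/2) + 1 servers agrees. The small shell sketch below computes the quorum and the number of server failures a cluster of N servers can survive; the helper names quorum and tolerance are ours, for illustration only.

```sh
#!/bin/sh
# Raft commits a write only once a majority (quorum) of servers agrees.
# quorum N    -> servers that must be reachable: floor(N/2) + 1
# tolerance N -> servers that may fail while a quorum still remains
quorum() {
  echo $(( $1 / 2 + 1 ))
}

tolerance() {
  echo $(( $1 - $(quorum "$1") ))
}

for n in 3 4 5 6; do
  echo "servers=$n quorum=$(quorum "$n") failures_tolerated=$(tolerance "$n")"
done
# servers=3 quorum=2 failures_tolerated=1
# servers=4 quorum=3 failures_tolerated=1
# servers=5 quorum=3 failures_tolerated=2
# servers=6 quorum=4 failures_tolerated=2
```

Going from three to four servers raises the quorum without tolerating any extra failure, which is why Consul server clusters are typically sized at three or five.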

Vault on Kubernetes - easing your way out of operational complexities

Almost all of DeepSource's infrastructure runs on Kubernetes. From analysis runs to VPN infrastructure, everything runs in a highly distributed environment, and Kubernetes helps us achieve that. For deploying Vault and Consul on Kubernetes, HashiCorp recommends using its Helm charts rather than hand-written manifests.

Prerequisites

For this setup, we'll require kubectl and helm installed, along with a local minikube setup to deploy into.

$ kubectl version
Client Version: version.Info{Major:"1", Minor:"16", GitVersion:"v1.16.3", GitCommit:"b3cbbae08ec52a7fc73d334838e18d17e8512749", GitTreeState:"clean", BuildDate:"2019-11-14T04:24:29Z", GoVersion:"go1.12.13", Compiler:"gc", Platform:"darwin/amd64"}
Server Version: version.Info{Major:"1", Minor:"14+", GitVersion:"v1.14.8-gke.33", GitCommit:"2c6d0ee462cee7609113bf9e175c107599d5213f", GitTreeState:"clean", BuildDate:"2020-01-15T17:47:46Z", GoVersion:"go1.12.11b4", Compiler:"gc", Platform:"linux/amd64"}

$ helm version
version.BuildInfo{Version:"v3.0.1", GitCommit:"7c22ef9ce89e0ebeb7125ba2ebf7d421f3e82ffa", GitTreeState:"clean", GoVersion:"go1.13.4"}

$ minikube version
minikube version: v1.5.2
commit: 792dbf92a1de583fcee76f8791cff12e0c9440ad
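Before going further, a quick check that all three tools are on your PATH can save a confusing failure later. This is a minimal POSIX shell sketch; the require_tools helper is hypothetical and relies only on the standard command -v builtin.

```sh
#!/bin/sh
# Hypothetical helper: report every missing command and return a
# non-zero status if at least one of them is not found on PATH.
require_tools() {
  missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For this walkthrough, run require_tools kubectl helm minikube; it prints one line per missing tool and fails if anything is absent.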

The setup

Let's get minikube up and running.

$ minikube start --memory 4096
😄 minikube v1.5.2 on Darwin 10.15.2
✨ Automatically selected the 'hyperkit' driver (alternates: [virtualbox])
🔥 Creating hyperkit VM (CPUs=2, Memory=4096MB, Disk=20000MB) ...
🐳 Preparing Kubernetes v1.16.2 on Docker '18.09.9' ...
🚜 Pulling images ...
🚀 Launching Kubernetes ...
⌛ Waiting for: apiserver
🏄 Done! kubectl is now configured to use "minikube"

The --memory flag is set to 4096 MB to ensure there is enough memory for all the resources to be deployed. Initialization takes several minutes, as minikube retrieves the necessary dependencies and downloads multiple container images.

Verify the status of your minikube cluster:

$ minikube status
host: Running
kubelet: Running
apiserver: Running
kubeconfig: Configured

The host, kubelet and apiserver should report that they are running. On success, kubectl is automatically configured to communicate with the newly started cluster.

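The recommended way to run Vault on Kubernetes is with the Helm chart, which installs and configures all the components needed to run Vault in several different modes. Install the Vault Helm chart (this post deploys version 0.3.0) with pods prefixed with the name vault. The server.dev.enabled=true setting runs Vault in dev mode: a single, in-memory, already-unsealed server that suits this walkthrough but not production. With Helm 3, the release name is the first argument to helm install; the --name flag only exists in Helm 2:

$ helm install vault \
  --set "server.dev.enabled=true" \
  https://github.com/hashicorp/vault-helm/archive/v0.3.0.tar.gz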

NAME: vault
LAST DEPLOYED: Fri Feb 8 11:56:33 2020
NAMESPACE: default
STATUS: DEPLOYED

RESOURCES:
..

NOTES:
..

Your release is named vault. To learn more about the release, try:

$ helm status vault
$ helm get vault

To verify, get all the pods within the default namespace:

$ kubectl get pods
NAME                                    READY   STATUS    RESTARTS   AGE
vault-0                                 1/1     Running   0          80s
vault-agent-injector-5945fb98b5-tpglz   1/1     Running   0          80s
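Both pods can take a little while to reach the Running state while images are pulled. Rather than re-running kubectl get pods by hand, kubectl wait can block until vault-0 reports Ready. The sketch below wraps it in a hypothetical wait_for_vault helper; the 120-second default timeout is an arbitrary assumption.

```sh
#!/bin/sh
# Hypothetical helper: block until the vault-0 pod has the Ready condition.
# $1 optionally overrides the timeout (defaults to 120s, an arbitrary choice).
wait_for_vault() {
  kubectl wait --for=condition=Ready pod/vault-0 --timeout="${1:-120s}"
}
```

For example, wait_for_vault 180s gives a slow minikube VM three minutes before kubectl gives up with a non-zero exit code.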

Creating a secret

The applications deployed in later steps expect Vault to hold a username and password at the path internal/database/config. Creating this secret requires enabling a kv secrets engine and writing a username and password to that path.

Start an interactive shell session on the vault-0 pod:

$ kubectl exec -it vault-0 -- /bin/sh

/ $

Your system prompt is replaced with a new prompt / $. Commands issued at this prompt are executed on the vault-0 container.

Enable kv-v2 secrets at the path internal:

/ $ vault secrets enable -path=internal kv-v2

Success! Enabled the kv-v2 secrets engine at: internal/

Add a username and password secret at the path internal/database/config:

/ $ vault kv put internal/database/config username="db-readonly-username" password="db-secret-password"
Key              Value
---              -----
created_time     2019-12-20T18:17:01.719862753Z
deletion_time    n/a
destroyed        false
version          1

Verify that the secret is defined at the path internal/database/config:

/ $ vault kv get internal/database/config
====== Metadata ======
Key              Value
---              -----
created_time     2019-12-20T18:17:50.930264759Z
deletion_time    n/a
destroyed        false
version          1

====== Data ======
Key         Value
---         -----
password    db-secret-password
username    db-readonly-username

Make Vault familiar with Kubernetes

Vault provides a Kubernetes authentication method that enables clients to authenticate with a Kubernetes Service Account Token.

Enable the Kubernetes authentication method:

/ $ vault auth enable kubernetes

Success! Enabled kubernetes auth method at: kubernetes/

Vault accepts a service account token from any client within the Kubernetes cluster. During authentication, Vault verifies that the token is valid by sending it to the Kubernetes TokenReview API at the configured Kubernetes host.

Configure the Kubernetes authentication method to use the service account token, the location of the Kubernetes host, and its certificate:

/ $ vault write auth/kubernetes/config \
    token_reviewer_jwt="$(cat /var/run/secrets/kubernetes.io/serviceaccount/token)" \
    kubernetes_host="https://$KUBERNETES_PORT_443_TCP_ADDR:443" \
    kubernetes_ca_cert=@/var/run/secrets/kubernetes.io/serviceaccount/ca.crt
Success! Data written to: auth/kubernetes/config

The token_reviewer_jwt and kubernetes_ca_cert values reference files that Kubernetes writes into the container. The environment variable KUBERNETES_PORT_443_TCP_ADDR references the internal network address of the Kubernetes host. For a client to read the secret defined in the previous step at internal/database/config, it must be granted the read capability on the path internal/data/database/config.
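The extra data/ segment comes from the kv-v2 engine: the vault kv commands accept the logical path (internal/database/config), while the underlying HTTP API, and therefore policies, address the secret's data at <mount>/data/<path> (version metadata lives under <mount>/metadata/<path>). The hypothetical kv2_data_path helper below sketches that mapping; it assumes you pass the mount path and the secret path separately.

```sh
#!/bin/sh
# Hypothetical helper: map a kv-v2 mount and logical secret path to the
# path that the HTTP API and policies use for the secret's data.
kv2_data_path() {
  mount="${1%/}"    # strip one trailing slash, e.g. "internal/" -> "internal"
  secret="${2#/}"   # strip one leading slash, e.g. "/database/config"
  printf '%s/data/%s\n' "$mount" "$secret"
}

kv2_data_path internal database/config
# prints: internal/data/database/config
```

This is why the policy below names internal/data/database/config even though the secret was written to internal/database/config.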

Write out the policy named internal-app, which enables the read capability for secrets at path internal/data/database/config:

/ $ vault policy write internal-app - <<EOH
path "internal/data/database/config" {
  capabilities = ["read"]
}
EOH
Success! Uploaded policy: internal-app

Now, create a Kubernetes authentication role named internal-app:

/ $ vault write auth/kubernetes/role/internal-app \
    bound_service_account_names=internal-app \
    bound_service_account_namespaces=default \
    policies=internal-app \
    ttl=24h
Success! Data written to: auth/kubernetes/role/internal-app

The role connects the Kubernetes service account, internal-app, and namespace, default, with the Vault policy, internal-app. The tokens returned after authentication are valid for 24 hours.
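To see the role from a client's point of view, the login exchange can be sketched as below. It assumes the vault CLI is available in a pod running under the internal-app service account in the default namespace, with VAULT_ADDR pointing at the Vault service; the vault_k8s_login helper is hypothetical. The Vault Agent sidecar used later performs this exchange for you.

```sh
#!/bin/sh
# Hypothetical helper: exchange the pod's service account JWT for a Vault
# token through the Kubernetes auth method mounted at auth/kubernetes.
# $1: Vault role (internal-app here); $2: optional path to the JWT file.
vault_k8s_login() {
  role="$1"
  jwt_file="${2:-/var/run/secrets/kubernetes.io/serviceaccount/token}"
  # "key=@file" makes the vault CLI read the value from the file.
  vault write auth/kubernetes/login role="$role" jwt=@"$jwt_file"
}
```

Running vault_k8s_login internal-app inside such a pod would print the issued token along with its policies; the same call from a pod under a different service account is rejected by the role's bindings.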

Lastly, exit the vault-0 pod:

/ $ exit

$

Create a Kubernetes service account

The Vault Kubernetes authentication role defined a Kubernetes service account named internal-app. This service account does not yet exist.

List the existing service accounts; internal-app is not among them yet:

$ kubectl get serviceaccounts
NAME                   SECRETS   AGE
default                1         43m
vault                  1         34m
vault-agent-injector   1         34m
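The post does not show the contents of service-account-internal-app.yml. A minimal manifest that would create the account is sketched below; it sets no namespace, so kubectl applies it to the current namespace, which is default in this walkthrough.

```sh
#!/bin/sh
# Sketch of service-account-internal-app.yml: the name must match the
# role's bound_service_account_names (internal-app).
cat > service-account-internal-app.yml <<'EOF'
apiVersion: v1
kind: ServiceAccount
metadata:
  name: internal-app
EOF
```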

Apply the service account definition to create it:

$ kubectl apply --filename service-account-internal-app.yml

serviceaccount/internal-app created

Verify that the service account has been created:

$ kubectl get serviceaccounts internal-app

The service account name matches the value given to bound_service_account_names when the internal-app role was created while configuring Kubernetes authentication.

Secret Injection from sidecar to application

View the deployment for the orgchart application:

$ cat deployment-01-orgchart.yml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: orgchart
  labels:
    app: vault-agent-injector-demo
spec:
  selector:
    matchLabels:
      app: vault-agent-injector-demo
  replicas: 1
  template:
    metadata:
      annotations:
      labels:
        app: vault-agent-injector-demo
    spec:
      serviceAccountName: internal-app
      containers:
        - name: orgchart
          image: jweissig/app:0.0.1

The name of the new deployment is orgchart. The spec.template.spec.serviceAccountName defines the service account internal-app to run this container under.

Apply the deployment defined in deployment-01-orgchart.yml:

$ kubectl apply --filename deployment-01-orgchart.yml

deployment.apps/orgchart created

The application runs as a pod within the default namespace.

Get all the pods within the default namespace:

$ kubectl get pods
NAME                                    READY   STATUS    RESTARTS   AGE
orgchart-69697d9598-l878s               1/1     Running   0          18s
vault-0                                 1/1     Running   0          58m
vault-agent-injector-5945fb98b5-tpglz   1/1     Running   0          58m

The Vault Agent injector looks for deployments that define specific annotations. None of these annotations exist in the current deployment, so no secrets are present in the orgchart container of the orgchart-69697d9598-l878s pod.

Verify that no secrets are written to the orgchart container in the orgchart-69697d9598-l878s pod:

$ kubectl exec orgchart-69697d9598-l878s --container orgchart -- ls /vault/secrets

ls: /vault/secrets: No such file or directory

command terminated with exit code 1

The deployment is running the pod with the internal-app Kubernetes service account in the default namespace. The Vault Agent injector only modifies a deployment if it contains a very specific set of annotations. An existing deployment may have its definition patched to include the necessary annotations.

View the deployment patch deployment-02-inject-secrets.yml:

$ cat deployment-02-inject-secrets.yml
spec:
  template:
    metadata:
      annotations:
        vault.hashicorp.com/agent-inject: "true"
        vault.hashicorp.com/role: "internal-app"
        vault.hashicorp.com/agent-inject-secret-database-config.txt: "internal/data/database/config"

These annotations define a partial structure of the deployment schema and are prefixed with vault.hashicorp.com.

- agent-inject enables the Vault Agent injector service.
- role is the Vault Kubernetes authentication role, which maps back to the Kubernetes service account.
- agent-inject-secret-FILEPATH prefixes the path of the file, database-config.txt, written to /vault/secrets. The value is the path to the secret defined in Vault.

Patch the orgchart deployment defined in deployment-02-inject-secrets.yml:

$ kubectl patch deployment orgchart --patch "$(cat deployment-02-inject-secrets.yml)"

deployment.apps/orgchart patched

The patch triggers a rollout, and the new pod launches two containers: the application container, named orgchart, and the Vault Agent container, named vault-agent.
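Because the patch replaces the pod, its generated name changes (orgchart-599cb74d9c-s8hhm in the output below). One way to look it up is sketched here with a hypothetical orgchart_pod helper: it waits for kubectl rollout status to report the rollout complete, then selects pods by the deployment's app=vault-agent-injector-demo label. The old pod may still be terminating for a few seconds afterwards, so treat the result as a convenience rather than a guarantee.

```sh
#!/bin/sh
# Hypothetical helper: wait for the patched deployment to finish rolling
# out, then print the name of the first pod carrying its label.
orgchart_pod() {
  kubectl rollout status deployment/orgchart >/dev/null &&
    kubectl get pods -l app=vault-agent-injector-demo \
      -o jsonpath='{.items[0].metadata.name}'
}
```

Usage: POD="$(orgchart_pod)" && kubectl logs "$POD" --container vault-agent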

View the logs of the vault-agent container in the orgchart-599cb74d9c-s8hhm pod:

$ kubectl logs orgchart-599cb74d9c-s8hhm --container vault-agent
==> Vault server started! Log data will stream in below:
==> Vault agent configuration:
Cgo: disabled
Log Level: info
Version: Vault v1.3.1
2019-12-20T19:52:36.658Z [INFO] sink.file: creating file sink
2019-12-20T19:52:36.659Z [INFO] sink.file: file sink configured: path=/home/vault/.token mode=-rw-r-----
2019-12-20T19:52:36.659Z [INFO] template.server: starting template server
2019/12/20 19:52:36.659812 [INFO] (runner) creating new runner (dry: false, once: false)
2019/12/20 19:52:36.660237 [INFO] (runner) creating watcher
2019-12-20T19:52:36.660Z [INFO] auth.handler: starting auth handler
2019-12-20T19:52:36.660Z [INFO] auth.handler: authenticating
2019-12-20T19:52:36.660Z [INFO] sink.server: starting sink server
2019-12-20T19:52:36.679Z [INFO] auth.handler: authentication successful, sending token to sinks
2019-12-20T19:52:36.680Z [INFO] auth.handler: starting renewal process
2019-12-20T19:52:36.681Z [INFO] sink.file: token written: path=/home/vault/.token
2019-12-20T19:52:36.681Z [INFO] template.server: template server received new token
2019/12/20 19:52:36.681133 [INFO] (runner) stopping
2019/12/20 19:52:36.681160 [INFO] (runner) creating new runner (dry: false, once: false)
2019/12/20 19:52:36.681285 [INFO] (runner) creating watcher
2019/12/20 19:52:36.681342 [INFO] (runner) starting
2019-12-20T19:52:36.692Z [INFO] auth.handler: renewed auth token

Vault Agent manages the token lifecycle and the secret retrieval. The secret is rendered in the orgchart container at the path /vault/secrets/database-config.txt.

Finally, view the secret written to the orgchart container:

$ kubectl exec orgchart-599cb74d9c-s8hhm --container orgchart -- cat /vault/secrets/database-config.txt

data: map[password:db-secret-password username:db-readonly-user]

metadata: map[created_time:2019-12-20T18:17:50.930264759Z deletion_time: destroyed:false version:2]

The secret is successfully present in the container. Secrets injected into the container can further be templatized to suit the application needs.
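As an example of templating, the Vault Agent injector supports vault.hashicorp.com/agent-inject-template-<filename> annotations whose value is a Consul Template snippet. The sketch below writes a hypothetical patch file, deployment-03-template.yml, that renders the secret as a PostgreSQL connection string; the host postgres, port 5432 and database appdb are placeholders. With kv-v2, the secret's fields sit under .Data.data.

```sh
#!/bin/sh
# Hypothetical patch file: render the secret as a connection string instead
# of the default map output. Host, port and database name are placeholders.
cat > deployment-03-template.yml <<'EOF'
spec:
  template:
    metadata:
      annotations:
        vault.hashicorp.com/agent-inject: "true"
        vault.hashicorp.com/role: "internal-app"
        vault.hashicorp.com/agent-inject-secret-database-config.txt: "internal/data/database/config"
        vault.hashicorp.com/agent-inject-template-database-config.txt: |
          {{- with secret "internal/data/database/config" -}}
          postgresql://{{ .Data.data.username }}:{{ .Data.data.password }}@postgres:5432/appdb
          {{- end -}}
EOF
```

It would be applied like the earlier patch: kubectl patch deployment orgchart --patch "$(cat deployment-03-template.yml)".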

By now, the significance of Vault in a highly dynamic, cloud-native infrastructure should be clear: it removes the operational overhead of managing application and service secrets and lets your infrastructure scale gracefully.
