Replacing MetalLB with Cilium BGP to implement a load balancer in Kubernetes …

Karim

Posted on March 1, 2023

Cilium is open source software that transparently secures network connectivity between application services deployed on Linux container management platforms such as Docker and Kubernetes.

At the foundation of Cilium is a new Linux kernel technology called eBPF, which allows powerful security visibility and control logic to be inserted dynamically within Linux itself. Because eBPF runs inside the Linux kernel, Cilium security policies can be applied and updated without any change to the application code or the container configuration.

Introduction to Cilium & Hubble - Cilium 1.13.0 documentation

"How the Hive Came To Bee" - a story of eBPF and Cilium so far - Isovalent

BGP provides a way to advertise routes using traditional networking protocols, so that Cilium-managed services can be reached from outside the cluster. Here we will see how to configure Cilium's native support for advertising LoadBalancer service IPs and a Kubernetes node's Pod CIDR range via BGP.

This support relies on MetalLB's simple and efficient IP allocation implementation and on its minimal BGP protocol support. As a result, the Cilium configuration is the same as MetalLB's. The feature is still in beta:

- BGP (beta) - Cilium 1.13.0 documentation

- Deploying a Kubernetes cluster on bare metal at Scaleway with Kontena Pharos, MetalLB and…

- MetalLB

More precisely, when a Service of type LoadBalancer is created, Cilium allocates it an IP from a specified pool. Once the IP is allocated, the agents announce it via BGP according to the Service's ExternalTrafficPolicy. Let's put this into practice by launching an instance on Linode (now Akamai) running Ubuntu 22.04 LTS:

Akamai takes the cloud computing turn - Le Monde Informatique

```
linode-cli linodes create \
  --image 'linode/ubuntu22.04' \
  --region eu-central \
  --type g7-highmem-1 \
  --label lxdserver \
  --authorized_users $USER \
  --booted true \
  --backups_enabled false \
  --private_ip false
```

Installing the LXD hypervisor on this Ubuntu 22.04 LTS instance:

```
root@localhost:~# snap install lxd
2023-03-01T19:27:40Z INFO Waiting for automatic snapd restart...
lxd 5.11-ad0b61e from Canonical✓ installed
root@localhost:~# lxd init
Would you like to use LXD clustering? (yes/no) [default=no]:
Do you want to configure a new storage pool? (yes/no) [default=yes]:
Name of the new storage pool [default=default]:
Name of the storage backend to use (lvm, zfs, btrfs, ceph, dir) [default=zfs]: dir
Would you like to connect to a MAAS server? (yes/no) [default=no]:
Would you like to create a new local network bridge? (yes/no) [default=yes]:
What should the new bridge be called? [default=lxdbr0]:
What IPv4 address should be used? (CIDR subnet notation, “auto” or “none”) [default=auto]:
What IPv6 address should be used? (CIDR subnet notation, “auto” or “none”) [default=auto]: none
Would you like the LXD server to be available over the network? (yes/no) [default=no]:
Would you like stale cached images to be updated automatically? (yes/no) [default=yes]:
Would you like a YAML "lxd init" preseed to be printed? (yes/no) [default=no]:
root@localhost:~# lxc ls
To start your first container, try: lxc launch ubuntu:22.04
Or for a virtual machine: lxc launch ubuntu:22.04 --vm

+------+-------+------+------+------+-----------+
| NAME | STATE | IPV4 | IPV6 | TYPE | SNAPSHOTS |
+------+-------+------+------+------+-----------+
```

Configuring the default profile in LXD:

```
root@localhost:~# ssh-keygen
Generating public/private rsa key pair.
Enter file in which to save the key (/root/.ssh/id_rsa): Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /root/.ssh/id_rsa
Your public key has been saved in /root/.ssh/id_rsa.pub
The key fingerprint is:
SHA256:Nk0YMZ5JM5i5EMOjZ/YTJ+GiWNTOhv/9jhLtVAz7f8k root@localhost
The key's randomart image is:
+---[RSA 3072]----+
| oo. +B. |
| . =.=o.O |
| . = + o=+. |
| + O = ooo |
| o B o =So. |
|. . . +.o.. |
| . * . . . |
| o o. . E |
| ..oo . |
+----[SHA256]-----+
root@localhost:~# lxc profile show default > lxd-profile-default.yaml
root@localhost:~# cat lxd-profile-default.yaml
config:
  user.user-data: |
    #cloud-config
    ssh_authorized_keys:
      - @@SSHPUB@@
  environment.http_proxy: ""
  user.network_mode: ""
description: Default LXD profile
devices:
  eth0:
    name: eth0
    network: lxdbr0
    type: nic
  root:
    path: /
    pool: default
    type: disk
name: default
used_by: []
root@localhost:~# sed -ri "s'@@SSHPUB@@'$(cat ~/.ssh/id_rsa.pub)'" lxd-profile-default.yaml
root@localhost:~# lxc profile edit default < lxd-profile-default.yaml
```

and a dedicated profile for the Kubernetes installation:

```
root@localhost:~# lxc profile create k8s
Profile k8s created
root@localhost:~# wget https://raw.githubusercontent.com/ubuntu/microk8s/master/tests/lxc/microk8s.profile -O microk8s.profile
Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 2606:50c0:8000::154, 2606:50c0:8001::154, 2606:50c0:8002::154, ...
Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|2606:50c0:8000::154|:443... connected.
HTTP request sent, awaiting response... 200 OK
Length: 816 [text/plain]
Saving to: ‘microk8s.profile’

microk8s.profile 100%[=============================================================================================>] 816 --.-KB/s in 0s
root@localhost:~# cat microk8s.profile | lxc profile edit k8s
root@localhost:~# rm microk8s.profile
```

Launching three LXC instances running Ubuntu 22.04 LTS with the default and k8s profiles:

```
root@localhost:~# for i in {1..3}; do lxc launch -p default -p k8s ubuntu:22.04 k3s$i; done
Creating k3s1
Starting k3s1
Creating k3s2
Starting k3s2
Creating k3s3
Starting k3s3
root@localhost:~# lxc ls
+------+---------+---------------------+------+-----------+-----------+
| NAME |  STATE  |        IPV4         | IPV6 |   TYPE    | SNAPSHOTS |
+------+---------+---------------------+------+-----------+-----------+
| k3s1 | RUNNING | 10.26.23.163 (eth0) |      | CONTAINER | 0         |
+------+---------+---------------------+------+-----------+-----------+
| k3s2 | RUNNING | 10.26.23.75 (eth0)  |      | CONTAINER | 0         |
+------+---------+---------------------+------+-----------+-----------+
| k3s3 | RUNNING | 10.26.23.87 (eth0)  |      | CONTAINER | 0         |
+------+---------+---------------------+------+-----------+-----------+
```

I install a k3s cluster following the recommendations of the Cilium documentation.

The first step is to install a K3s master node, making sure to disable the default CNI plugin (Flannel) and the built-in network policy enforcer:

Installation Using K3s - Cilium 1.13.0 documentation

```
root@localhost:~# ssh ubuntu@10.26.23.163
Warning: Permanently added '10.26.23.163' (ED25519) to the list of known hosts.

The programs included with the Ubuntu system are free software;
the exact distribution terms for each program are described in the
individual files in /usr/share/doc/*/copyright.

Ubuntu comes with ABSOLUTELY NO WARRANTY, to the extent permitted by
applicable law.

To run a command as administrator (user "root"), use "sudo <command>".
See "man sudo_root" for details.

ubuntu@k3s1:~$ curl -sfL https://get.k3s.io | INSTALL_K3S_EXEC='--flannel-backend=none --disable-network-policy' sh -
[INFO] Finding release for channel stable
[INFO] Using v1.25.6+k3s1 as release
[INFO] Downloading hash https://github.com/k3s-io/k3s/releases/download/v1.25.6+k3s1/sha256sum-amd64.txt
[INFO] Downloading binary https://github.com/k3s-io/k3s/releases/download/v1.25.6+k3s1/k3s
[INFO] Verifying binary download
[INFO] Installing k3s to /usr/local/bin/k3s
[INFO] Skipping installation of SELinux RPM
[INFO] Creating /usr/local/bin/kubectl symlink to k3s
[INFO] Creating /usr/local/bin/crictl symlink to k3s
[INFO] Creating /usr/local/bin/ctr symlink to k3s
[INFO] Creating killall script /usr/local/bin/k3s-killall.sh
[INFO] Creating uninstall script /usr/local/bin/k3s-uninstall.sh
[INFO] env: Creating environment file /etc/systemd/system/k3s.service.env
[INFO] systemd: Creating service file /etc/systemd/system/k3s.service
[INFO] systemd: Enabling k3s unit
Created symlink /etc/systemd/system/multi-user.target.wants/k3s.service → /etc/systemd/system/k3s.service.
[INFO] systemd: Starting k3s
```

After retrieving the node token (stored on the server in /var/lib/rancher/k3s/server/node-token), I install the two workers in this k3s cluster:

```
root@localhost:~# ssh ubuntu@10.26.23.75
Warning: Permanently added '10.26.23.75' (ED25519) to the list of known hosts.

The programs included with the Ubuntu system are free software;
the exact distribution terms for each program are described in the
individual files in /usr/share/doc/*/copyright.

Ubuntu comes with ABSOLUTELY NO WARRANTY, to the extent permitted by
applicable law.

To run a command as administrator (user "root"), use "sudo <command>".
See "man sudo_root" for details.

ubuntu@k3s2:~$ curl -sfL https://get.k3s.io | K3S_URL='https://10.26.23.163:6443' K3S_TOKEN=K100df339c075cfa287710648ed7ba381667496813d04b7603150856c8c673cc07b::server:2300bb645db019e47caaa971f0f8460b sh -
[INFO] Finding release for channel stable
[INFO] Using v1.25.6+k3s1 as release
[INFO] Downloading hash https://github.com/k3s-io/k3s/releases/download/v1.25.6+k3s1/sha256sum-amd64.txt
[INFO] Downloading binary https://github.com/k3s-io/k3s/releases/download/v1.25.6+k3s1/k3s
[INFO] Verifying binary download
[INFO] Installing k3s to /usr/local/bin/k3s
[INFO] Skipping installation of SELinux RPM
[INFO] Creating /usr/local/bin/kubectl symlink to k3s
[INFO] Creating /usr/local/bin/crictl symlink to k3s
[INFO] Creating /usr/local/bin/ctr symlink to k3s
[INFO] Creating killall script /usr/local/bin/k3s-killall.sh
[INFO] Creating uninstall script /usr/local/bin/k3s-agent-uninstall.sh
[INFO] env: Creating environment file /etc/systemd/system/k3s-agent.service.env
[INFO] systemd: Creating service file /etc/systemd/system/k3s-agent.service
[INFO] systemd: Enabling k3s-agent unit
Created symlink /etc/systemd/system/multi-user.target.wants/k3s-agent.service → /etc/systemd/system/k3s-agent.service.
[INFO] systemd: Starting k3s-agent
ubuntu@k3s2:~$ exit
logout
Connection to 10.26.23.75 closed.
root@localhost:~# ssh ubuntu@10.26.23.87
Warning: Permanently added '10.26.23.87' (ED25519) to the list of known hosts.

The programs included with the Ubuntu system are free software;
the exact distribution terms for each program are described in the
individual files in /usr/share/doc/*/copyright.

Ubuntu comes with ABSOLUTELY NO WARRANTY, to the extent permitted by
applicable law.

To run a command as administrator (user "root"), use "sudo <command>".
See "man sudo_root" for details.

ubuntu@k3s3:~$ curl -sfL https://get.k3s.io | K3S_URL='https://10.26.23.163:6443' K3S_TOKEN=K100df339c075cfa287710648ed7ba381667496813d04b7603150856c8c673cc07b::server:2300bb645db019e47caaa971f0f8460b sh -
[INFO] Finding release for channel stable
[INFO] Using v1.25.6+k3s1 as release
[INFO] Downloading hash https://github.com/k3s-io/k3s/releases/download/v1.25.6+k3s1/sha256sum-amd64.txt
[INFO] Downloading binary https://github.com/k3s-io/k3s/releases/download/v1.25.6+k3s1/k3s
[INFO] Verifying binary download
[INFO] Installing k3s to /usr/local/bin/k3s
[INFO] Skipping installation of SELinux RPM
[INFO] Creating /usr/local/bin/kubectl symlink to k3s
[INFO] Creating /usr/local/bin/crictl symlink to k3s
[INFO] Creating /usr/local/bin/ctr symlink to k3s
[INFO] Creating killall script /usr/local/bin/k3s-killall.sh
[INFO] Creating uninstall script /usr/local/bin/k3s-agent-uninstall.sh
[INFO] env: Creating environment file /etc/systemd/system/k3s-agent.service.env
[INFO] systemd: Creating service file /etc/systemd/system/k3s-agent.service
[INFO] systemd: Enabling k3s-agent unit
Created symlink /etc/systemd/system/multi-user.target.wants/k3s-agent.service → /etc/systemd/system/k3s-agent.service.
[INFO] systemd: Starting k3s-agent
```
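The two worker joins above repeat the same steps by hand. As a minimal sketch, assuming passwordless SSH as ubuntu to every node, passwordless sudo (the default in Ubuntu cloud images) and the standard k3s token location /var/lib/rancher/k3s/server/node-token, the token retrieval and the joins could be scripted as follows. `run`, `join_workers` and the `DRY_RUN` switch are hypothetical helpers, not part of k3s:

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not part of k3s) automating the manual worker joins.
# Assumptions: passwordless SSH as "ubuntu" to every node, passwordless sudo,
# and the default k3s node-token location on the server.

# run: execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# join_workers SERVER_IP WORKER_IP...
# Reads the node token from the server, then runs the k3s installer on each
# worker with K3S_URL and K3S_TOKEN set, exactly like the manual commands.
join_workers() {
  local server="$1"
  shift
  local token worker
  if [ "${DRY_RUN:-0}" = "1" ]; then
    token="<node-token>"   # placeholder: nothing is contacted in dry-run mode
  else
    token="$(ssh "ubuntu@${server}" sudo cat /var/lib/rancher/k3s/server/node-token)" || return 1
  fi
  for worker in "$@"; do
    run ssh "ubuntu@${worker}" \
      "curl -sfL https://get.k3s.io | K3S_URL='https://${server}:6443' K3S_TOKEN='${token}' sh -"
  done
}
```

For example, `DRY_RUN=1 join_workers 10.26.23.163 10.26.23.75 10.26.23.87` only prints the two `ssh` commands; without `DRY_RUN=1` they are executed.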

```
ubuntu@k3s1:~$ mkdir .kube && sudo cp /etc/rancher/k3s/k3s.yaml .kube/config && sudo chown -R $USER .kube/*
ubuntu@k3s1:~$ curl -LO https://storage.googleapis.com/kubernetes-release/release/v1.25.6/bin/linux/amd64/kubectl && chmod +x kubectl && sudo rm /usr/local/bin/kubectl && sudo mv kubectl /usr/bin
ubuntu@k3s1:~$ kubectl cluster-info
Kubernetes control plane is running at https://127.0.0.1:6443
CoreDNS is running at https://127.0.0.1:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy
Metrics-server is running at https://127.0.0.1:6443/api/v1/namespaces/kube-system/services/https:metrics-server:https/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.
ubuntu@k3s1:~$ kubectl get nodes -o wide
NAME   STATUS     ROLES                  AGE     VERSION        INTERNAL-IP    EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION      CONTAINER-RUNTIME
k3s2   NotReady   <none>                 3m31s   v1.25.6+k3s1   10.26.23.75    <none>        Ubuntu 22.04.1 LTS   5.15.0-60-generic   containerd://1.6.15-k3s1
k3s3   NotReady   <none>                 3m8s    v1.25.6+k3s1   10.26.23.87    <none>        Ubuntu 22.04.1 LTS   5.15.0-60-generic   containerd://1.6.15-k3s1
k3s1   NotReady   control-plane,master   7m19s   v1.25.6+k3s1   10.26.23.163   <none>        Ubuntu 22.04.1 LTS   5.15.0-60-generic   containerd://1.6.15-k3s1
ubuntu@k3s1:~$ kubectl get po,svc -A
NAMESPACE     NAME                                          READY   STATUS    RESTARTS   AGE
kube-system   pod/metrics-server-5f9f776df5-jzq2f           0/1     Pending   0          7m13s
kube-system   pod/local-path-provisioner-79f67d76f8-tg2dj   0/1     Pending   0          7m13s
kube-system   pod/coredns-597584b69b-4bg2h                  0/1     Pending   0          7m13s
kube-system   pod/helm-install-traefik-k9m7j                0/1     Pending   0          7m14s
kube-system   pod/helm-install-traefik-crd-4vh8j            0/1     Pending   0          7m14s

NAMESPACE     NAME                     TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)                  AGE
default       service/kubernetes       ClusterIP   10.43.0.1      <none>        443/TCP                  7m28s
kube-system   service/kube-dns         ClusterIP   10.43.0.10     <none>        53/UDP,53/TCP,9153/TCP   7m25s
kube-system   service/metrics-server   ClusterIP   10.43.252.18   <none>        443/TCP                  7m24s
```

Next, installing Helm 3:

```
ubuntu@k3s1:~$ curl https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3 | bash
Downloading https://get.helm.sh/helm-v3.11.1-linux-amd64.tar.gz
Verifying checksum... Done.
Preparing to install helm into /usr/local/bin
helm installed into /usr/local/bin/helm
ubuntu@k3s1:~$ chmod 400 .kube/config
ubuntu@k3s1:~$ helm ls
NAME    NAMESPACE    REVISION    UPDATED    STATUS    CHART    APP VERSION
```

followed by Cilium BGP (beta)…

BGP (beta) - Cilium 1.13.0 documentation

```
ubuntu@k3s1:~$ helm repo add cilium https://helm.cilium.io/
"cilium" has been added to your repositories
ubuntu@k3s1:~$ helm repo update
Hang tight while we grab the latest from your chart repositories...
...Successfully got an update from the "cilium" chart repository
Update Complete. ⎈Happy Helming!⎈
```

BGP support is enabled by providing the BGP configuration through a ConfigMap and by setting a few Helm values; otherwise BGP is disabled by default. For the BGP peer, I use the gateway provided by LXD (the lxdbr0 bridge address):

```
root@localhost:~# ifconfig lxdbr0
lxdbr0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 10.26.23.1  netmask 255.255.255.0  broadcast 0.0.0.0
        ether 00:16:3e:a3:ba:e8  txqueuelen 1000  (Ethernet)
        RX packets 8742  bytes 563385 (563.3 KB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 18343  bytes 264191495 (264.1 MB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
root@localhost:~# lxc ls
+------+---------+---------------------+------+-----------+-----------+
| NAME |  STATE  |        IPV4         | IPV6 |   TYPE    | SNAPSHOTS |
+------+---------+---------------------+------+-----------+-----------+
| k3s1 | RUNNING | 10.26.23.163 (eth0) |      | CONTAINER | 0         |
+------+---------+---------------------+------+-----------+-----------+
| k3s2 | RUNNING | 10.26.23.75 (eth0)  |      | CONTAINER | 0         |
+------+---------+---------------------+------+-----------+-----------+
| k3s3 | RUNNING | 10.26.23.87 (eth0)  |      | CONTAINER | 0         |
+------+---------+---------------------+------+-----------+-----------+
```

and on this range of free IP addresses:

hence this YAML configuration file:

```
ubuntu@k3s1:~$ cat config.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: bgp-config
  namespace: kube-system
data:
  config.yaml: |
    peers:
      - peer-address: 10.26.23.1
        peer-asn: 64512
        my-asn: 64512
    address-pools:
      - name: default
        protocol: bgp
        addresses:
          - 10.26.23.32/27
ubuntu@k3s1:~$ kubectl apply -f config.yaml
configmap/bgp-config created
```
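Before handing such a pool to Cilium, it can help to check exactly which addresses it covers. A minimal sketch in plain bash, IPv4 only and using 64-bit shell arithmetic; the helper names `ip_to_int`, `int_to_ip` and `cidr_range` are hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helpers: IPv4 <-> integer conversion and CIDR range computation
# with bash arithmetic only, e.g. to inspect the LoadBalancer address pool.

# ip_to_int A.B.C.D -> 32-bit integer
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<<"$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# int_to_ip N -> dotted-quad string
int_to_ip() {
  local n="$1"
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# cidr_range A.B.C.D/P -> "FIRST LAST COUNT"
cidr_range() {
  local ip="${1%/*}" prefix="${1#*/}"
  local size=$(( 1 << (32 - prefix) ))                         # addresses in the block
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))  # netmask as an integer
  local first=$(( $(ip_to_int "$ip") & mask ))                 # network address
  local last=$(( first + size - 1 ))
  echo "$(int_to_ip "$first") $(int_to_ip "$last") $size"
}
```

`cidr_range 10.26.23.32/27` prints `10.26.23.32 10.26.23.63 32`: the pool spans .32 to .63 of the lxdbr0 subnet, which excludes the gateway (.1) and the three node addresses (.75, .87, .163). This check does not look at the DHCP range configured on lxdbr0.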

Installing Cilium with Helm:

```
ubuntu@k3s1:~$ helm install cilium cilium/cilium --version 1.13.0 \
  --namespace kube-system \
  --set bgp.enabled=true \
  --set bgp.announce.loadbalancerIP=true \
  --set bgp.announce.podCIDR=true
NAME: cilium
LAST DEPLOYED: Wed Mar 1 20:05:18 2023
NAMESPACE: kube-system
STATUS: deployed
REVISION: 1
TEST SUITE: None
NOTES:
You have successfully installed Cilium with Hubble.

Your release version is 1.13.0.

For any further help, visit https://docs.cilium.io/en/v1.13/gettinghelp
```

Upgrading this installation to add Cilium Hubble (with the Hubble UI, which gives access to the service map):

- Service Map & Hubble UI - Cilium 1.13.0 documentation

- Tutorial: Tips and Tricks to install Cilium - Isovalent

```
ubuntu@k3s1:~$ helm upgrade cilium cilium/cilium --version 1.13.0 \
  --namespace kube-system \
  --set bgp.enabled=true \
  --set bgp.announce.loadbalancerIP=true \
  --set bgp.announce.podCIDR=true \
  --set sctp.enabled=true \
  --set hubble.enabled=true \
  --set hubble.metrics.enabled="{dns,drop,tcp,flow,icmp,http}" \
  --set hubble.relay.enabled=true \
  --set hubble.ui.enabled=true \
  --set hubble.ui.service.type=LoadBalancer \
  --set hubble.relay.service.type=LoadBalancer
Release "cilium" has been upgraded. Happy Helming!
NAME: cilium
LAST DEPLOYED: Wed Mar 1 20:09:00 2023
NAMESPACE: kube-system
STATUS: deployed
REVISION: 2
TEST SUITE: None
NOTES:
You have successfully installed Cilium with Hubble Relay and Hubble UI.

Your release version is 1.13.0.

For any further help, visit https://docs.cilium.io/en/v1.13/gettinghelp
```

```
root@localhost:~# lxc ls
+------+---------+--------------------------+------+-----------+-----------+
| NAME |  STATE  |           IPV4           | IPV6 |   TYPE    | SNAPSHOTS |
+------+---------+--------------------------+------+-----------+-----------+
| k3s1 | RUNNING | 10.26.23.163 (eth0)      |      | CONTAINER | 0         |
|      |         | 10.0.2.99 (cilium_host)  |      |           |           |
+------+---------+--------------------------+------+-----------+-----------+
| k3s2 | RUNNING | 10.26.23.75 (eth0)       |      | CONTAINER | 0         |
|      |         | 10.0.0.117 (cilium_host) |      |           |           |
+------+---------+--------------------------+------+-----------+-----------+
| k3s3 | RUNNING | 10.0.1.16 (cilium_host)  |      | CONTAINER | 0         |
+------+---------+--------------------------+------+-----------+-----------+
```

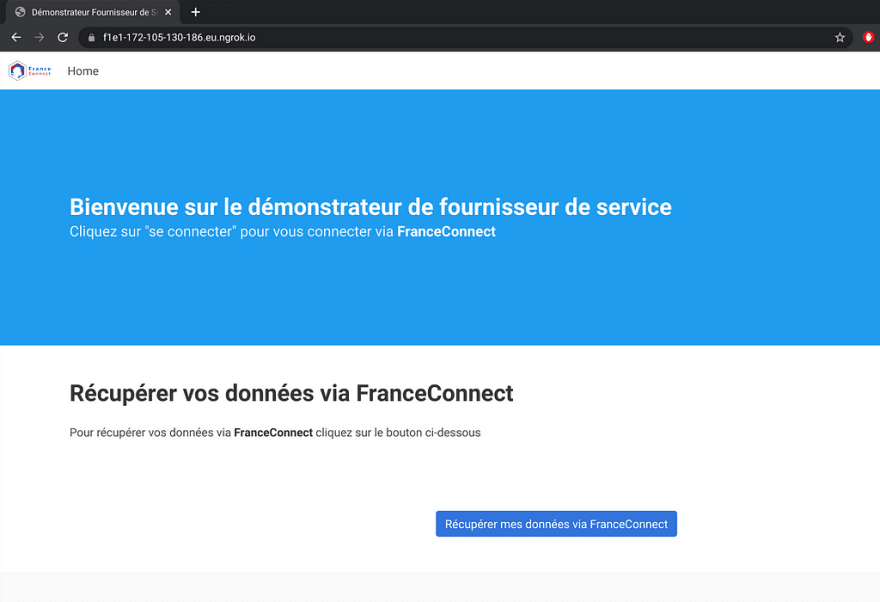

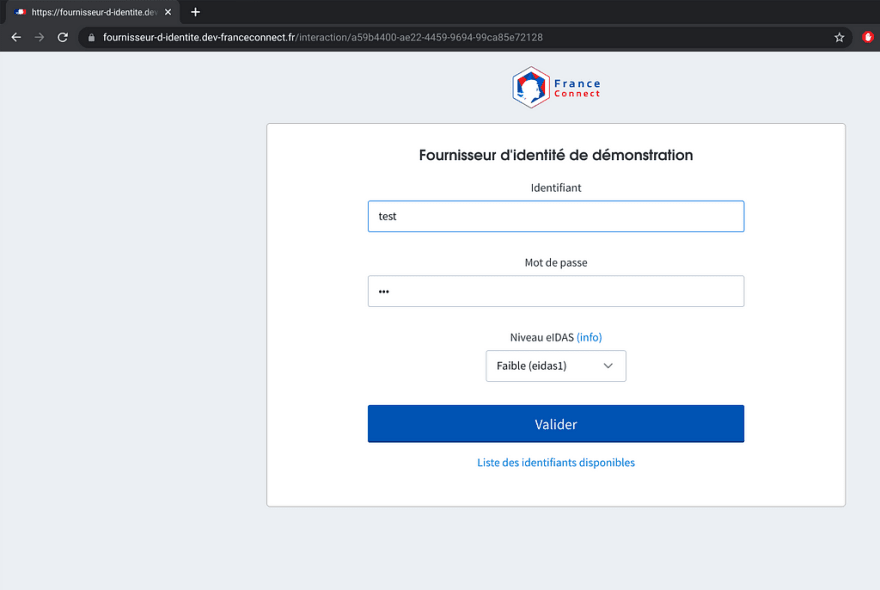

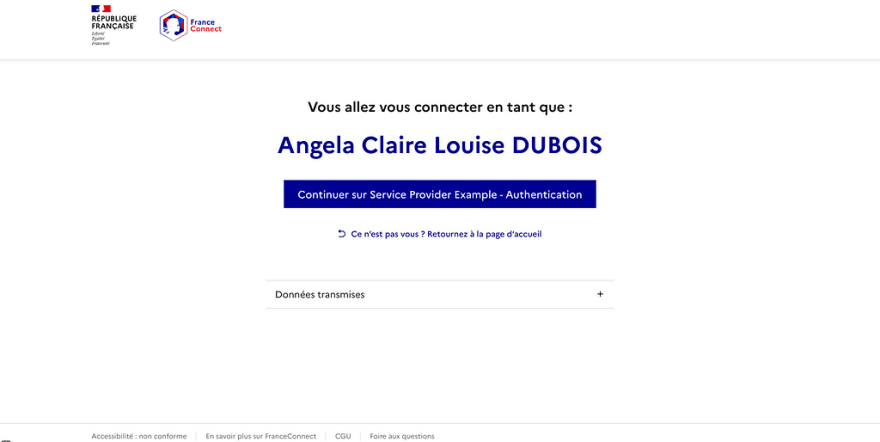

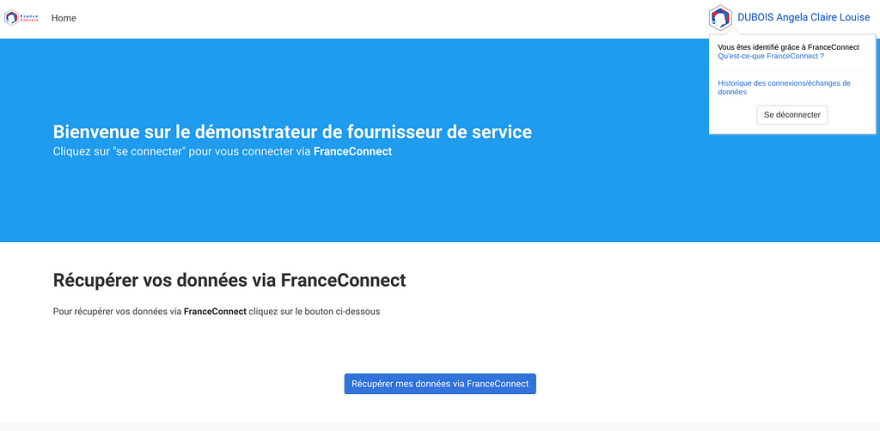

From there, a test by deploying, once again, the trusty FranceConnect demo app:

```
ubuntu@k3s1:~$ cat <<EOF | kubectl apply -f -
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: fcdemo3
  labels:
    app: fcdemo3
spec:
  replicas: 4
  selector:
    matchLabels:
      app: fcdemo3
  template:
    metadata:
      labels:
        app: fcdemo3
    spec:
      containers:
        - name: fcdemo3
          image: mcas/franceconnect-demo2:latest
          ports:
            - containerPort: 3000
---
apiVersion: v1
kind: Service
metadata:
  name: fcdemo-service
spec:
  type: LoadBalancer
  selector:
    app: fcdemo3
  ports:
    - protocol: TCP
      port: 80
      targetPort: 3000
EOF
deployment.apps/fcdemo3 created
service/fcdemo-service created
ubuntu@k3s1:~$ kubectl get po,svc
NAME                           READY   STATUS    RESTARTS   AGE
pod/fcdemo3-6bff77544b-n8x5j   1/1     Running   0          56s
pod/fcdemo3-6bff77544b-n7shv   1/1     Running   0          56s
pod/fcdemo3-6bff77544b-z6s44   1/1     Running   0          56s
pod/fcdemo3-6bff77544b-wmcrg   1/1     Running   0          56s

NAME                     TYPE           CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
service/kubernetes       ClusterIP      10.43.0.1       <none>        443/TCP        54m
service/fcdemo-service   LoadBalancer   10.43.212.198   10.26.23.35   80:30918/TCP   56s
```
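To confirm that the EXTERNAL-IP handed out to fcdemo-service (10.26.23.35) does come from the 10.26.23.32/27 pool declared in the bgp-config ConfigMap, a small bash check is enough. A sketch with hypothetical helper names (`ip_to_int`, `ip_in_cidr`), IPv4 only:

```bash
#!/usr/bin/env bash
# Hypothetical helpers: test whether an IPv4 address belongs to a CIDR block.

# ip_to_int A.B.C.D -> 32-bit integer
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<<"$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# ip_in_cidr IP A.B.C.D/P -> exit status 0 if IP is inside the block
ip_in_cidr() {
  local ip="$1" net="${2%/*}" prefix="${2#*/}"
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  # Same network part once masked => same block.
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}

# Example against the live cluster (not run here):
#   lb_ip=$(kubectl get svc fcdemo-service -o jsonpath='{.status.loadBalancer.ingress[0].ip}')
#   ip_in_cidr "$lb_ip" 10.26.23.32/27 && echo "allocated from the BGP pool"
```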

At this point, the demo app is indeed reachable from node k3s1 at the IP address allocated by Cilium from the BGP pool:

```
ubuntu@k3s1:~$ http http://10.26.23.35
HTTP/1.1 200 OK
Connection: keep-alive
Content-Length: 2740
Content-Type: text/html; charset=utf-8
Date: Wed, 01 Mar 2023 20:39:41 GMT
ETag: W/"ab4-v8wreE8WoQJ/5EqjkvRXgbacjz4"
X-Powered-By: Express
set-cookie: connect.sid=s%3AiC15aGdDdBtHWW7zrIjqNiUw0Kq93-W6.WBQcxUEQIloR1IqQTlWXFRUOijoLNsdvAq4bVcSKOso; Path=/; HttpOnly

<!doctype html>
<html lang="en">
<head>
<meta charset="UTF-8">
<meta name="viewport"
content="width=device-width, user-scalable=no, initial-scale=1.0, maximum-scale=1.0, minimum-scale=1.0">
<meta http-equiv="X-UA-Compatible" content="ie=edge">
<link rel="stylesheet" href="https://cdnjs.cloudflare.com/ajax/libs/bulma/0.7.1/css/bulma.min.css" integrity="sha256-zIG416V1ynj3Wgju/scU80KAEWOsO5rRLfVyRDuOv7Q=" crossorigin="anonymous" />
<title>Démonstrateur Fournisseur de Service</title>
</head>
<body>
<nav class="navbar" role="navigation" aria-label="main navigation">
<div class="navbar-start">
<div class="navbar-brand">
<a class="navbar-item" href="/">
<img src="/img/fc_logo_v2.png" alt="Démonstrateur Fournisseur de Service" height="28">
</a>
</div>
<a href="/" class="navbar-item">
Home
</a>
</div>
<div class="navbar-end">
<div class="navbar-item">
<div class="buttons">
<a class="button is-light" href="/login">Se connecter</a>
</div>
</div>
</div>
</nav>
<section class="hero is-info is-medium">
<div class="hero-body">
<div class="container">
<h1 class="title">
Bienvenue sur le démonstrateur de fournisseur de service
</h1>
<h2 class="subtitle">
Cliquez sur "se connecter" pour vous connecter via <strong>FranceConnect</strong>
</h2>
</div>
</div>
</section>
<section class="section is-small">
<div class="container">
<h1 class="title">Récupérer vos données via FranceConnect</h1>
<p>Pour récupérer vos données via <strong>FranceConnect</strong> cliquez sur le bouton ci-dessous</p>
</div>
</section>
<section class="section is-small">
<div class="container has-text-centered">
<!-- FC btn -->
<a href="/data" class="button is-link">Récupérer mes données via FranceConnect</a>
</div>
</section>
<footer class="footer custom-content">
<div class="content has-text-centered">
<p>
<a href="https://partenaires.franceconnect.gouv.fr/fcp/fournisseur-service"
target="_blank"
alt="lien vers la documentation France Connect">
<strong>Documentation FranceConnect Partenaires</strong>
</a>
</p>
</div>
</footer>
<!-- This script brings the FranceConnect tools modal which enable "disconnect", "see connection history" and "see FC FAQ" features -->
<script src="https://fcp.integ01.dev-franceconnect.fr/js/franceconnect.js"></script>
</body>
</html>
```
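As stated at the beginning, which nodes announce a LoadBalancer IP depends on the Service's externalTrafficPolicy. fcdemo-service does not set it, so it uses the Kubernetes default, Cluster. The toy model below sketches the difference, assuming MetalLB's BGP-mode behavior (which this beta feature builds on): with Cluster every node announces the IP, with Local only nodes running a ready endpoint of the Service do. `announcing_nodes` is a hypothetical helper that models the rule; it does not query Cilium:

```bash
#!/usr/bin/env bash
# Toy model (hypothetical helper, not Cilium code) of which nodes announce a
# LoadBalancer IP over BGP, assuming MetalLB BGP-mode semantics.
# announcing_nodes POLICY "NODE..." "NODES_WITH_READY_ENDPOINTS..."
announcing_nodes() {
  local policy="$1" nodes="$2" endpoints="$3" n
  local out=()
  for n in $nodes; do
    case "$policy" in
      Cluster)
        out+=("$n")                  # every node attracts traffic, then forwards it
        ;;
      Local)
        case " $endpoints " in
          *" $n "*) out+=("$n") ;;   # only nodes hosting a ready endpoint
        esac
        ;;
      *)
        echo "unknown externalTrafficPolicy: $policy" >&2
        return 1
        ;;
    esac
  done
  echo "${out[*]:-}"
}
```

With externalTrafficPolicy: Local, Kubernetes preserves the client source IP and avoids the extra hop between nodes, at the cost of announcing the IP only from nodes that run a pod of the Service.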

For a clearer view in a browser, I expose it through ngrok:

```
ubuntu@k3s1:~$ curl -s https://ngrok-agent.s3.amazonaws.com/ngrok.asc | sudo tee /etc/apt/trusted.gpg.d/ngrok.asc >/dev/null && echo "deb https://ngrok-agent.s3.amazonaws.com buster main" | sudo tee /etc/apt/sources.list.d/ngrok.list && sudo apt update && sudo apt install ngrok
ubuntu@k3s1:~$ ngrok http 10.26.23.35:80
ngrok                                                        (Ctrl+C to quit)

Add Okta or Azure to protect your ngrok dashboard with SSO: https://ngrok.com/dashSSO

Session Status                online
Account                       eddi now (Plan: Free)
Version                       3.1.1
Region                        Europe (eu)
Latency                       -
Web Interface                 http://127.0.0.1:4040
Forwarding                    https://f1e1-172-105-130-186.eu.ngrok.io -> http://10.26.23.35:80

Connections                   ttl     opn     rt1     rt5     p50     p90
                              0       0       0.00    0.00    0.00    0.00
```

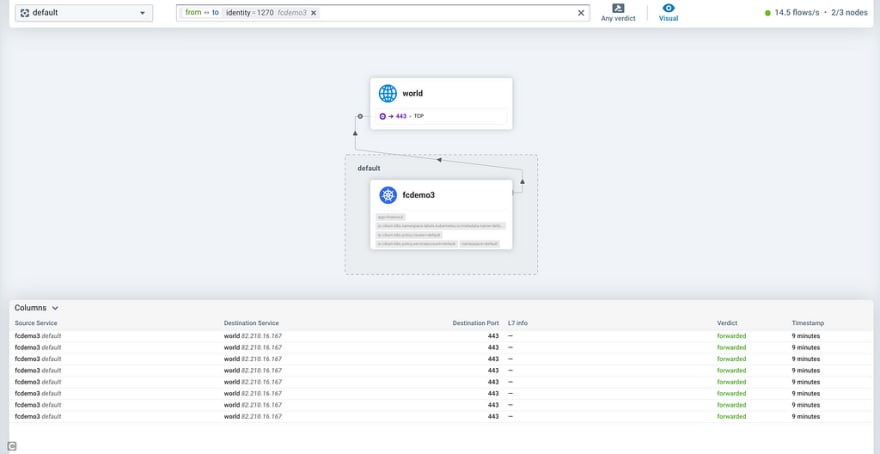

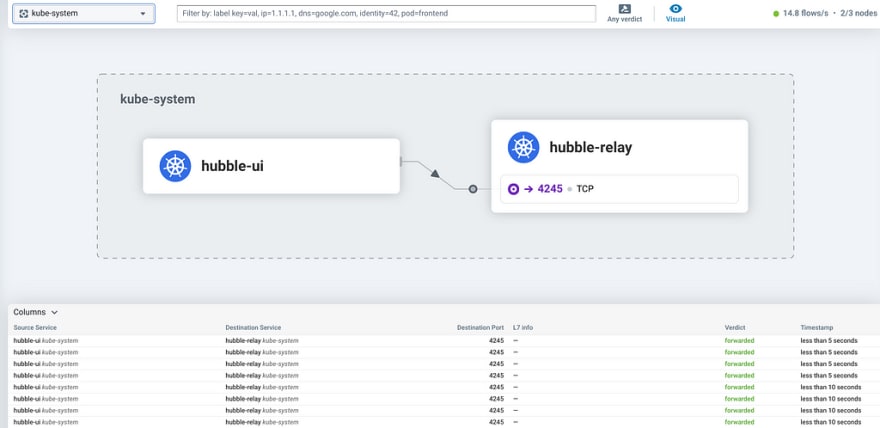

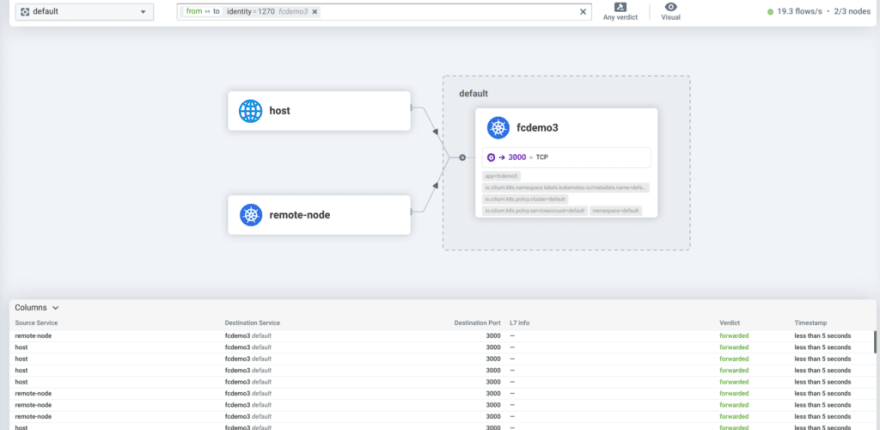

A quick look at observability in Cilium with Hubble and its graphical UI, also exposed as a LoadBalancer service:

```
ubuntu@k3s1:~$ kubectl get svc -A
NAMESPACE     NAME             TYPE           CLUSTER-IP      EXTERNAL-IP   PORT(S)                      AGE
default       kubernetes       ClusterIP      10.43.0.1       <none>        443/TCP                      72m
kube-system   kube-dns         ClusterIP      10.43.0.10      <none>        53/UDP,53/TCP,9153/TCP       72m
kube-system   metrics-server   ClusterIP      10.43.252.18    <none>        443/TCP                      72m
kube-system   hubble-peer      ClusterIP      10.43.92.181    <none>        443/TCP                      49m
kube-system   traefik          LoadBalancer   10.43.155.56    10.26.23.32   80:30264/TCP,443:31266/TCP   48m
kube-system   hubble-relay     LoadBalancer   10.43.128.151   10.26.23.33   80:31405/TCP                 45m
kube-system   hubble-metrics   ClusterIP      None            <none>        9965/TCP                     45m
kube-system   hubble-ui        LoadBalancer   10.43.66.116    10.26.23.34   80:30183/TCP                 45m
default       fcdemo-service   LoadBalancer   10.43.212.198   10.26.23.35   80:30918/TCP                 19m
root@localhost:~# ssh -L 0.0.0.0:80:10.43.66.116:80 ubuntu@10.26.23.163
```
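The LoadBalancer addresses allocated so far (Traefik, Hubble Relay, Hubble UI and the demo app, .32 to .35) can be pulled out of this listing with a small filter. A sketch, assuming the default column layout of `kubectl get svc -A` shown above; `list_lb_services` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get svc -A` output on stdin and print
# "NAMESPACE/NAME EXTERNAL-IP" for every Service of type LoadBalancer.
# Assumes the default columns: NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP ...
list_lb_services() {
  # NR > 1 skips the header line; $3 is TYPE, $5 is EXTERNAL-IP.
  awk 'NR > 1 && $3 == "LoadBalancer" { print $1 "/" $2, $5 }'
}
```

Usage: `kubectl get svc -A | list_lb_services`. For anything beyond a quick look, `kubectl get svc -A -o json` (or `-o jsonpath=...`) is more robust than parsing columns.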

Note that Cilium also lets you configure proxy-based load balancing for Kubernetes services, which is useful for use cases such as gRPC load balancing.

Once enabled, traffic to a Kubernetes service is redirected to a Cilium-managed Envoy proxy for load balancing. This feature is independent of the Kubernetes Ingress support feature:

Proxy Load Balancing for Kubernetes Services (beta) - Cilium 1.13.0 documentation
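Why per-request balancing matters for gRPC: gRPC multiplexes many requests over one long-lived HTTP/2 connection, so a connection-level (L4) balancer sends all of them to the backend chosen when the connection was opened, whereas an L7 proxy such as Envoy can choose a backend for each request. The toy simulation below only illustrates that difference; it is not Cilium or Envoy code, the backend names echo-a and echo-b are hypothetical, and the L7 side assumes plain round robin:

```bash
#!/usr/bin/env bash
# Toy model (not Cilium/Envoy code): one client, one long-lived connection,
# N requests sent over it, two hypothetical backends.
# simulate_lb MODE N   (MODE is "l4" or "l7")
simulate_lb() {
  local mode="$1" requests="$2" i pick
  local backends=(echo-a echo-b)
  local conn_pick=0   # backend chosen once, when the connection is opened
  local out=()
  for (( i = 0; i < requests; i++ )); do
    if [ "$mode" = "l4" ]; then
      pick=$conn_pick                    # L4: same backend for the whole connection
    else
      pick=$(( i % ${#backends[@]} ))    # L7: round robin per request
    fi
    out+=("${backends[$pick]}")
  done
  echo "${out[*]:-}"
}
```

`simulate_lb l4 4` keeps every request on echo-a, while `simulate_lb l7 4` alternates between echo-a and echo-b.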

Testing with this manifest, whose test workloads consist of a client deployment and an echo-service service with two backend pods:

```
ubuntu@k3s1:~$ kubectl apply -f https://raw.githubusercontent.com/cilium/cilium/HEAD/examples/kubernetes/servicemesh/envoy/test-application-proxy-loadbalancing.yaml
configmap/coredns-configmap created
deployment.apps/client created
deployment.apps/echo-service created
service/echo-service created
ubuntu@k3s1:~$ watch -c kubectl get po,svc -A
ubuntu@k3s1:~$ CLIENT=$(kubectl get pods -l name=client -o jsonpath='{.items[0].metadata.name}')
ubuntu@k3s1:~$ kubectl exec -it $CLIENT -- curl -v echo-service:8080/
* Trying 10.43.199.92:8080...
* Connected to echo-service (10.43.199.92) port 8080 (#0)
> GET / HTTP/1.1
> Host: echo-service:8080
> User-Agent: curl/7.83.1
> Accept: */*
>
* Mark bundle as not supporting multiuse
< HTTP/1.1 200 OK
< X-Powered-By: Express
< Vary: Origin, Accept-Encoding
< Access-Control-Allow-Credentials: true
< Accept-Ranges: bytes
< Cache-Control: public, max-age=0
< Last-Modified: Wed, 21 Sep 2022 10:25:56 GMT
< ETag: W/"809-1835f952f20"
< Content-Type: text/html; charset=UTF-8
< Content-Length: 2057
< Date: Wed, 01 Mar 2023 21:12:11 GMT
< Connection: keep-alive
< Keep-Alive: timeout=5
<
<html>
<head>
<link
rel="stylesheet"
href="https://use.fontawesome.com/releases/v5.8.2/css/all.css"
integrity="sha384-oS3vJWv+0UjzBfQzYUhtDYW+Pj2yciDJxpsK1OYPAYjqT085Qq/1cq5FLXAZQ7Ay"
crossorigin="anonymous"
/>
<link rel="stylesheet" href="style.css" />
<title>JSON Server</title>
</head>
<body>
<header>
<div class="container">
<nav>
<ul>
<li class="title">
JSON Server
</li>
<li>
<a href="https://github.com/users/typicode/sponsorship">
<i class="fas fa-heart"></i>GitHub Sponsors
</a>
</li>
<li>
<a href="https://my-json-server.typicode.com">
<i class="fas fa-burn"></i>My JSON Server
</a>
</li>
<li>
<a href="https://thanks.typicode.com">
<i class="far fa-laugh"></i>Supporters
</a>
</li>
</ul>
</nav>
</div>
</header>
<main>
<div class="container">
<h1>Congrats!</h1>
<p>
You're successfully running JSON Server
<br />
✧*。٩(ˊᗜˋ*)و✧*。
</p>
<div id="resources"></div>
<p>
To access and modify resources, you can use any HTTP method:
</p>
<p>
<code>GET</code>
<code>POST</code>
<code>PUT</code>
<code>PATCH</code>
<code>DELETE</code>
<code>OPTIONS</code>
</p>
<div id="custom-routes"></div>
<h1>Documentation</h1>
<p>
<a href="https://github.com/typicode/json-server">
README
</a>
</p>
</div>
</main>
<footer>
<div class="container">
<p>
To replace this page, create a
<code>./public/index.html</code> file.
</p>
</div>
</footer>
<script src="script.js"></script>
</body>
</html>
* Connection #0 to host echo-service left intact
```

Adding a layer 7 policy, here through the service.cilium.io/lb-l7=enabled annotation, introduces the Envoy proxy into the path of this traffic. The request now goes through the Envoy proxy and is then forwarded to the backend…

```
ubuntu@k3s1:~$ kubectl annotate service echo-service service.cilium.io/lb-l7=enabled
service/echo-service annotated
ubuntu@k3s1:~$ kubectl exec -it $CLIENT -- curl -v echo-service:8080/
* Trying 10.43.199.92:8080...
* Connected to echo-service (10.43.199.92) port 8080 (#0)
> GET / HTTP/1.1
> Host: echo-service:8080
> User-Agent: curl/7.83.1
> Accept: */*
>
* Mark bundle as not supporting multiuse
< HTTP/1.1 200 OK
< X-Powered-By: Express
< Vary: Origin, Accept-Encoding
< Access-Control-Allow-Credentials: true
< Accept-Ranges: bytes
< Cache-Control: public, max-age=0
< Last-Modified: Wed, 21 Sep 2022 10:25:56 GMT
< ETag: W/"809-1835f952f20"
< Content-Type: text/html; charset=UTF-8
< Content-Length: 2057
< Date: Wed, 01 Mar 2023 21:12:33 GMT
< Connection: keep-alive
< Keep-Alive: timeout=5
<
<html>
<head>
<link
rel="stylesheet"
href="https://use.fontawesome.com/releases/v5.8.2/css/all.css"
integrity="sha384-oS3vJWv+0UjzBfQzYUhtDYW+Pj2yciDJxpsK1OYPAYjqT085Qq/1cq5FLXAZQ7Ay"
crossorigin="anonymous"
/>
<link rel="stylesheet" href="style.css" />
<title>JSON Server</title>
</head>
<body>
<header>
<div class="container">
<nav>
<ul>
<li class="title">
JSON Server
</li>
<li>
<a href="https://github.com/users/typicode/sponsorship">
<i class="fas fa-heart"></i>GitHub Sponsors
</a>
</li>
<li>
<a href="https://my-json-server.typicode.com">
<i class="fas fa-burn"></i>My JSON Server
</a>
</li>
<li>
<a href="https://thanks.typicode.com">
<i class="far fa-laugh"></i>Supporters
</a>
</li>
</ul>
</nav>
</div>
</header>
<main>
<div class="container">
<h1>Congrats!</h1>
<p>
You're successfully running JSON Server
<br />
✧*。٩(ˊᗜˋ*)و✧*。
</p>
<div id="resources"></div>
<p>
To access and modify resources, you can use any HTTP method:
</p>
<p>
<code>GET</code>
<code>POST</code>
<code>PUT</code>
<code>PATCH</code>
<code>DELETE</code>
<code>OPTIONS</code>
</p>
<div id="custom-routes"></div>
<h1>Documentation</h1>
<p>
<a href="https://github.com/typicode/json-server">
README
</a>
</p>
</div>
</main>
<footer>
<div class="container">
<p>
To replace this page, create a
<code>./public/index.html</code> file.
</p>
</div>
</footer>
<script src="script.js"></script>
</body>
</html>
* Connection #0 to host echo-service left intact
```

Cilium and kube-router can also work together, with kube-router handling BGP peering and route propagation while Cilium handles policy enforcement and load balancing:

Using Kube-Router to Run BGP - Cilium 1.13.0 documentation

Cilium can also be used together with BIRD:

Using BIRD to run BGP - Cilium 1.13.0 documentation

Routing across Kubernetes clusters is provided by Cluster Mesh:

Topology Aware Routing across Clusters with Cluster Mesh

A summary of what's new in the latest Cilium release, plus a few additional links…
