GitHub security: what does it take to protect your company from credentials leaking on GitHub?

Jean

Posted on May 20, 2020

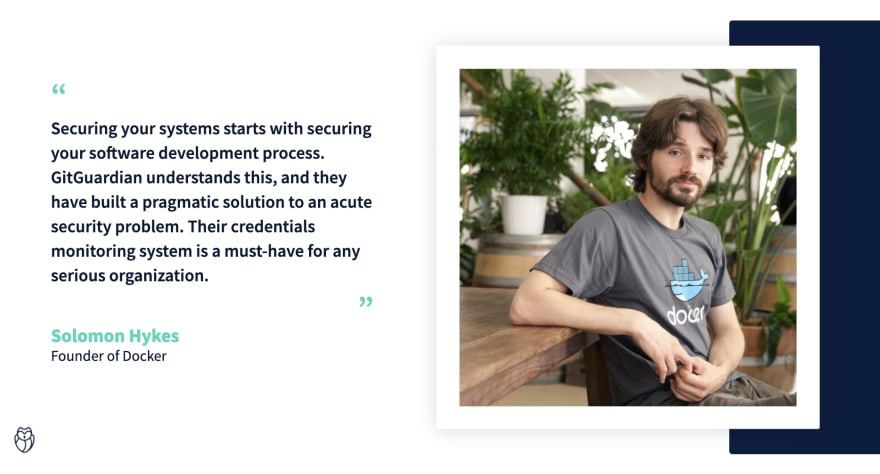

This post was originally written by GitGuardian's CEO Jérémy Thomas. This guide is intended for CISOs, Application Security, Threat Response, and other security professionals who want to protect their companies from credentials leaking on GitHub.

Read this guide if:

- You are aware of the risks of corporate credentials leaking on public GitHub. If you still need convincing, we have 3 years of historical GitGuardian monitoring data that we can filter down to your company domain name, aggregate to remove sensitive details, and share with you upon request, without you having to spend any time talking to our sales reps if you don’t want to:

Get three years of historical GitGuardian monitoring data filtered down to your company domain name

- You are in the market for a solution, and would like to investigate the requirements such a solution should have.

Disclaimer: I am the CEO of GitGuardian, which offers solutions for detecting, alerting on, and remediating secrets leaked within GitHub, so this article may contain some biases. GitGuardian has been monitoring public GitHub for over 3 years, which is why we are uniquely qualified to share our views on this important security issue. Security professionals are often overwhelmed by an army of vendors, many of which are equipped with disputable facts and figures and favor the use of scare tactics. These professionals therefore prefer to leverage their network or peer recommendations to make buying decisions. I am confident that the information in this guide can be backed up by solid and objective evidence. If you’d like to share your comments on it, please email me directly at jeremy [dot] thomas [at] gitguardian [dot-com].

Requirements for public GitHub monitoring and why they are important

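We’ve classified the requirements into functional categories: defining and monitoring your perimeter, detecting incidents, alerting, remediating, and logging and analyzing.

We will go through these requirements one by one and explain why they are important.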

Define & Monitor your perimeter

Monitoring your perimeter requires the ability to automatically associate repositories, developers and published code with your organization. There are millions of commits per day on public GitHub. How can organizations cut through the noise and focus exclusively on the information that is of direct interest to them?

Organization repositories monitoring

These are the repositories that are listed under your company’s GitHub Organization, if your company has one. This only concerns companies which have open-source projects. Less than 20% of corporate leaks on GitHub occur within public repositories owned by organizations. The majority of the remaining leaks occur on developers’ personal repositories, and a small portion also occurs on IT service providers' or other suppliers’ repositories.

Developers’ personal public repositories monitoring

Around 80% of corporate leaks on GitHub occur on developers’ personal public repositories. And yes, I’m really talking about corporate leaks, not personal ones.

In the vast majority of the cases, these leaks are unintentional, not malevolent. They happen for many reasons:

- Developers typically have one GitHub account that they use both for personal and professional purposes, sometimes mixing the repositories.

- It is easy to misconfigure git and push wrong data.

- It is easy to forget that the entire git history is still publicly visible even if sensitive data has since been deleted from the current version of the source code.
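To make the last point concrete, here is a minimal sketch of how a secret that was later deleted can still be found in a repository's history. It parses the text produced by `git log -p --all` and reports added lines that look like a hard-coded secret. The `SECRET_PATTERN` regex and both function names are illustrative assumptions, not how any particular product works.

```python
import re
import subprocess

# Hypothetical pattern for illustration only: a quoted value assigned to a
# variable whose name contains "password", "secret" or "api_key".
SECRET_PATTERN = re.compile(
    r"(?i)(password|secret|api_key)\s*[=:]\s*['\"][^'\"]+['\"]"
)


def find_secrets_in_patch_log(patch_log):
    """Return (commit_sha, added_line) pairs for added lines matching the pattern.

    `patch_log` is the text output of `git log -p`.
    """
    findings = []
    current_commit = None
    for line in patch_log.splitlines():
        if line.startswith("commit "):
            # Commit header line: "commit <sha>"
            current_commit = line.split()[1]
        elif line.startswith("+") and not line.startswith("+++"):
            # A line added by this commit ("+++" is the file header, not content).
            added = line[1:]
            if SECRET_PATTERN.search(added):
                findings.append((current_commit, added.strip()))
    return findings


def scan_repository_history(repo_path):
    """Run `git log -p --all` on a local clone and scan every commit's patch."""
    result = subprocess.run(
        ["git", "-C", repo_path, "log", "-p", "--all"],
        capture_output=True,
        text=True,
        check=True,
    )
    return find_secrets_in_patch_log(result.stdout)
```

A commit that replaces the hard-coded value with an environment variable cleans up the latest version of the file, but the earlier commit that introduced the value is still reported by this scan.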

Detect incidents

Sensitive information that is leaked on the platform generally falls under two categories:

- What developers call “secrets”,

- Intellectual Property like proprietary source code.

Secret: anything that gives access to a system: API keys, database connection strings, private keys, usernames and passwords. Secrets can give access to cloud infrastructure, databases, payment systems, messaging systems, file sharing systems, CRMs, internal portals, and more.

It is very rare, in our experience, to see valid PII leaked on the platform, although we often see secrets giving access to systems containing PII.

High precision

Precision answers the question: “What percentage of the items you flag as sensitive are actually sensitive?” This question is perfectly legitimate, especially in the context of SOCs being overwhelmed with too many false positive alerts.

Precision is easily measurable: the vendor sends alerts, and users can give feedback through a “true alert” / “false alert” button. Your vendor should be able to present precision metrics, backed by strong evidence records and well-defined methodology.
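As a minimal sketch of that measurement, assuming each alert's feedback is recorded as a boolean (`True` for “true alert”, `False` for “false alert”), precision is simply the share of true alerts among all alerts that received feedback. The function name and input shape are assumptions made for illustration.

```python
def precision(feedback):
    """Share of alerts marked as "true alert" among all alerts with feedback.

    `feedback` is an iterable of booleans: True for a "true alert",
    False for a "false alert". Returns None when there is no feedback yet.
    """
    feedback = list(feedback)
    if not feedback:
        return None
    return sum(feedback) / len(feedback)
```

For example, three “true alert” clicks and one “false alert” click give a precision of 0.75.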

High recall

This one is a bit tougher than precision. Recall answers the question: “What percentage of the sensitive information that was actually leaked did you detect?” Having a high recall means having a small number of missed secrets. This question is also very important, considering the impact that a single undetected credential can have for an organization.

Recall is more complicated to measure than precision. This is because finding sensitive information in source code is like finding needles in a haystack: there are a lot more sticks than there are needles. You need to manually go through thousands of sticks in order to realize that you’ve missed a needle or two. A decent proxy for recall is the number of individual API keys and additional sensitive information supported by your vendor.
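When a manually reviewed sample is available, recall can be estimated on that sample. The sketch below assumes two sets of identifiers (hypothetical names): the secrets a human reviewer found in the sample, and the items the detector flagged in the same sample.

```python
def recall(detected, labeled_secrets):
    """Share of manually labeled secrets that the detector also flagged.

    `detected` and `labeled_secrets` are sets of identifiers (for example
    file path plus line number). Returns None when the labeled sample
    contains no secrets, since recall is undefined in that case.
    """
    if not labeled_secrets:
        return None
    found = detected & labeled_secrets
    return len(found) / len(labeled_secrets)
```

Items the detector flagged that the reviewer did not label as secrets do not affect recall; they only lower precision.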

A good algorithm is able to achieve excellence in precision AND recall.

Ability to detect unprefixed credentials

Some secrets are easier to find than others, especially prefixed credentials that are strictly defined by a distinctive, unambiguous pattern.

The majority of published credentials, however, don’t fall into this category. Therefore, any solution based entirely on prefix detection will miss a lot of leaked credentials. Your vendor must be able to detect Datadog keys or OAuth tokens, for example, using techniques that combine entropy statistics with sophisticated pattern matching applied not to the presumed key itself, but to its context.
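The general idea can be sketched as follows: look for a suspicious context first (here, an assignment to a variable whose name contains `api_key`, `token` or `secret`), then keep the value only if its Shannon entropy is high enough to look random. The regex, the 3.5 bits-per-character threshold and the function names are all assumptions chosen for illustration; a production detector would be considerably more elaborate.

```python
import math
import re
from collections import Counter

# Context pattern (illustrative assumption): a quoted value of 20+ characters
# assigned to a name containing "api_key", "apikey", "token" or "secret".
CONTEXT_PATTERN = re.compile(
    r"(?i)(?:api[_-]?key|token|secret)\w*\s*[=:]\s*['\"]([A-Za-z0-9_\-]{20,})['\"]"
)


def shannon_entropy(value):
    """Shannon entropy of a string, in bits per character."""
    counts = Counter(value)
    total = len(value)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def find_candidate_secrets(line, min_entropy=3.5):
    """Return values that appear in a secret-like context AND look random."""
    return [
        value
        for value in CONTEXT_PATTERN.findall(line)
        if shannon_entropy(value) >= min_entropy
    ]
```

The context check filters out random-looking strings that are not credentials, such as checksums, while the entropy check filters out placeholders and repeated characters assigned to secret-like names.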

Keyword monitoring

When choosing keywords that you would like to be alerted on, make sure they are distinctive enough to be uniquely linked to your company. Good keywords are typically internal project names (provided they are not common), internal URLs, or a reserved IP address range (although the latter is not technically a keyword).
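Matching an IP address range is slightly different from matching a plain keyword, because every address in the range has to be recognized. A minimal sketch using Python's standard `ipaddress` module is shown below; the function name is hypothetical and the range `203.0.113.0/24` used in the example is a documentation-only range standing in for a company's own.

```python
import ipaddress
import re

# Loose candidate pattern; invalid addresses such as 999.1.1.1 are rejected
# later by the ipaddress module.
IPV4_CANDIDATE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def ips_in_range(text, cidr):
    """Return the IPv4 addresses found in `text` that belong to `cidr`."""
    network = ipaddress.ip_network(cidr)
    hits = []
    for candidate in IPV4_CANDIDATE.findall(text):
        try:
            address = ipaddress.ip_address(candidate)
        except ValueError:
            continue  # Looked like an IP address but is not a valid one.
        if address in network:
            hits.append(candidate)
    return hits
```

Calling `ips_in_range(source_code, "203.0.113.0/24")` would then flag any file mentioning a host inside that range.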

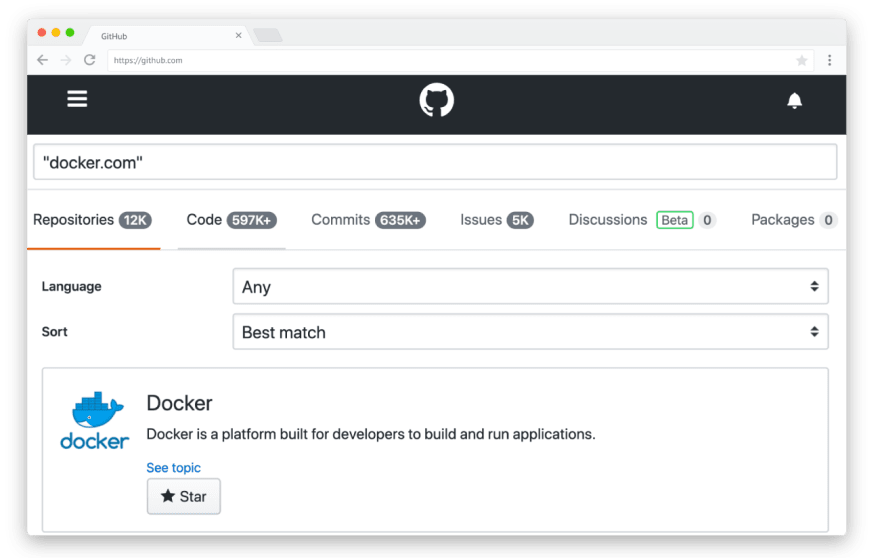

Do you want to know if a given keyword is distinctive enough? Try using the GitHub built-in search for an estimation of the results it would bring (this is just an estimation, as the GitHub search is rather limited).

Take “docker.com” as a concrete example: with over 597K code results containing the keyword, it is not a good candidate for keyword matching.

Alert

Real-time alerting

When remediating GitHub leaks, you are in a race against time.

This is especially true when discussing leaked credentials (as opposed to Intellectual Property):

- You are not fighting against the information spreading more and more widely. The moment you invalidate the credential, it no longer gives access to anything, so it is no longer a threat and further dissemination stops mattering (except for brand reputation considerations).

- You are fighting against the credentials being exploited. Credentials are extremely easy to exploit by anyone, without any specific knowledge.

Any detection process that is not fully automated and relies on human operators (typically to filter out too many false positives) is probably already too slow. You should expect your vendor to provide strong evidence that their reaction time is measured in minutes, not hours or days.
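One way to check such a claim is to compare, for each incident, the time the commit was pushed with the time the alert was received. The sketch below assumes both are available as ISO 8601 timestamps; the function names and the input format are illustrative assumptions.

```python
from datetime import datetime
from statistics import median


def reaction_minutes(pushed_at, alerted_at):
    """Minutes elapsed between the push and the alert (ISO 8601 strings)."""
    pushed = datetime.fromisoformat(pushed_at)
    alerted = datetime.fromisoformat(alerted_at)
    return (alerted - pushed).total_seconds() / 60


def median_reaction_minutes(incidents):
    """Median reaction time over (pushed_at, alerted_at) pairs."""
    return median(reaction_minutes(pushed, alerted) for pushed, alerted in incidents)
```

The median is used rather than the mean so that a single slow outlier does not hide an otherwise fast reaction time, although outliers deserve a separate look.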

Developer alerting

Facing a leak can be a tough process that requires speed, and knowledge from multiple people (typically Threat Response / Application Security / Developers).

Since the developer responsible for the leak is at the forefront of the issue, they can be your first responders. This is especially the case if your solution raised the alert fast enough after the leak occurred, so that your developer is still in front of their computer.

- The developer generally knows what the credential gives access to, services and applications that rely on it, other developers who use it, etc. But they often don’t have the right to revoke the credentials and redistribute them.

- Application Security or “DevOps” personnel have the ability to inspect logs generated during the time the key was exposed, evaluate the way a potential hacker could have moved to other systems from this entry point, revoke the credentials and redistribute them.

- Threat Response will make sure that procedures are followed, in terms of investigation, remediation, internal communications, public relations, legal, lessons learned and feedback loop.

Remediate

Integration with your remediation workflow

It is quite obvious that the solution should be integrated with your preferred SIEM, ITSM, ticketing system or chat.

One thing to keep in mind: if your organization is spread over multiple geographies or time zones, automatically associating a GitHub leak with a geography for incident dispatching purposes might not always be possible without first providing additional information to your vendor. This means that you will either have to provide your vendor with a list of your developers and their geographies, or indicate a single entry point that has global responsibilities for your vendor to alert.
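The two options can be sketched as a simple routing rule: use the developer's geography when it is known, and fall back to the global entry point otherwise. All names, logins and addresses below are hypothetical placeholders.

```python
# Hypothetical data supplied by the customer to the vendor.
DEVELOPER_REGIONS = {"alice-dev": "EMEA", "bob-dev": "APAC"}
REGION_CONTACTS = {
    "EMEA": "soc-emea@example.com",
    "APAC": "soc-apac@example.com",
}
GLOBAL_CONTACT = "soc-global@example.com"


def dispatch_contact(github_login):
    """Return the contact to alert for a leak attributed to `github_login`.

    Falls back to the global entry point when the developer or their
    region is unknown.
    """
    region = DEVELOPER_REGIONS.get(github_login)
    return REGION_CONTACTS.get(region, GLOBAL_CONTACT)
```

With this rule, a leak from an unlisted developer still reaches someone with global responsibility instead of being dropped.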

Collaboration with developers

Potential damage can rarely be estimated just by looking at the code surrounding the leaked credential, and sensitive corporate credentials are often leaked on developers’ personal projects. When alerted about a GitHub leak, your first remediation step will always be to check whether or not the developer is still working in your company, and to reach out to them with a questionnaire to gather input for impact assessment and incident prioritization.

Log & Analyze

Logging can answer multiple needs, depending of course on the data that is logged: post-incident analysis, reporting to management, demonstrating compliance to customers or auditors, security (audit trail), or transparency in what the solution is really doing.

I’d like to briefly emphasize this last point: ask your vendor for proof points! An ideal solution would provide a detailed list of every monitored developer and repository, as well as logs of every single commit that was analyzed, and reproducible results of conducted scans.

About GitGuardian

As the CEO of GitGuardian, I’m always extremely grateful for the time and trust that security professionals give us. We’ve built a sales organization that is thoroughly trained to behave with extreme respect and professionalism. This is how we sell cybersecurity software at GitGuardian:

- No scare tactics.

- Since we’ve been monitoring GitHub for 3 years now, we can provide you with personalized data from your company’s perimeter. We aggregate the data to remove any sensitive content, so that you can judge whether it is worth dedicating time to evaluating our solution.

- Consultative approach: we’ll come up with questions to help you evaluate your needs, and are always keen on sharing the technical details of what we do with your tech teams.

- Later in the sales process, we’ll show your security team the GitGuardian dashboard populated with actual data from your company.

- If we feel we’re not a good fit for your needs, we’ll let you know early in the process.

Contact us to protect your company from credentials leaking on GitHub
