Chat with your databases using LangChain

Vishnu Sivan

Posted on May 9, 2023

The rise of Large Language Models (LLMs) has brought about a significant shift in technology, empowering developers to create applications that were once beyond imagination. LangChain is an orchestration tool for prompts that leverages the capabilities of LLMs (Large Language Models) to transform the way you communicate with your database. With LangChain, you can easily converse with your database and obtain precise responses in real-time, just as if you were talking to a close friend.

In this tutorial, we will be connecting to PostgreSQL database and initiating a conversation with it using Langchain without querying the database through SQL.

Getting Started

Table of contents

- Introduction to LangChain

- Why use LangChain

- LangChain Structure

- Applications of LangChain

- Creating a question answering app using LangChain

- Creating a simple LLM call using LangChain

- Creating a prompt template

- Interacting with databases using LangChain

Introduction to LangChain

LangChain is an open-source library that offers developers a comprehensive set of resources to develop applications that run on Large Language Models (LLMs). By establishing a mechanism for linking LLMs to external data sources, such as personal documents or the internet. Developers can utilize LangChain to string together a sequence of commands to create sophisticated applications. In short, LangChain serves as a framework that enables the execution of a series of prompts to attain a specific outcome.

Why LangChain

LangChain is an important tool for developers as it makes building of complex applications using LLMs easier. It allows users to connect LLMs to other data sources. The applications can act a wider range of information by connecting LLMs to other data sources. This makes the applications more powerful and versatile.

Langchain also provides features including:

- Flexibility: LangChain is a highly flexible and extensible framework that allows easy component swapping and chain customization to cater to unique requirements.

- Speed: The LangChain development team is continually enhancing the library’s speed, ensuring that users have access to the most recent LLM functionalities.

- Community: LangChain has a strong, engaged community where users can always seek assistance if necessary.

LangChain Structure

The framework is organized into seven modules. Each module allows you to manage a different aspect of the interaction with the LLM.

Image credits: LangChain 101: Build Your Own GPT-Powered Applications — KDnuggets

- LLM LLM is the fundamental component of LangChain. It is a wrapper around the large language model which enables in utilization of the functionalities and capabilities of the model.

- Chains Many a time, to solve tasks a single API call to an LLM is not enough. This module allows other tools to be integrated. For example, you may need to get data from a specific URL, summarize the returned text, and answer questions using the generated summary. This module allows multiple tools to be concatenated in order to solve complex tasks.

- Prompts Prompts are at the core of any NLP application. It is how users interact with the model to try and obtain an output from it. It is important to to know how to write an effective prompt. LangChain provides prompt templates that enables users to format input and other utilities.

- Document Loaders and Utils LangChain’s Document Loaders and Utils modules facilitate connecting to data sources and computations respectively. The utils module provides Bash and Python interpreter sessions amongst others. These are suitable for applications where the users need to interact directly with the underlying system or when code snippets are needed to compute a specific mathematical quantity or to solve a problem instead of computing answers at once.

- Agents An agent is an LLM that makes a decision, takes an action and makes an observation on what has been done, and continues this cycle until the task is completed. LangChain library provides agents that can take actions based on inputs along the way instead of a hardcoded deterministic sequence.

- Indexes The best models are often those that are combined with some of your textual data, in order to add context or explain something to the model. This module helps us do just that.

- Memory This module enables users to create a persisting state between calls of a model. Being able to use a model that remembers what has been said in the past will improve our application.

Applications of LangChain

These are some of the applications of LangChain.

- Querying Datasets with Natural Language LLMs can write SQL queries using natural language. LangChain’s document loaders, index-related chains, and output parser help load and parse the data to generate results. Alternatively, inputting data structure to the LLM is a more common approach.

- Interacting with APIs LangChain’s chain and agent features enable users to include LLMs in a longer workflow with other API calls. This is useful for usecases, such as retrieving stock data or interacting with a cloud platform.

- Building a Chatbot Generative AI holds promise for chatbots that behave realistically. LangChain’s prompt templates provide control over the chatbot’s personality and responses. The message history tool allows for greater consistency within a conversation or even across multiple conversations by giving the chatbot a longer memory than what LLMs provide by default.

Creating a question answering app using LangChain

In the last section we covered basic understanding of LangChain. In the following section we will build a question answering app using LangChain. Follow the steps given below to build a basic question answering app using LangChain.

Installing dependencies

- Create and activate a virtual environment by executing the following command. ```

python -m venv venv

source venv/bin/activate #for ubuntu

venv/Scripts/activate #for windows

- Install `langchain`,`openai` and `python-environ` libraries using pip.

pip install langchain openai python-environ

### Setting up environment variables

Openai key is required to access langchain. Follow the steps to create a new openai key.

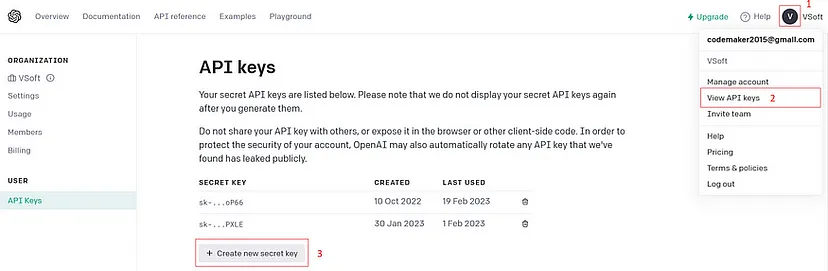

- Open platform.openai.com.

- Click on your name or icon option which is located on the top right corner of the page and select “API Keys” or click on the link — Account API Keys — OpenAI API.

- Click on create new secret key button to create a new openai key.

- Create a file named `.env` and add the `openai` key as follows.

OPENAI_API_KEY=

### Creating simple LLM call using LangChain

Create a new python file langchain_demo.py and add the following code to it.

from langchain.llms import OpenAI

Accessing the OPENAI KEY

import environ

env = environ.Env()

environ.Env.read_env()

API_KEY = env('OPENAI_API_KEY')

Simple LLM call Using LangChain

llm = OpenAI(model_name="text-davinci-003", openai_api_key=API_KEY)

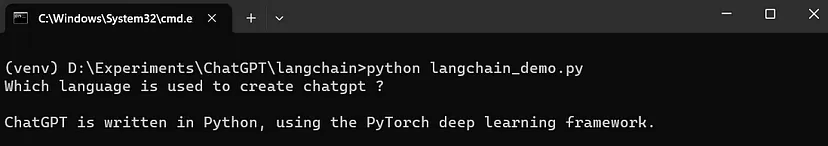

question = "Which language is used to create chatgpt ?"

print(question, llm(question))

We have imported the OpenAI wrapper from langchain. The OpenAI wrapper requires an openai key. The OpenAI key is accessed from the environment variables using the environ library. Initialize it to a llm variable with `text-davinci-003` model. Finally, define a question string and generate a response (`llm(question)`).

### Run the script

Run the LLM call using the following command.

python langchain_demo.py

You will get the output as follows.

### Creating a prompt template

Create a new python file langchain_demo.py and add the following code to it.

from langchain.llms import OpenAI

Accessing the OPENAI KEY

import environ

env = environ.Env()

environ.Env.read_env()

API_KEY = env('OPENAI_API_KEY')

Creating a prompt template and running the LLM chain

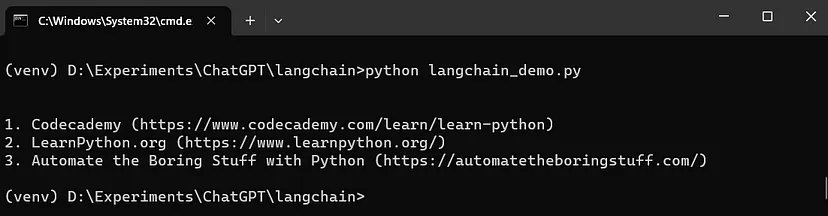

from langchain import PromptTemplate, LLMChain

template = "What are the top {n} resources to learn {language} programming?"

prompt = PromptTemplate(template=template,input_variables=['n','language'])

chain = LLMChain(llm=llm,prompt=prompt)

input = {'n':3,'language':'Python'}

print(chain.run(input))

We have imported PromptTemplate and LLMChain from langchain. Create a prompt template for getting top resources to learn a programming language by specifying template and the input_variables. Create a LLMChain and chain.run() method to run the LLM chain to get the result.

### Run the script

Run the LLM chain using the following command.

python langchain_demo.py

You will get the output as follows.

## Interacting with databases using LangChain

In this section, we will create an app to interact with the postgres database in a natural way (without querying it directly).

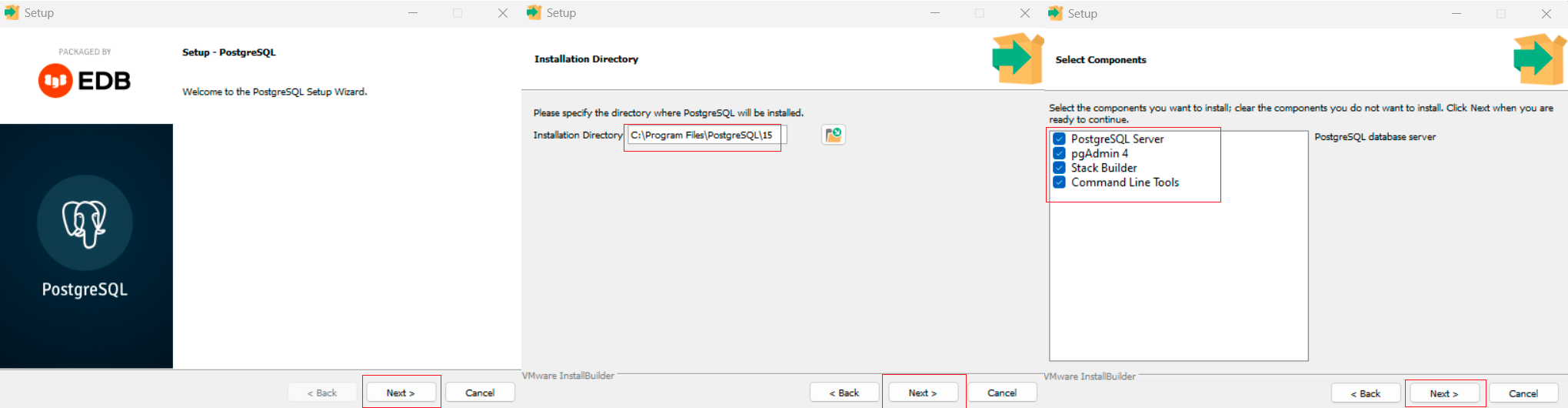

### Installing postgres

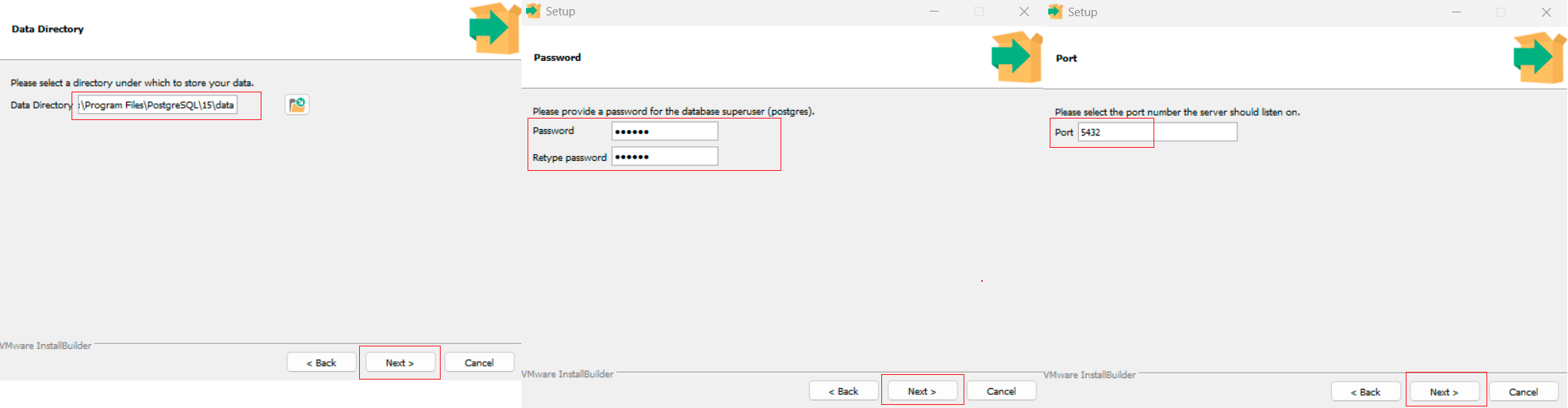

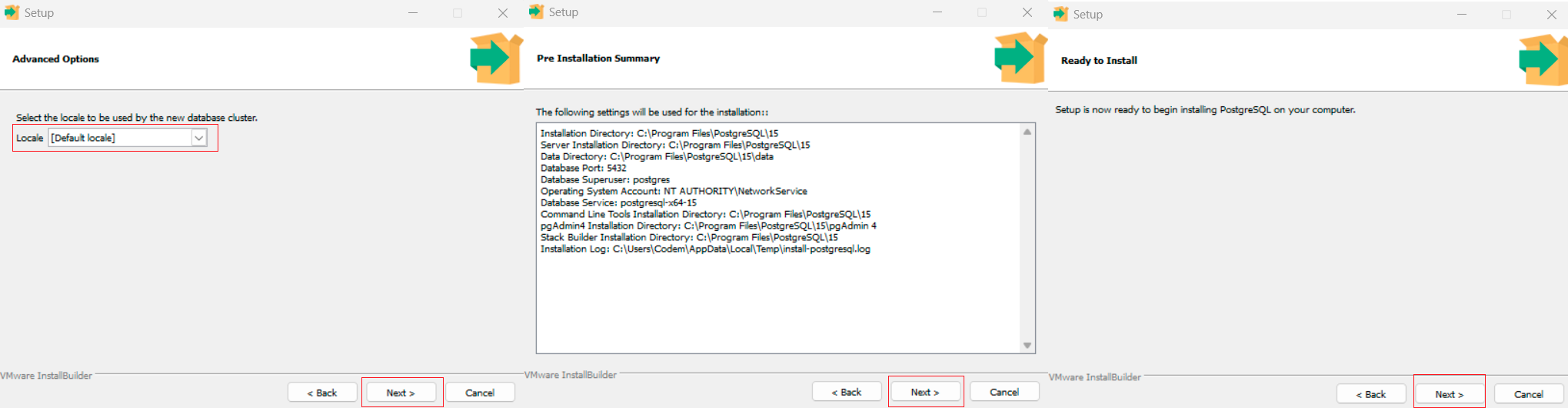

Open the URL [Community DL Page (enterprisedb.com)](https://www.enterprisedb.com/downloads/postgres-postgresql-downloads) and download the package based on your operating system. [[download for windows](https://sbp.enterprisedb.com/getfile.jsp?fileid=1258422)]

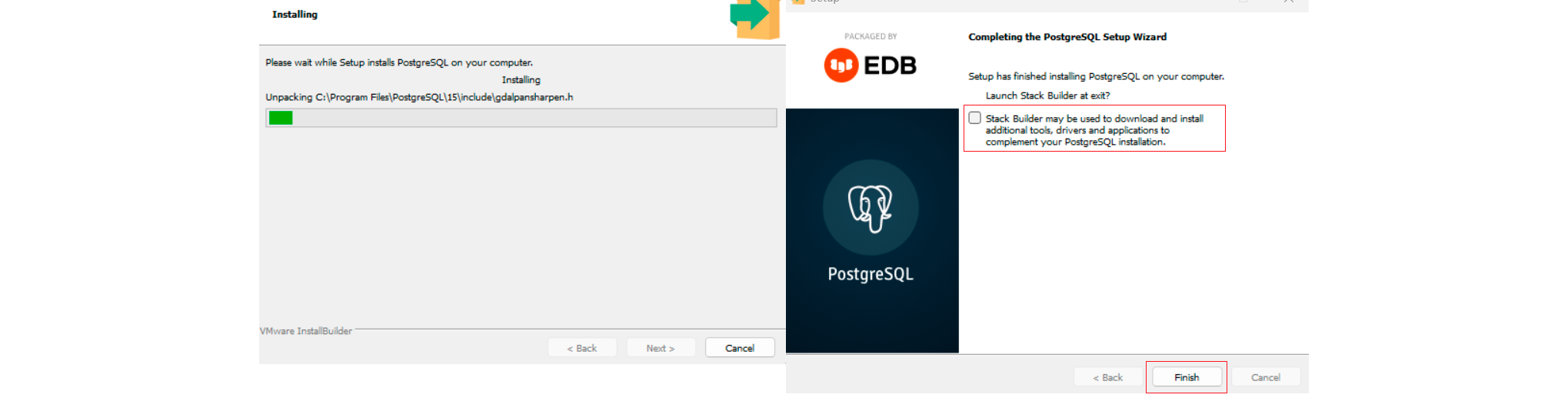

Open the installer and continue with the default values. Specify a root password and complete the installation.

### Creating database

The postgres software has been installed. Create a database table called tasks to keep the task details. This database can be used as a data source for the langchain.

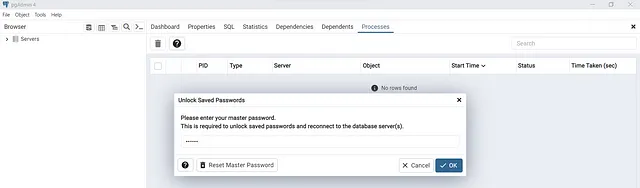

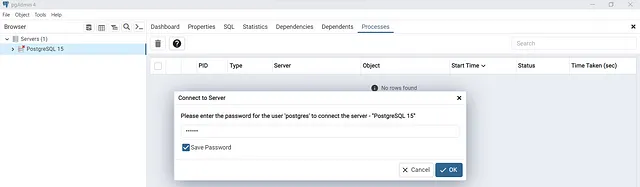

- Open pgAdmin4 application.

- Provide the root password to show the databases.

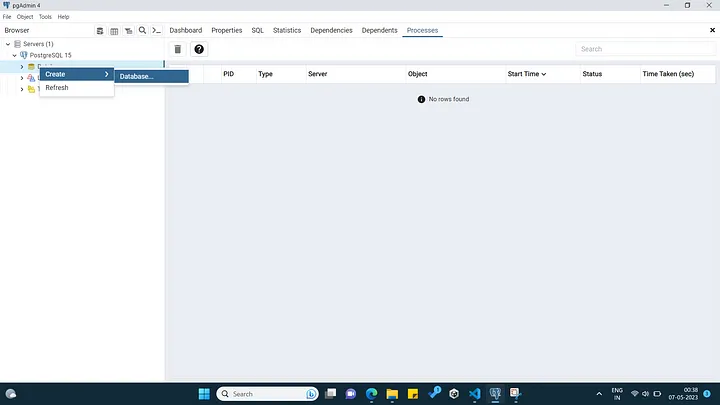

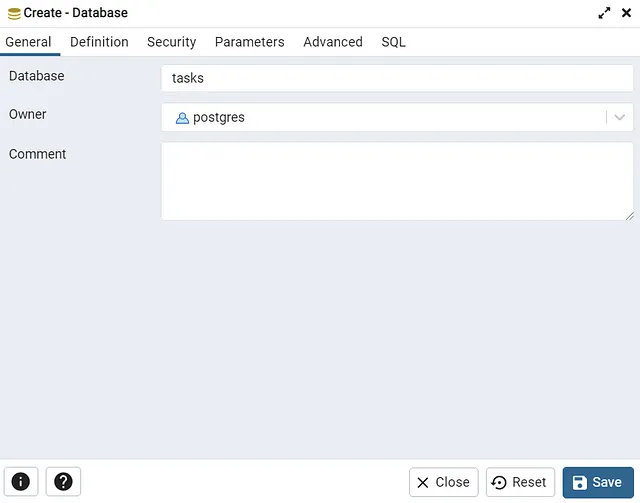

- Right click on the databases and select create → Database. Provide a database name and click on Save to finish the database creation.

### Installing dependencies

- Create and activate a virtual environment by executing the following command.

python -m venv venv

source venv/bin/activate #for ubuntu

venv/Scripts/activate #for windows

- Install `langchain`,`openai`, `python-environ` and `psycopg2` libraries using pip.

pip install langchain openai python-environ psycopg2

### Create tables and insert data

Create a new python file `db.py` and add the following code to it.

import psycopg2

import environ

env = environ.Env()

environ.Env.read_env()

Establish a connection to the PostgreSQL database

conn = psycopg2.connect(

host='localhost',

port=5432,

user='postgres',

password=env('DBPASS'),

database=env('DATABASE')

)

Create a cursor object to execute SQL commands

cursor = conn.cursor()

Create the tasks table if it doesn't exist

cursor.execute('''CREATE TABLE IF NOT EXISTS tasks

(id SERIAL PRIMARY KEY,

task TEXT NOT NULL,

completed BOOLEAN,

due_date DATE,

completion_date DATE,

priority INTEGER)''')

Insert sample tasks into the tasks table

cursor.execute("INSERT INTO tasks (task, completed, due_date, completion_date, priority) VALUES (%s, %s, %s, %s, %s)",

('Complete the web page design', True, '2023-05-01', '2023-05-03', 1))

cursor.execute("INSERT INTO tasks (task, completed, due_date, completion_date, priority) VALUES (%s, %s, %s, %s, %s)",

('Create login and signup pages', True, '2023-05-03', '2023-05-05', 2))

cursor.execute("INSERT INTO tasks (task, completed, due_date, completion_date, priority) VALUES (%s, %s, %s, %s, %s)",

('Product management', False, '2023-05-05', None, 3))

cursor.execute("INSERT INTO tasks (task, completed, due_date, completion_date, priority) VALUES (%s, %s, %s, %s, %s)",

('Cart and wishlist creation', False, '2023-05-08', None, 4))

cursor.execute("INSERT INTO tasks (task, completed, due_date, completion_date, priority) VALUES (%s, %s, %s, %s, %s)",

('Payment gateway integration', False, '2023-05-10', None, 5))

cursor.execute("INSERT INTO tasks (task, completed, due_date, completion_date, priority) VALUES (%s, %s, %s, %s, %s)",

('Order management', False, '2023-05-10', None, 6))

Commit the changes and close the connection

conn.commit()

conn.close()

We have installed the `psycopg2` library and accessed environment variables DBPASS and `DATABASE` from the `.env` file. The conn object will establish a connection to the PostgreSQL database using `psycopg2.connect()` method. The SQL queries for creating the task table and inserting some values in it will be executed with the help of cursor object.

### Running script

To create the task table and insert values to it, run the db.py script using the following command.

python db.py

#### Setting up SQL Database Chain

Create a new python file `app.py` and add the following code to it.

from langchain import OpenAI, SQLDatabase, SQLDatabaseChain

import environ

env = environ.Env()

environ.Env.read_env()

API_KEY = env('OPENAI_API_KEY')

Setup database

db = SQLDatabase.from_uri(

f"postgresql+psycopg2://postgres:{env('DBPASS')}@localhost:5432/{env('DATABASE')}",

)

setup llm

llm = OpenAI(temperature=0, openai_api_key=API_KEY)

Create db chain

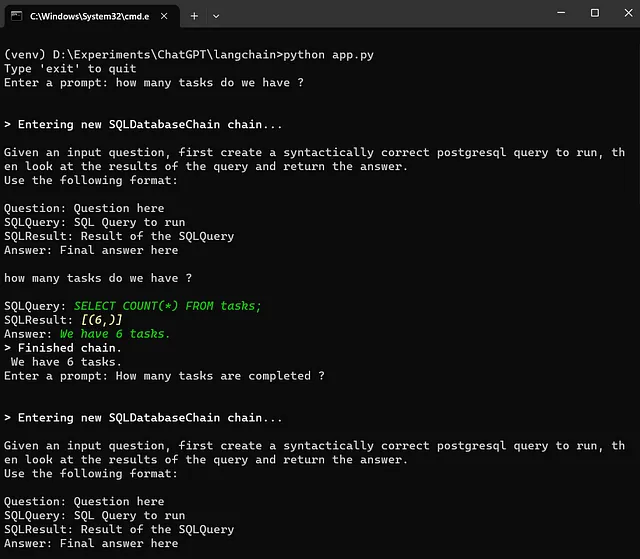

QUERY = """

Given an input question, first create a syntactically correct postgresql query to run, then look at the results of the query and return the answer.

Use the following format:

Question: Question here

SQLQuery: SQL Query to run

SQLResult: Result of the SQLQuery

Answer: Final answer here

{question}

"""

Setup the database chain

db_chain = SQLDatabaseChain(llm=llm, database=db, verbose=True)

def get_prompt():

print("Type 'exit' to quit")

while True:

prompt = input("Enter a prompt: ")

if prompt.lower() == 'exit':

print('Exiting...')

break

else:

try:

question = QUERY.format(question=prompt)

print(db_chain.run(question))

except Exception as e:

print(e)

get_prompt()

**Understanding the code:**

- Import `langchain` modules OpenAI, SQLDatabase, and `SQLDatabaseChain`

- Access OPENAI_API_KEY from the environment variables file.

- Setup the database connection using `SQLDatabase.from_uri()` method by specifying the connection URL.

- Create llm object using `OpenAI()` by specifying the temperature and the `openai_api_key`.

- Create the database chain object called `db_chain` using `SQLDatabaseChain()` by specifying the llm and database objects.

- `get_prompt()` takes user input from the console and creates a query in the format by mentioning the question as an argument. It runs the SQL database chain using `db_chain.run()` method.

### Runing the app

Run the SQL database chain using the following command.

python app.py

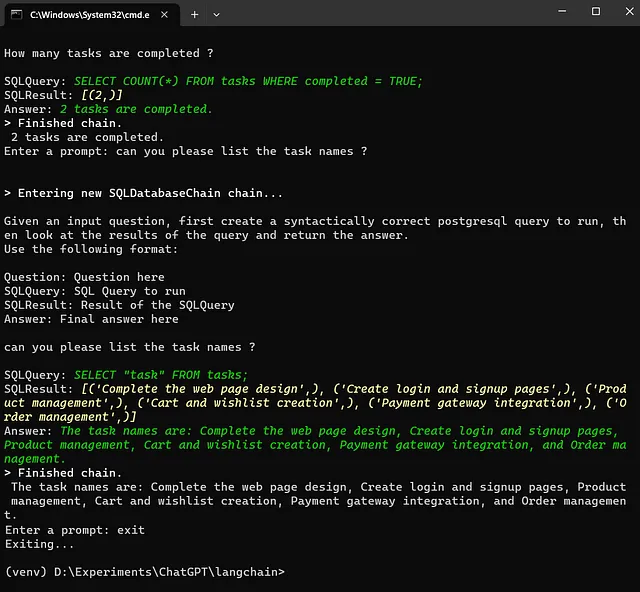

You will get the output as follows,

There you have it! Your first langchain app in python :)

Thanks for reading this article.

Thanks Gowri M Bhatt for reviewing the content.

If you enjoyed this article, please click on the clap button 👏 and share to help others find it!

The full source code for this tutorial can be found here,

[GitHub - codemaker2015/sqldatabasechain-langchain-demo | github.com](https://github.com/codemaker2015/sqldatabasechain-langchain-demo)

The article is also available on [Medium](https://codemaker2016.medium.com/chat-with-your-databases-using-langchain-bb7d31ed2e76).

Here are some useful links,

- https://youtu.be/zaYTXQFR0_s

- [Welcome to LangChain | python.langchain.com](https://python.langchain.com/en/latest/)

- [Develop applications powered by Language Models with LangChain

Get started using LangChain with Python to leverage LLMs

towardsdatascience.com](https://towardsdatascience.com/develop-applications-powered-by-language-models-with-langchain-d2f7a1d1ad1a)

Posted on May 9, 2023

Join Our Newsletter. No Spam, Only the good stuff.

Sign up to receive the latest update from our blog.