From a pet server to a Kubernetes cluster

David Morcillo

Posted on August 20, 2019

Originally published at Codegram's blog

Introduction

Today I am here to tell you a story about how we migrated our infrastructure and deployment strategy: from being worried all the time to rolling out new versions with confidence.

At Codegram, we developed a product called Empresaula. It is a business simulation platform for students (you can read more about it here), and we cannot afford downtime because it makes classes very difficult to follow for both teachers and students.

In the following schema, you can see the before/after picture of our simplified infrastructure:

We started with a single server called production-server. People usually use other cool names like john-snow or minas-tirith, but we kept it simple. Our database was on another server with automatic backups, so we felt safe until our beloved server became unreachable, and we needed to act fast. That day our office looked like this:

Nowadays, we use Kubernetes, and we have a real sense of safety. The idea of Kubernetes is to avoid getting attached to any particular server (you don't even name them); you describe your desired system instead. Kubernetes monitors your system, and if at any point something goes wrong, it tries to recover it. Kubernetes handles everything for us, so if everything goes down, we can relax like this:

Before explaining how Kubernetes works, we need to talk about the underlying technology: containers.

Docker: containers everywhere

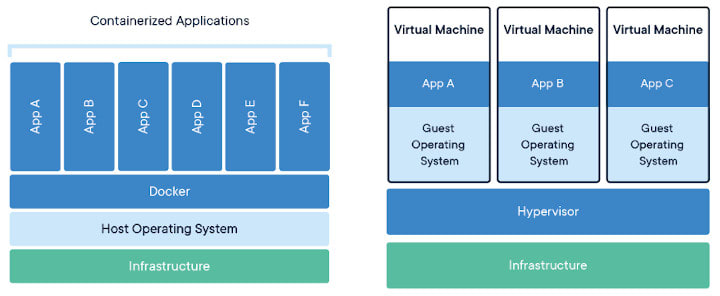

A container is just a portable application. The most widely used containerization technology is Docker, and it is the default when you are using Kubernetes. Containers are often compared with virtual machines, but they are very different:

A hypervisor manages virtual machines, and each virtual machine runs its own full operating system on top of virtualized hardware (memory, CPU, etc.). A container only needs a container runtime (e.g., Docker) and shares the kernel of the host operating system. Once you have containerized your application, you can run it on any server with the Docker engine installed.

To run your application in Docker you need to write a Dockerfile. In the following snippet, you can see an example that builds an image for a Rust application:

# Use the rust base image
FROM rust:latest AS cargo-build

# Install dependencies
RUN apt-get update && apt-get install musl-tools -y

# Add another Rust compilation target
RUN rustup target add x86_64-unknown-linux-musl

# Build the cargo dependencies against a placeholder main.rs,
# so Docker can cache this layer until Cargo.toml changes
WORKDIR /usr/src/backend
COPY Cargo.toml Cargo.toml
RUN mkdir src/
RUN echo "fn main() {println!(\"if you see this, the build broke\")}" > src/main.rs
RUN RUSTFLAGS=-Clinker=musl-gcc cargo build --release --target=x86_64-unknown-linux-musl
# Remove the placeholder build artifacts so the real sources get compiled
RUN rm -f target/x86_64-unknown-linux-musl/release/deps/backend*

# Compile the Rust application
COPY . .
RUN RUSTFLAGS=-Clinker=musl-gcc cargo build --release --target=x86_64-unknown-linux-musl

# Use the alpine base image
FROM alpine:latest

# Copy the generated binary
COPY --from=cargo-build /usr/src/backend/target/x86_64-unknown-linux-musl/release/backend /usr/local/bin/backend

# Run the application
CMD ["backend"]

The Dockerfile is like a recipe of how your application is built and executed. You create a Docker image using that recipe to run the container wherever you want.

Managing containers manually could be cumbersome, so we need something to orchestrate them: let's see how Kubernetes can help with this.

A brief introduction to Kubernetes

Kubernetes is very well defined on its website:

Kubernetes is an open-source system for automating deployment, scaling, and management of containerized applications

In other words, Kubernetes is a container orchestrator: given a desired state, it schedules the tasks needed to reach that state.

To understand how Kubernetes works, we need to know the smallest unit of scheduling: the pod. In the following schema, you can see different kinds of pods:

The most straightforward type of pod is a 1-to-1 mapping of a container. In some cases, if you have two tightly coupled containers, you can consider having them in the same pod (e.g., a backend application and a message logging system). You can also include Docker volumes inside a pod, but be aware that a pod could be moved to another server inside the Kubernetes cluster at any moment.

Kubernetes has a lot of abstractions over pods, such as the Deployment. A Deployment describes how many replicas of the same pod you want in your system, and when you change anything, it automatically rolls out a new version. In the following snippet, you can see the manifest file (e.g., my-backend.deployment.yaml) of a Deployment object:

apiVersion: 'apps/v1'
kind: 'Deployment'
metadata:
  name: 'k8s-demo-backend'
spec:
  replicas: 2
  selector:
    matchLabels:
      app: 'backend'
  template:
    metadata:
      labels:
        app: 'backend'
    spec:
      containers:
        - name: 'backend'
          image: 'k8s-demo-backend'
          imagePullPolicy: 'Never'
          ports:
            - containerPort: 8000

Here I'm specifying that my system should have two replicas of a pod running the k8s-demo-backend Docker image. If I want to roll out a new version, I need to change the previous file (e.g., changing the Docker image to k8s-demo-backend:v2) and apply it again.
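To get an intuition for what "rolling out" means, here is a minimal Python sketch of a rolling update. It is only a toy model, not how Kubernetes is implemented: the real Deployment controller works through ReplicaSets and is tuned with settings such as maxSurge and maxUnavailable. The function name `rolling_update` and its `max_surge` parameter are made up for this illustration.

```python
def rolling_update(pods, new_image, max_surge=1):
    """Yield the list of running images after each step of a toy rolling update.

    pods: list of image names, one entry per running replica.
    new_image: the image every replica should end up running.
    max_surge: how many extra pods may run while the update is in progress
               (hypothetical parameter, loosely inspired by maxSurge).
    """
    pods = list(pods)
    while any(image != new_image for image in pods):
        # Pick a small batch of pods that still run the old image.
        old = [i for i, image in enumerate(pods) if image != new_image][:max_surge]
        # Start the replacements first, so capacity never drops below the target.
        pods.extend([new_image] * len(old))
        yield list(pods)
        # Once the new pods are up, remove the old ones of this batch.
        for index in sorted(old, reverse=True):
            del pods[index]
        yield list(pods)


for step in rolling_update(["k8s-demo-backend"] * 2, "k8s-demo-backend:v2"):
    print(step)
```

At every step there are at least two pods running, which is why users should not notice the update.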

If at some point one of the servers in the cluster goes down and Kubernetes detects that fewer than two replicas are running, it will schedule a new pod on another server (and, if the cluster is configured to autoscale, it can even provision a new server to host the pod).

We cannot access a pod from the outside by default because pods are connected to a private network inside the cluster. If you need access from the outside, you need to add a Service. The Service is another abstraction that describes how to connect from the outside to a pod (or a group of pods). In the following snippet (e.g., my-backend.service.yaml) you can see an example of a Service:
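This self-healing behaviour can be pictured as a loop that keeps comparing the desired state with the actual one. The following Python sketch shows a single pass of such a loop under heavy simplifications: the function `reconcile`, the pod names, and the node names are all hypothetical, and the real scheduler takes many more factors into account when placing pods.

```python
def reconcile(desired_replicas, pods, healthy_nodes):
    """Return the pod list after one pass of a toy control loop.

    pods: list of (pod_name, node_name) tuples describing what is running.
    healthy_nodes: list of node names that are still reachable.
    """
    # Pods on unreachable nodes are considered lost.
    running = [(name, node) for name, node in pods if node in healthy_nodes]
    missing = desired_replicas - len(running)
    if missing > 0 and not healthy_nodes:
        raise RuntimeError("no healthy node available to schedule pods")
    # Schedule replacements, spreading them over the healthy nodes.
    for i in range(missing):
        node = healthy_nodes[i % len(healthy_nodes)]
        running.append((f"backend-replacement-{i}", node))
    # If there are too many pods, keep only the desired number.
    return running[:desired_replicas]


pods = [("backend-a", "node-1"), ("backend-b", "node-2")]
# node-2 went down, so only node-1 is left.
print(reconcile(2, pods, ["node-1"]))
```

After the pass, two replicas are running again, both on the remaining node.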

apiVersion: 'v1'
kind: 'Service'
metadata:
  name: 'k8s-demo-backend-service'
spec:
  type: 'LoadBalancer'
  selector:
    app: 'backend'
  ports:
    - port: 8000
      targetPort: 8000

This Service describes a LoadBalancer that will give access from the outside to the group of pods that match the selector app=backend. Any user accessing the cluster on port 8000 will be redirected to the same port in a pod described in the previous Deployment. The Service represents an abstraction, and it will behave differently depending on our cluster (e.g., if the cluster is running in the Google Kubernetes Engine it will create a Layer 4 load balancer in front of the server pool of our cluster).
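The selector is the glue between the Service and the pods: a pod receives traffic only if its labels contain every key/value pair of the selector. Here is a small Python sketch of that matching rule. The helper `select_pods` and the pod names are invented for the example, and the round-robin distribution is just one simple way to picture load balancing, not a statement about how a given cluster routes traffic.

```python
from itertools import cycle


def select_pods(selector, pods):
    """Return the pods whose labels contain every key/value pair of the selector."""
    return [
        pod for pod in pods
        if all(pod["labels"].get(key) == value for key, value in selector.items())
    ]


# Hypothetical pods running in the cluster.
pods = [
    {"name": "backend-1", "labels": {"app": "backend"}},
    {"name": "backend-2", "labels": {"app": "backend"}},
    {"name": "frontend-1", "labels": {"app": "frontend"}},
]

endpoints = select_pods({"app": "backend"}, pods)
print([pod["name"] for pod in endpoints])

# Spread three incoming requests over the matching pods, one after another.
targets = cycle(endpoints)
for request_id in range(3):
    print(f"request {request_id} -> {next(targets)['name']}:8000")
```

The frontend pod never receives backend traffic because its app label does not match the selector.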

Demo time!

I have created a repository with a small example of a system that can be deployed in a Kubernetes cluster. You can run a local cluster using minikube, or if you are using Docker for Mac you can run a local cluster by enabling Kubernetes in the settings.

Assuming you have your cluster created and you have cloned the repository, you need to build the Docker images first:

$ docker build -t k8s-demo-frontend frontend
$ docker build -t k8s-demo-backend backend

Then you can open a new terminal and run the following command, which re-runs the Kubernetes CLI every second to list the pods, Services, and Deployments in the cluster:

$ watch -n 1 kubectl get pod,svc,deploy

In the repository there are a few manifest files that can be applied to the cluster with the following command:

$ kubectl apply -f .

In the other terminal, you should be able to see the list of resources created by Kubernetes. Since we have created a LoadBalancer, we should have an EXTERNAL-IP in the services list to access our application. If you are running the cluster on your local machine, you can visit the application at http://localhost:8080. If everything works correctly, you should be able to see a small Vue application that submits a form to a Rust server and displays a message.

If you want to keep playing with the demo, try to change the number of replicas and apply the manifest files again.

Final notes

The transition from the pet server to a Kubernetes cluster wasn't as smooth as described in this article, but it was worth it. We learned a lot during the process, and now we are never afraid to roll out new versions of our application. We stopped worrying about our infrastructure, and Kubernetes simplified our lives on so many levels that we can just focus on delivering value to the final user. Also, we can relax like this cat:

Here you can see a list of useful resources if you want to go deeper into the topic:

- https://kubernetes.io/

- https://github.com/ramitsurana/awesome-kubernetes

- https://labs.play-with-k8s.com/

- https://www.katacoda.com/courses/kubernetes

- https://cloud.google.com/kubernetes-engine/

Shameless plug

This post was heavily inspired by a lightning talk that I delivered in the meetup group Full Stack Vallès.

Do you also want a rock-solid infrastructure and not worry about scaling your servers? Drop us a line at hello@codegram.com and we'll be glad to help you!
