SPA with 4x100% lighthouse score — Part 3: Weather forecast app

Tomas Rezac

Posted on April 1, 2020

After the previous two parts of the series, we know why I chose Svelte and Sapper to reach our goal and how to build a super performant app with them. We also have a functional ‘Hello world’ app with the maximum Lighthouse score.

In this third part, I'll show you a "production quality" app I made as proof that anyone can build a nice-looking, functional app with great load performance. I won't explain line by line how the app was made. Instead, I'll share my experience of building it and dealing with all the restrictions I imposed on myself.

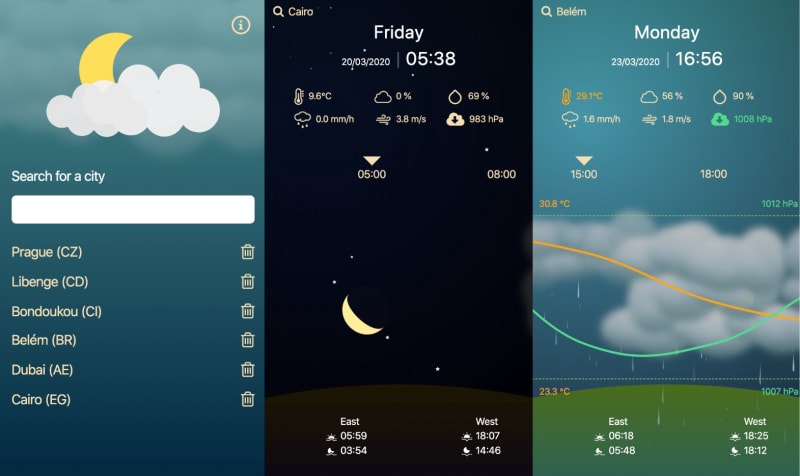

The app showcase

Let's check a few screens from the final app:

UPDATE 28th of April 2020: As the city search API was removed from the OpenWeather API, I temporarily provide a link to a specific city (Prague), and you cannot search for a city from the main page. (I'll try to fix it soon.)

You can try the app here or check its code on GitHub.

(Please don't use it as a regular app; it is limited to 60 requests per hour by the OpenWeatherMap API. The app is meant just as a demo.)

Here is what the app can do:

PWA — works offline with cached data

Is installable on phone as a web app

Search forecast by city + Remember searched cities

Each of six main weather parameters can be visualised as a chart

Shows forecast via animated scenery (generated clouds with different size, opacity and colour based on rain, cloudiness and sun angle)

Shows animated rain and snowfall, based on its intensity

Shows animated thunderstorms

Shows times of sun/moon rise/set and animates sun/moon accordingly

Shows sun/moon directions from East to West

Shows sun/moon angle above horizon, based on time and period of year

Shows phases of moon

The app is not overloaded with functionality, but it is more than one needs from one screen of an app.

App size limitation

The good news is that with Sapper each screen is lazy loaded. If you can reach the best Lighthouse score on every single page, then your app can be as big as your imagination. You can still prefetch any routes in advance, either once the processor is idle (you can leverage the window.requestIdleCallback() API) or after the user accepts an offer to do so. Asking the user to prefetch all routes makes sense when they are going to use your app offline.

The conclusion: the size of an app does not really matter, because every page is lazy loaded by default.
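To make the idle-time prefetching idea more concrete, here is a minimal sketch. The helper name `prefetchWhenIdle` and its parameters are made up for illustration; `prefetchRoute` stands for whatever function actually fetches a route, and the `setTimeout` fallback is an assumption for browsers without `requestIdleCallback`.

```javascript
// Hypothetical helper: prefetches routes one by one while the browser is idle.
// `prefetchRoute` is supplied by the caller; `scheduleIdle` is optional and
// mainly useful for testing.
function prefetchWhenIdle(routes, prefetchRoute, scheduleIdle) {
  const schedule =
    scheduleIdle ||
    (typeof requestIdleCallback === "function"
      ? (callback) => requestIdleCallback(callback)
      : (callback) => setTimeout(callback, 1)); // fallback, no deadline object
  const queue = [...routes];

  function work(deadline) {
    if (queue.length === 0) return;
    // Always prefetch at least one route, then continue only while
    // the browser reports idle time left in this period.
    do {
      prefetchRoute(queue.shift());
    } while (queue.length > 0 && deadline && deadline.timeRemaining() > 0);
    // Anything left over waits for the next idle period.
    if (queue.length > 0) schedule(work);
  }

  schedule(work);
}
```

In a Sapper app, `prefetchRoute` could presumably be the `prefetch` function exported by `@sapper/app`; the route list itself is up to you.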

My journey to 100% lighthouse SPA

You might think I just took the optimised 'Hello World' app from the last article and gradually turned it into the weather app without ever dropping below 100% in Lighthouse performance. Well, I did not. I even dropped to something like 50% for a moment. Let's go through the hiccups I had, one by one.

1) Requests chaining

Sapper was built with some ideas in mind. One of them is not to load the same things twice. In practice this means that if a component is used in several routes, it is bundled into a separate chunk. Pages are also not composed of just pre-rendered HTML and one JS file, but rather of two or more JS files: one for routing and the minimal Svelte API, and one for the main component. It makes sense: you don't want to load the same components or the Svelte and Sapper API again on every page, you want to serve them from the service worker. With HTTP/2, many small requests are actually good, as they can be downloaded and parsed in parallel. The only drawback comes into play when code in one file depends on code in a different file, because the browser discovers the second file only after it has fetched and parsed the first. Unfortunately, that is the case with Sapper builds.

After Lighthouse warned me about request chaining, I decided to get rid of it. Short of rewriting Sapper from scratch, there was only one solution: to modify Sapper just a little and let it generate <link href="/client/index.ae0f46b2.js" rel="modulepreload"> for every single JS file. rel=modulepreload tells the browser to start downloading and parsing a file before it is requested by the running code.

While I was at it, I also manually added links to the third-party API, <link href="//api.openweathermap.org" rel="preconnect"><link href="//api.openweathermap.org" rel="dns-prefetch">, to <svelte:head>. They let the browser resolve DNS (and, with preconnect, open the connection) before the first API call is made. All these little tweaks have a real impact on Time to Interactive.

If you are interested, there is a fork of Sapper on my GitHub with preload support. I changed what was needed, but was not 100% sure what exactly I was doing ;) so there is no PR to Sapper, sorry. To be honest, the Sapper source code would really benefit from some finishing touches; there are lines of dead code, some //TODOs, etc. Compared to the very well maintained Svelte code base, I had a feeling that no one cares much about Sapper. If you are good with Webpack or Rollup, I encourage you to have a look and do something for the Sapper community ;)
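As a rough illustration of the idea (not the actual code from the Sapper fork), a build step could turn the list of emitted JS files into modulepreload tags like this. The helper name and all file names except the one quoted above are invented for the example.

```javascript
// Hypothetical helper: builds one <link rel="modulepreload"> per JS file.
function modulePreloadLinks(files) {
  return files
    .filter((file) => file.endsWith(".js")) // only JS modules can be module-preloaded
    .map((file) => `<link href="${file}" rel="modulepreload">`)
    .join("\n");
}

const html = modulePreloadLinks([
  "/client/index.ae0f46b2.js", // file name quoted in the article
  "/client/chunk.example.js", // made-up file name
  "/client/main.example.css", // skipped: not a JS module
]);
console.log(html);
```

The resulting string would then be injected into the page's head, so the browser can start fetching every chunk right away instead of discovering them one after another.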

2) Main thread overloaded

Another Lighthouse warning told me that my main thread was too busy. It was time to use some other threads :) If you are not familiar with JavaScript threads, and Web Workers in particular, the important things to know are:

- A worker's code is executed in parallel with the code in the main thread.

- It lives in a separate file.

- Communication between the worker's code and your main thread is done mainly over the postMessage() API.

The postMessage API only lets you pass copies of data back and forth as messages and listen for replies as events, which is not very nice to work with.

Luckily, there is the 2kB Comlink library, which wraps this communication in a promise-based API. Moreover, it lets you call remote functions as if they were in the same thread. With Comlink, I moved all the calculations related to the position of the sun and moon and to the moon phases to separate threads. It was a perfect fit, as the main bottleneck of a Web Worker is the size of the data being transferred. You don't want to send pictures through it, because the serialisation and deserialisation would be very expensive. In my case, I just sent latitude, longitude and time to a worker, and it returned things like directions, angles and phases. Because these calculations are quite complex, I was able to take a meaningful amount of work off the main thread. With Comlink you can outsource even trivial tasks, as the overhead is minimal. Here is a little example:
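To show why working with postMessage directly gets tedious, here is a minimal sketch of a hand-rolled request/response helper. The `request` function and the `{ id, payload }` message shape are assumptions made up for this example; `port` is anything with postMessage and message events, such as a Worker.

```javascript
// Hypothetical helper: turns one postMessage round trip into a promise.
let nextId = 0;

function request(port, payload) {
  return new Promise((resolve) => {
    const id = nextId++;
    function onMessage(event) {
      if (event.data.id !== id) return; // reply belongs to another request
      port.removeEventListener("message", onMessage);
      resolve(event.data.result);
    }
    port.addEventListener("message", onMessage);
    // The message is copied to the other thread, not shared with it.
    port.postMessage({ id, payload });
  });
}

// The worker file would need a matching handler, roughly like this:
// self.onmessage = (event) => {
//   const { id, payload } = event.data;
//   self.postMessage({ id, result: payload.a + payload.b });
// };
```

Every new operation needs its own message format on both sides, and matching replies to requests is left to you.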

worker.js

    import * as Comlink from "comlink";

    const workerFunctions = {
      factorial(n) {
        if (n === 0) {
          return 1;
        } else {
          return n * this.factorial(n - 1);
        }
      }
    };

    Comlink.expose(workerFunctions);

main.js

    import * as Comlink from "comlink";

    const workerFunctions = Comlink.wrap(new Worker("worker.js"));

    workerFunctions.factorial(50).then(console.log); // 3.0414093201713376e+64

3) Below the fold

The most significant drop in performance was caused by my cloud generator. I started with a naive implementation. I took all 40 records of the weather forecast for the next 5 days and, for each of them, if it was rainy, I generated a cloud via Canvas. Generating 40 clouds is time and memory consuming, which is nothing one can afford when aiming for best-in-class performance. I needed to get rid of the computations related to content below the fold. So I implemented ‘infinite scroll’ with on-demand cloud generation. As you scroll further, new clouds are generated. To avoid generating the same clouds twice (when you scroll back), I used a powerful functional technique called memoization.

By creating a closure, it simply adds caching to any pure function you want. If you later call a memoized function with the same arguments, it skips the computation and gives you the result from the cache. In my case, it gave me yet another advantage. My clouds are actually partly random (the generator function is not pure, oops :0 ), and I don't want to see different clouds at the same scroll positions when I scroll back. Memoization ensured that a cloud is randomised only on the first call; the second time I get it from the cache :)

Let's look at a simple memoization function:

    function memoize(func) {
      const cache = {};
      return function memoized(...args) {
        const key = JSON.stringify(args);
        if (key in cache) return cache[key];
        return (cache[key] = func(...args));
      };
    }

Here is an example of how to use it:

    function addOne(x) {
      return x + 1;
    }

    const memoizedAddOne = memoize(addOne);

    memoizedAddOne(1); // value computed => 2
    memoizedAddOne(1); // value served from cache => 2

It makes sense to use this technique for any pure function that is often called with the same arguments. You shouldn't use it where there are thousands of calls with different arguments, as the ever-growing cache object would consume a lot of memory.
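The random cloud case mentioned earlier can be sketched the same way. The `generateCloud` function below is a made-up stand-in for the real generator, and the block repeats the memoize helper so it runs on its own.

```javascript
// Same memoize helper as above, repeated so this snippet is self-contained.
function memoize(func) {
  const cache = {};
  return function memoized(...args) {
    const key = JSON.stringify(args);
    if (key in cache) return cache[key];
    return (cache[key] = func(...args));
  };
}

// Hypothetical, deliberately impure generator: each call returns random values.
function generateCloud(index) {
  return { index, width: 50 + Math.random() * 100, opacity: Math.random() };
}

const getCloud = memoize(generateCloud);

const first = getCloud(7); // randomised on the first call
const again = getCloud(7); // served from the cache
console.log(first === again); // true
```

Because the cached object is returned as is, scrolling back to the same position would show exactly the same cloud.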

4) Lazy loaded functionality

If we can avoid loading any content or code into the browser, we should. Besides lazy-loaded pages, we can use IntersectionObserver to lazy load images as the user scrolls down a page. These are widely used techniques, which should be applied where possible. Moreover, there is out-of-the-box support for lazy-loaded code in recent versions of bundlers like Webpack or Rollup. It is called dynamic import, and it gives you the power to import code on demand from inside functions.

I used dynamic imports to load the charting functionality only once it is requested by the user. You can see it in my app. Only after you click on one of the 6 icons is the code responsible for drawing SVG paths downloaded and executed.

In Rollup the syntax is very straightforward:
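For the image part, a minimal IntersectionObserver sketch could look like the following. It assumes each image keeps its real URL in a `data-src` attribute, which is a common convention but not something taken from the weather app; the helper name and the 200px margin are also arbitrary choices.

```javascript
// Hypothetical helper: sets img.src from data-src once the image nears the viewport.
// The Observer parameter exists only so the constructor can be swapped in tests.
function lazyLoadImages(images, Observer = IntersectionObserver) {
  const observer = new Observer(
    (entries) => {
      for (const entry of entries) {
        if (!entry.isIntersecting) continue;
        const img = entry.target;
        img.src = img.dataset.src; // triggers the actual download
        observer.unobserve(img); // each image only needs to load once
      }
    },
    { rootMargin: "200px" } // start loading shortly before the image is visible
  );
  images.forEach((img) => observer.observe(img));
  return observer;
}

// In a browser:
// lazyLoadImages(document.querySelectorAll("img[data-src]"));
```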

    async function showStats(event) {
      const smoother = await import("../../helpers/smooth-curve.js");
      smoother.getPath();
      …

Final Results

I am happy to say that the weather app got 4x100% in the Lighthouse audit. It is an SPA and a PWA, installable on phones, with some limited support for offline usage.

Conclusion

As you can see, modern tools and libraries like Rollup and Comlink make a lazy-loaded and performant app architecture easy. I would say there is no excuse not to use similar techniques in web apps and JS-heavy pages, especially those aimed at the general public.

I hope that the app I made is a good enough example of what can be done in the field of load performance. I am aware of the poor animation performance of the app on slower machines, and I know that too many animations triggered by the scroll event are a no-go. But this app was never meant as something anyone should use in daily life. It was just satisfying for me to add more and more animations to it and make it feel like a real-world experience, rather than presenting some boring numbers.

The animation performance could be improved by using OffscreenCanvas inside a web worker, but as it's not supported by all current browsers, I decided not to use it. Maybe one day I'll return to this series and make the animations run at 60 fps, who knows.

I hope you enjoyed the series and learned something new.

In case you haven't checked it yet, here is the weather app

Aloha!
