Serverless WordPress architecture on AWS

Carl Alexander

Posted on August 17, 2021

Serverless PHP is an exciting new technology that has the potential to remove a lot of the burden of hosting PHP applications. One type of application that has the most to gain from serverless PHP is WordPress. Serverless PHP eases the burden of scaling WordPress while offering the same performance benefits that you'd get with a top-tier host.

To understand how serverless PHP works with WordPress, we'll look at the current state of the modern WordPress server architecture. This architecture has evolved a lot over the last decade. (You can read more about it here.) Gone are the days of just hosting a WordPress site with an Apache server! There's a lot more to hosting a WordPress site now.

The good news is that hosting a serverless WordPress site looks a lot like it does with a modern WordPress server stack. The big change is that you're going to replace a lot of the architecture components with services from a cloud provider.

Now, the services that you'll use and how they fit together will vary from one cloud provider to another. With serverless PHP, the most popular cloud provider is AWS. That's why we'll focus on serverless WordPress architecture on AWS.

It's also worth noting that this is the same architecture that you'd get using Ymir. The only difference is that Ymir takes care of managing it all for you. (That's also why it's a DevOps platform.) But if you don't mind putting all the pieces together yourself or with the help of another tool (such as the serverless framework), this article will help you achieve that.

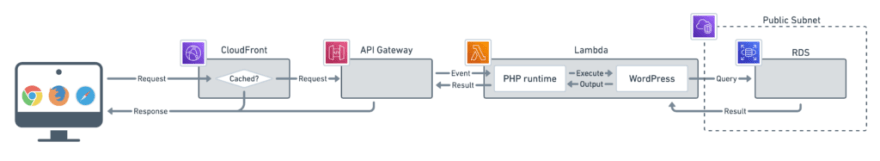

The modern WordPress server architecture

Let's begin with the modern WordPress server architecture. What does it look like? Below is a diagram that shows the big picture of what's going on when a browser makes a request for a WordPress page.

Caching before PHP

First, the HTTP request your browser makes is going to hit an HTTP cache. This HTTP cache could be a combination of a content delivery network (also known as a CDN) like Cloudflare, a dedicated server like Varnish or a page caching plugin. But whatever you're using, the goal is the same.

You want to cache WordPress responses.

That's because PHP (and by extension WordPress) is a lot slower than your HTTP cache. So you want your HTTP responses to come from the HTTP cache as much as possible. If the HTTP cache can't respond to your request, then it'll forward the request to WordPress.

Caching on the PHP side

Once you hit PHP, things are a bit more straightforward. The higher the PHP version, the faster WordPress runs. That's why you always try to use the highest PHP version that WordPress supports.

The last point of optimization comes when WordPress makes queries to the MySQL database. These queries often account for most of the slowness that WordPress will have responding to your request. To make WordPress faster, you want to reduce the number of queries WordPress makes as much as possible.

You can achieve this by using an object cache plugin. An object cache plugin stores the results of a MySQL query in a high performance persistent cache. (Usually Memcached or Redis.) It then checks the cache before performing a query and returns the result if it was present. (Much like the HTTP cache does for our HTTP requests.)
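The check-the-cache-first flow described above can be sketched in a few lines. This is only an illustration in Python, not how any particular object cache plugin is written: `ObjectCache` is an in-memory stand-in for Memcached or Redis, and `cached_query` and `fake_mysql` are hypothetical names invented for the example.

```python
import hashlib
from typing import Any, Callable, Dict, List


class ObjectCache:
    """In-memory stand-in for a persistent cache such as Memcached or Redis."""

    def __init__(self) -> None:
        self._store: Dict[str, Any] = {}

    def get(self, key: str) -> Any:
        # Returns None when the key is absent.
        return self._store.get(key)

    def set(self, key: str, value: Any) -> None:
        self._store[key] = value


def cached_query(cache: ObjectCache, sql: str, run_query: Callable[[str], Any]) -> Any:
    """Return the cached result for a query, hitting the database only on a miss."""
    key = "query:" + hashlib.sha256(sql.encode("utf-8")).hexdigest()
    cached = cache.get(key)
    if cached is not None:
        return cached          # cache hit: no database round trip
    rows = run_query(sql)      # cache miss: run the real query
    cache.set(key, rows)
    return rows


# Hypothetical database function that records every query it actually runs.
executed: List[str] = []


def fake_mysql(sql: str) -> List[Dict[str, Any]]:
    executed.append(sql)
    return [{"ID": 1, "post_title": "Hello world!"}]


cache = ObjectCache()
sql = "SELECT ID, post_title FROM wp_posts WHERE ID = 1"
first = cached_query(cache, sql, fake_mysql)
second = cached_query(cache, sql, fake_mysql)  # served from the cache
```

Calling `cached_query` twice with the same SQL only reaches the database once. A real object cache also has to expire and invalidate entries when content changes, which this sketch leaves out.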

This drastically reduces the time that WordPress takes to respond to a request. Especially if your WordPress project has plugins that make a lot of queries such as with WooCommerce. That's why it's an essential component of the modern WordPress server architecture.

Serverless WordPress architecture

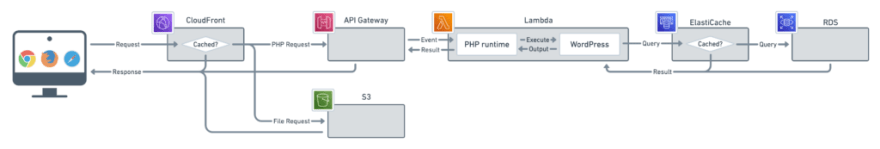

Now that we've gone over the modern WordPress server architecture, let's look at how it translates to serverless. The reason we went over it is that the essential components stay the same. The difference is that all these components are going to be handled by specific AWS services.

HTTP cache using CloudFront

So let's go back to our browser. The first thing that your HTTP request is going to hit is CloudFront. CloudFront is the AWS content delivery network. You can use it to do a lot of different things for your serverless WordPress site.

Most WordPress sites only use CloudFront (or any other CDN) to cache assets like CSS/JS files and media files. But you can also use it as an HTTP cache. This is where the real performance gains happen.

Using CloudFront as an HTTP cache will significantly reduce the response time of your WordPress site. And because CloudFront is also a content delivery network, this reduction applies to all visitors regardless of where they are in the world. This is a big difference compared to most WordPress HTTP cache solutions, which aren't globally distributed.

If you'd rather not use CloudFront to do page caching, you can also configure it to just cache CSS/JS files and media files. You're also free to not use CloudFront and either use another content delivery network or none. But beware that performance won't be nearly as good without a content delivery network.

On the way to Lambda: API Gateway or Elastic Load Balancing

If CloudFront can't respond to a request, it'll forward the request to your serverless PHP application hosted on AWS Lambda. Now, much like a regular PHP application, a serverless PHP application isn't exposed to the internet. You need to use another service to act as an intermediary between the two.

With a regular PHP application, this would be the role of your web server. But with serverless PHP, there's no web server per se. Instead, you need to use one of two services to communicate with AWS Lambda. These are API Gateway or Elastic Load Balancing.

In general, you'll want to use an API gateway. (That's why it's the one shown on the diagram.) You should only use a load balancer when you're dealing with hundreds of millions of requests per month. (For example, serving a billion requests per month with a load balancer instead of an API gateway will save you several thousand dollars per month.) But most WordPress sites don't get that much traffic, so using an API gateway is the cheaper option.

API gateway types

AWS also has two different API gateways: HTTP API and REST API. You can use either of them. That said, there are some important differences between the two.

First, there's the question of cost. The REST API costs more than the HTTP API. That's because the REST API has more features than the HTTP API.

What are these extra features? Well, it offers http-to-https redirection. But this isn't necessary if you use CloudFront to do page caching. It'll handle the http-to-https redirection.

It also offers edge optimized caching. But this is also something that CloudFront handles for us. In fact, you can't use both REST API edge optimized caching and CloudFront at the same time.

A REST API also supports wildcard subdomains. This is only important if you want to host a subdomain multisite installation. Otherwise, you don't need it either. (You can also work around the HTTP API limitation with wildcard subdomains by using CloudFront functions.)

This is all to show you that most of the time you don't need the additional REST API features. So you're better off paying less and using the HTTP API instead.

Lambda

Once the request reaches either an API gateway or a load balancer, it gets converted to an event. This event triggers the Lambda function to run.

This Lambda function has two components. The first is the PHP runtime, which converts the event to a PHP FastCGI request. The other is your WordPress project, which is what the FastCGI request will run.
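To make the event-to-FastCGI conversion more concrete, here's a rough sketch of what that translation might look like. It's written in Python for illustration and isn't how Bref or Ymir implement it. It assumes an event in the API Gateway HTTP API payload format 2.0, and the `event_to_fastcgi` function, the sample event values and the `/var/task/index.php` script path are all assumptions made for the example.

```python
import base64
from typing import Any, Dict, Tuple


def event_to_fastcgi(event: Dict[str, Any], script_filename: str) -> Tuple[Dict[str, str], bytes]:
    """Translate an HTTP API (payload v2.0) event into FastCGI params and a request body."""
    http = event["requestContext"]["http"]

    # API Gateway base64-encodes binary bodies and flags them with isBase64Encoded.
    raw_body = event.get("body") or ""
    if event.get("isBase64Encoded"):
        body = base64.b64decode(raw_body)
    else:
        body = raw_body.encode("utf-8")

    path = event.get("rawPath", "/")
    query = event.get("rawQueryString", "")

    params = {
        "REQUEST_METHOD": http["method"],
        "REQUEST_URI": path + ("?" + query if query else ""),
        "QUERY_STRING": query,
        "SCRIPT_FILENAME": script_filename,
        "SERVER_PROTOCOL": http.get("protocol", "HTTP/1.1"),
        "CONTENT_LENGTH": str(len(body)),
    }

    # CGI convention: headers become HTTP_* variables, except the content headers.
    for name, value in event.get("headers", {}).items():
        key = name.upper().replace("-", "_")
        if key == "CONTENT_TYPE":
            params["CONTENT_TYPE"] = value
        elif key != "CONTENT_LENGTH":
            params["HTTP_" + key] = value

    return params, body


# Hypothetical event, trimmed to the fields the sketch reads.
sample_event = {
    "version": "2.0",
    "rawPath": "/wp-login.php",
    "rawQueryString": "action=lostpassword",
    "headers": {
        "host": "example.com",
        "content-type": "application/x-www-form-urlencoded",
    },
    "requestContext": {"http": {"method": "POST", "protocol": "HTTP/1.1"}},
    "body": base64.b64encode(b"user_login=admin").decode("ascii"),
    "isBase64Encoded": True,
}

params, body = event_to_fastcgi(sample_event, "/var/task/index.php")
```

The resulting `params` and `body` are what a runtime would hand to PHP over FastCGI. A real runtime also has to translate the PHP response back into the format that API Gateway or the load balancer expects.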

Now, you can create your own PHP runtime, but it's probably better to use an existing one. Bref is a popular open source PHP runtime for AWS Lambda, so it's a good choice. Otherwise, Ymir's PHP runtime is also open source.

ElastiCache

If you're using an object cache, you'll need a Memcached or Redis cache. ElastiCache is the AWS service that manages those. So you'll need to create one if you want to use a persistent object cache.

If you do decide to use one, be aware that you'll need to put your Lambda function on a private subnet to access it. This, in turn, will require a NAT gateway. You can also replace the NAT gateway with a specific EC2 instance that acts as the gateway. (You can read about how to do it here.)

VPC

This is a good time to bring up the virtual private cloud also known as VPC. AWS requires that certain resources such as your cache and database (which we'll see next!) be connected to a VPC. Some have to be on a private subnet (network without access to the internet) while others can also be on a public subnet (network with access to the internet).

If you don't use ElastiCache, your interactions with a VPC are minimal. You'll just need a public subnet for the RDS database. Here's an updated diagram showing the VPC without ElastiCache.

Things are more complicated if you need to use private resources like ElastiCache. This is where you need to add the NAT gateway discussed earlier. But you also need to change certain resources, such as your Lambda function, to use the private subnet. (By default, Lambda functions don't need a subnet.)

You'll also need a bastion host to connect to the resources. A bastion host is an EC2 instance that acts as a bridge to the private subnet. For example, a bastion host is necessary if you want to connect to your database server if it's on the private subnet.

Above is a diagram showing the same architecture with ElastiCache, NAT gateway, bastion host and the private subnet. As you can see, there are more moving parts. It's also more complicated in terms of VPC configuration.

RDS

The last component of our serverless WordPress architecture is the database. RDS is the service that manages relational databases like MySQL and MariaDB.

If you use a public subnet, your database is going to be publicly accessible. This isn’t much of a security problem as long as you don’t share the database server address. But this makes the use of a strong password when creating it even more important.

That said, if it’s on a private subnet, you should set a strong password anyway! But you don’t have to worry about the database being publicly accessible. However, like we mentioned with the VPC, you’ll need to create a bastion host to connect to it.

What about media uploads?

One thing we haven't talked about yet is uploads. What happens to uploads when you don't have a server? Well, that part is a lot more complicated than what we've seen so far!

Above is an updated diagram showing you what our serverless WordPress architecture looks like with media uploads. Things start off the same with requests going through CloudFront. Once the request hits CloudFront, it'll decide whether this is a serverless PHP request or a request for a media file. (Not just an uploaded file.)

If it's a media file, the request instead goes to S3, which is the most well-known AWS service. It's a file storage service that lets you store files in the cloud inexpensively. There are a lot of WordPress plugins that let you upload files to S3.
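The routing decision that CloudFront makes can be pictured as matching the request path against an ordered list of path patterns, with a default origin for everything else. The sketch below is a Python illustration of that idea, not a CloudFront configuration. The `/uploads/*` and `/assets/*` patterns and the origin names are hypothetical, and `fnmatchcase` accepts a slightly richer pattern syntax than CloudFront does.

```python
from fnmatch import fnmatchcase
from typing import List, Tuple

# Hypothetical path patterns, checked in order. The first match wins.
BEHAVIORS: List[Tuple[str, str]] = [
    ("/uploads/*", "s3"),  # uploaded media files
    ("/assets/*", "s3"),   # other static files such as CSS/JS
]

# Anything that doesn't match a pattern goes to the serverless PHP application.
DEFAULT_ORIGIN = "lambda"


def pick_origin(path: str) -> str:
    """Return the origin that should serve a request path."""
    for pattern, origin in BEHAVIORS:
        if fnmatchcase(path, pattern):
            return origin
    return DEFAULT_ORIGIN


media_origin = pick_origin("/uploads/2021/08/photo.jpg")
page_origin = pick_origin("/hello-world/")
```

Here `media_origin` resolves to the S3 origin while `page_origin` falls through to Lambda. The patterns you'd actually use depend on how your project lays out its files.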

How most S3 plugins work

That said, there are some notable differences when you use S3 with serverless WordPress compared to a plugin. The biggest one is how you send your WordPress media files to S3. With a plugin, it looks like this:

You upload a media file to your WordPress server like you would normally without a plugin. The plugin itself takes care to upload the file to S3 once it's on the server. Most of the time, this happens in the background using a WordPress cron.

How do file uploads work with S3 and serverless WordPress?

With serverless, you can't do that because there's no server to upload the file to first. Instead, you need to upload it to S3 right away. The proper way to do that is to use a signed URL.

A signed URL is a temporary URL that you can use to perform an operation on S3. So what you need to do is contact S3 and it'll create a signed URL. You then use this signed URL to upload the file to S3 without compromising your security.
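If the idea of a signed URL is new to you, the toy example below shows the principle in Python. It is not the Signature Version 4 scheme that S3 actually uses, and in practice you'd ask an AWS SDK to generate the URL for you (for example, boto3's `generate_presigned_url`). Everything here is an assumption made for illustration: the secret key, the `uploads.example.com` domain and the function names.

```python
import hashlib
import hmac
from urllib.parse import parse_qs, urlencode, urlparse

# Hypothetical secret that only the server knows. It's never sent to the browser.
SECRET_KEY = b"server-side-secret"


def _signature(method: str, path: str, expires: int) -> str:
    """Sign the operation (method and path) together with its expiry time."""
    message = f"{method}\n{path}\n{expires}".encode("utf-8")
    return hmac.new(SECRET_KEY, message, hashlib.sha256).hexdigest()


def create_signed_url(base_url: str, path: str, method: str, now: int, ttl: int = 300) -> str:
    """Build a URL that authorizes one operation on one path until it expires."""
    expires = now + ttl
    query = urlencode({"expires": expires, "signature": _signature(method, path, expires)})
    return f"{base_url}{path}?{query}"


def verify_signed_url(url: str, method: str, now: int) -> bool:
    """Check what the storage service would check before accepting the request."""
    parsed = urlparse(url)
    query = parse_qs(parsed.query)
    try:
        expires = int(query["expires"][0])
        signature = query["signature"][0]
    except (KeyError, ValueError):
        return False
    if now > expires:
        return False  # the URL was only valid temporarily
    expected = _signature(method, parsed.path, expires)
    return hmac.compare_digest(signature, expected)


# The server creates the URL at time 1000. The browser then uploads with PUT.
url = create_signed_url("https://uploads.example.com", "/uploads/photo.jpg", "PUT", now=1_000)
accepted = verify_signed_url(url, "PUT", now=1_100)
expired = verify_signed_url(url, "PUT", now=2_000)
```

The browser never sees the secret. It only gets a URL that works for one operation on one file for a few minutes, which is why handing it out doesn't compromise your security.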

This is a lot harder to do in practice since WordPress doesn't make it easy to take over this process. You also don't have direct access to the files on the server to create the different file sizes either. So you need to develop a way to create those as well.

This is by far the most complicated aspect of the serverless WordPress architecture. It's not really because of anything specific with AWS. It's just because WordPress is an old piece of software. Its creators designed it assuming you'd always have a server to upload files to. (The same goes for most S3 upload plugins as well.) There's no simple way to tell it you don't want to do it.

That said, WordPress is very extensible. You can write a plugin (like the Ymir one) that can hijack the WordPress media upload process. (Another plugin that supports direct upload is Media Cloud.) That's what's necessary for it to work with serverless.

Different, but also familiar

As you can see, a serverless WordPress installation isn't quite the same as a modern WordPress server architecture. But it's grounded in the same fundamentals. It's just that you have to rely on services from cloud providers like AWS.

But that's also what a lot of hosts already do. They rely on services like a content delivery network (CloudFront), managed databases (RDS) and managed Redis caches (ElastiCache). So there are some similar elements between the two.

But as the issue with media uploads highlighted, a lot of the architectural challenges with serverless come from WordPress itself. It's not that it can't run on Lambda. It's that some of its inner workings assume there will always be a server present.

That said, overcoming these issues can be worthwhile. This is especially true if you need to scale WordPress or, even more so, WooCommerce. Serverless offers unparalleled scaling potential. That's also why it's the ideal platform for WooCommerce.

