Introduction to Analyzer in Elasticsearch

Brilian Firdaus

Posted on November 22, 2020

If we want to create a good search engine with Elasticsearch, knowing how an Analyzer works is a must. A good search engine is one that returns relevant results: when a user queries something in our search engine, we need to return the documents relevant to that query.

One component we can tune so that Elasticsearch returns relevant documents is the Analyzer. The Analyzer is responsible for processing the text we want to index, and it is one of the components that control which documents are more relevant when querying.

A bit about Inverted Index

Since the Analyzer is tightly coupled to the Inverted Index, we first need to understand what an Inverted Index is.

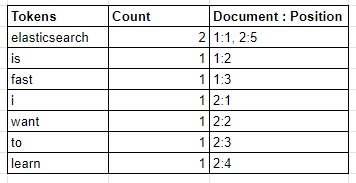

An Inverted Index is a data structure that stores a mapping from each token to the identifiers of the documents that contain it. Besides document identifiers, the Inverted Index also stores the token's positions within those documents. Because Elasticsearch maps tokens to document identifiers, when you query Elasticsearch it can quickly find the documents you want and return them.

Indexing documents into Inverted Index

Let’s say that we want to index 2 documents:

Document 1: Elasticsearch is fast

Document 2: I want to learn Elasticsearch

Let’s take a peek into the Inverted Index and see the result of the Analysis and Indexing process:

As you can see, the terms are mapped to the identifiers of the documents that contain them and to their positions within those documents. We don’t see the full documents "Elasticsearch is fast" or "I want to learn Elasticsearch" because they go through the Analysis process, which is the main topic of this article.
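To make the structure concrete, here is a minimal Python sketch that builds a positional inverted index for the two example documents. It assumes a deliberately simplified analyzer that only lowercases and splits on whitespace. `tokenize` and `build_inverted_index` are hypothetical helpers, not Elasticsearch APIs.

```python
from collections import defaultdict


def tokenize(text):
    # Hypothetical stand-in for an analyzer: lowercase, then split on whitespace.
    return text.lower().split()


def build_inverted_index(docs):
    """Map each token to {doc_id: [positions of the token in that doc]}."""
    index = defaultdict(dict)
    for doc_id, text in docs.items():
        for position, token in enumerate(tokenize(text)):
            index[token].setdefault(doc_id, []).append(position)
    return dict(index)


docs = {
    1: "Elasticsearch is fast",
    2: "I want to learn Elasticsearch",
}
index = build_inverted_index(docs)

for token, postings in sorted(index.items()):
    print(token, postings)
```

In this sketch the token `elasticsearch` maps to `{1: [0], 2: [4]}`. It appears at position 0 in document 1 and position 4 in document 2, and only the lowercased form is stored.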

Querying into Inverted Index

There is one thing to note about querying the Inverted Index: Elasticsearch only returns documents that contain exactly the same term as the one queried.

We can easily test this with two types of Elasticsearch query, the Match Query and the Term Query. Basically, the Match Query goes through the Analysis process while the Term Query doesn’t. If you’re interested in the difference between them, you can read my other article, “Elasticsearch: Text vs. Keyword”.

If you run a Term Query for Elasticsearch (with an uppercase E) against the index in the example above, you won’t get any results. This happens because the token in the Inverted Index is elasticsearch, with a lowercase e. When you try the same with a Match Query, Elasticsearch first analyzes the query into elasticsearch and then searches the Inverted Index, so the query returns results.
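The difference can be simulated in a few lines. This is a simplified sketch, not Elasticsearch's implementation. It assumes the same lowercase-and-split analyzer as before, and `term_query` and `match_query` are hypothetical functions. The term lookup skips analysis, while the match lookup analyzes the query text first.

```python
from collections import defaultdict


def analyze(text):
    # Simplified analyzer (assumption): lowercase, then split on whitespace.
    return text.lower().split()


def build_index(docs):
    """Map each token to the set of document ids containing it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for token in analyze(text):
            index[token].add(doc_id)
    return index


def term_query(index, term):
    # Term Query: the term is looked up exactly as given, without analysis.
    return sorted(index.get(term, set()))


def match_query(index, text):
    # Match Query: analyze the query text first, then look up each token
    # (a document matches if any token matches, like the default OR operator).
    hits = set()
    for token in analyze(text):
        hits |= index.get(token, set())
    return sorted(hits)


index = build_index({1: "Elasticsearch is fast", 2: "I want to learn Elasticsearch"})
print(term_query(index, "Elasticsearch"))   # []
print(match_query(index, "Elasticsearch"))  # [1, 2]
```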

What is Analyzer in Elasticsearch?

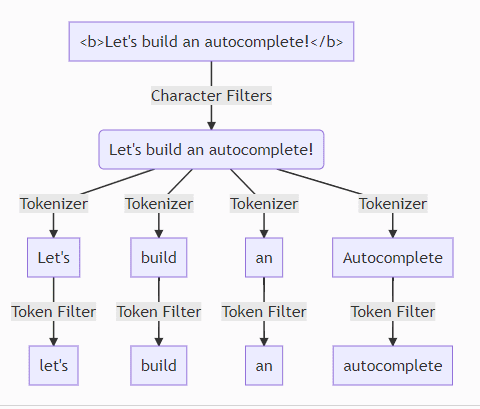

When we insert a text document into Elasticsearch, Elasticsearch doesn’t save the text as it is. The text goes through an Analysis process performed by an Analyzer. In the Analysis process, an Analyzer first transforms and splits the text into tokens before saving them to the Inverted Index.

For example, indexing “Let’s build an Autocomplete!” with the standard analyzer transforms the text into 4 terms: let’s, build, an, and autocomplete.

The analyzer affects how we search the text, but it doesn’t change the content of the text itself. With the previous example, if we search for build, Elasticsearch still returns the full text “Let’s build an Autocomplete!” instead of only build.
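The sketch below shows why the original text survives. The original document is stored untouched, much like Elasticsearch’s `_source`, and only the analyzed tokens go into the index. The punctuation-trimming analyzer is a rough assumption, and `index_document` and `search` are hypothetical helpers.

```python
def analyze(text):
    # Rough approximation (assumption): lowercase, split on whitespace,
    # and trim surrounding punctuation such as "!".
    return [token.strip("!?.,") for token in text.lower().split()]


source = {}  # doc_id -> original text, kept untouched (like _source)
index = {}   # token -> set of doc_ids, built from the analyzed text


def index_document(doc_id, text):
    source[doc_id] = text
    for token in analyze(text):
        index.setdefault(token, set()).add(doc_id)


def search(query):
    hits = set()
    for token in analyze(query):
        hits |= index.get(token, set())
    # Return the original documents, not the tokens that matched.
    return [source[doc_id] for doc_id in sorted(hits)]


index_document(1, "Let's build an Autocomplete!")
print(search("build"))  # ["Let's build an Autocomplete!"]
```

Searching for `let` returns nothing in this sketch, because the indexed token is `let's` and not `let`.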

Elasticsearch’s Analyze API

Elasticsearch provides a very convenient API, the Analyze API, that we can use to test and visualize analyzers:

Request

```bash
curl --request GET \
  --url http://localhost:9200/_analyze \
  --header 'Content-Type: application/json' \
  --data '{
    "analyzer": "standard",
    "text": "Let'\''s build an autocomplete!"
  }'
```

Response

```json
{
  "tokens": [
    {
      "token": "let's",
      "start_offset": 0,
      "end_offset": 5,
      "type": "<ALPHANUM>",
      "position": 0
    },
    {
      "token": "build",
      "start_offset": 6,
      "end_offset": 11,
      "type": "<ALPHANUM>",
      "position": 1
    },
    {
      "token": "an",
      "start_offset": 12,
      "end_offset": 14,
      "type": "<ALPHANUM>",
      "position": 2
    },
    {
      "token": "autocomplete",
      "start_offset": 15,
      "end_offset": 27,
      "type": "<ALPHANUM>",
      "position": 3
    }
  ]
}
```
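To call the same API from code, here is a minimal Python sketch using only the standard library. It assumes an unsecured cluster at `http://localhost:9200`, the same one the curl examples use. `analyze` and `token_strings` are hypothetical helper names. Only the response parsing is exercised below, on the response shown above.

```python
import json
import urllib.request


def analyze(text, analyzer="standard", base_url="http://localhost:9200"):
    """POST to the _analyze API and return the parsed JSON response.

    Assumes an unsecured cluster at base_url; add authentication for a
    secured cluster.
    """
    body = json.dumps({"analyzer": analyzer, "text": text}).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/_analyze",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def token_strings(analyze_response):
    """Extract just the token strings from an _analyze response."""
    return [entry["token"] for entry in analyze_response["tokens"]]


# The response shown above, trimmed to the fields we need here.
sample_response = {
    "tokens": [
        {"token": "let's", "start_offset": 0, "end_offset": 5, "position": 0},
        {"token": "build", "start_offset": 6, "end_offset": 11, "position": 1},
        {"token": "an", "start_offset": 12, "end_offset": 14, "position": 2},
        {"token": "autocomplete", "start_offset": 15, "end_offset": 27, "position": 3},
    ]
}
print(token_strings(sample_response))  # ["let's", 'build', 'an', 'autocomplete']
```

Against a running cluster, `token_strings(analyze("Let's build an autocomplete!"))` should give the same list.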

Elasticsearch Analyzer Components

Elasticsearch’s Analyzer has three components you can modify depending on your use case:

- Character Filters

- Tokenizer

- Token Filter
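Conceptually, these three stages compose in a fixed order: every character filter, then exactly one tokenizer, then every token filter. The Python sketch below models that pipeline with plain functions. `make_analyzer` is a hypothetical helper, and the emoji mapping and lowercase step are illustrative stand-ins for the real components.

```python
from typing import Callable, List

CharFilter = Callable[[str], str]
Tokenizer = Callable[[str], List[str]]
TokenFilter = Callable[[List[str]], List[str]]


def make_analyzer(char_filters: List[CharFilter],
                  tokenizer: Tokenizer,
                  token_filters: List[TokenFilter]) -> Callable[[str], List[str]]:
    def analyze(text: str) -> List[str]:
        for char_filter in char_filters:    # 1. character filters, in order
            text = char_filter(text)
        tokens = tokenizer(text)             # 2. exactly one tokenizer
        for token_filter in token_filters:   # 3. token filters, in order
            tokens = token_filter(tokens)
        return tokens
    return analyze


analyzer = make_analyzer(
    char_filters=[lambda s: s.replace(":)", " smile ")],
    tokenizer=str.split,
    token_filters=[lambda ts: [t.lower() for t in ts]],
)
print(analyzer("Elasticsearch is fun :)"))  # ['elasticsearch', 'is', 'fun', 'smile']
```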

Character Filters

The first step of the Analysis process is Character Filtering, which removes, adds, or replaces characters in the text.

There are three built-in Character Filters in Elasticsearch:

- HTML Strip Character Filter: Strips out HTML elements like `<b>`, `<i>`, `<div>`, and `<br />`, and decodes HTML entities.

- Mapping Character Filter: Lets you map a string to another string. For example, if you want users to be able to search for an emoji, you can map “:)” to “smile”.

- Pattern Replace Character Filter: Replaces text matching a regular expression with a replacement string. Be careful, though: the Pattern Replace Character Filter can slow down your document indexing, especially with a poorly written regular expression.
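Here is a rough Python sketch of what each of the three character filters does to the raw text before tokenization. It is an approximation only. The regex-based `html_strip` handles far fewer cases than the real filter, and all three function names are hypothetical. The digit example mirrors a typical pattern-replace use.

```python
import re


def html_strip(text):
    # Very rough stand-in for html_strip (assumption): remove anything that
    # looks like a tag, then decode a few common entities. The real filter
    # handles many more cases.
    text = re.sub(r"<[^>]+>", "", text)
    # Decode &amp; last so "&amp;lt;" becomes "&lt;", not "<".
    return text.replace("&lt;", "<").replace("&gt;", ">").replace("&amp;", "&")


def mapping_filter(mappings):
    # Replace each key with its value (the real filter prefers the longest match).
    def apply(text):
        for key, value in mappings.items():
            text = text.replace(key, value)
        return text
    return apply


def pattern_replace_filter(pattern, replacement):
    # Replace every regex match with the replacement string.
    compiled = re.compile(pattern)
    return lambda text: compiled.sub(replacement, text)


emoji = mapping_filter({":)": "smile"})
digits = pattern_replace_filter(r"(\d+)-(?=\d)", r"\1_")

print(html_strip("<b>Let's build</b> &amp; search"))  # Let's build & search
print(emoji("I am happy :)"))                        # I am happy smile
print(digits("123-456-789"))                         # 123_456_789
```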

Tokenizer

After the Character Filtering process, our text proceeds to Tokenization by a Tokenizer. Tokenization splits your text into tokens. For example, we previously transformed “Let’s build an autocomplete” into the terms let’s, build, an, and autocomplete. Splitting the text into those 4 tokens is done by the Tokenizer.

Elasticsearch has too many Tokenizers to cover in this article. If you’re interested, you can find the full list in the Elasticsearch documentation.

Some of the most commonly used Tokenizers are:

- Standard Tokenizer: Elasticsearch’s default Tokenizer. It splits the text on word boundaries, which roughly means white space and punctuation.

- Whitespace Tokenizer: A Tokenizer that splits the text on white space only.

- Edge N-Gram Tokenizer: Really useful for creating an autocomplete. It splits your text into words (on the characters you configure) and then emits the leading characters of each word, e.g. `Hello` -> `H`, `He`, `Hel`, `Hell`, `Hello` (given a large enough `max_gram`).
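The two non-default tokenizers above can be approximated in a few lines of Python. This is a sketch with hypothetical function names. The edge n-gram version assumes that words are runs of letters and digits, similar to configuring `token_chars` with `letter` and `digit`, and it takes `min_gram` and `max_gram` explicitly. Elasticsearch's own defaults are 1 and 2, so the `Hello` example needs a larger `max_gram`.

```python
import re


def whitespace_tokenizer(text):
    # Split on whitespace only; punctuation stays attached to words.
    return text.split()


def edge_ngram_tokenizer(text, min_gram, max_gram):
    # Sketch assumption: words are runs of letters/digits; emit each word's
    # prefixes whose length is between min_gram and max_gram.
    tokens = []
    for word in re.findall(r"[A-Za-z0-9]+", text):
        for length in range(min_gram, min(max_gram, len(word)) + 1):
            tokens.append(word[:length])
    return tokens


print(whitespace_tokenizer("Let's build an autocomplete!"))
# ["Let's", 'build', 'an', 'autocomplete!']
print(edge_ngram_tokenizer("Hello", min_gram=1, max_gram=5))
# ['H', 'He', 'Hel', 'Hell', 'Hello']
```

The second output also shows why edge n-grams grow the index: a single five-letter word already produces five tokens.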

Note that you need to be careful with Tokenizers that emit many tokens per word, such as the Edge N-Gram Tokenizer, because they can slow down your insert process.

Token Filter

Token Filtering is the third and final step of Analysis. It transforms the tokens depending on the Token Filters we use. In the Token Filtering step, we can lowercase the terms, remove stop words, and add synonyms.

Elasticsearch also has many Token Filters, which you can read about in its documentation.

The most common use of a Token Filter is the lowercase token filter, which lowercases all your tokens.
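Token filters operate on the token list rather than on raw text. The Python sketch below models a lowercase filter and a stop-word filter. The stop-word set is a small illustrative subset, an assumption rather than Elasticsearch's actual default list, and both function names are hypothetical.

```python
# A small illustrative subset of English stop words (assumption; the real
# `stop` filter ships with a longer default list).
STOP_WORDS = {"a", "an", "and", "is", "the", "to", "of"}


def lowercase_filter(tokens):
    return [token.lower() for token in tokens]


def stop_filter(tokens, stop_words=STOP_WORDS):
    return [token for token in tokens if token not in stop_words]


tokens = ["Let's", "Build", "An", "Autocomplete"]
print(stop_filter(lowercase_filter(tokens)))  # ["let's", 'build', 'autocomplete']
```

Filter order matters in this sketch. Running `stop_filter` before `lowercase_filter` would keep `An`, because the stop list is lowercase.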

Standard Analyzer

The Standard Analyzer is Elasticsearch’s default analyzer. If you don’t specify any analyzer in the mapping, your field uses this analyzer. It uses grammar-based tokenization as specified in https://unicode.org/reports/tr29/, and it works pretty well with most languages.

The standard analyzer uses:

- Standard Tokenizer

- Lower Case Token Filter

- Stop Token Filter (disabled by default)

So with those components, it basically:

- Tokenizes the text on word boundaries, roughly white space and punctuation

- Lowercases the tokens

- Removes stop words, if you enable the Stop Token Filter

Let’s try analyzing the text “Let’s learn about Analyzer!” with the Standard Analyzer:

Request

```bash
curl --request GET \
  --url http://localhost:9200/_analyze \
  --header 'Content-Type: application/json' \
  --data '{
    "analyzer": "standard",
    "text": "Let'\''s learn about Analyzer!"
  }'
```

Response

```json
{
  "tokens": [
    {
      "token": "let's",
      "start_offset": 0,
      "end_offset": 5,
      "type": "<ALPHANUM>",
      "position": 0
    },
    {
      "token": "learn",
      "start_offset": 6,
      "end_offset": 11,
      "type": "<ALPHANUM>",
      "position": 1
    },
    {
      "token": "about",
      "start_offset": 12,
      "end_offset": 17,
      "type": "<ALPHANUM>",
      "position": 2
    },
    {
      "token": "analyzer",
      "start_offset": 18,
      "end_offset": 26,
      "type": "<ALPHANUM>",
      "position": 3
    }
  ]
}
```

We can see that the Standard Analyzer splits the text into tokens on white space. It also drops the punctuation !, because the Standard Tokenizer treats punctuation as a word boundary rather than as part of a token. We can also see that all the tokens are lowercased, because the Standard Analyzer uses the Lower Case Token Filter.

In my previous article, “Create a Simple Autocomplete With Elasticsearch”, we used only the Standard Analyzer and still managed to build a simple autocomplete. By using a custom analyzer that contains our chosen Character Filters, Tokenizer, and Token Filters, we can build a more advanced autocomplete that produces more relevant results.

Custom Analyzer

A Custom Analyzer is an analyzer whose name and components we define ourselves.

To create a custom analyzer, we have to define it in the index settings, which we can do when creating the index:
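The offsets in this response can be reproduced with a rough Python approximation of the Standard Analyzer. The assumption here is ASCII-only text: a token is a run of letters and digits, optionally joined by apostrophes. The real Standard Tokenizer implements the full Unicode word-boundary rules, so treat `standard_like_analyze` (a hypothetical name) as an illustration only.

```python
import re

# Rough ASCII-only approximation of the Standard Tokenizer (assumption):
# a token is a run of letters/digits, optionally joined by apostrophes.
# The real tokenizer implements the Unicode word-boundary rules (UAX #29).
WORD_RE = re.compile(r"[A-Za-z0-9]+(?:'[A-Za-z0-9]+)*")


def standard_like_analyze(text):
    tokens = []
    for position, match in enumerate(WORD_RE.finditer(text)):
        tokens.append({
            "token": match.group().lower(),  # Lower Case Token Filter
            "start_offset": match.start(),
            "end_offset": match.end(),
            "position": position,
        })
    return tokens


for token in standard_like_analyze("Let's learn about Analyzer!"):
    print(token)
```

In this sketch the `!` never matches the token pattern, so it falls between tokens and is dropped. That is the same effect the response above shows.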

```bash
curl --request PUT \
  --url http://localhost:9200/autocomplete-custom-analyzer \
  --header 'Content-Type: application/json' \
  --data '{
    "settings": {
      "analysis": {
        "analyzer": {
          "cust_analyzer": {
            "type": "custom",
            "tokenizer": "whitespace",
            "char_filter": [
              "html_strip"
            ],
            "filter": [
              "lowercase"
            ]
          }
        }
      }
    }
  }'
```

We just created a custom analyzer, cust_analyzer, with the Whitespace Tokenizer, the html_strip character filter, and the lowercase token filter. But is there any way to test the analyzer before we use it in our index?

There is: we can call the Analyze API on the index itself. Let’s try it with the text “<b>Let’s build an autocomplete!</b>” and compare the result with the standard analyzer:

Standard Analyzer

Request

```bash
curl --request POST \
  --url http://localhost:9200/autocomplete-custom-analyzer/_analyze \
  --header 'Content-Type: application/json' \
  --data '{
    "analyzer": "standard",
    "text": "<b>Let'\''s build an autocomplete!</b>"
  }'
```

Response

```json
{
  "tokens": [
    {
      "token": "b",
      "start_offset": 1,
      "end_offset": 2,
      "type": "<ALPHANUM>",
      "position": 0
    },
    {
      "token": "let's",
      "start_offset": 3,
      "end_offset": 8,
      "type": "<ALPHANUM>",
      "position": 1
    },
    {
      "token": "build",
      "start_offset": 9,
      "end_offset": 14,
      "type": "<ALPHANUM>",
      "position": 2
    },
    {
      "token": "an",
      "start_offset": 15,
      "end_offset": 17,
      "type": "<ALPHANUM>",
      "position": 3
    },
    {
      "token": "autocomplete",
      "start_offset": 18,
      "end_offset": 30,
      "type": "<ALPHANUM>",
      "position": 4
    },
    {
      "token": "b",
      "start_offset": 33,
      "end_offset": 34,
      "type": "<ALPHANUM>",
      "position": 5
    }
  ]
}
```

cust_analyzer

Request

```bash
curl --request POST \
  --url http://localhost:9200/autocomplete-custom-analyzer/_analyze \
  --header 'Content-Type: application/json' \
  --data '{
    "analyzer": "cust_analyzer",
    "text": "<b>Let'\''s build an autocomplete!</b>"
  }'
```

Response

```json
{
  "tokens": [
    {
      "token": "let's",
      "start_offset": 0,
      "end_offset": 5,
      "type": "word",
      "position": 0
    },
    {
      "token": "build",
      "start_offset": 6,
      "end_offset": 11,
      "type": "word",
      "position": 1
    },
    {
      "token": "an",
      "start_offset": 12,
      "end_offset": 14,
      "type": "word",
      "position": 2
    },
    {
      "token": "autocomplete!",
      "start_offset": 15,
      "end_offset": 28,
      "type": "word",
      "position": 3
    }
  ]
}
```

We can see some differences between them:

- The results of the `standard` analyzer contain two `b` tokens, while `cust_analyzer` has none. This happens because `cust_analyzer` strips away the HTML tags completely.

- The `standard` analyzer splits the text on white space and on special characters like `<`, `>`, and `!`, while `cust_analyzer` splits the text only on white space.

- The `standard` analyzer strips away special characters, while `cust_analyzer` does not. We can see this in the difference between the `autocomplete` and `autocomplete!` tokens.
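Both token lists can be reproduced with a small Python sketch. Two simplifying assumptions apply: an ASCII-only regex stands in for the Standard Tokenizer, and a tag-removing regex stands in for `html_strip`. Neither is Elasticsearch's implementation, and `standard_like` and `cust_like` are hypothetical names.

```python
import re

# Rough ASCII-only approximation of the Standard Tokenizer (assumption).
WORD_RE = re.compile(r"[A-Za-z0-9]+(?:'[A-Za-z0-9]+)*")


def standard_like(text):
    # No character filter; tokenize on word boundaries, then lowercase.
    return [m.group().lower() for m in WORD_RE.finditer(text)]


def cust_like(text):
    # html_strip (very rough regex stand-in) -> whitespace -> lowercase.
    text = re.sub(r"<[^>]+>", "", text)
    return [token.lower() for token in text.split()]


text = "<b>Let's build an autocomplete!</b>"
print(standard_like(text))  # ['b', "let's", 'build', 'an', 'autocomplete', 'b']
print(cust_like(text))      # ["let's", 'build', 'an', 'autocomplete!']
```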

So everything is as we expected: our analyzer behaves the way we want. The last step is to apply it to a field through the mapping:

```bash
curl --request PUT \
  --url http://localhost:9200/autocomplete-custom-analyzer/_mapping \
  --header 'Content-Type: application/json' \
  --data '{
    "properties": {
      "standard-text": {
        "type": "text",
        "analyzer": "standard"
      },
      "cust_analyzer-text": {
        "type": "text",
        "analyzer": "cust_analyzer"
      }
    }
  }'
```

Now the standard-text field uses the standard analyzer, and cust_analyzer-text uses cust_analyzer.

Let’s index the text “<b>Let’s build an autocomplete!</b>” into each field, as two separate documents, and try it out!

```bash
curl --request POST \
  --url http://localhost:9200/autocomplete-custom-analyzer/_doc \
  --header 'Content-Type: application/json' \
  --data '{
    "standard-text": "<b>Let'\''s build an autocomplete!</b>"
  }'

curl --request POST \
  --url http://localhost:9200/autocomplete-custom-analyzer/_doc \
  --header 'Content-Type: application/json' \
  --data '{
    "cust_analyzer-text": "<b>Let'\''s build an autocomplete!</b>"
  }'
```

Let’s query the term b on both fields with a bool query and see what happens:

Request

```bash
curl --request POST \
  --url http://localhost:9200/autocomplete-custom-analyzer/_search \
  --header 'Content-Type: application/json' \
  --data '{
    "query": {
      "bool": {
        "should": [
          {
            "term": {
              "standard-text": "b"
            }
          },
          {
            "term": {
              "cust_analyzer-text": "b"
            }
          }
        ]
      }
    }
  }'
```

Response

```json
{
  "took": 4,
  "timed_out": false,
  "_shards": {
    "total": 1,
    "successful": 1,
    "skipped": 0,
    "failed": 0
  },
  "hits": {
    "total": {
      "value": 1,
      "relation": "eq"
    },
    "max_score": 0.39556286,
    "hits": [
      {
        "_index": "autocomplete-custom-analyzer",
        "_type": "_doc",
        "_id": "Zb6ZoXUB41vbg1J3Q6nx",
        "_score": 0.39556286,
        "_source": {
          "standard-text": "<b>Let's build an autocomplete!</b>"
        }
      }
    ]
  }
}
```

The only result is the document we indexed into the field that uses the standard analyzer. The standard analyzer has no html_strip character filter, so the b from the HTML tags becomes a token, while cust_analyzer strips the tags before tokenizing.

Let’s try one more query, autocomplete!:

Request

```bash
curl --request POST \
  --url http://localhost:9200/autocomplete-custom-analyzer/_search \
  --header 'Content-Type: application/json' \
  --data '{
    "query": {
      "bool": {
        "should": [
          {
            "term": {
              "standard-text": "autocomplete!"
            }
          },
          {
            "term": {
              "cust_analyzer-text": "autocomplete!"
            }
          }
        ]
      }
    }
  }'
```

Response

```json
{
  "took": 3,
  "timed_out": false,
  "_shards": {
    "total": 1,
    "successful": 1,
    "skipped": 0,
    "failed": 0
  },
  "hits": {
    "total": {
      "value": 1,
      "relation": "eq"
    },
    "max_score": 0.2876821,
    "hits": [
      {
        "_index": "autocomplete-custom-analyzer",
        "_type": "_doc",
        "_id": "Zr6ZoXUB41vbg1J3VKmt",
        "_score": 0.2876821,
        "_source": {
          "cust_analyzer-text": "<b>Let's build an autocomplete!</b>"
        }
      }
    ]
  }
}
```

Now the only result is the document we indexed with cust_analyzer. The standard analyzer splits the text on white space and punctuation, so it removes the ! character. The cust_analyzer only splits the text on white space, so the ! is kept.
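Both experiments can be mirrored in a small Python simulation. It builds one inverted index per field, each with its own analyzer, and evaluates a `bool` query whose `should` clauses are exact term lookups, so a document matches if any clause matches. This is a sketch under stated assumptions. The analyzers are the same rough regex approximations as before, the document ids are hypothetical, and scoring is ignored.

```python
import re
from collections import defaultdict

WORD_RE = re.compile(r"[A-Za-z0-9]+(?:'[A-Za-z0-9]+)*")


def standard_like(text):
    # Rough ASCII-only approximation of the standard analyzer (assumption).
    return [m.group().lower() for m in WORD_RE.finditer(text)]


def cust_like(text):
    # Regex stand-in for html_strip, then whitespace split, then lowercase.
    return [t.lower() for t in re.sub(r"<[^>]+>", "", text).split()]


FIELD_ANALYZERS = {"standard-text": standard_like, "cust_analyzer-text": cust_like}
index = defaultdict(lambda: defaultdict(set))  # field -> token -> doc ids


def index_doc(doc_id, doc):
    for field, value in doc.items():
        for token in FIELD_ANALYZERS[field](value):
            index[field][token].add(doc_id)


def bool_should_term(terms):
    # Term clauses are not analyzed; any matching clause is enough.
    hits = set()
    for field, term in terms.items():
        hits |= index[field].get(term, set())
    return sorted(hits)


text = "<b>Let's build an autocomplete!</b>"
index_doc("doc-standard", {"standard-text": text})
index_doc("doc-cust", {"cust_analyzer-text": text})

print(bool_should_term({"standard-text": "b", "cust_analyzer-text": "b"}))
print(bool_should_term({"standard-text": "autocomplete!", "cust_analyzer-text": "autocomplete!"}))
```

The first query finds only `doc-standard` and the second only `doc-cust`, matching the two responses above.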

Conclusion

The Analyzer is an important component to learn if you want to create a good Search Engine. Understanding it is the first step toward controlling which documents are shown to users when they search.

The next steps toward a good Search Engine are understanding relevance score calculation, boosting, and which queries and features to use, which I intend to write about too. So, stay tuned 🙂

Finally, thank you to everyone who read until the end!
