Generating a Concordance

David Berry

Posted on January 28, 2024

Merriam-Webster defines a concordance as an alphabetical index of the principal words in a book or the works of an author with their immediate contexts. It is useful for building an index of words in a given text. In this article we will build a concordance generator using Java. The concordance should list the word, the number of times it appears in the text, and the sentences in which the word appears. The text that we will work with is the Gettysburg Address

Creating the project

Let's start by creating the project. We will use the maven quickstart archetype to generate and empty project:

mvn archetype:generate -DarchetypeGroupId=org.apache.maven.archetypes -DarchetypeArtifactId=maven-archetype-quickstart -DarchetypeVersion=1.4

You will be asked to fill in the following questions, and can use groupIds and packages that make sense for you:

Define value for property 'groupId': org.exaxis.concordance

Define value for property 'artifactId': concordance

Define value for property 'version' 1.0-SNAPSHOT: :

Define value for property 'package' org.exaxis.concordance: :

Confirm properties configuration:

groupId: org.exaxis.concordance

artifactId: concordance

version: 1.0-SNAPSHOT

package: org.exaxis.concordance

Y: : Y

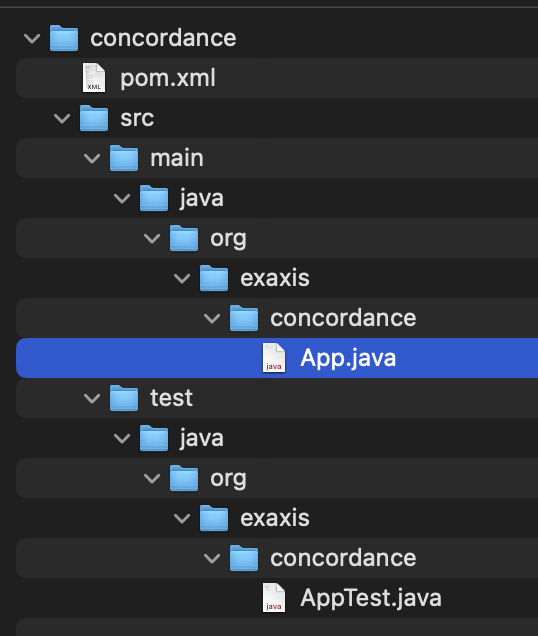

When you are done, you should have a project that looks like this:

Setting Up Main

The first thing we are going to do is setup main. By default, quickstart sets up main to be a "Hello World" program.

/**

* Hello world!

*

*/

public class App

{

public static void main( String[] args )

{

System.out.println( "Hello World!" );

}

}

We want to keep all the logic out of main so we will need a Concordance class to encapsulate the logic. Main will instantiate a Concordance from a file name and print out the concordance. We will also need to get the filename from the args passed into main. The following code should suffice:

/**

* Generate a Concordance from the file passed in

*/

public class App

{

public static void main( String[] args )

{

// make sure we have enough arguments

if (args.length==0)

usage();

// grab the filename from the last argument

String filename = args[args.length-1];

try {

//load the concordance from the file

Concordance concordance = Concordance.fromFile(filename);

// print the concordance

concordance.print(System.out);

} catch (Exception e) {

errorMessageAndExit("Error processing file: "+filename+e.getLocalizedMessage());

}

}

private static void usage(){

errorMessageAndExit("Usage: java -jar target/concordance-1.0-SNAPSHOT.jar inputFile");

}

private static void errorMessageAndExit(String message){

System.err.println(message);

System.exit(1);

}

}

In short, this code:

- makes sure there is at least one argument.

- assumes the last argument is the filename.

- Creates a concordance using the Concordance.fromFile() factory method.

- Prints the concordance to System.out using the Concordance.print() method.

- If the concordance throws an exception, the error will be printed and the return code will be 1.

The Concordance class

In order for App.main to compile, we will need a Concordance Class with a static fromFile method, and a print method. Here is the basic class

public class Concordance {

public void print(PrintStream printStream) {

printStream.println("Not Implemented");

}

public static Concordance fromFile(String filename) throws IOException {

return fromReader(new FileReader(filename));

}

public static Concordance fromString(String s) throws IOException {

return fromReader(new StringReader(s));

}

public static Concordance fromReader(Reader reader) throws IOException {

try (BufferedReader bufferedReader = new BufferedReader(reader)) {

return fromBufferedReader(bufferedReader);

} catch (Exception e) {

throw e;

}

}

public static Concordance fromBufferedReader(BufferedReader bufferedReader) throws IOException {

// Process the text

process(bufferedReader);

// return the Concordance

return new Concordance();

}

private static void process(BufferedReader bufferedReader) throws IOException {

}

}

There are several factory methods (fromFile, fromString, fromReader, and fromBufferedReader) to allow us to turn a file or a string into a BufferedReader. A file will be used from the command line and strings can be used for testing. It is fromBufferedReader where the text is processed and a Concordance is generated and returned.

But what is a concordance? As stated above, the concordance is an alphabetical list of words and there context, being the number of occurrences and the sentences that the word appears in.

What structure(s) would be best for storing this info. Let's start with the word usage info. We would need the word, the occurrences, and the list of sentences that it appears in. The sentences can be represented by the sentence number in the text. The following class would work:

public class WordUsage {

private final String word;

private final ArrayList<Integer> sentences = new ArrayList<>();

public WordUsage(String word, int sentence){

this.word = word;

addSentence(sentence);

}

public WordUsage(String word, int[] locations){

this.word = word;

Arrays.sort(locations);

for (int sentence : locations)

this.sentences.add(sentence);

}

public String getWord() {

return word;

}

public int getOccurrences() {

return sentences.size();

}

public List<Integer> getSentences() {

return sentences;

}

public void addSentence(int sentence){

sentences.add(sentence);

}

}

WordUsage stores the word, and the sentences that it appears in. The occurrences is the number of sentences it appears in. There is a constructor for creating the WordUsage for the first sentence, or multiple sentences at once. addSentence allows you to add additional sentences to the WordUsage as they are found.

Now we need a structure to store the words alphabetically with their usage data. A TreeMap seems to good fit as it is a sorted map that can hold the usage data for the word. We can add a TreeMap<String, WordUsage> to the Concordance class to hold the concordance data. Using the TreeMap means that we can retrieve the sorted WordUsages without any additional sorting. We can now define a constructor which takes the TreeMap to instantiate the Concordance class. This also gives us the return value for the process method, and we can complete the fromBufferedReader factory method. The updated code is:

public class Concordance {

private final TreeMap<String, WordUsage> wordMap;

private Concordance(TreeMap<String, WordUsage> wordMap){

this.wordMap = wordMap;

}

public static Concordance fromBufferedReader(BufferedReader bufferedReader) throws IOException {

// Process the text

// return the Concordance

return new Concordance(process(bufferedReader));

}

private static TreeMap<String, WordUsage> process(BufferedReader bufferedReader) throws IOException {

assert(bufferedReader != null);

TreeMap<String, WordUsage> wordUsageMap = new TreeMap<>();

// process the bufferedReader

return wordUsageMap;

}

}

The only thing left to do is generate the TreeMap<String, WordUsage>. In order to make sure it is as efficient as possible we will implement a simple single-pass lexer which will break the text into words to be added to the concordance. Whitespace, punctuation, and end of sentence characters will be used to identify word boundries. End of sentence characters will also be used to increment the current sentence number. Here is the code for the processor:

private static TreeMap<String, WordUsage> process(BufferedReader bufferedReader) throws IOException {

assert(bufferedReader != null);

TreeMap<String, WordUsage> wordUsageMap = new TreeMap<>();

// process the bufferedReader

int currentSentence = 1;

StringBuilder stringBuilder = new StringBuilder();

// Use a simple single-pass lexer to return the words of the corpus

int ch = bufferedReader.read();

while (ch != -1){

char curr = (char)ch;

// Are we at the end of the word

if (Character.isWhitespace(curr)||isEndOfSentence(curr)||isPunctuation(curr)) {

if (!stringBuilder.isEmpty()){

// add the word to the TreeMap

addWord(wordUsageMap, stringBuilder.toString().toLowerCase(), currentSentence);

stringBuilder = new StringBuilder();

}

if (isEndOfSentence(curr)) {

++currentSentence;

}

} else {

stringBuilder.append(curr);

}

ch = bufferedReader.read();

}

return wordUsageMap;

}

private static void addWord(TreeMap<String, WordUsage> wordStatsMap, String word, int sentence){

WordUsage wordUsage = wordStatsMap.get(word);

if (wordUsage == null) {

wordStatsMap.put(word, new WordUsage(word, sentence));

} else {

wordUsage.addSentence(sentence);

}

}

private static boolean isEndOfSentence(char ch){

return ch=='.'||ch=='?'||ch=='!';

}

private static boolean isPunctuation(char ch){

return ch == ',' || ch == ':' || ch == ';' || ch == '"' || ch == '\'' || ch == '(' || ch == ')' || ch == '-';

}

The only thing left is to fill in the Concordance.print method so we can print out the results of the concordance. Since the printing will require printing the WordUsages, we will add a toString method to the WordUsage class. Here are the print and toString methods:

Concordance.java

public void print(PrintStream printStream) {

for (WordUsage wordUsage : wordMap.values()){

printStream.println(wordUsage.toString());

}

}

WordUsage.java

private String printLocations(){

StringBuilder sb = new StringBuilder();

for(int sentence: sentences){

sb.append(sentence);

sb.append(',');

}

sb.deleteCharAt(sb.length()-1);

return sb.toString();

}

@Override

public String toString() {

return String.format("%15s: {%d:%s}", getWord(), getOccurrences(), printLocations());

}

Finally, Running the concordance on the Gettysburg Address is done as follows:

java -jar target/concordance-1.0-SNAPSHOT.jar src/test/resources/GettysburgAddress.txt

and produces the following output:

a: {7:1,2,3,4,4,6,10}

above: {1:7}

add: {1:7}

advanced: {1:9}

ago: {1:1}

all: {1:1}

altogether: {1:5}

and: {6:1,1,2,5,7,10}

any: {1:2}

are: {3:1,2,3}

as: {1:4}

battlefield: {1:3}

be: {2:9,10}

before: {1:10}

birth: {1:10}

brave: {1:7}

brought: {1:1}

but: {2:6,8}

by: {1:10}

can: {5:2,6,6,6,8}

cause: {1:10}

civil: {1:2}

come: {1:4}

conceived: {2:1,2}

consecrate: {1:6}

consecrated: {1:7}

continent: {1:1}

created: {1:1}

dead: {3:7,10,10}

dedicate: {2:4,6}

dedicated: {4:1,2,9,10}

detract: {1:7}

devotion: {2:10,10}

did: {1:8}

died: {1:10}

do: {1:5}

earth: {1:10}

endure: {1:2}

engaged: {1:2}

equal: {1:1}

far: {2:7,9}

fathers: {1:1}

field: {1:4}

final: {1:4}

fitting: {1:5}

for: {5:4,9,10,10,10}

forget: {1:8}

forth: {1:1}

fought: {1:9}

four: {1:1}

freedom: {1:10}

from: {2:10,10}

full: {1:10}

gave: {2:4,10}

god: {1:10}

government: {1:10}

great: {3:2,3,10}

ground: {1:6}

hallow: {1:6}

have: {5:4,7,9,10,10}

here: {8:4,7,8,8,9,9,10,10}

highly: {1:10}

honored: {1:10}

in: {4:1,2,6,10}

increased: {1:10}

is: {3:5,9,10}

it: {5:5,7,8,9,10}

larger: {1:6}

last: {1:10}

liberty: {1:1}

little: {1:8}

live: {1:4}

lives: {1:4}

living: {2:7,9}

long: {2:2,8}

measure: {1:10}

men: {2:1,7}

met: {1:3}

might: {1:4}

nation: {5:1,2,2,4,10}

never: {1:8}

new: {2:1,10}

nobly: {1:9}

nor: {1:8}

not: {5:6,6,6,10,10}

note: {1:8}

now: {1:2}

of: {5:3,4,10,10,10}

on: {2:1,3}

or: {2:2,7}

our: {2:1,7}

people: {3:10,10,10}

perish: {1:10}

place: {1:4}

poor: {1:7}

portion: {1:4}

power: {1:7}

proper: {1:5}

proposition: {1:1}

rather: {2:9,10}

remaining: {1:10}

remember: {1:8}

resolve: {1:10}

resting: {1:4}

say: {1:8}

score: {1:1}

sense: {1:6}

seven: {1:1}

shall: {3:10,10,10}

should: {1:5}

so: {3:2,2,9}

struggled: {1:7}

take: {1:10}

task: {1:10}

testing: {1:2}

that: {13:1,2,3,4,4,4,5,10,10,10,10,10,10}

the: {11:1,7,8,9,9,10,10,10,10,10,10}

their: {1:4}

these: {2:10,10}

they: {3:8,9,10}

this: {4:1,5,6,10}

those: {1:4}

thus: {1:9}

to: {8:1,4,7,9,9,10,10,10}

under: {1:10}

unfinished: {1:9}

us: {3:9,10,10}

vain: {1:10}

war: {2:2,3}

we: {10:2,3,4,5,6,6,6,8,10,10}

what: {2:8,8}

whether: {1:2}

which: {2:9,10}

who: {3:4,7,9}

will: {1:8}

work: {1:9}

world: {1:8}

years: {1:1}

Conclusion

A concordance generator is a useful tool to build an index of words from a body of text. The body of text can be processed in a single pass, and the sorted list of word usage information is simply the values of the TreeMap

The code for this project can be found on GitHub.

Posted on January 28, 2024

Join Our Newsletter. No Spam, Only the good stuff.

Sign up to receive the latest update from our blog.