Brian Douglas

Posted on March 9, 2023

This TikTok/Tweet about Circuit City is blowing up right now. The premise: Circuit City went out of business during the last recession, so you can add Circuit City to your resume.

Related to that, I want to walk through twitterBio.com, one of the first AI projects I had the pleasure of seeing, built by @nutlope and shared on Twitter.

Header image generated using Midjourney.

What is twitterBio?

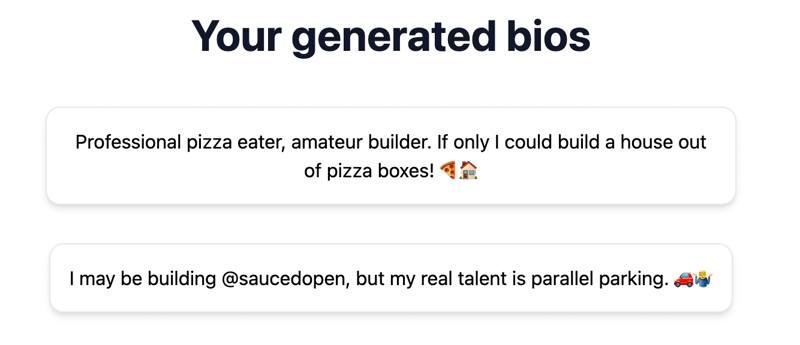

twitterBio generates your Twitter bio with OpenAI's GPT and Vercel Edge Functions. The experience is straightforward: you paste in your current bio, and the tool returns a generated bio based on the type you select.

I was pretty impressed with the "funny" bio generated for me.

Nutlope/twitterbio

Generate your Twitter bio with Mixtral and GPT-3.5.

How does it work?

I covered how OpenAI works in previous posts in my 9 days of OpenAI series, so don't expect a deep dive there. But when I look at this code, one thing jumps out: the OpenAIStream service.

In this Next.js codebase, all API interactions live in the pages/api folder. That makes it easy to focus on how the OpenAI call works, because I know where to look.

But I digress. Here is the API route that generates the bio. Note that the work of calling OpenAI is abstracted into the OpenAIStream util, which takes an OpenAIStreamPayload.

```typescript
// pages/api/generate.ts
const payload: OpenAIStreamPayload = {
  model: "gpt-3.5-turbo", // "ChatGPT API"
  messages: [{
    role: "user",
    content: prompt
  }],
  temperature: 0.7,
  top_p: 1,
  frequency_penalty: 0,
  presence_penalty: 0,
  max_tokens: 200,
  stream: true,
  n: 1,
};

const stream = await OpenAIStream(payload);
```
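To make the shape of that request easier to see, here is a minimal sketch of the payload as a typed helper. The `ChatMessage` type, the `buildPayload` name, and the interface fields are my own assumptions modeled on the snippet above, not the repo's actual type definitions, and the prompt string is a placeholder.

```typescript
// Hypothetical types, modeled on the fields shown in the API route above.
// They are NOT copied from the twitterbio repo.
type ChatRole = "system" | "user" | "assistant";

interface ChatMessage {
  role: ChatRole;
  content: string;
}

interface OpenAIStreamPayload {
  model: string;
  messages: ChatMessage[];
  temperature: number;
  top_p: number;
  frequency_penalty: number;
  presence_penalty: number;
  max_tokens: number;
  stream: boolean;
  n: number;
}

// buildPayload is an illustrative helper (assumed name) that wraps a prompt
// in the same settings the API route uses.
function buildPayload(prompt: string): OpenAIStreamPayload {
  return {
    model: "gpt-3.5-turbo",
    messages: [{ role: "user", content: prompt }],
    temperature: 0.7,
    top_p: 1,
    frequency_penalty: 0,
    presence_penalty: 0,
    max_tokens: 200,
    stream: true, // request a streamed response instead of a single JSON body
    n: 1,
  };
}

// Placeholder prompt, for illustration only.
const payload = buildPayload("my current bio goes here");
console.log(JSON.stringify(payload.messages));
```

Keeping the settings in one function like this would make it easy to see that only the prompt changes between requests.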

Now let's look at OpenAIStream. This util wraps the response in a ReadableStream, which comes from the Web Streams API (also available in Node). I was unfamiliar with it, and to my surprise the MDN docs on ReadableStream were a bit dense.

To put it plainly, a ReadableStream lets you deal with data as it comes in. OpenAI may fragment your response into multiple chunks while it processes your prompt, which is where reading the stream comes in handy.

Note: the inline comments are the developer's.
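If "deal with data as it comes in" feels abstract, this small sketch may help. It is not from the twitterbio repo: `makeChunkedStream` and `readAll` are hypothetical names, and the stream is fed from a hard-coded array rather than a real OpenAI response. It assumes a runtime where `ReadableStream`, `TextEncoder`, and `TextDecoder` are globals (for example Node 18+ or a browser).

```typescript
// Hypothetical stand-in for a response that arrives in pieces.
function makeChunkedStream(parts: string[]): ReadableStream<Uint8Array> {
  const encoder = new TextEncoder();
  return new ReadableStream<Uint8Array>({
    start(controller) {
      // Each enqueue() becomes one chunk for the reader.
      for (const part of parts) {
        controller.enqueue(encoder.encode(part));
      }
      controller.close();
    },
  });
}

// Read the stream chunk by chunk, handing each piece to onChunk as it arrives,
// and return the full text once the stream is done.
async function readAll(
  stream: ReadableStream<Uint8Array>,
  onChunk: (text: string) => void = () => {}
): Promise<string> {
  const reader = stream.getReader();
  const decoder = new TextDecoder();
  let result = "";
  while (true) {
    const { done, value } = await reader.read();
    if (done) break;
    // { stream: true } keeps multi-byte characters intact across chunk boundaries.
    const text = decoder.decode(value, { stream: true });
    onChunk(text);
    result += text;
  }
  result += decoder.decode(); // flush any buffered bytes
  return result;
}

readAll(makeChunkedStream(["Dev", "Rel ", "person"]), (text) =>
  console.log("chunk:", text)
).then((full) => console.log("full:", full));
```

In a UI, the `onChunk` callback is where you would append each piece to the page so the bio appears as it is generated.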

```typescript
// utils/OpenAIStream.ts
const stream = new ReadableStream({
  async start(controller) {
    // callback
    function onParse(event: ParsedEvent | ReconnectInterval) {
      if (event.type === "event") {
        const data = event.data;
        // https://beta.openai.com/docs/api-reference/completions/create#completions/create-stream
        ...
        try {
          const json = JSON.parse(data);
          const text = json.choices[0].delta?.content || "";
          if (counter < 2 && (text.match(/\n/) || []).length) {
            // this is a prefix character (i.e., "\n\n"), do nothing
            return;
          }
          const queue = encoder.encode(text);
          controller.enqueue(queue);
          counter++;
        } catch (e) {
          // maybe parse error
          controller.error(e);
        }
      }
    }

    // stream response (SSE) from OpenAI may be fragmented into multiple chunks
    // this ensures we properly read chunks and invoke an event for each SSE event stream
    const parser = createParser(onParse);
    // https://web.dev/streams/#asynchronous-iteration
    for await (const chunk of res.body as any) {
      parser.feed(decoder.decode(chunk));
    }
  },
});
```
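The comment about fragmented SSE chunks is the part worth slowing down on. Here is a simplified, hand-rolled sketch of what a parser like the `createParser` call above has to do: buffer incoming text and only emit an event once a complete one has arrived. `createSseBuffer` is a hypothetical name, it only handles single `data:` lines separated by a blank line, and it is not how the library is actually implemented.

```typescript
// Hypothetical, simplified SSE buffer. It assumes events are separated by "\n\n"
// and only looks at `data:` lines; a real parser handles much more than this.
function createSseBuffer(onData: (data: string) => void) {
  let buffer = "";
  return {
    feed(chunk: string): void {
      buffer += chunk;
      let boundary = buffer.indexOf("\n\n");
      // Emit every complete event currently sitting in the buffer.
      while (boundary !== -1) {
        const rawEvent = buffer.slice(0, boundary);
        buffer = buffer.slice(boundary + 2);
        for (const line of rawEvent.split("\n")) {
          if (line.startsWith("data:")) {
            onData(line.slice(5).trimStart());
          }
        }
        boundary = buffer.indexOf("\n\n");
      }
      // Anything left over is an incomplete event; keep it for the next feed().
    },
  };
}

// Two network chunks that split the first JSON event in the middle.
const received: string[] = [];
const sse = createSseBuffer((data) => {
  received.push(data);
});
sse.feed('data: {"choices":[{"delta":{"content":"Hel');
sse.feed('lo"}}]}\n\ndata: {"choices":[{"delta":{"content":" world"}}]}\n\n');

for (const data of received) {
  const json = JSON.parse(data);
  console.log(json.choices[0].delta?.content || "");
}
```

Calling `JSON.parse` directly on the first chunk would throw, because it holds only half an event. Buffering until the blank line arrives is what makes the per-event parse safe.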

This project was built on OpenAI streaming early on, but streaming appears to be in production now. You can read more on leveraging streams in the completions documentation.

My takeaway from this code read is that streaming data from OpenAI may be helpful when dealing with larger prompts, like bio or description rewrites. Also, TIL about ReadableStream in Node.

Also, if you have a project leveraging OpenAI or similar, leave a link in the comments. I'd love to take a look and include it in my 9 days of OpenAI series.

Find more AI projects using OpenSauced

Stay saucy.
