Using The Microsoft Face API to Create Mario Kart Astrology

Chloe Condon 🎀

Posted on May 20, 2019

The following blog post will walk you through how to use the Microsoft Azure Face API to detect emotions from images. If you’re looking for a quick summary and overview of the Face API, I recommend starting here with our documentation, or taking 5 minutes to complete a Quickstart Tutorial, so you have some context on how to use the API and deploy a React App to GitHub Pages. You can also view the GitHub repo here.

If you’d like to skip straight to the code, scroll down to the Let’s get to the code! section below.

🍄⭐️🏁 Or, if you want to skip ahead and see what your Mario Kart player says about you (based on my personal and very non-scientific analysis), go here: https://chloecodesthings.github.io/Mario-Kart-Astrology/ 🏁⭐️🍄

Happy learning!

-Chloe

P.S. For folks who prefer to listen/watch, there's a video of me talking about this on YouTube! 🍿🎬

👉Optional sound bite to get this party started. 🍄⭐️🕹

It all began with a tweet a couple months ago:

In my opinion, your chosen Mario Kart player says WAY more than a zodiac sign does. If they choose Toad, you're like "ok- you're an introvert", and if they choose Bowser you're like "ok who hurt u?". 14:35 PM - 21 Mar 2019

It was something silly and fun that I thought I’d share with the internet (for context, most of my tweets are really bad engineering dad jokes). I had always been interested in astrology- it’s by no means science, but I found it interesting that folks have historically attached meaning to the stars. While I find star signs and horoscopes to be interesting, I have never assigned too much value to them. It felt like a lot of generalizations based on my date of birth, which I had zero control over. Also, perhaps I was a little over being called “Miss Cleo” all through elementary and middle school… thanks 90s television commercials 😑

However, in my mind, my choice of Mario Kart player says a lot more about my personality than my horoscope sign ever has. Your player isn’t something randomly assigned to you; it’s a big personal choice (from Mario Kart race to race, that is). It not only says something about your personality, but it also speaks to what you value in a player. For me, I have always gone the small/cute/pink route. Usually I play as Peach or Toadette, with an occasional “wild” moment where I’ll be Yoshi. I like these characters because they are lightweight and move fast. I’ve always noticed that my boyfriend Ty Smith plays as Bowser, Wario, and Dry Bones- all very “evil” and somewhat large characters. I asked him why he chooses these and he said, “They’re fun- and the idea of a big lizard on a go-kart is kind of funny”. So, in short, my boyfriend is big and evil… just kidding- he’s a pretty small dude who is very nice. So, while I didn’t think player choices were accurate representations of people’s likes/moods/etc., they were fun to think about and draw correlations from. Much like it’s fun to notice folks who look similar to (or have personality traits similar to) their dogs.

The more I read the comments on my tweets, the more interested I was in the correlation. Folks began to comment asking what their player said about them, and as a joke I began to respond to comments like these:

Which then led Stephen Radachy to make this:

Stephen Radachy (@stephenradachy): @ChloeCondon 10 down 33 to go 🤓😂! docs.google.com/spreadsheets/d… 04:45 AM - 22 Mar 2019

And then make this amazingness:

Stephen Radachy (@stephenradachy): @ChloeCondon Update: Google sheets are 2000 and late, static GH pages are 3008 🤓 stephenradachy.github.io/Mario-Kart-Ast… 08:01 AM - 23 Mar 2019

Yes, Twitter can be trash at times, but then something magical like this happens. 🔮 Stephen made this adorable site using React, NES.css, nes-react, and the Super Mario Wiki and posted it on Twitter. Here’s a screenshot:

The most amazing part? He made it all open source! To quote Stephen, “open source is the best source”! So, after a bit of travel, and playing around with the Microsoft Face API, I finally got around to making my very own Mario Kart Astrology using the Microsoft Face API, GitHub Pages, and a little creativity. Here’s how I did it!

“Let’s-a-go!” ⭐️🍄

Before we get into the technical details, here’s a diagram of what I built and what I’ll be walking you through:

🏁 Let’s get to the code! 🏁

Step 1: Use the Microsoft Face API

A sample image of the Face API from the Microsoft Docs

If you’re unfamiliar with the Azure Face API, it’s a cognitive service that provides algorithms for detecting, recognizing, and analyzing human faces in images. Its capabilities include face detection, face verification, finding similar faces, face grouping, and person identification. For my project, I wanted to be able to detect emotion (and then translate it into a silly “horoscope” type message on my own).

After learning about the Face API with Suz Hinton earlier this year and seeing Susan Ibach’s Confoo talk in Montreal (she used hockey player images to analyze emotion and sentiment- you can view the code examples and slide deck here), I knew I just had to build something fun and creative with it. After reading the Face API docs, and completing a free Python Quickstart on Microsoft Learn (don’t worry- there are other languages for the quickstarts, too!), I was ready to assess my data and start building!

But I quickly ran into a problem… it wasn’t able to detect anything from an animated cartoon face. 😭

So, I put on my creative hat and found a workaround: I couldn’t use animated Mario characters, but I could use Mario cosplay images! And so, I ran the following on various URLs of cosplayer photos:

Here's the code (don’t worry- we’ll break this down in a sec!)

Starting with the code from the Python Quickstart, I began playing with photos I found on the internet of Mario Kart cosplayers. I only needed data returned to me about the emotions of the characters, but I kept attributes like “facialHair” and “age” for fun, to see whether it would detect that the Baby Mario images I used were in fact of a baby (spoiler alert: it did 👶🏻), and whether a fake mustache on a Mario cosplayer would register (another spoiler alert: it did 👨🏻). Once I determined that there were enough images online for me to make “horoscopes” for a majority of Mario Kart characters (sadly, there aren’t too many folks dressing up as Iggy or Ludwig 😭), I began processing images of all the characters. Here is an example and breakdown of the results the Face API returned on a character:

The image below features the Mario Kart character Lemmy Koopa. He’s a rainbow… turtle-looking(?) creature who appears to be pretty happy. He’s also considered a villain in the Mario universe.

Below is a cosplay image I used to run through the Face API to determine the character emotions.

Then I ran the following to get results back on the emotions detected in this image. You’ll notice that I have kept other attributes (in addition to emotion) just for fun under params.

NOTE: You’ll have to insert YOUR-SUBSCRIPTION-KEY & YOUR-FACEAPI-URL from your Microsoft account. Read the Face API documentation for more info.
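For reference, a request like this can be sketched as follows. This is a minimal sketch in the spirit of the Python Quickstart, not necessarily the exact script I ran: it assumes YOUR-FACEAPI-URL is the full detect endpoint (ending in /face/v1.0/detect), it uses only the Python standard library, and the function names build_detect_request and detect_faces are made up for illustration.

```python
import json
import urllib.parse
import urllib.request

# Placeholders from the note above: substitute the values from your own account.
SUBSCRIPTION_KEY = "YOUR-SUBSCRIPTION-KEY"
FACE_API_URL = "YOUR-FACEAPI-URL"  # assumption: the full .../face/v1.0/detect endpoint

# Attributes discussed in this post; emotion is the one the horoscopes rely on.
ATTRIBUTES = ["age", "emotion", "makeup", "accessories",
              "facialHair", "hair", "headPose", "smile"]


def build_detect_request(image_url, key=SUBSCRIPTION_KEY, endpoint=FACE_API_URL):
    """Build (but do not send) a POST request asking the API to analyze image_url."""
    params = urllib.parse.urlencode({
        "returnFaceId": "true",
        "returnFaceLandmarks": "false",
        "returnFaceAttributes": ",".join(ATTRIBUTES),
    })
    body = json.dumps({"url": image_url}).encode("utf-8")
    headers = {
        "Ocp-Apim-Subscription-Key": key,
        "Content-Type": "application/json",
    }
    return urllib.request.Request(
        f"{endpoint}?{params}", data=body, headers=headers, method="POST"
    )


def detect_faces(image_url):
    """Send the request and return the parsed JSON list of detected faces."""
    with urllib.request.urlopen(build_detect_request(image_url)) as response:
        return json.load(response)
```

With real values filled in, calling print(json.dumps(detect_faces(image_url))) on the URL of a publicly reachable photo prints one JSON object per detected face.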

Then, after running my Python file with the command…

python detect-face.py

…the following data is returned.

[{"faceId": "06d686ab-66d7-4d57-b9a3-4e0bfb0e80de", "faceRectangle": {"width": 147, "top": 174, "height": 147, "left": 695}, "faceAttributes": {"emotion": {"sadness": 0.0, "neutral": 0.27, "contempt": 0.001, "disgust": 0.0, "anger": 0.0, "surprise": 0.0, "fear": 0.0, "happiness": 0.729}, "age": 26.0, "makeup": {"lipMakeup": false, "eyeMakeup": false}, "accessories": [{"confidence": 0.89, "type": "glasses"}], "facialHair": {"sideburns": 0.1, "moustache": 0.1, "beard": 0.1}, "hair": {"invisible": false, "hairColor": [{"color": "black", "confidence": 0.83}, {"color": "other", "confidence": 0.64}, {"color": "brown", "confidence": 0.46}, {"color": "blond", "confidence": 0.4}, {"color": "gray", "confidence": 0.4}, {"color": "red", "confidence": 0.23}], "bald": 0.27}, "headPose": {"yaw": 10.7, "roll": 24.2, "pitch": -8.0}, "smile": 0.729}}]

Ok, that’s a bunch of text! Let’s break it down in a more readable format.

Face ID

Each face in the image (only 1 in this case) is given a unique face ID. Note that this ID is temporary: it expires 24 hours after the detection call.

faceId: 06d686ab-66d7-4d57-b9a3-4e0bfb0e80de

Face Rectangle

Every face detected in the image corresponds to a faceRectangle field in the response. It is a set of pixel coordinates (left, top, width, height) marking the located face. Using these coordinates, you can get the location of the face as well as its size. In the API response, faces are listed in size order from largest to smallest.

width: 147, top: 174, height: 147, left: 695
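To make "location and size" concrete, here is a small sketch that turns those four numbers into the corners, center, and area of the box. The FaceRectangle class is a hypothetical helper of my own, not something the Face API provides.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FaceRectangle:
    """Pixel box around a detected face, as returned in the faceRectangle field."""
    left: int
    top: int
    width: int
    height: int

    @property
    def right(self):
        # x coordinate of the right edge
        return self.left + self.width

    @property
    def bottom(self):
        # y coordinate of the bottom edge (y grows downward in image coordinates)
        return self.top + self.height

    @property
    def center(self):
        return (self.left + self.width / 2, self.top + self.height / 2)

    @property
    def area(self):
        return self.width * self.height


# The faceRectangle values from the Lemmy cosplay response.
lemmy_face = FaceRectangle(**{"width": 147, "top": 174, "height": 147, "left": 695})
print(lemmy_face.right, lemmy_face.bottom)  # 842 321
print(lemmy_face.center)                    # (768.5, 247.5)
print(lemmy_face.area)                      # 21609
```

The area is also what you would compare if you wanted to check the largest-to-smallest ordering for an image with several faces.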

Emotions

According to my results, Lemmy is overall a pretty happy Koopa. He shows a small amount of contempt, and is otherwise neutral. Based on these results, I’ll create a fun horoscope later.

sadness: 0.0, neutral: 0.27, contempt: 0.001, disgust: 0.0, anger: 0.0, surprise: 0.0, fear: 0.0, happiness: 0.729
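If you would rather not eyeball the scores, picking out the strongest emotion takes only a few lines. This is a minimal sketch using the scores above; the function names and the 0.05 cutoff are arbitrary choices of mine for illustration.

```python
# Emotion scores copied from the Lemmy cosplay response.
LEMMY_EMOTIONS = {
    "sadness": 0.0, "neutral": 0.27, "contempt": 0.001, "disgust": 0.0,
    "anger": 0.0, "surprise": 0.0, "fear": 0.0, "happiness": 0.729,
}


def dominant_emotion(scores):
    """Return the (name, score) pair with the highest score."""
    return max(scores.items(), key=lambda item: item[1])


def notable_emotions(scores, threshold=0.05):
    """Return every (name, score) pair at or above threshold, strongest first."""
    kept = [(name, score) for name, score in scores.items() if score >= threshold]
    return sorted(kept, key=lambda item: item[1], reverse=True)


print(dominant_emotion(LEMMY_EMOTIONS))   # ('happiness', 0.729)
print(notable_emotions(LEMMY_EMOTIONS))   # [('happiness', 0.729), ('neutral', 0.27)]
```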

MakeUp/Accessories/Facial Hair/Hair/Head Pose/Smile

In this sample, I also requested some attributes in addition to emotion. You’ll notice below that it was able to estimate the person’s age, whether they’re wearing makeup, and any accessories (it’s 0.89 confident that Lemmy is wearing glasses, which I agree with since it’s technically a mask). On this particular image, we don’t get much data back on sideburns/mustache/beard since this person is wearing a wig and mask. We’re detecting a multitude of hair colors since it’s a rainbow wig. The head pose is also listed- if you specify this attribute when detecting faces (see How to Detect Faces), you will be able to query it later (you can read more about the capabilities of head pose here). And last but not least- smile (pretty self-explanatory)!

age: 26.0

makeup
  lipMakeup: false
  eyeMakeup: false

accessories
  type: glasses, confidence: 0.89

facialHair
  sideburns: 0.1
  moustache: 0.1
  beard: 0.1

hair
  invisible: false
  bald: 0.27
  hairColor
    color: black, confidence: 0.83
    color: other, confidence: 0.64
    color: brown, confidence: 0.46
    color: blond, confidence: 0.4
    color: gray, confidence: 0.4
    color: red, confidence: 0.23

headPose
  yaw: 10.7, roll: 24.2, pitch: -8.0

smile: 0.729
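The rainbow wig is a nice example of why the hair colors come back as a list of guesses rather than a single answer. Here is a small sketch that keeps only the colors the API is reasonably sure about; the helper name and the 0.5 cutoff are my own assumptions, not part of the API.

```python
# The "hair" attribute from the Lemmy cosplay response.
LEMMY_HAIR = {
    "invisible": False,
    "bald": 0.27,
    "hairColor": [
        {"color": "black", "confidence": 0.83},
        {"color": "other", "confidence": 0.64},
        {"color": "brown", "confidence": 0.46},
        {"color": "blond", "confidence": 0.4},
        {"color": "gray", "confidence": 0.4},
        {"color": "red", "confidence": 0.23},
    ],
}


def likely_hair_colors(hair, min_confidence=0.5):
    """Return color names at or above min_confidence, most confident first."""
    if hair.get("invisible"):
        # No hair is visible in the image, so there is nothing to report.
        return []
    ranked = sorted(hair.get("hairColor", []),
                    key=lambda entry: entry["confidence"], reverse=True)
    return [entry["color"] for entry in ranked
            if entry["confidence"] >= min_confidence]


print(likely_hair_colors(LEMMY_HAIR))  # ['black', 'other']
```

For a rainbow wig, "black" and "other" is about as fair a summary as I could hope for.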

Needless to say, this was some of the most fun data entry I’ve ever done.

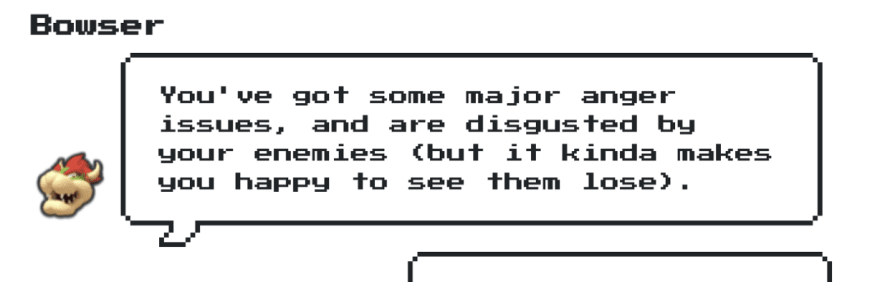

Step 2: Write Some Silly “Horoscopes” Based on the Data

(About as accurate as my horoscope this week.)

Once I obtained data on the moods of the cosplay images, I got creative and made fun short & sweet horoscopes based on them. This didn’t use any technology except for my own brain, but was a fun part of the project!

Step 3: Add some NES.css, and deploy to GitHub Pages!

Last, but not least (and perhaps one of my favorite takeaways from this project) I took some time to learn about and play with NES.css. It’s an adorable CSS framework that has the cutest 8-bit NES theme you’ve ever seen. Stephen had used it in his original site, and I’m so glad he did! Now I want to add it to everything I make. 😍

And now, you can view it live on GitHub Pages! If you’re looking for info on how to deploy your own React project to GitHub Pages, check out this great tutorial by Gurjot Singh Makkar.

This project may have been a silly one, but it was a fun way to play with the Face API, learn more about the capabilities of Azure Cognitive Services, and display the data in a creative way. Want to play with the Face API yourself? Try out the 5 Minute Quickstarts for free in the Microsoft docs! I’m looking forward to seeing your horoscopes featuring cosplay of Pokemon, Disney Princesses, and the Rick and Morty characters in the future 😉🔮

Thanks for reading this far! And special thanks to Suz Hinton, Christoffer Noring, Brandon Minnick, and Susan Ibach. Extra special thanks to Stephen Radachy for the original concept, as well as B.C.Rikko, Igor Guastalla de Lima, Trezy, and all the other amazing contributors to NES.css! 🍄⭐️🎮
