Forecast AWS charges using the Cost and Usage Report, AWS Glue DataBrew and Amazon Forecast

Martin Nanchev

Posted on January 31, 2023

Ever since I started working with AWS, I have wondered whether my cost estimates are correct. One problem is that cost estimation depends heavily on assumptions (such as no seasonality), which do not always hold. AWS added the ability to forecast monthly costs using AWS Cost Explorer. The problem is that sometimes you want to estimate the costs for a specific service, and when you try this in AWS Cost Explorer you get:

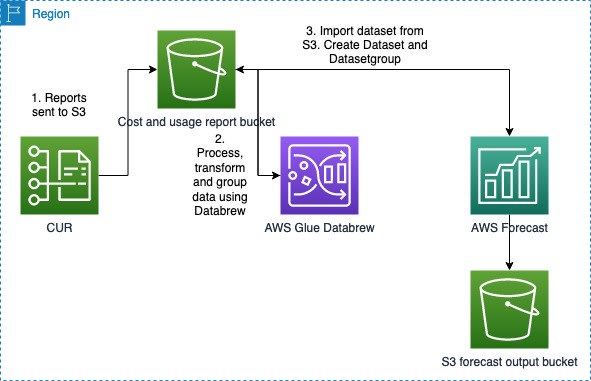

In such cases there is another option: use the Cost and Usage Report together with AWS Glue DataBrew and Amazon Forecast to forecast expenses for a specific service, based on 1 to n historical values at daily or hourly granularity. Below is a proposed architecture:

The architecture needs two buckets: one for storing the Cost and Usage Report and one for storing the forecast output. The Cost and Usage Report also has to be configured. Below is a sample configuration:
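As a rough sketch of what such a configuration contains, the helper below builds a report definition in the shape expected by the Cost and Usage Report `PutReportDefinition` API. The function name, report name, bucket and prefix are hypothetical; the hourly time unit and Parquet format are assumptions based on the hourly data and Parquet files mentioned in this post, not a copy of the original setup.

```python
def build_report_definition(report_name, bucket, prefix, region="us-east-1"):
    """Build a ReportDefinition payload for the CUR PutReportDefinition API.

    All argument values are placeholders chosen by the caller.
    """
    return {
        "ReportName": report_name,
        "TimeUnit": "HOURLY",              # assumption: hourly granularity
        "Format": "Parquet",               # assumption: Parquet output
        "Compression": "Parquet",          # Parquet format requires Parquet compression
        "AdditionalSchemaElements": ["RESOURCES"],  # include resource IDs
        "S3Bucket": bucket,
        "S3Prefix": prefix,
        "S3Region": region,
        "RefreshClosedReports": True,
        "ReportVersioning": "OVERWRITE_REPORT",
    }


definition = build_report_definition("cur-hourly", "my-cur-bucket", "cur")

# With AWS credentials in place, the payload could be submitted with boto3:
#   import boto3
#   boto3.client("cur", region_name="us-east-1").put_report_definition(
#       ReportDefinition=definition
#   )
```

The bucket also needs a policy that allows the billing service to write into it, which is not shown here.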

After the report and the required buckets are in place, we need to create a dataset in AWS Glue DataBrew and a recipe, which transforms the dataset using discrete transformation steps. The glue between the dataset and the recipe is a DataBrew project, which connects the two. Once the project is available, we can schedule a daily job that transforms and cleans the Cost and Usage Report so that it is ready for Amazon Forecast:

AWS Glue DataBrew looks like Excel macros on steroids: it automates the transformation and cleaning of large datasets. Example:
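To make the idea of the recipe concrete, here is a minimal plain-Python sketch of the kind of transformation it performs: keep the rows of one service, drop rows without a cost, and sum the cost per timestamp. This is not the DataBrew recipe itself. The column names (`line_item_usage_start_date`, `product_product_name`, `line_item_unblended_cost`) are assumed from the Cost and Usage Report schema, and the sample rows and service name are made up for illustration.

```python
import csv
import io
from collections import defaultdict


def aggregate_service_cost(rows, service):
    """Return (timestamp, service, cost) tuples for one service, sorted by time."""
    totals = defaultdict(float)
    for row in rows:
        if row["product_product_name"] != service:
            continue  # keep only the service we want to forecast
        cost = row["line_item_unblended_cost"]
        if cost in ("", None):
            continue  # cleaning step: skip rows without a cost value
        totals[row["line_item_usage_start_date"]] += float(cost)
    return [(ts, service, round(total, 6)) for ts, total in sorted(totals.items())]


# Made-up sample data in the assumed column layout.
SAMPLE = """line_item_usage_start_date,product_product_name,line_item_unblended_cost
2021-10-23 00:00:00,Amazon DocumentDB,0.10
2021-10-23 00:00:00,Amazon DocumentDB,0.15
2021-10-23 01:00:00,Amazon DocumentDB,0.25
2021-10-23 01:00:00,Amazon Simple Storage Service,0.02
"""

for record in aggregate_service_cost(csv.DictReader(io.StringIO(SAMPLE)), "Amazon DocumentDB"):
    print(record)
```

The three output fields (timestamp, item identifier, value) mirror the shape Amazon Forecast expects for a target time series.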

The results of the transformation job are saved to the output bucket as CSV and serve as input for the forecast. The data from the Parquet report is split into multiple parts:

With an S3 Select query we can check the columns and values in one of the CSV objects:
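Such a check could be scripted as well. The sketch below only builds the request parameters for the S3 `SelectObjectContent` operation; the bucket name and object key are hypothetical, and JSON output is chosen here (an assumption, not the author's setting) so that each returned record carries its column names.

```python
def build_select_request(bucket, key, limit=5):
    """Build parameters for S3 SelectObjectContent on a CSV object with a header row."""
    return {
        "Bucket": bucket,
        "Key": key,
        "ExpressionType": "SQL",
        "Expression": f"SELECT * FROM s3object s LIMIT {int(limit)}",
        # Treat the first line as a header so columns can be addressed by name.
        "InputSerialization": {"CSV": {"FileHeaderInfo": "USE"}},
        # JSON output shows column names next to the values.
        "OutputSerialization": {"JSON": {}},
    }


request = build_select_request("my-forecast-input-bucket", "output/part-00000.csv")

# With AWS credentials in place, the query could be run with boto3:
#   import boto3
#   response = boto3.client("s3").select_object_content(**request)
#   for event in response["Payload"]:
#       if "Records" in event:
#           print(event["Records"]["Payload"].decode("utf-8"))
```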

The Amazon Forecast resources are created using a custom resource, because there is no Forecast resource in CDK:

We create a dataset from the CSV files in the S3 output bucket. A dataset group is a container for datasets. After that we import the dataset into the dataset group, which takes about 40 minutes. The next step is to train the time series model (predictor), which is done using AutoML. AutoML selects the algorithm best suited to the dataset, for example DeepAR. The sliding window is 7 days, but it could be longer if you have more data. Training in Amazon Forecast takes about 2 hours and 40 minutes. As a last step we can create a forecast. The report will include the 0.1, 0.5 and 0.9 quantiles. Below is an example:
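The steps above map onto a handful of Amazon Forecast API calls. The following sketch builds the request payloads for `create_dataset`, `create_predictor` and `create_forecast` without calling AWS. The resource names and ARNs are placeholders, the three-column schema is the standard target time series layout for the CUSTOM domain, and mapping the 7-day window to a horizon of 7 * 24 hourly steps is an assumption made for illustration, not the author's confirmed setting.

```python
def dataset_request(name, frequency="H"):
    """Payload for forecast.create_dataset (target time series, CUSTOM domain)."""
    return {
        "DatasetName": name,
        "Domain": "CUSTOM",
        "DatasetType": "TARGET_TIME_SERIES",
        "DataFrequency": frequency,  # "H" = hourly, "D" = daily
        "Schema": {
            # Attribute order must match the column order of the CSV files.
            "Attributes": [
                {"AttributeName": "timestamp", "AttributeType": "timestamp"},
                {"AttributeName": "item_id", "AttributeType": "string"},
                {"AttributeName": "target_value", "AttributeType": "float"},
            ]
        },
    }


def predictor_request(name, dataset_group_arn, horizon, frequency="H"):
    """Payload for forecast.create_predictor with AutoML enabled."""
    return {
        "PredictorName": name,
        "ForecastHorizon": horizon,  # number of time steps to predict
        "PerformAutoML": True,       # let the service pick the algorithm
        "InputDataConfig": {"DatasetGroupArn": dataset_group_arn},
        "FeaturizationConfig": {"ForecastFrequency": frequency},
    }


def forecast_request(name, predictor_arn, quantiles=(0.1, 0.5, 0.9)):
    """Payload for forecast.create_forecast with the requested quantiles."""
    return {
        "ForecastName": name,
        "PredictorArn": predictor_arn,
        "ForecastTypes": [str(q) for q in quantiles],
    }


# Placeholder ARNs; the real ones are returned by the preceding API calls.
predictor = predictor_request(
    "cost_predictor", "arn:aws:forecast:placeholder-dataset-group", horizon=7 * 24
)
forecast = forecast_request("cost_forecast", "arn:aws:forecast:placeholder-predictor")
print(forecast["ForecastTypes"])
```

The 0.5 quantile is the median prediction, while the 0.1 and 0.9 quantiles bound a range that the actual cost is expected to fall into about 80% of the time.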

One important note that was not mentioned: the model above used costs per hour, but the same is possible for costs per day, which is what you would normally use in production. This is the reason why the costs for Amazon DocumentDB go down after midnight on 24.10.2021 and are about $6 for the day. This means that the costs will be around $180 for the month, with some degree of certainty.
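The monthly figure is simple arithmetic on the daily forecast. As a small illustration, the hourly values below are invented so that they add up to the roughly $6 per day mentioned above, and a 30-day month is assumed.

```python
def daily_from_hourly(hourly_costs):
    """Sum hourly forecast values (for example the 0.5 quantile) into a daily cost."""
    return sum(hourly_costs)


def project_monthly_cost(daily_cost, days_in_month=30):
    """Extrapolate a daily cost to a month, assuming every day costs the same."""
    return daily_cost * days_in_month


# Hypothetical hourly forecast: 24 hours at $0.25 each.
hourly = [0.25] * 24
daily = daily_from_hourly(hourly)
monthly = project_monthly_cost(daily)
print(daily, monthly)  # 6.0 180.0
```

The same extrapolation applied to the 0.1 and 0.9 quantiles gives a rough lower and upper bound for the month.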

You can also do this for different accounts by specifying the account ID:

Summary: If you want more granular cost forecasting, hourly or daily, for a specific service, then AWS Glue DataBrew and Amazon Forecast will do the job. I would suggest using a daily rather than an hourly forecast, but this article serves just as an example and an overview of what these services can do. The source code is available below.

Sources/Source code:

GitHub - mnanchev/aws_cdk_forecast_cost_and_usage: Forecasting costs using costs and usage report

Forecasting AWS spend using the AWS Cost and Usage Reports, AWS Glue DataBrew, and Amazon Forecast…
