Cross-Region Data Mirroring: 🚀 A Deep Dive into Cross-Region Replication Using Amazon S3 Batch Operations 🔄

Sarvar Nadaf

Posted on August 18, 2024

Hello There!!!

My name is Sarvar, and I am an Enterprise Architect currently working at Deloitte. With years of experience working on cutting-edge technologies, I have honed my expertise in Cloud Operations (Azure and AWS), Data Operations, Data Analytics, and DevOps. Throughout my career, I've worked with clients from all around the world, delivering excellent results and going above and beyond expectations. I am passionate about learning the latest and trending technologies.

In this article we look at Amazon S3 Batch Operations, and specifically at using it for cross-region replication: copying objects from an S3 bucket in one region to an S3 bucket in another region. We'll go over in detail how to configure an S3 Batch Operations job to achieve this, and we'll also discuss what Amazon S3 Batch Operations is, what you can do with it, and how it is priced. So let's get going.

What is Amazon S3 Batch Operations?

When it comes to handling enormous volumes of data in your storage warehouse (S3), Amazon S3 Batch Operations is an extremely effective tool. Suppose you had millions of boxes (objects) that you needed to move quickly between locations, relabel, re-secure, thaw out of cold storage, or run custom processing on. S3 Batch Operations can do all of this and more: you give it a list of objects and a single operation, and it performs that operation on every object in the list, tracks progress, and can write a completion report. It streamlines these operations without requiring you to write and run your own scripts against each object. Whether you're working with hundreds or millions of files, S3 Batch Operations is a powerful, user-friendly way to automate data management in Amazon S3, because it makes it simple to manage and organize large datasets with a few clicks.

What we can do with Amazon S3 Batch Operations:

Here is the list of tasks you can perform on objects in S3 buckets using Amazon S3 Batch Operations:

- Transfer objects across regions, buckets, or accounts.

- Modify object tags and other metadata.

- Replace object access control lists (ACLs).

- Restore archived objects from S3 Glacier.

- Invoke an AWS Lambda function on each object.

- Delete object tags (deleting the objects themselves requires invoking a Lambda function).
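Programmatically, each Batch Operations job runs exactly one operation, named by a key of the `Operation` parameter of the S3 Control `CreateJob` API. The dictionary below lists the keys for the tasks above that have a native operation, and builds the operation block for a copy job. The helper name `copy_operation` and the example bucket ARN are hypothetical.

```python
# Operation keys accepted by the S3 Control CreateJob API
# (s3control.create_job in boto3) for the tasks listed above.
BATCH_OPERATION_KEYS = {
    "copy objects": "S3PutObjectCopy",
    "replace object tags": "S3PutObjectTagging",
    "delete object tags": "S3DeleteObjectTagging",
    "replace ACL": "S3PutObjectAcl",
    "restore archived objects": "S3InitiateRestoreObject",
    "invoke Lambda function": "LambdaInvoke",
}


def copy_operation(destination_bucket_arn: str) -> dict:
    """Build the Operation block for a copy job (hypothetical helper).

    A job runs a single operation, so the dict has exactly one key.
    """
    return {
        BATCH_OPERATION_KEYS["copy objects"]: {
            # Every object in the manifest is copied into this bucket.
            "TargetResource": destination_bucket_arn,
        }
    }
```

For example, `copy_operation("arn:aws:s3:::my-destination-bucket")` produces the block a cross-region copy job needs.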

Let's start with the step-by-step implementation of cross-region replication using Amazon S3 Batch Operations:

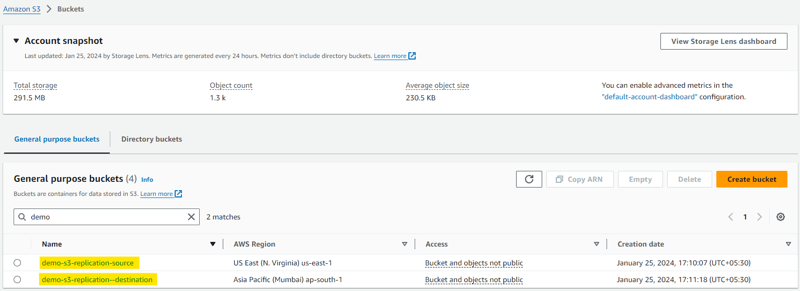

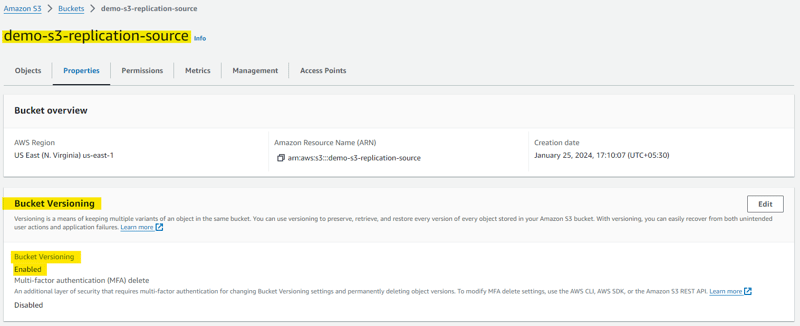

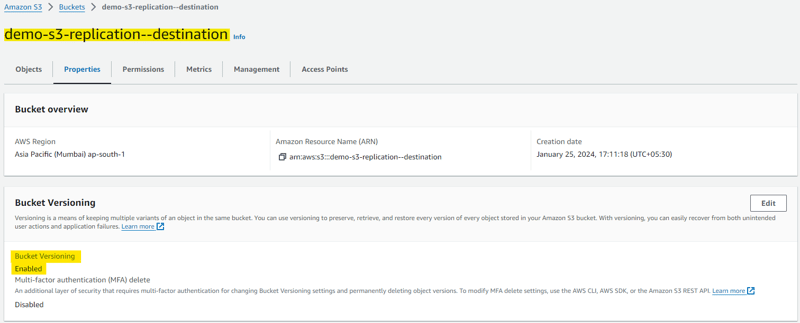

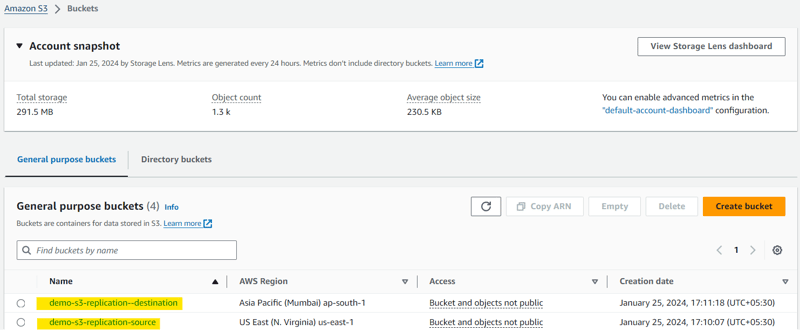

I created two buckets for this tutorial: the source bucket in the N. Virginia region (us-east-1) and the destination bucket in the Mumbai region (ap-south-1).
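If you prefer to create the two buckets from code instead of the console, here is a minimal boto3-style sketch. It assumes hypothetical bucket names and the region codes `us-east-1` (N. Virginia) and `ap-south-1` (Mumbai). One quirk worth knowing: `us-east-1` is the default location, so `CreateBucket` must be called there without a `LocationConstraint`, while every other region needs one.

```python
def create_bucket_kwargs(bucket_name: str, region: str) -> dict:
    """Arguments for S3 CreateBucket in the given region."""
    kwargs = {"Bucket": bucket_name}
    if region != "us-east-1":
        # Every region except us-east-1 must be named explicitly.
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return kwargs


def create_buckets(make_client, buckets: dict) -> None:
    """Create each bucket with an S3 client bound to that bucket's region.

    make_client(region) must return an S3 client, for example
    lambda r: boto3.client("s3", region_name=r).
    """
    for name, region in buckets.items():
        make_client(region).create_bucket(**create_bucket_kwargs(name, region))
```

In CloudShell you would call it as `create_buckets(lambda r: boto3.client("s3", region_name=r), {"my-source-bucket": "us-east-1", "my-destination-bucket": "ap-south-1"})`, substituting your own (globally unique) bucket names.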

Prerequisites (mandatory):

Step 1: Create IAM Role

In this step we create the IAM role that the batch job needs. First, create an IAM policy from the sample below, replacing SourceBucket, DestinationBucket, ManifestBucket, and ReportBucket with your own bucket names.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": [
        "s3:PutObject",
        "s3:PutObjectAcl",
        "s3:PutObjectTagging"
      ],
      "Effect": "Allow",
      "Resource": "arn:aws:s3:::DestinationBucket/*"
    },
    {
      "Action": [
        "s3:GetObject",
        "s3:GetObjectAcl",
        "s3:GetObjectTagging",
        "s3:ListBucket"
      ],
      "Effect": "Allow",
      "Resource": [
        "arn:aws:s3:::SourceBucket",
        "arn:aws:s3:::SourceBucket/*"
      ]
    },
    {
      "Effect": "Allow",
      "Action": [
        "s3:GetObject",
        "s3:GetObjectVersion"
      ],
      "Resource": [
        "arn:aws:s3:::ManifestBucket/*"
      ]
    },
    {
      "Effect": "Allow",
      "Action": [
        "s3:PutObject"
      ],
      "Resource": [
        "arn:aws:s3:::ReportBucket/*"
      ]
    }
  ]
}
```
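Rather than editing the placeholders by hand, you can generate the same policy document from your bucket names. The sketch below reproduces the four statements of the sample policy above; the function name and the example bucket names are hypothetical.

```python
import json


def batch_copy_policy(source: str, destination: str, manifest: str, report: str) -> dict:
    """Fill the sample copy-job policy with real bucket names."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # write the copies (and their ACLs and tags) to the destination
                "Action": ["s3:PutObject", "s3:PutObjectAcl", "s3:PutObjectTagging"],
                "Effect": "Allow",
                "Resource": f"arn:aws:s3:::{destination}/*",
            },
            {   # read source objects, their ACLs and tags, and list the bucket
                "Action": ["s3:GetObject", "s3:GetObjectAcl",
                           "s3:GetObjectTagging", "s3:ListBucket"],
                "Effect": "Allow",
                "Resource": [f"arn:aws:s3:::{source}", f"arn:aws:s3:::{source}/*"],
            },
            {   # read the manifest file
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:GetObjectVersion"],
                "Resource": [f"arn:aws:s3:::{manifest}/*"],
            },
            {   # write the completion report
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                "Resource": [f"arn:aws:s3:::{report}/*"],
            },
        ],
    }


# Hypothetical bucket names; paste the printed JSON into the IAM policy editor.
policy_json = json.dumps(
    batch_copy_policy("my-source-bucket", "my-destination-bucket",
                      "my-manifest-bucket", "my-report-bucket"),
    indent=2,
)
```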

Once you have created the IAM policy, create an IAM role, attach the policy you created above, and add the following trust relationship:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Service": "batchoperations.s3.amazonaws.com"
      },
      "Action": "sts:AssumeRole"
    }
  ]
}
```
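The same role can be created with boto3's IAM client. This sketch reuses the trust relationship above as a Python dict; the role and inline-policy names are hypothetical, and it attaches the permissions as an inline policy with `put_role_policy` rather than as a separate managed policy, which is a simplification of the console flow described here.

```python
import json

# Trust relationship from above: lets S3 Batch Operations assume the role.
TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "batchoperations.s3.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}


def create_batch_role(iam, role_name: str, permissions_policy: dict) -> str:
    """Create a role S3 Batch Operations can assume and return its ARN.

    iam is an IAM client, for example boto3.client("iam").
    """
    role = iam.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(TRUST_POLICY),
    )
    # Inline policy: one call instead of create_policy + attach_role_policy.
    iam.put_role_policy(
        RoleName=role_name,
        PolicyName=f"{role_name}-permissions",
        PolicyDocument=json.dumps(permissions_policy),
    )
    return role["Role"]["Arn"]
```

The returned ARN is what you select (or pass as `RoleArn`) when creating the Batch Operations job.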

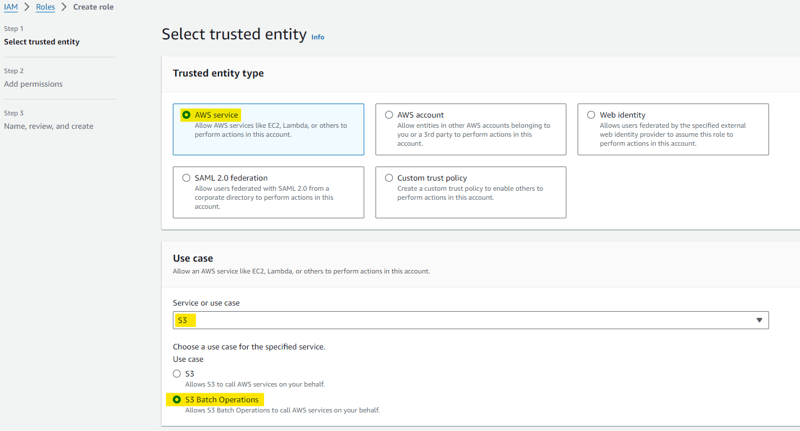

While creating the role, select the options shown in the screenshot. If you select these options, there is no need to update the trust relationship in the IAM role afterwards.

Step 2: S3 Bucket Overview

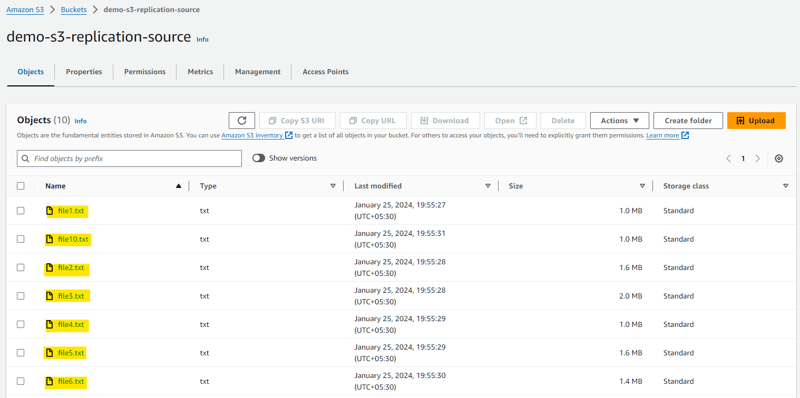

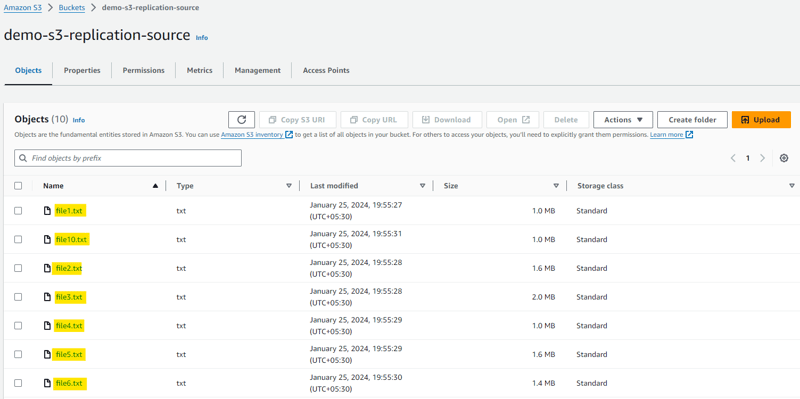

This step shows what's in each bucket along with the bucket details. If you're following along with this tutorial and don't have any test files yet, you can run a short script in AWS CloudShell to create dummy files between 1 and 2 MB in size and upload them to your source bucket.

As the naming convention shows, we created two buckets for this tutorial: a source bucket and a destination bucket.

As you can see below, I created and uploaded 10 files to the source bucket using a Python script.

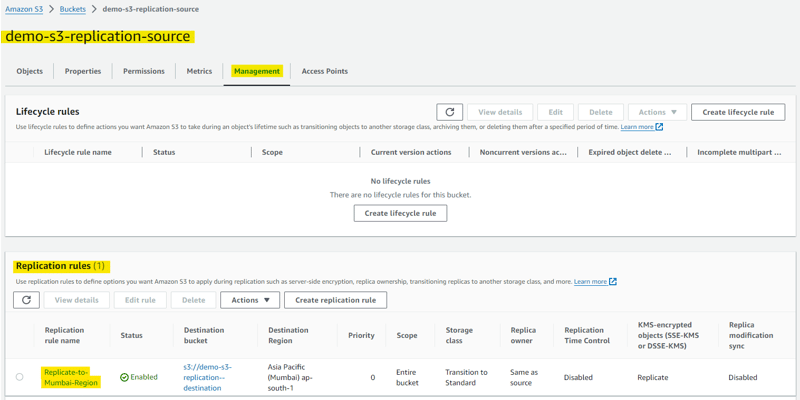

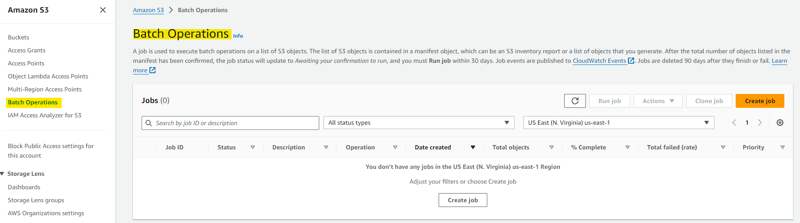

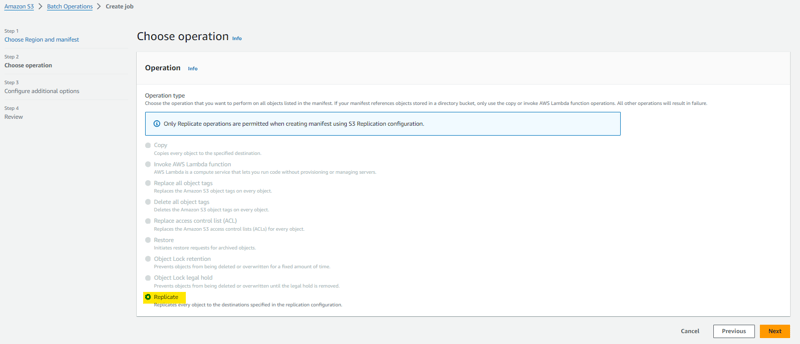

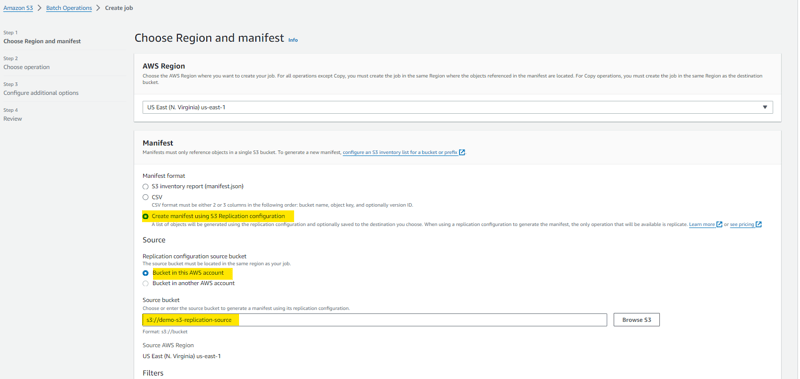
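The author's upload script isn't reproduced in this post, so here is a minimal stand-in, not the original script: it generates random payloads between 1 and 2 MB in memory and uploads them with an S3 client's `put_object`. The `dummy/` key prefix, the file count, and the bucket name are assumptions.

```python
import os
import random

MB = 1024 * 1024


def dummy_payloads(count: int, seed: int = 0):
    """Yield (key, bytes) pairs, each payload between 1 and 2 MB."""
    rng = random.Random(seed)  # seeded so the sizes are reproducible
    for i in range(1, count + 1):
        size = rng.randint(1 * MB, 2 * MB)
        yield f"dummy/file-{i:02d}.bin", os.urandom(size)  # random content


def upload_dummy_files(s3, bucket: str, count: int = 10) -> list:
    """Upload the dummy files with an S3 client and return their keys."""
    keys = []
    for key, body in dummy_payloads(count):
        s3.put_object(Bucket=bucket, Key=key, Body=body)
        keys.append(key)
    return keys
```

In CloudShell, `upload_dummy_files(boto3.client("s3"), "my-source-bucket")` would upload ten such files to a (hypothetical) source bucket.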

Step 3: Configure the S3 Batch Operations Job

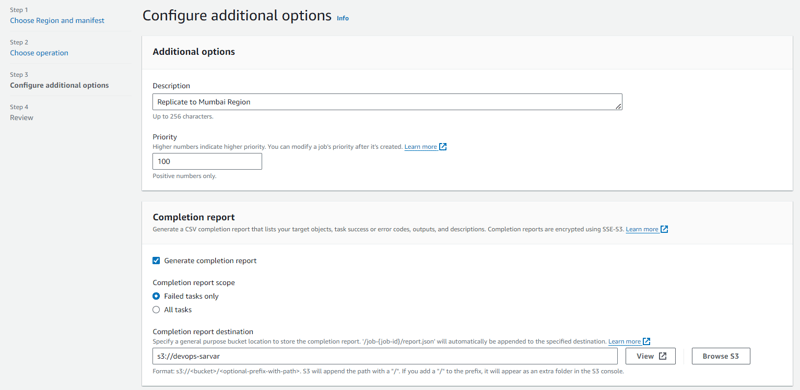

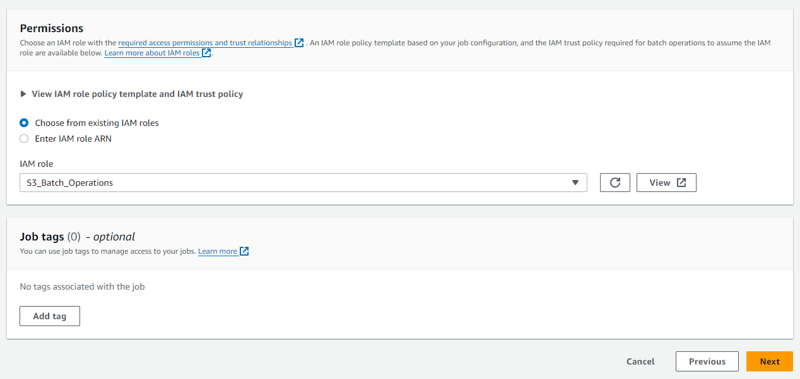

Follow the steps shown below to configure a Batch Operations job that copies the objects to the destination bucket.

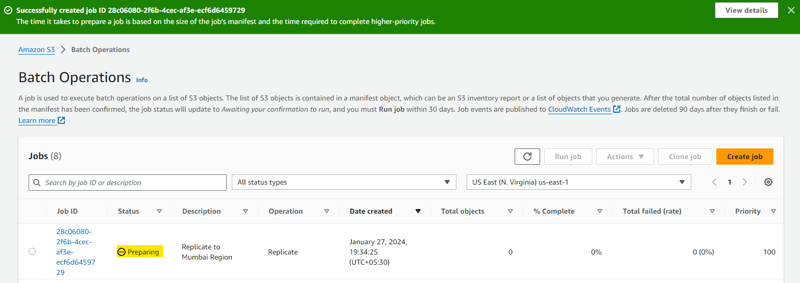

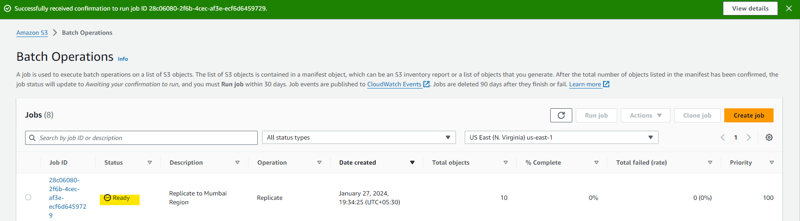

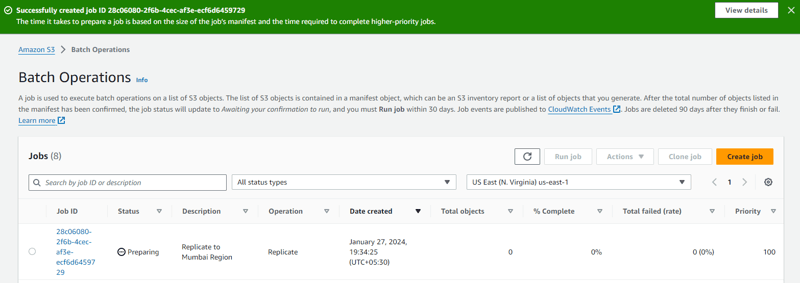
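One of the choices in this wizard is the manifest, the list of objects the job will act on. If you supply it as a CSV file (format `S3BatchOperations_CSV_20180820`), each row holds the bucket name and a URL-encoded object key, optionally followed by a version ID. The sketch below builds such a file from a list of keys; the function name is hypothetical, it uses Python's `urllib.parse.quote` for the encoding, and you would upload the result to your manifest bucket before creating the job.

```python
import csv
import io
from urllib.parse import quote


def build_manifest_csv(bucket: str, keys: list) -> str:
    """Return manifest CSV text with one 'bucket,key' row per object.

    Keys are URL-encoded: spaces and special characters are
    percent-escaped, while '/' separators are kept as-is.
    """
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    for key in keys:
        writer.writerow([bucket, quote(key, safe="/")])
    return buffer.getvalue()
```

For example, `build_manifest_csv("my-source-bucket", keys)` turns the keys returned by a listing or upload into the body of a `manifest.csv` object.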

After you click Next and review all the options, click Create. You will see that the Batch Operations job has been created successfully, as shown below.

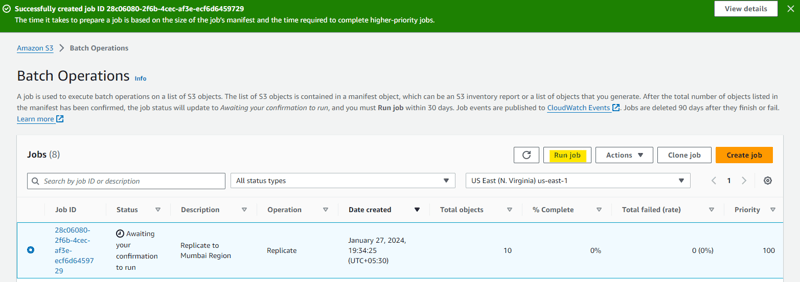

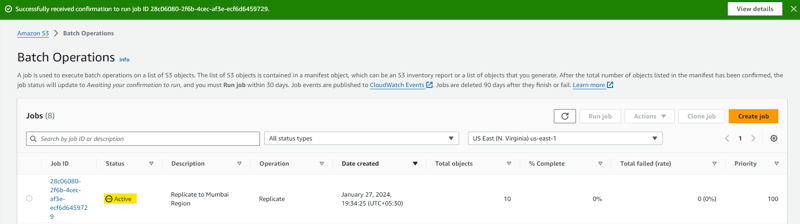
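The console wizard creates the job through the S3 Control `CreateJob` API, so the same copy job can also be created from code. This is a sketch under assumptions: the account ID, role ARN, bucket names, manifest key, and report prefix are hypothetical; the manifest is a CSV file already in S3; and `manifest_etag` is that object's ETag (for example from `head_object`). `ConfirmationRequired=True` mirrors the console behaviour of waiting for you to run the job.

```python
import uuid


def copy_job_request(account_id: str, role_arn: str, destination_bucket: str,
                     manifest_bucket: str, manifest_key: str, manifest_etag: str,
                     report_bucket: str) -> dict:
    """Keyword arguments for s3control.create_job running a copy job."""
    return {
        "AccountId": account_id,
        "ConfirmationRequired": True,  # job waits for you to run it
        "Operation": {
            "S3PutObjectCopy": {"TargetResource": f"arn:aws:s3:::{destination_bucket}"}
        },
        "Manifest": {
            "Spec": {"Format": "S3BatchOperations_CSV_20180820",
                     "Fields": ["Bucket", "Key"]},
            "Location": {
                "ObjectArn": f"arn:aws:s3:::{manifest_bucket}/{manifest_key}",
                "ETag": manifest_etag,
            },
        },
        "Report": {
            "Bucket": f"arn:aws:s3:::{report_bucket}",
            "Format": "Report_CSV_20180820",
            "Enabled": True,
            "Prefix": "batch-reports",   # hypothetical prefix
            "ReportScope": "AllTasks",   # or "FailedTasksOnly"
        },
        "Priority": 10,
        "RoleArn": role_arn,
        "ClientRequestToken": str(uuid.uuid4()),  # idempotency token
        "Description": "Cross-region copy",
    }
```

You would pass the result to an `s3control` client, for example `boto3.client("s3control", region_name="us-east-1").create_job(**request)["JobId"]`, assuming you create the job in the source bucket's region.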

As you can see, the job is in the preview stage; once it moves to the ready stage, you are all set to run the job.

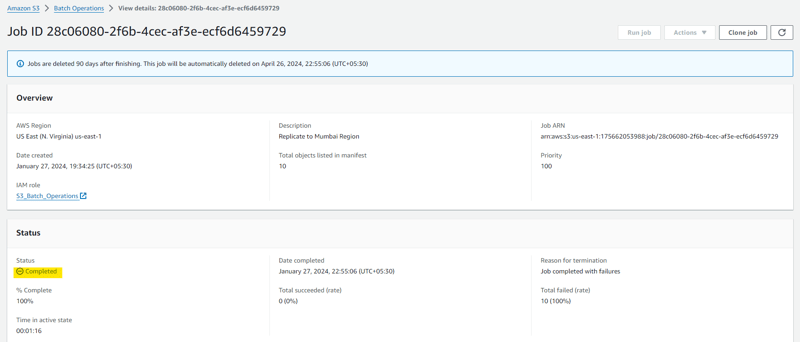

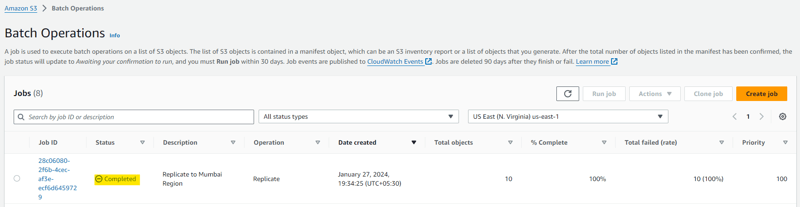

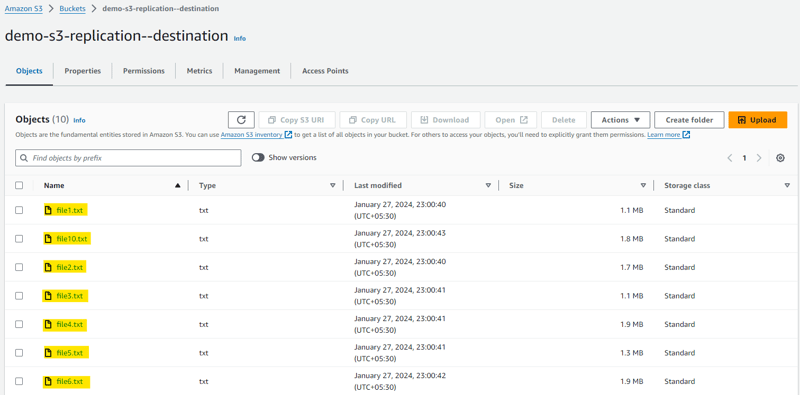

Finally, the replication Batch Operations job has completed successfully, and you can see its status in both snapshots.
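Running the job from code is a status update. After preparation, a job that requires confirmation sits in the `Suspended` state (the console's "awaiting confirmation"); `update_job_status` with `RequestedJobStatus="Ready"` releases it, and `describe_job` reports its status and progress. The sketch below does both; the polling interval and the set of terminal states it checks are assumptions.

```python
import time

TERMINAL_STATES = {"Complete", "Failed", "Cancelled"}


def wait_for(s3control, account_id, job_id, states, poll_seconds, sleep):
    """Poll describe_job until the job's status is in `states`."""
    while True:
        job = s3control.describe_job(AccountId=account_id, JobId=job_id)["Job"]
        if job["Status"] in states:
            return job
        sleep(poll_seconds)


def run_and_wait(s3control, account_id: str, job_id: str,
                 poll_seconds: float = 15.0, sleep=time.sleep) -> dict:
    """Confirm a job once it awaits confirmation, then wait for it to finish.

    Returns the final job description, including its ProgressSummary.
    """
    job = wait_for(s3control, account_id, job_id,
                   {"Suspended"} | TERMINAL_STATES, poll_seconds, sleep)
    if job["Status"] == "Suspended":
        s3control.update_job_status(
            AccountId=account_id, JobId=job_id, RequestedJobStatus="Ready"
        )
        job = wait_for(s3control, account_id, job_id,
                       TERMINAL_STATES, poll_seconds, sleep)
    return job
```

`job["ProgressSummary"]` then holds counts such as `NumberOfTasksSucceeded` and `NumberOfTasksFailed`, the same numbers the console shows on the job page.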

Step 4: Output Stage

Congratulations, you have successfully replicated data across regions. Compare the source bucket, where the original data is stored, with the destination bucket, which now holds the replicated copies.
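To check the result beyond the console, you can list both buckets and compare their keys. This sketch pages through `list_objects_v2` using continuation tokens and assumes one S3 client per bucket region; it compares keys only, not object contents.

```python
def list_keys(s3, bucket: str) -> set:
    """Return every object key in a bucket, following pagination."""
    keys, token = set(), None
    while True:
        kwargs = {"Bucket": bucket}
        if token:
            kwargs["ContinuationToken"] = token
        page = s3.list_objects_v2(**kwargs)
        keys.update(obj["Key"] for obj in page.get("Contents", []))
        if not page.get("IsTruncated"):
            return keys
        token = page["NextContinuationToken"]


def missing_in_destination(src_s3, src_bucket: str, dst_s3, dst_bucket: str) -> set:
    """Keys present in the source bucket but not in the destination."""
    return list_keys(src_s3, src_bucket) - list_keys(dst_s3, dst_bucket)
```

An empty set means every source key now exists in the destination. If your manifest or completion report is stored in the source bucket, those keys will show up as "missing", which is expected.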

Pricing for S3 Batch Operations:

There are two main charges for S3 Batch Operations. First, there is a charge, currently $0.25 USD, for every job you submit. This fee applies regardless of how many objects the job processes or which operation it performs. Second, there is a per-object operation charge based on the number of objects your job acts on; at the time of writing, it is $1.00 USD per million object operations. These rates apply across the supported operations, including copying objects, modifying metadata, changing access control lists, and restoring archived objects from S3 Glacier. The underlying S3 requests the job makes (such as the PUT requests for copies) and any cross-region data transfer are billed separately at standard S3 rates.
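Putting those two rates into a formula, the Batch Operations fee is 0.25 × jobs + 1.00 × objects / 1,000,000 USD. The helper below applies the rates quoted above, which may change over time, and deliberately leaves out the separately billed request and data-transfer charges; for the 10-file example in this tutorial it comes to about $0.25.

```python
JOB_FEE_USD = 0.25               # per job, as quoted above
PER_MILLION_OBJECTS_USD = 1.00   # per million object operations


def batch_operations_fee(objects: int, jobs: int = 1) -> float:
    """Estimate S3 Batch Operations fees only (not request or transfer charges)."""
    return jobs * JOB_FEE_USD + objects * PER_MILLION_OBJECTS_USD / 1_000_000
```

For a larger job, `batch_operations_fee(5_000_000)` gives 5.25, showing that the per-object charge only dominates once a job reaches millions of objects.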

Conclusion: Amazon S3 Batch Operations makes handling large datasets easy and streamlines data management in Amazon S3. It simplifies operations such as copying objects and changing metadata at scale with a few clicks or a single API request. As a managed feature, it offers scalability, job prioritization, progress monitoring, and detailed completion reports for storage operations on billions of objects, removing the need to build custom applications for the task.

— — — — — — — —

Here is the End!

Thank you for taking the time to read my article. I hope you found this article informative and helpful. As I continue to explore the latest developments in technology, I look forward to sharing my insights with you. Stay tuned for more articles like this one that break down complex concepts and make them easier to understand.

Remember, learning is a lifelong journey, and it’s important to keep up with the latest trends and developments to stay ahead of the curve. Thank you again for reading, and I hope to see you in the next article!

Happy Learning!
