Jacklyn Biggin

Posted on August 4, 2023

Autocode is a developer platform designed to make it easy to build APIs, automations, and bots. We’ve built a community of over 30,000 developers on Discord. This community is at the heart of what we do, but moderating at such a scale can be challenging.

We’ve learned a lot about Discord server moderation over the last two years, and in this article, I’m going to share our five best practices to follow when moderating a Discord server.

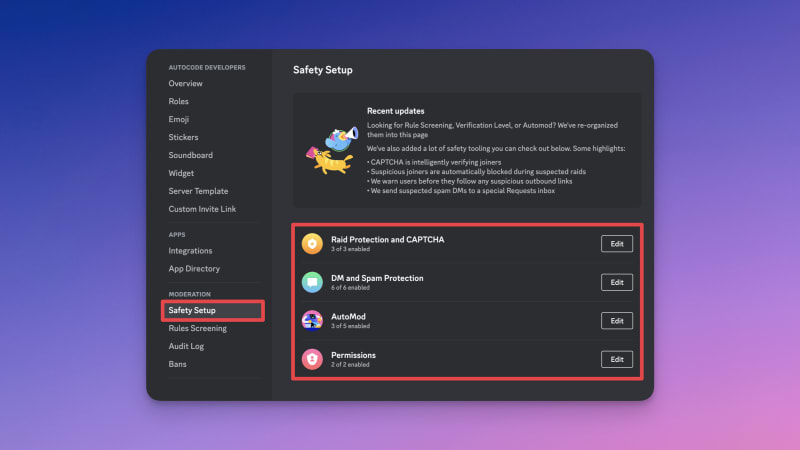

1: Use Discord’s built-in safety tools

Discord provides a surprisingly robust set of moderation tools out of the box. While they’re often not powerful enough on their own - especially for larger servers - they can provide a good first line of defense against bad actors.

The features that we’ve found most powerful are:

- Raid Protection, which can protect your server against floods of compromised accounts joining to spam it with malicious links.

- Verification Level, which can be found under the DM and Spam Protection setting. We have this set to medium, meaning that accounts which have been registered on Discord for less than five minutes will be unable to talk on our server.

- AutoMod features, especially the built-in filters you can enable under the Commonly Flagged Words option. We found that enabling those filters and the Block Spam Content setting blocked around half of the spam content posted in our server.

- Explicit image filter, available under the AutoMod settings, because although it does produce false positives sometimes, people can be wild on the internet.
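Discord enforces the Verification Level itself, but if you later want a similar account-age check inside your own bot, the creation time can be read from the user ID. The sketch below assumes Discord’s documented snowflake layout (milliseconds since the first second of 2015 stored above the lowest 22 bits); the function names and the five-minute default are illustrative rather than anything we run in production.

```python
from datetime import datetime, timedelta, timezone

# Discord snowflakes count milliseconds from 2015-01-01T00:00:00Z.
DISCORD_EPOCH_MS = 1420070400000
UNIX_EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def account_created_at(user_id: int) -> datetime:
    """Return the UTC creation time encoded in a Discord user ID."""
    timestamp_ms = (user_id >> 22) + DISCORD_EPOCH_MS
    return UNIX_EPOCH + timedelta(milliseconds=timestamp_ms)


def is_too_new(
    user_id: int,
    now: datetime,
    min_age: timedelta = timedelta(minutes=5),  # mirrors the medium level
) -> bool:
    """True if the account is younger than min_age at time `now`."""
    return now - account_created_at(user_id) < min_age
```

Passing `now` in explicitly, instead of reading the clock inside the function, keeps the check easy to test.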

However, we have found that the built-in features alone aren’t enough for a server of our size. For example, while you can set up AutoMod rules using Regex, you’re limited to a maximum of ten filters. That brings us on to our next point…

2: Build your own moderation tools

There’s no one-size-fits-all solution to Discord moderation, so building your own tools that fit into your community can be a good next step once you outgrow Discord’s built-in features. If you’re curious about how you can start building a moderation bot, check out our how to build a Discord moderation bot guide!

One issue that we ran into was that people were using non-English alphabets to bypass our AutoMod rules. To counter this, we added a function that transliterates the content of every message into a standard English alphabet, and then runs the result through a bunch of regular expressions. The regular expressions look for content such as malicious links, free Nitro scams, and other terms that indicate a message was posted by a bad actor. If there’s a match, we automatically delete the message and mute the user. The content that caused the moderation action is automatically logged, and we use the logs to tweak the filters to reduce false positives.
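To make the idea concrete, here is a minimal sketch of a transliterate-then-match filter. It is not our production code: the lookalike table, the patterns, and the function names are all hypothetical, and a real table would need far more entries. It leans on Unicode NFKD normalization from the standard library to fold fullwidth and accented characters before the regular expressions run.

```python
import re
import unicodedata
from typing import Optional

# Hypothetical, deliberately tiny map of lookalike letters to ASCII.
HOMOGLYPHS = str.maketrans({
    "\u0430": "a",  # Cyrillic a
    "\u0435": "e",  # Cyrillic ie
    "\u043e": "o",  # Cyrillic o
    "\u0440": "p",  # Cyrillic er
    "\u0441": "c",  # Cyrillic es
    "\u0456": "i",  # Cyrillic i
    "\u0455": "s",  # Cyrillic dze
    "\u03bf": "o",  # Greek omicron
})

# Hypothetical patterns; tune these against your own moderation logs.
BLOCKED_PATTERNS = [
    re.compile(r"free\s+nitro"),
    re.compile(r"steam\s*gift"),
]


def normalize(text: str) -> str:
    """Fold a message towards plain lowercase ASCII before matching."""
    decomposed = unicodedata.normalize("NFKD", text)
    # Drop combining marks (accents) left behind by the decomposition.
    stripped = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    return stripped.lower().translate(HOMOGLYPHS)


def find_violation(message: str) -> Optional[str]:
    """Return the first matching pattern, or None if the message is clean."""
    normalized = normalize(message)
    for pattern in BLOCKED_PATTERNS:
        if pattern.search(normalized):
            return pattern.pattern
    return None
```

Returning the pattern that matched, rather than a plain boolean, means the log entry can record which rule fired, which is what makes tuning for false positives practical.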

Building these custom tools in-house also helps us cater them to our community’s needs. For example, we normally block all Discord server invite links from being posted in our server, as we find them to be a magnet for spam. However, we once wanted to do some market research on what kind of servers our users were part of.

Because we custom-built our moderation tools, we were able to modify them to exempt the channel we used for that research from the filter. However, we then ran into the issue that spambots were posting links to some spicy servers in that channel. So, we modified our filter again to automatically scan the titles of any Discord invite links posted, and to delete messages containing anything age-restricted or indicative of spam. Spambots defeated! 🎉
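A rough sketch of that invite check might look like the following. The blocked terms are made up for illustration, and `lookup_title` is a hypothetical callable that you would back with whatever invite lookup your bot framework offers; it is injected here so the example runs without network access.

```python
import re
from typing import Callable, List, Optional

# Matches discord.gg/<code> and discord.com/invite/<code> style links.
INVITE_RE = re.compile(
    r"(?:discord\.gg|discord(?:app)?\.com/invite)/([A-Za-z0-9-]+)",
    re.IGNORECASE,
)

# Hypothetical terms that mark a server title as age-restricted or spammy.
BLOCKED_TITLE_TERMS = ("18+", "nsfw", "free nitro")


def extract_invite_codes(content: str) -> List[str]:
    """Return every invite code found in a message."""
    return INVITE_RE.findall(content)


def should_delete(
    content: str,
    lookup_title: Callable[[str], Optional[str]],  # hypothetical resolver
) -> bool:
    """True if any invite in the message points at a blocked server title."""
    for code in extract_invite_codes(content):
        title = lookup_title(code)
        if title is None:
            # Assumption: unresolvable (e.g. expired) invites are ignored.
            continue
        lowered = title.lower()
        if any(term in lowered for term in BLOCKED_TITLE_TERMS):
            return True
    return False
```

Whether unresolvable invites should be ignored or deleted is a judgment call; ignoring them is the more forgiving choice.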

3: Empower your community members with custom tools

Empowering community members can be a vital part of your moderation strategy. How this looks depends on your community. Here at Autocode, we have a small team, so we empowered trusted community members to mute bad actors. All mutes, along with metadata relating to their cause (such as message content), are logged, so our team can review them if something doesn’t seem quite right. We use muting, rather than handing out the ability to kick or ban users, as it is less destructive if a bad actor does manage to gain access to it.
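As an illustration only, the permission check and the log entry could be modeled like this. The class and field names are hypothetical, and the call that actually mutes the user on Discord is left out, since it depends on your bot framework.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List, Set


@dataclass(frozen=True)
class MuteRecord:
    """One reviewable log entry per mute (hypothetical shape)."""
    moderator_id: int
    target_id: int
    reason: str
    message_content: str
    created_at: datetime


class MuteLog:
    """Lets trusted members mute, and records every mute for staff review."""

    def __init__(self, trusted_ids: Set[int]) -> None:
        self.trusted_ids = set(trusted_ids)
        self.records: List[MuteRecord] = []

    def mute(self, moderator_id: int, target_id: int,
             reason: str, message_content: str) -> MuteRecord:
        if moderator_id not in self.trusted_ids:
            raise PermissionError("only trusted members may mute")
        record = MuteRecord(
            moderator_id=moderator_id,
            target_id=target_id,
            reason=reason,
            message_content=message_content,
            created_at=datetime.now(timezone.utc),
        )
        # The actual mute on Discord would be issued here.
        self.records.append(record)
        return record
```

Because each record keeps the offending message content alongside who muted whom, staff can review a disputed mute without asking anyone to recall what happened.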

Other teams might find that involving community members with moderation looks different. Sometimes, something as simple as providing tools for users to report malicious activity to your staff team can be enough. On Autocode’s community server, we provide this option through a slash command.

4: Build only what your community needs in the moment

Every community has different needs, and those needs will change as your community grows. Therefore, it’s important not to start building processes before they’re needed, and to always ask yourself “why?” whenever you add another tool to your moderation toolkit. Just because a feature is available doesn’t mean you need to - or necessarily should - use it.

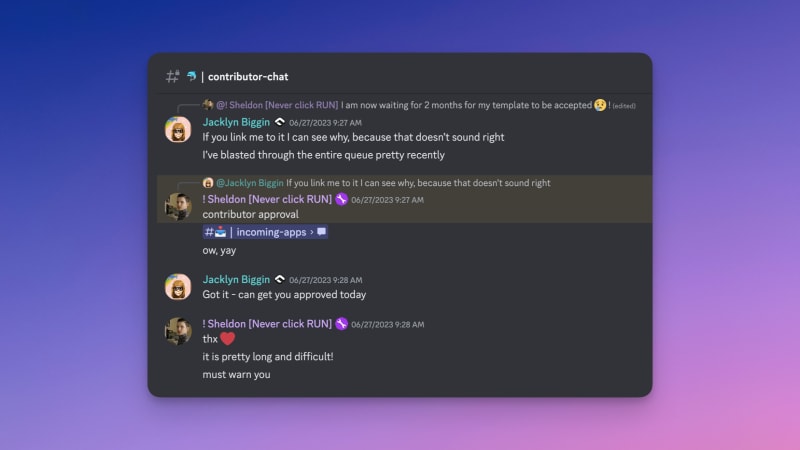

The people who know best what you need are your community members, especially the most active ones. They’re probably seeing patterns in spam or malicious content slipping through the cracks that you’re missing, or they’re able to identify more covert behavior from bad actors, such as stirring up drama via direct messages. Create a way to communicate directly with these members - we do this by automatically assigning a “Contributor” role to anyone who is active within Autocode’s ecosystem, and asking them questions via a private channel.

5: Build trust by being present

Building and utilizing moderation tools can be useful, but ultimately, the most important part of any moderation strategy is building trust. Trust building can have a ton of benefits, including:

- Making it possible to catch issues within your community earlier, as community members proactively reach out and give you a heads up

- Reducing the need for hands-on moderation from your team, as the community will partially moderate itself

- Providing opportunities to involve key community members beyond just moderation - we’ve been able to involve them in lots of things, from freelance contracts to involvement in content

The easiest way to build trust is to simply be present within your community. Once you become a recognizable face, your community members will get used to communicating with you. Beyond that, it’s important to be consistent - don’t give certain people unwarranted special treatment, and apply the same standards whenever you do have to kick or ban anyone.

We take things a step further by opening up our Discord audit log to the most active members of our community. This provides a level of transparency, and allows them to verify that we’re not doing anything shady in terms of banning people or deleting messages. In fact, we’ve historically opened up our audit log to everyone when we made controversial decisions that some community members disagreed with. This helped put out fires - it’s difficult to be angry at people when you can see that they’re telling the truth.

Next steps

I hope you learned something new today! If you have any questions, please don’t hesitate to reach out. And, if you’re ready to jump into building your first Discord bot to help moderate your community, our how to build a Discord bot guide will get you up and running in just a few minutes.

Good luck with growing your community!
