Špela Giacomelli

Posted on February 14, 2024

In a world of ever-changing technology, testing is an integral part of writing robust and reliable software. Tests verify that your code behaves as expected, make it easier to maintain and refactor code, and serve as documentation for your code.

There are two widely used testing frameworks for testing Django applications:

- Django's built-in test framework, built on Python's unittest

- Pytest, combined with pytest-django

In this article, we will see how both work.

Let's get started!

What Will We Test?

All the tests we'll look at exercise the same code: a book list endpoint.

The Book model has title, author, and date_published fields. Note that the default ordering is set by date_published.

# models.py
from django.db import models


class Book(models.Model):
    title = models.CharField(max_length=20)
    author = models.ForeignKey(Author, on_delete=models.CASCADE, blank=True, null=True)
    date_published = models.DateField(null=True, blank=True)

    class Meta:
        ordering = ['date_published']

The view is just a simple ListView:

# views.py
from django.views.generic import ListView

from .models import Book


class BookListView(ListView):
    model = Book

Since the test examples will test the endpoint, we also need the URL:

# urls.py
from django.urls import path

from . import views

urlpatterns = [
    path("books/", views.BookListView.as_view(), name="book_list"),
]

Now for the tests.

Unittest for Python

Unittest is Python's built-in testing framework. Django extends it with some of its own functionality.

Initially, you might be confused about which methods belong to unittest and which are Django's extensions.

Unittest provides:

- TestCase, serving as a base class.

- setUp() and tearDown() methods for the code you want to execute before or after each test method. setUpClass() and tearDownClass() run once per whole TestCase class.

- Multiple types of assertions, such as assertEqual, assertAlmostEqual, and assertIsInstance.

The core part of the Django testing framework is TestCase.

Since it subclasses unittest.TestCase, TestCase has all the same functionality but also builds on top of it, with:

- setUpTestData class method: This creates data once per TestCase (unlike setUp, which creates test data once per test). Using setUpTestData can significantly speed up your tests.

- Test data loaded via fixtures.

- Django-specific assertions, such as assertQuerySetEqual, assertFormError, and assertContains.

- Temporary override settings you can set up during a test run.

Django's testing framework also provides a Client that doesn't rely on TestCase and so can be used with pytest. Client serves as a dummy browser, allowing users to create GET and POST requests.

How Tests Are Built with Unittest

Unittest supports test discovery, provided you follow these rules:

- The test should be in a file whose name starts with test.

- The test must be within a class that subclasses unittest.TestCase (that includes django.test.TestCase). Including 'Test' in the class name is not mandatory.

- The name of the test method must start with test (by convention, test_).
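The method-name rule can be checked directly with unittest's own loader. The sketch below uses a throwaway `BookTitleTest` class (an illustrative name, not part of the bookstore project) and asks `TestLoader.getTestCaseNames` which methods it would collect.

```python
import unittest


class BookTitleTest(unittest.TestCase):
    def test_title_is_capitalized(self):
        self.assertEqual("emma".capitalize(), "Emma")

    def testTitleLength(self):
        # Collected too: the loader's default prefix is "test", underscore optional
        self.assertEqual(len("Emma"), 4)

    def check_title_is_str(self):
        # Not collected: the name does not start with "test"
        self.assertIsInstance("Emma", str)


loader = unittest.TestLoader()
collected = list(loader.getTestCaseNames(BookTitleTest))
print(collected)
```

The `check_title_is_str` method is silently skipped, which is a common reason for a test that "passes" without ever running.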

Let's see what tests written with unittest look like:

# tests.py
from datetime import date

from django.test import TestCase
from django.urls import reverse

from .models import Book, Author


class BookListTest(TestCase):
    @classmethod
    def setUpTestData(cls):
        author = Author.objects.create(first_name='Jane', last_name='Austen')
        cls.second_book = Book.objects.create(title='Emma', author=author, date_published=date(1815, 1, 1))
        cls.first_book = Book.objects.create(title='Pride and Prejudice', author=author, date_published=date(1813, 1, 1))

    def test_book_list_returns_all_books(self):
        response = self.client.get(reverse('book_list'))
        response_book_list = response.context['book_list']

        self.assertEqual(response.status_code, 200)
        self.assertIn(self.first_book, response_book_list)
        self.assertIn(self.second_book, response_book_list)
        self.assertEqual(len(response_book_list), 2)

    def test_book_list_ordered_by_date_published(self):
        response = self.client.get(reverse('book_list'))
        books = list(response.context['book_list'])

        self.assertEqual(response.status_code, 200)
        self.assertQuerySetEqual(books, [self.first_book, self.second_book])

You must always put your test functions inside a class that extends a TestCase class. Although it's not required, we've included 'Test' in our class name so it's easily recognizable as a test class at first glance. Notice that the TestCase is imported from Django, not unittest — you want access to additional Django functionality.

What's Happening Here?

This TestCase aims to check whether the book_list endpoint works correctly. There are two tests — one checks that all Book objects in the database are included in the response as book_list, and the second checks that the books are listed by ascending publishing date.

Although the tests appear similar, they evaluate two distinct functionalities, which should not be combined into a single test.

Since both tests need the same data in the database, we use the class method setUpTestData for preparing data — both tests will have access to it without repeating the process twice.

Unlike the two Book objects, the Author object is not needed outside the setup method, so we don't set it as a class attribute. I switched the order of the two books (the later one is created first) to ensure that the correct order isn't accidental.

The two tests look very similar — they both make a GET request to the same URL and extract the book_list from context. Methods inside the TestCase class automatically have access to client, which is used to make a request.

Up to this point, both tests did the same thing — but the assertions differ.

The first test asserts that both books are in the response context, using assertIn. It also checks that response.context['book_list'] contains exactly two items. The second test uses Django's assertQuerySetEqual. This assertion checks the ordering by default, so it does exactly what we need.

Both tests also check the status_code, so you know immediately if the URL doesn't work.
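The split between "is it there?" and "is it in the right order?" can also be shown with plain unittest assertions. This is a rough analogue rather than the Django tests above: strings stand in for Book objects, and `OrderingAssertionsTest` is an illustrative name.

```python
import unittest


class OrderingAssertionsTest(unittest.TestCase):
    # Stand-in for the list the view returns, oldest book first
    returned = ["Pride and Prejudice", "Emma"]

    def test_membership_ignores_order(self):
        self.assertIn("Emma", self.returned)
        # assertCountEqual compares contents regardless of order
        self.assertCountEqual(self.returned, ["Emma", "Pride and Prejudice"])

    def test_equality_checks_order(self):
        # assertEqual on lists is order-sensitive
        self.assertEqual(self.returned, ["Pride and Prejudice", "Emma"])
        self.assertNotEqual(self.returned, ["Emma", "Pride and Prejudice"])


suite = unittest.defaultTestLoader.loadTestsFromTestCase(OrderingAssertionsTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

Keeping the two checks in separate tests means a wrong ordering fails only the ordering test, which narrows down the cause.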

Running Our Test with Unittest

We can run a test with Django's test command:

(venv)$ python manage.py test

This is the output:

Found 2 test(s).

Creating test database for alias 'default'...

System check identified no issues (0 silenced).

..

----------------------------------------------------------------------

Ran 2 tests in 0.089s

OK

Destroying test database for alias 'default'...

What if we run a failing test? For example, if the order of returned objects doesn't match the desired result:

(venv)$ python manage.py test

Found 2 test(s).

Creating test database for alias 'default'...

System check identified no issues (0 silenced).

F

======================================================================

FAIL: test_book_order (bookstore.test.BookListTest)

----------------------------------------------------------------------

Traceback (most recent call last):

File "/bookstore/test.py", line 17, in test_book_order

self.assertEqual(books, [self.second_book, self.first_book])

AssertionError: Lists differ: [<Book: Pride and Prejudice>, <Book: Emma>] != [<Book: Emma>, <Book: Pride and Prejudice>]

First differing element 0:

<Book: Pride and Prejudice>

<Book: Emma>

- [<Book: Pride and Prejudice>, <Book: Emma>]

+ [<Book: Emma>, <Book: Pride and Prejudice>]

----------------------------------------------------------------------

Ran 2 tests in 0.085s

FAILED (failures=1)

Destroying test database for alias 'default'...

As you can see, the output is quite informative — you learn which test failed, in which line, and why. The test failed because the lists differ; you can even see both lists, so you can quickly determine where the problem lies.

Another essential thing to notice here is that only the second test failed — figuring out what's wrong can be more challenging if there's a single test.

Pytest for Python

Pytest is an excellent alternative to unittest. Even though it isn't part of Python's standard library, it is often considered more Pythonic than unittest. It doesn't require a test class, has less boilerplate code, and uses a plain assert statement. Pytest has a rich plugin ecosystem, including a specific Django plugin, pytest-django.

The pytest approach is quite different from unittest.

Among other things:

- You can write function-based tests without the need for classes.

- It allows you to create fixtures: reusable components for setup and teardown. Fixtures can have different scopes (e.g., module), allowing you to optimize performance while keeping a test isolated.

- Parametrization of the test function means it can be efficiently run with different arguments.

- It has a single, plain assert statement.
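As a small illustration of these points, the sketch below combines a function-based test, a module-scoped fixture, parametrization, and plain `assert`. It needs only pytest, not Django. The `title_fits` helper and the `austen_titles` fixture are hypothetical names made up for this example; the limit of 20 mirrors `max_length=20` on `Book.title`.

```python
import pytest

MAX_TITLE_LENGTH = 20  # mirrors max_length=20 on Book.title


def title_fits(title):
    """Hypothetical helper: does the title fit in the Book.title column?"""
    return len(title) <= MAX_TITLE_LENGTH


@pytest.fixture(scope="module")
def austen_titles():
    # Module scope: built once per test module rather than once per test
    return ["Emma", "Pride and Prejudice"]


@pytest.mark.parametrize(
    "title, expected",
    [
        ("Emma", True),
        ("Pride and Prejudice", True),
        ("The Mysteries of Udolpho", False),
    ],
)
def test_title_fits(title, expected):
    # pytest runs this function once per (title, expected) pair
    assert title_fits(title) == expected


def test_all_austen_titles_fit(austen_titles):
    # The fixture is injected by naming it as an argument
    assert all(title_fits(title) for title in austen_titles)
```

Saved in a file that matches pytest's discovery rules, this is collected as four tests: three from the parametrized function and one that uses the fixture.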

Pytest and Django

pytest-django serves as an adapter between Pytest and Django. Testing Django with pytest without pytest-django is technically possible, but neither practical nor recommended.

Along with handling Django's settings, static files, and templates, it brings some Django test tools to pytest, but also adds its own:

- A @pytest.mark.django_db decorator that enables database access for a specific test.

- A client that passes Django's Client in the form of a fixture.

- The admin_client fixture returns an authenticated Client with admin access.

- A settings fixture that allows you to temporarily override Django settings during a test.

- The same special Django assertions as Django TestCase.

How Tests Are Built with Pytest

Like unittest, pytest also supports test discovery.

These are the out-of-the-box rules (as you'll see below, they can easily be changed):

- The files must be named test_*.py or *_test.py.

- There's no need for test classes, but if you want to use them, the name should have a Test prefix.

- The function names need to be prefixed with test.

Let's see what tests written with pytest look like:

from datetime import date

import pytest
from django.urls import reverse

from bookstore.models import Author, Book


@pytest.mark.django_db
def test_book_list_returns_all_books(client):
    author = Author.objects.create(first_name='Jane', last_name='Austen')
    second_book = Book.objects.create(title='Emma', author=author, date_published=date(1815, 1, 1))
    first_book = Book.objects.create(title='Pride and Prejudice', author=author, date_published=date(1813, 1, 1))

    response = client.get(reverse('book_list'))
    books = list(response.context['book_list'])

    assert response.status_code == 200
    assert first_book in books
    assert second_book in books
    assert len(books) == 2


@pytest.mark.django_db
def test_book_list_ordered_by_date_published(client):
    author = Author.objects.create(first_name='Jane', last_name='Austen')
    second_book = Book.objects.create(title='Emma', author=author, date_published=date(1815, 1, 1))
    first_book = Book.objects.create(title='Pride and Prejudice', author=author, date_published=date(1813, 1, 1))

    response = client.get(reverse('book_list'))
    books = list(response.context['book_list'])

    assert response.status_code == 200
    assert books == [first_book, second_book]

What's Happening Here?

These two tests assess the same functionality as the previous two unittest tests — the first test ensures all the books in the database are returned, and the second ensures the books are ordered by publication date. How do they differ?

- They're entirely standalone — no class or joint data preparation is needed. You could put them in two different files, and nothing would change.

- Since they need database access, they require a @pytest.mark.django_db decorator that comes from pytest-django. If there's no DB access required, skip the decorator.

- Django's client needs to be passed as a fixture since you don't automatically have access to it. Again, this comes from pytest-django.

- Instead of different assert statements, a plain assert is used.

The lines that execute the request and extract book_list from the response context are precisely the same as in unittest.

Fixtures in Pytest

In pytest, there's no setUpTestData. But if you find yourself repeating the same data preparation code over and over again, you can use a fixture.

The fixture in our example would look like this:

from datetime import date

import pytest
from django.urls import reverse

from bookstore.models import Author, Book


# fixture creation
@pytest.fixture
def two_book_objects_same_author():
    author = Author.objects.create(first_name='Jane', last_name='Austen')
    second_book = Book.objects.create(title='Emma', author=author, date_published=date(1815, 1, 1))
    first_book = Book.objects.create(title='Pride and Prejudice', author=author, date_published=date(1813, 1, 1))

    return [first_book, second_book]


# fixture usage
@pytest.mark.django_db
def test_book_list_ordered_by_date_published_with_fixture(client, two_book_objects_same_author):
    response = client.get(reverse('book_list'))
    books = list(response.context['book_list'])

    assert response.status_code == 200
    assert books == two_book_objects_same_author

You mark a fixture with the @pytest.fixture decorator. To use it, a test function names the fixture as one of its arguments.

You can use fixtures to avoid repetitive code in the preparation step or to improve readability, but don't overdo it.

Running a Test with Pytest for Django

For pytest to work correctly, you need to tell pytest-django where Django's settings can be found. While this can be done in the terminal (pytest --ds=bookstore.settings), a better option is to use pytest.ini.

At the same time, you can use pytest.ini to provide other configuration options.

pytest.ini looks something like this:

[pytest]

;where the django settings are

DJANGO_SETTINGS_MODULE = bookstore.settings

;changing test discovery

python_files = tests.py test_*.py

;output logging records into the console

log_cli = True

Once DJANGO_SETTINGS_MODULE is set, you can simply run the tests with:

(venv)$ pytest

Let's see the output:

================================================================================================= test session starts ==================================================================================================

platform darwin -- Python 3.10.5, pytest-7.4.3, pluggy-1.3.0

django: settings: bookstore.settings (from option)

rootdir: /bookstore

plugins: django-4.5.2

collected 2 items

bookstore/test_with_pytest.py::test_book_list_returns_all_books_with_pytest PASSED [ 50%]

bookstore/test_with_pytest.py::test_book_list_ordered_by_date_published_with_pytest PASSED [100%]

================================================================================================== 2 passed in 0.58s ===================================================================================================

This output is shown if log_cli is set to True. If it's not explicitly set, it defaults to False, and the output of each test is replaced by a simple dot.

And what does the output look like if the test fails?

================================================================================================= test session starts ==================================================================================================

platform darwin -- Python 3.10.5, pytest-7.4.3, pluggy-1.3.0

django: settings: bookstore.settings (from ini)

rootdir: /bookstore

configfile: pytest.ini

plugins: django-4.5.2

collected 2 items

bookstore/test_with_pytest.py::test_book_list_returns_all_books_with_pytest PASSED [ 50%]

bookstore/test_with_pytest.py::test_book_list_ordered_by_date_published_with_pytest FAILED [100%]

======================================================================================================= FAILURES =======================================================================================================

___________________________________________________________________________________________________ test_book_order ____________________________________________________________________________________________________

client = <django.test.client.Client object at 0x1064dbfa0>, books_in_correct_order = [<Book: Emma>, <Book: Pride and Prejudice>]

@pytest.mark.django_db

def test_book_order(client, books_in_correct_order):

response = client.get(reverse('book_list'))

books = list(response.context['book_list'])

> assert books == books_in_correct_order

E assert [<Book: Pride... <Book: Emma>] == [<Book: Emma>...nd Prejudice>]

E At index 0 diff: <Book: Pride and Prejudice> != <Book: Emma>

E Full diff:

E - [<Book: Emma>, <Book: Pride and Prejudice>]

E + [<Book: Pride and Prejudice>, <Book: Emma>]

=============================================================================================== short test summary info ================================================================================================

FAILED bookstore/test_with_pytest.py::test_book_list_ordered_by_date_published_with_pytest - assert [<Book: Pride... <Book: Emma>] == [<Book: Emma>...nd Prejudice>]

============================================================================================= 1 failed, 1 passed in 0.78s ==============================================================================================

If log_cli is set to True, you can easily see which test passed and which failed. Although there's a long output on the error, you can often find enough information on why the test failed in the short test summary info.

Unittest vs. Pytest for Python

There's no consensus as to whether unittest or pytest is better. The opinions of developers differ, as you can see on StackOverflow or Reddit.

A lot depends on your own preferences or those of your team.

If most of your team is used to pytest, there is no need to switch, and vice-versa.

However, if you don't have your preferred way of testing yet, here's a quick comparison between the two:

| unittest (with Django test) | pytest (with pytest-django) |
|---|---|
| No additional installation needed | Installation of pytest and pytest-django required |
| Class-based approach | Functional approach |
| Multiple types of assertions | A plain assert statement |
| Setup/TearDown methods within TestCase class | Fixture system with different scopes |
| Parametrization isn't supported (but it can be done on your own) | Native support for parametrization |
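One way to do parametrization "on your own" in unittest is the standard library's `subTest` context manager, which reports each failing case separately instead of stopping at the first one. The sketch below is only an illustration: the `century` helper and the `CenturyTest` class are hypothetical and not part of the bookstore project.

```python
import unittest
from datetime import date


def century(published):
    """Hypothetical helper: return the century a date falls in."""
    return (published.year - 1) // 100 + 1


class CenturyTest(unittest.TestCase):
    def test_century(self):
        cases = [
            (date(1813, 1, 1), 19),
            (date(1815, 1, 1), 19),
            (date(1800, 12, 31), 18),
        ]
        for published, expected in cases:
            # Each case is reported on its own if it fails
            with self.subTest(published=published):
                self.assertEqual(century(published), expected)


suite = unittest.defaultTestLoader.loadTestsFromTestCase(CenturyTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

Unlike pytest's parametrization, all cases still count as a single test in the summary.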

How to Approach Tests in Django

Django encourages rapid development, so you might feel that you don't actually need tests as they would just slow you down. However, tests will, in the long run, make your development process faster and less error-prone. Since Django provides a lot of building blocks that allow you to accomplish much with only a few lines of code, you may end up writing way more tests than actual code.

One thing that can help you cover most of your code with tests is code coverage. "Code coverage" is a measure that tells you what percentage of your program's code is executed during a test suite run. The higher the percentage, the bigger the portion of the code being tested, which usually means fewer unnoticed problems.

Coverage.py is the go-to tool for measuring code coverage of Python programs. Once installed, you can use it with either unittest or pytest.

If using pytest, you can install pytest-cov — a library that integrates Coverage.py with pytest.

It is widely used, but the coverage.py official documentation states that it's mostly unnecessary.

The commands for running coverage are a little different for unittest and pytest:

- For unittest: coverage run manage.py test

- For pytest: coverage run -m pytest

Those commands will run tests and check the coverage, but you must run a separate command to get the output.

To see the report in your terminal, you need to run the coverage report command:

(venv)$ coverage report

Name                    Stmts   Miss  Cover
-------------------------------------------
core/__init__.py            0      0   100%
core/settings.py           34      0   100%
core/urls.py                3      0   100%
bookstore/__init__.py       0      0   100%
bookstore/admin.py         19      1    95%
bookstore/apps.py           6      0   100%
bookstore/models.py        64      7    89%
bookstore/urls.py           3      0   100%
bookstore/views.py         34      7    79%
-------------------------------------------
TOTAL                     221     15    93%

Percentages ranging from 75% to 100% are seen as giving "good coverage". Aim for a score higher than 75%, but keep in mind that just because your code is well-covered by tests, doesn't mean it is any good.

A Note on Tests

Test coverage is one aspect of a good test suite. However, as long as your coverage is not below 75%, the exact percentage is not that significant.

Don't write tests just for the sake of writing them or to improve your coverage percentage. Not everything in Django needs testing — writing useless tests will slow down your test suite and make refactoring a slow and painful process.

You should not test Django's own code — it's already been tested. For example, you don't need to write a test that checks if an object is retrieved with get_object_or_404 — Django's testing suite already has that covered.

Also, not every implementation detail needs to be tested.

For example, I rarely check if the correct template is used for a response — if I change the name, the test will fail without providing any real value.

How Can AppSignal Help with Testing in Django?

The trouble with tests is that they're written in isolation — when your app is live, it might not behave the same, and the user will probably not behave just as you expected.

That's why it's a good idea to use application monitoring — it can help you track errors and monitor performance.

Most importantly, it can inform you when an error is fatal, breaking your app.

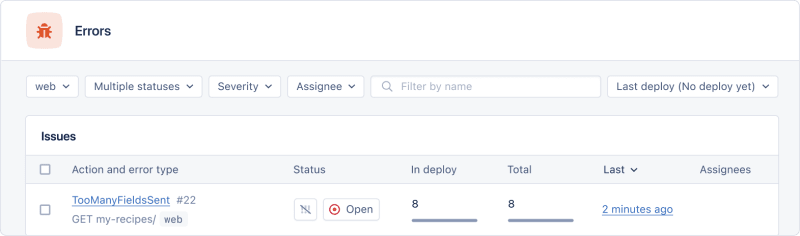

AppSignal integrates with Django and can help you find the errors you miss when writing tests.

With AppSignal, you can see how often an error occurs and when. Knowing that can help you decide how urgently you need to fix the error.

Since you can integrate AppSignal with your Git repository, you can also pinpoint the line in which an error occurs. This simplifies the process of identifying an error's cause, making it easier to fix. When an error is well understood, it's also easier to add tests that prevent it and similar errors in the future.

Here's an example of an error from the Issues list under the Errors dashboard for a Django application in AppSignal:

Wrapping Up

In this post, we've seen that you have two excellent options for testing in Django: unittest and pytest. We introduced both options, highlighting their unique strengths and capabilities. Which one you choose largely hinges on your personal or project-specific preferences.

I hope you've seen how writing tests is essential to having a high-quality product that provides a seamless user experience.

Happy coding!

P.S. If you'd like to read Python posts as soon as they get off the press, subscribe to our Python Wizardry newsletter and never miss a single post!
