Beyond Code Completion: Better Prompt Context to Supercharge Your AI Coding Workflow

Pete Cheslock

Posted on March 12, 2024

AI code completion tools like GitHub Copilot have revolutionized the way we write code. These tools not only speed up coding tasks but also help in adding features and fixing bugs more efficiently.

This post shows how I use tracing, sequence diagrams, and other personal observability data with generative AI, via a Retrieval-Augmented Generation (RAG) approach, to get better AI guidance for application development. Imagine feeding the AI observability data like traces, performance timing, sequence diagrams, API calls, and other information that maps out your application’s interactions. The result? A hyper-personalized guide to how your application operates, plus code suggestions that empower you to make informed decisions like a senior staff engineer or software architect.

With the strategic use of AI and runtime data, the path from code suggestion to architecture suggestion becomes not just accessible, but intuitive. This is more than AI coding assistance; it's creating a development process where information is synthesized and presented to you to elevate your code decision making and expertise within your group.

How could you quickly become an expert on your codebase?

Let’s show you how this works.

Shifting your AI from Code Suggestions to Thinking Partner

There are many tools that will statically index your codebase and send it as context to the AI to get code suggestions. But generative AI coding assistants are still missing a big piece of context, and that gap can lead to bad code suggestions: tools like Copilot and other AI coding assistants don’t know anything about your application when it runs. This results in between 50% and 70% of code suggestions being rejected by developers.

Adding tracing data to the prompts you send to the AI can improve the quality of code suggestions. It can also help you get answers to different kinds of questions from generative AI coding tools, which are trained on static open source code.

You can follow this process with any large-context LLM, such as Claude or OpenAI’s models: identify the tracing data relevant to the code you are working on, then include it as context in your prompt. Generally, you’d generate tracing data by adding OpenTelemetry (OTel) libraries to your application and adding spans to your functions, viewing them in a tool like Jaeger, or by using commercial SaaS tools like Honeycomb and Datadog.
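As a rough sketch of what "using tracing data as context" can look like, the snippet below renders a few recorded calls as an indented call tree and places them in a prompt next to the code and the question. The `Span` shape, the route name, and the timings are made up for illustration; they are not the schema of AppMap, OpenTelemetry, or any other tool.

```python
from dataclasses import dataclass


@dataclass
class Span:
    """One recorded call. Hypothetical shape, not a real tool's schema."""
    name: str          # function or route that was called
    depth: int         # nesting level in the call tree
    elapsed_ms: float  # time spent in the call


def render_trace(spans: list[Span]) -> str:
    """Render spans as an indented call tree that an LLM can read."""
    return "\n".join(
        f"{'  ' * s.depth}{s.name} ({s.elapsed_ms:.1f} ms)" for s in spans
    )


def build_prompt(question: str, code: str, spans: list[Span]) -> str:
    """Combine the trace, the code under edit, and the question into one prompt."""
    return (
        "You are helping with the application described below.\n\n"
        f"## Runtime trace\n{render_trace(spans)}\n\n"
        f"## Code\n{code}\n\n"
        f"## Question\n{question}\n"
    )


# Made-up sample data for a registration request.
spans = [
    Span("POST /register", 0, 48.2),
    Span("EmailUserCreationForm.is_valid", 1, 12.5),
    Span("SQL INSERT INTO users", 1, 9.1),
]
prompt = build_prompt(
    "How does registration work?",
    "class EmailUserCreationForm: ...",
    spans,
)
print(prompt)
```

The resulting string can be sent to whichever LLM you use. The point is only that the model sees what actually ran, not just the source files.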

In this example, I’ll use AppMap to generate tracing data and other runtime data about my code in my code editor. This will provide a structured way of describing how my code executes when it runs.

I will also use Navie, the AI integration for LLMs from AppMap. By default, Navie integrates with OpenAI GPT-4 and uses pre-programmed prompts that take runtime data into account. I will feed the AI a combination of code snippets, traces, and sequence diagrams as context for the particular code interaction I am working on.
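Navie’s internals aren’t covered in this post, but the retrieval step of a RAG workflow can be sketched in a few lines: given a question, pick the recordings most relevant to it before building the prompt. The example below ranks recordings by simple word overlap. Production systems typically use embeddings or a search index instead, and the recording names and summaries here are invented for illustration.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercase word set; splits on anything that is not a letter or digit."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def top_k(question: str, docs: dict[str, str], k: int = 2) -> list[str]:
    """Return the names of the k documents sharing the most words with the question."""
    q = tokens(question)
    ranked = sorted(docs, key=lambda name: len(q & tokens(docs[name])), reverse=True)
    return ranked[:k]


# Made-up recordings: name -> short text summary of what the request did.
recordings = {
    "register": "POST register user form validate email insert user",
    "checkout": "POST checkout cart payment order insert order",
    "search": "GET search products query select products",
}

best = top_k("how does user registration validate the email", recordings, k=1)
print(best)
```

Only the selected recordings then need to go into the prompt, which keeps the context focused on the interaction you are asking about.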

In this case, I’ve installed AppMap into a Python-powered web store that uses Django.

To get tracing data, I use Request Recording to generate an individual sequence diagram for every API request I make. With the libraries installed, simply interacting with my application gives me a complete view of the entire code sequence automatically, without any additional work adding telemetry, traces, or spans to my code.

You could also use OTel to instrument your app and capture similar data. But that generally takes more development effort, because you have to identify where in the code to add spans and traces. The advantage of AppMap’s traces is that they are created without having to modify the codebase.

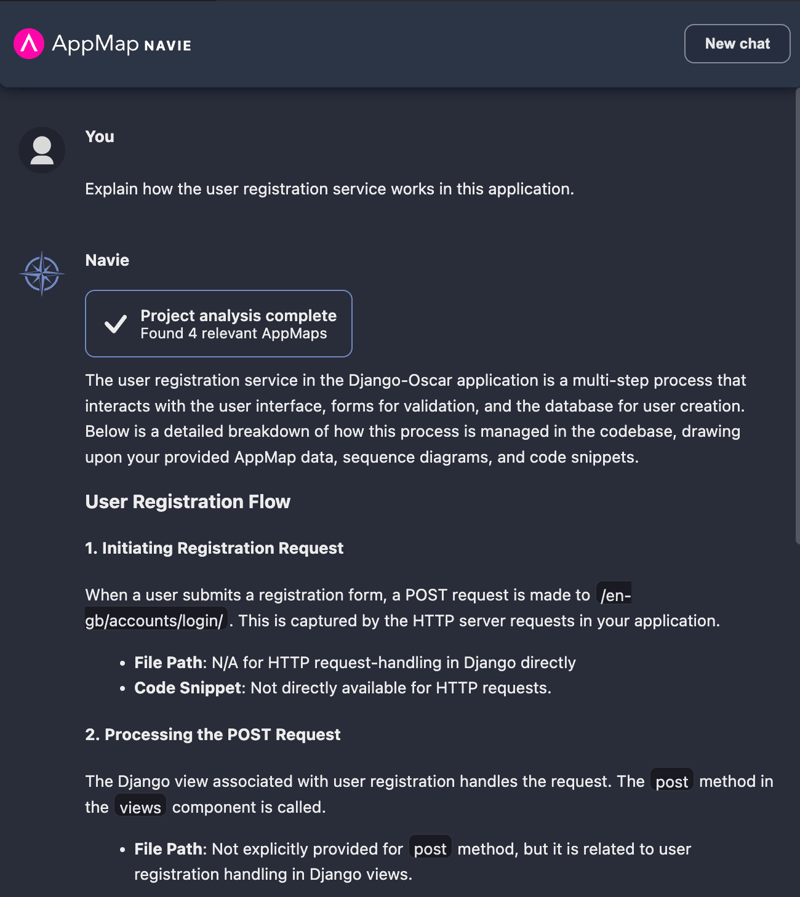

Now we’ve got some detailed traces and sequence diagrams and other runtime data. I could spend time looking through them to get a better understanding of how my new user registration process works, but instead let’s ask a question to our AI assistant, Navie.

Runtime data gives AI new powers to answer questions about how apps work

So, using runtime data, let’s ask new kinds of questions beyond “how does this code work?” Let’s ask “how does this feature work?” or “how does this service work?”, starting with the registration service.

The AI now has detailed information about the code and how the app works, so when you include code in the prompt, it can explain that code in the context of the app. This is in contrast to a generic explanation of what the code does, based only on an internet search and static analysis.

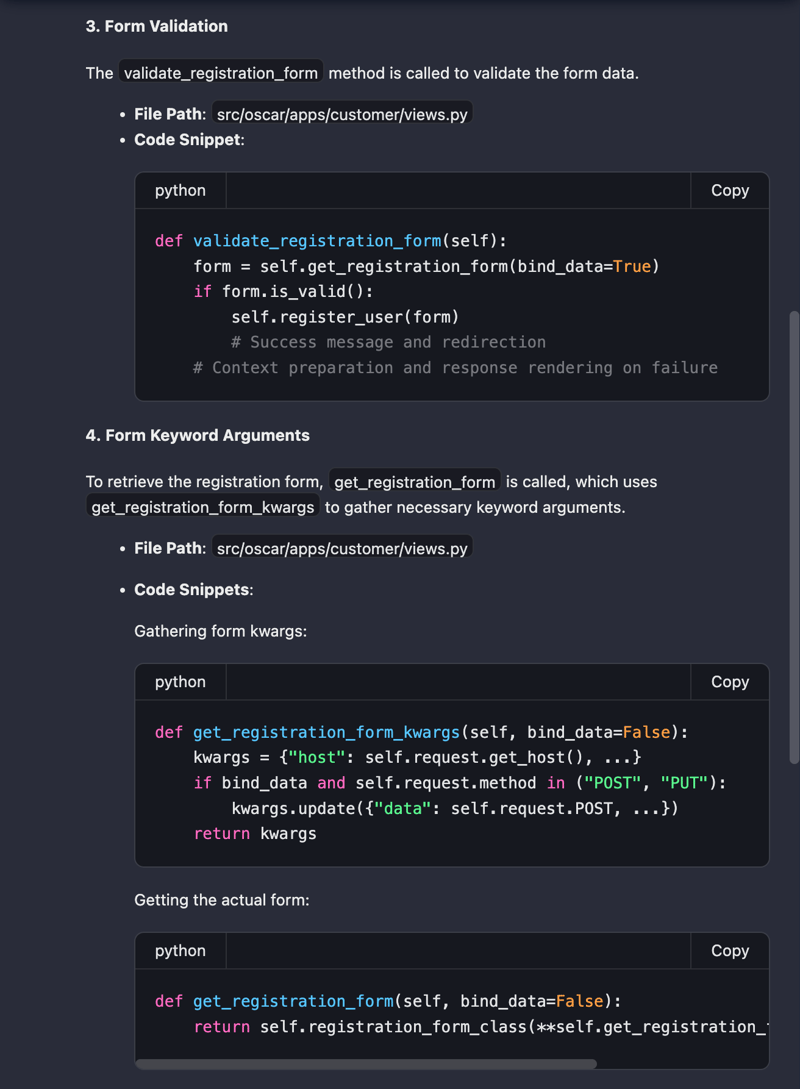

Now I’ve learned how this API endpoint works, from the API down to the database, using runtime data.

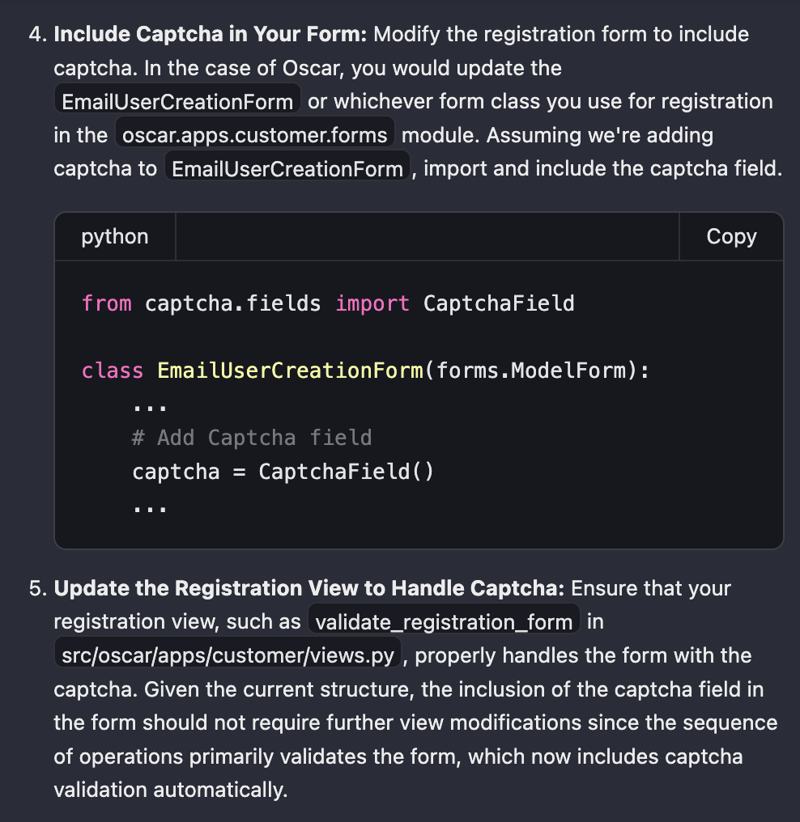

So now my AI has context, and I can go further and do things like ask it to implement a new captcha service. I will get a very detailed solution that is relevant to my codebase.

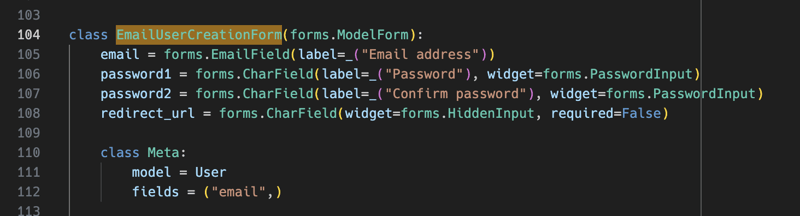

Because the GenAI now has the sequence diagram and the specific functions and code snippets for the API interaction, it’s able to tell me exactly what files to go to and what functions to change.

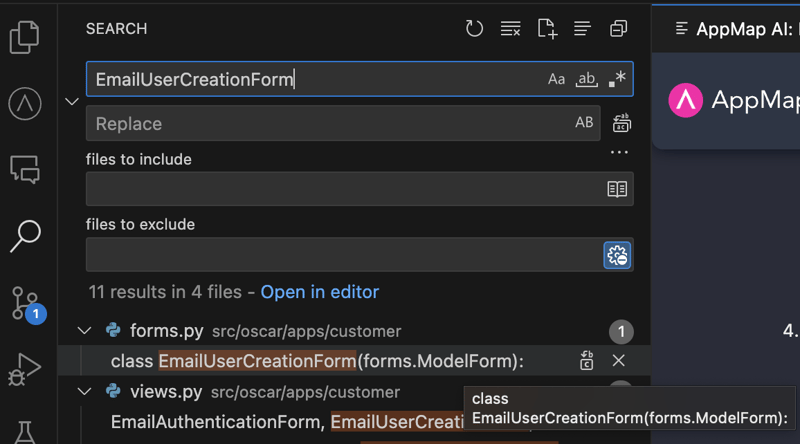

And now, when I search for that function in my code editor, I know exactly which file to open and where to add this line.

Why can’t I just use Copilot or GPT-4 directly?

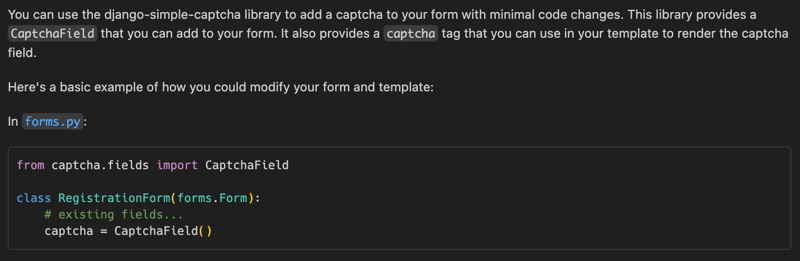

Now what would happen if I just asked Copilot or GPT-4 directly how to add this simple captcha feature to my app? Even though tools like Copilot have ways of using your “workspace” as context for the question, they have no idea how the code runs, so you would only get generic answers based on existing public knowledge.

For example, take this response from Copilot.

The only problem here is that there is no RegistrationForm class in my project. As Navie told us in the previous section, the class where I would actually need to add this is called EmailUserCreationForm.
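A mismatch like this is easy to check for mechanically. The sketch below uses Python’s `ast` module to list the classes defined in a source file and tests whether a suggested class name actually exists. The `forms_py` string is a stand-in for a project file, since the real forms module is not shown in this post.

```python
import ast


def defined_classes(source: str) -> set[str]:
    """Return the names of all classes defined anywhere in a Python source string."""
    return {
        node.name
        for node in ast.walk(ast.parse(source))
        if isinstance(node, ast.ClassDef)
    }


# Stand-in for a project file; the real module contents are an assumption.
forms_py = '''
class EmailUserCreationForm:
    pass
'''

classes = defined_classes(forms_py)
for suggested in ("RegistrationForm", "EmailUserCreationForm"):
    status = "found" if suggested in classes else "not found"
    print(f"{suggested}: {status}")
```

Running the same check across every `.py` file in a project is a quick way to catch suggestions that reference classes that don’t exist.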

Now you can see how adding tracing data and other runtime context improves the AI coding experience across your application. It’s like having a software architect or a senior staff engineer pair programming with you. Without knowledge of your code’s runtime behavior, generic responses will leave you hunting and searching through a complex codebase. Adding runtime behavior provides context-aware insights that power hyper-personalized responses to your toughest software questions.

Check out this video demo of me adding this feature with the help of AppMap Navie.

To learn more about getting started with AppMap Navie, head over to getappmap.com
