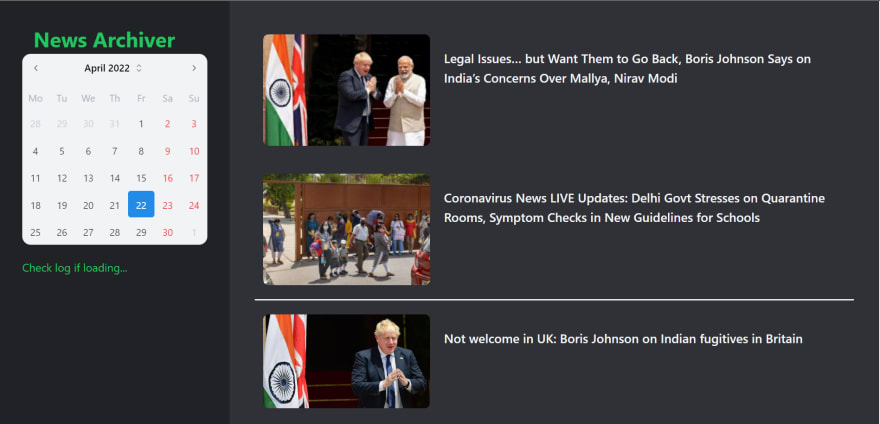

News Archiver

Anuj Singh

Posted on April 22, 2022

Overview of My Submission

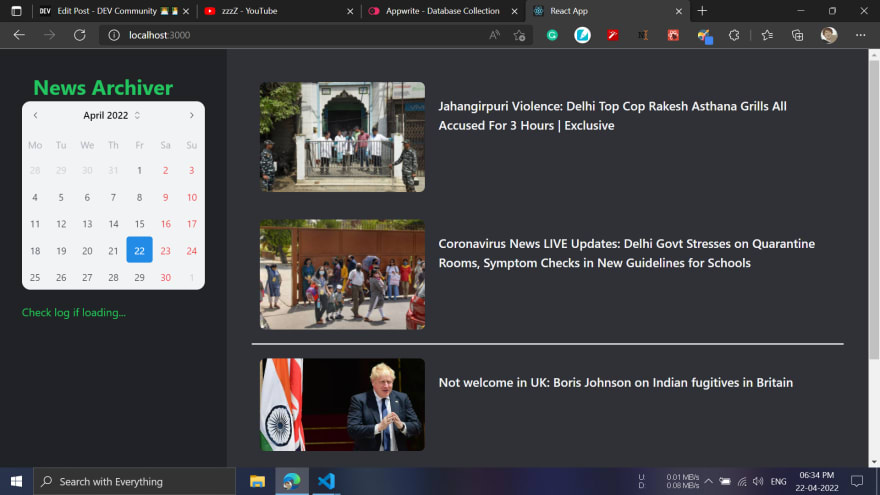

Archives headlines and content from selected news websites (currently News18 and India Today; the list can be changed) every 3 hours, so users can look back at the news and see how it was reported on different sites.

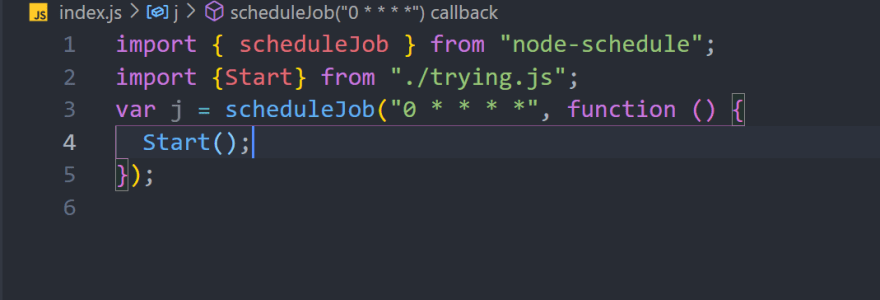

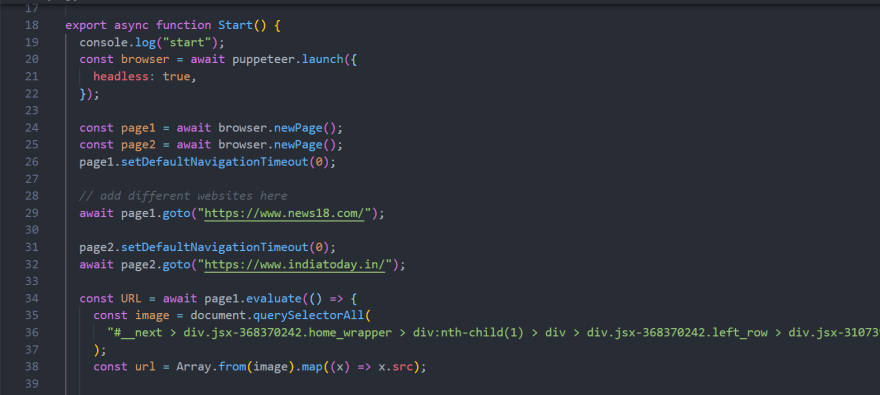

It uses the node-schedule package to run a background job every 3 hours and Puppeteer to scrape the content from each website. The scraped data is saved in an Appwrite database.
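For reference, a standard five-field cron expression for "every 3 hours, on the hour" is `0 */3 * * *`. node-schedule accepts cron strings like this through `schedule.scheduleJob(expression, callback)`. To keep the example free of dependencies, the sketch below works out the next few run times by hand instead. The function name `nextRunsEvery3Hours` is hypothetical, and UTC is assumed so the result doesn't depend on the server's time zone.

```javascript
// Cron expression for minute 0 of hours 0, 3, 6, ..., 21.
const EVERY_3_HOURS = "0 */3 * * *";

// Hypothetical helper: returns the next `count` run times (ISO strings, UTC)
// strictly after `from` for the EVERY_3_HOURS schedule. node-schedule would
// normally do this bookkeeping; this is only an illustration.
function nextRunsEvery3Hours(from, count) {
  const t = new Date(from.getTime());
  t.setUTCMinutes(0, 0, 0); // top of the current hour
  // A run exactly at `from` (or earlier this hour) doesn't count as "next".
  if (t.getTime() <= from.getTime()) t.setUTCHours(t.getUTCHours() + 1);
  // Advance to the next hour divisible by 3 (Date rolls over into the next day).
  while (t.getUTCHours() % 3 !== 0) t.setUTCHours(t.getUTCHours() + 1);

  const runs = [];
  for (let i = 0; i < count; i++) {
    runs.push(t.toISOString());
    t.setUTCHours(t.getUTCHours() + 3);
  }
  return runs;
}
```

For example, `nextRunsEvery3Hours(new Date("2022-04-22T10:15:00Z"), 3)` gives the runs at 12:00, 15:00 and 18:00 UTC. In the actual backend, node-schedule handles this, along the lines of `schedule.scheduleJob(EVERY_3_HOURS, scrapeAll)`, where `scrapeAll` is a placeholder for the scraping job.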

This data is then rendered in a client-side application (website).

Submission Category:

Web2 Wizards

Link to Code

Frontend/Client-Side Application

To collect data from the sites regularly, the app has to be hosted on a Node server or on an Appwrite droplet on DigitalOcean. My DigitalOcean credit is low, so I can't host it, sorry.

Live website (without data, because I didn't host the application on an Appwrite droplet to collect and display it; sorry for that 🙇‍♀️).

Backend/Server-Side Application

Additional Resources / Info

Watch it at 1.5x speed for a quick walkthrough of my application and how it works.

A quick walkthrough of my application and basically how it works

PS: I didn't focus on security, which is why my ID still shows in the main app. If needed, I can simply move it into a .env file.
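Moving the ID out of the source code could look like this on the Node side. A minimal sketch, assuming hypothetical variable names `APPWRITE_ENDPOINT` and `APPWRITE_PROJECT_ID` (not taken from the project): read them from `process.env` (the dotenv package's `require("dotenv").config()` loads a `.env` file into `process.env`) and fail fast if one is missing. A browser bundle gets its environment variables through its build tool instead.

```javascript
// Hypothetical variable names; adjust to whatever the app actually uses.
const REQUIRED_KEYS = ["APPWRITE_ENDPOINT", "APPWRITE_PROJECT_ID"];

// Reads the Appwrite settings from an environment object (normally
// process.env) instead of hard-coding them in the app.
function loadAppwriteConfig(env) {
  const missing = REQUIRED_KEYS.filter((key) => !env[key]);
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(", ")}`);
  }
  return {
    endpoint: env.APPWRITE_ENDPOINT,
    projectId: env.APPWRITE_PROJECT_ID,
  };
}
```

Usage would be `const config = loadAppwriteConfig(process.env);` at startup, so a missing value stops the app immediately instead of failing on the first database call.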

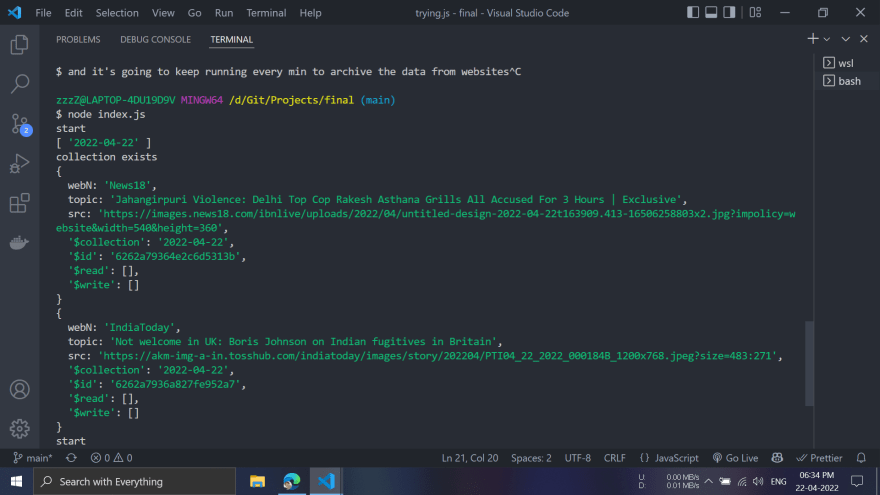

Backend

- Run `node index.js`

- The cron schedule can be set to any interval (for now, let's say every minute: `* * * * *`)

- It then scrapes the data, mainly the image and headline of each story

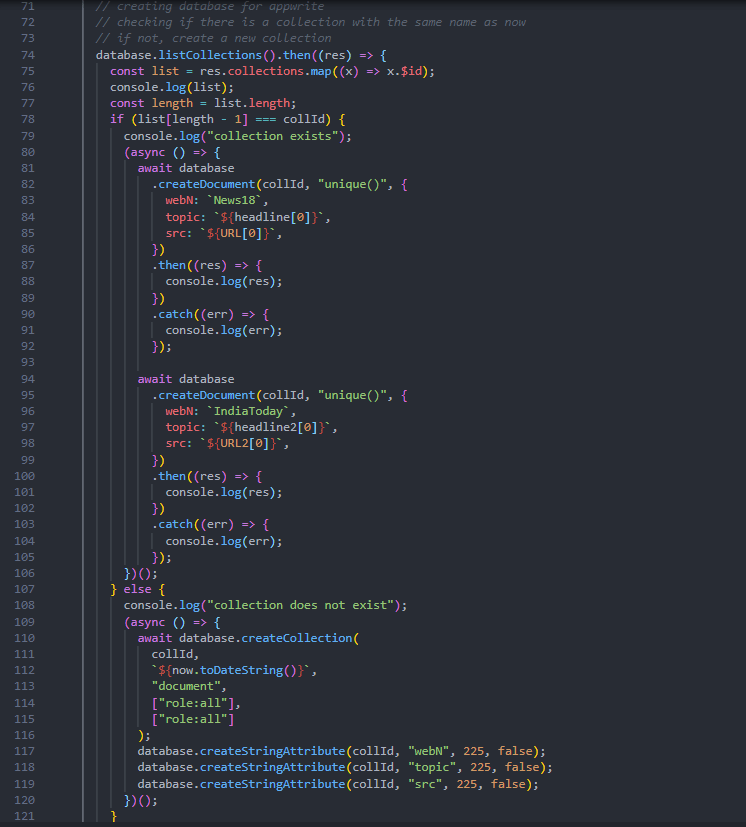
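Puppeteer's `page.$$eval(selector, fn)` runs a function in the page over every element matching a selector, which is one way to pull out headline and image pairs. The selectors differ per site and aren't given in the post, so the sketch below only covers the step after that: turning raw `{ headline, img }` items into records ready to store. The function name `toRecords` and the fields `site` and `scrapedAt` are hypothetical.

```javascript
// Sketch: normalize raw items scraped from one site into records to store.
// `raw` is assumed to be an array of { headline, img } objects, e.g. the
// result of a Puppeteer page.$$eval(...) call. Field names are hypothetical.
function toRecords(site, raw, scrapedAt) {
  const seen = new Set();
  const records = [];
  for (const item of raw) {
    // Collapse runs of whitespace left over from the page's markup.
    const headline = (item.headline || "").replace(/\s+/g, " ").trim();
    if (!headline || seen.has(headline)) continue; // skip empty and duplicate headlines
    seen.add(headline);
    records.push({
      site,
      headline,
      img: item.img || null, // some stories have no image
      scrapedAt: scrapedAt.toISOString(),
    });
  }
  return records;
}
```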

- Next, it checks the list of collections for one thing:

- Does a collection exist for the current date? If not, it creates a collection with the given attributes.

- If the collection exists, it creates a document in that collection with the scraped data.
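The check-then-create flow in the list above can be sketched against a small in-memory stand-in for the database. The `store` object and its methods (`listCollectionIds`, `createCollection`, `createDocument`) are hypothetical placeholders, not the Appwrite SDK; in the real backend they would map onto the corresponding Appwrite calls, which are asynchronous. One design choice here is an assumption: the sketch stores the documents right after creating a missing collection, so the first batch of the day is kept.

```javascript
// Hypothetical in-memory stand-in for the database, used only for this sketch.
function createMemoryStore() {
  const collections = new Map(); // collectionId -> array of documents
  return {
    listCollectionIds: () => [...collections.keys()],
    // A real collection would also be created with its attributes (headline, img, ...).
    createCollection: (id) => collections.set(id, []),
    createDocument: (id, doc) => collections.get(id).push(doc),
    documents: (id) => collections.get(id) || [],
  };
}

// Save one batch of scraped records into the collection for `dateId`,
// creating that collection first if it doesn't exist yet.
function saveBatch(store, dateId, records) {
  if (!store.listCollectionIds().includes(dateId)) {
    store.createCollection(dateId);
  }
  for (const record of records) {
    store.createDocument(dateId, record);
  }
}
```

Running `saveBatch` twice on the same day creates the collection once and appends both batches to it.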

That's it for the backend.

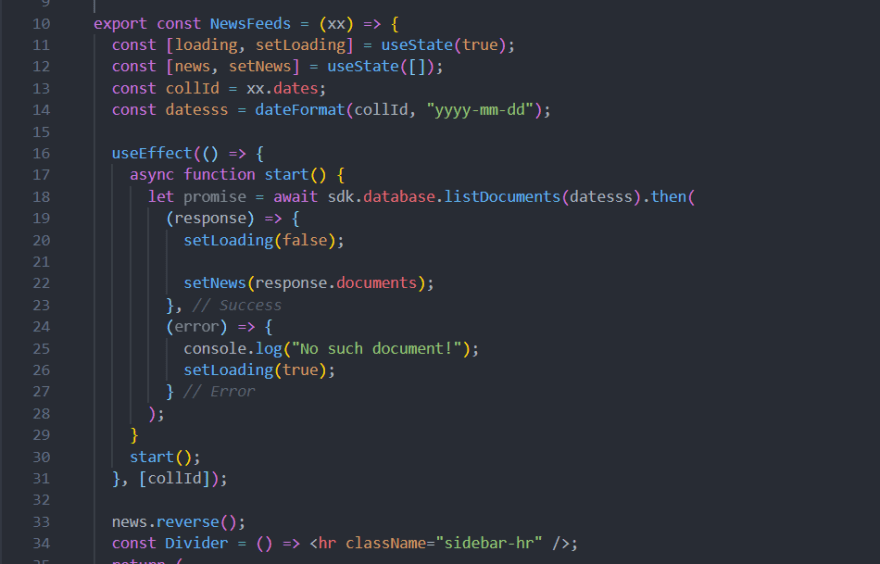

Frontend

Renders the data stored in the collections.

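And how does it render the data for the selected date?

Well, it's easy: to start with, I created each collection with the date as its ID, so showing a given day's news only means loading the collection whose ID matches the selected date.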
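The post doesn't say which date format the collection IDs use. A minimal sketch, assuming `YYYY-MM-DD` in UTC: this format also satisfies Appwrite's custom ID rules (letters, digits, period, hyphen and underscore; no leading special character; at most 36 characters), and it is exactly what an HTML `<input type="date">` date picker returns as its value. All function names are hypothetical. A server running on Indian time might use the local date instead of UTC, which would shift the ID for scrapes made shortly after midnight IST.

```javascript
// Backend side: derive a collection ID from a date. The YYYY-MM-DD (UTC)
// format is an assumption; the post only says collections use the date as ID.
function dateToCollectionId(date) {
  return date.toISOString().slice(0, 10); // e.g. "2022-04-22"
}

// Checks an ID against Appwrite's custom ID rules: a-z, A-Z, 0-9, ".", "-",
// "_", not starting with a special character, at most 36 characters.
function isValidAppwriteId(id) {
  return /^[a-zA-Z0-9][a-zA-Z0-9._-]{0,35}$/.test(id);
}

// Frontend side: an <input type="date"> yields "YYYY-MM-DD", which under this
// assumption is already the ID of the collection to load.
function collectionIdForPickerValue(value) {
  const id = value.trim();
  if (!isValidAppwriteId(id)) throw new Error(`Not a valid collection ID: ${id}`);
  return id;
}
```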

For more info, you can connect with me on Twitter.

OoO

If you are wondering: the backend can be hosted on DigitalOcean with an Appwrite droplet so it keeps running all the time.
