What is RDS Proxy? Exploring through benchmarks

Anthony Accomazzo

Posted on December 30, 2020

I was surprised when a customer wrote in to explain that he noticed - for simple queries - the Airtable API was about as performant as querying his Sync Inc-provisioned Airtable database.

We'd expect a database query to be at least an order of magnitude faster than an API query. So I had to investigate: What was the explanation for this?

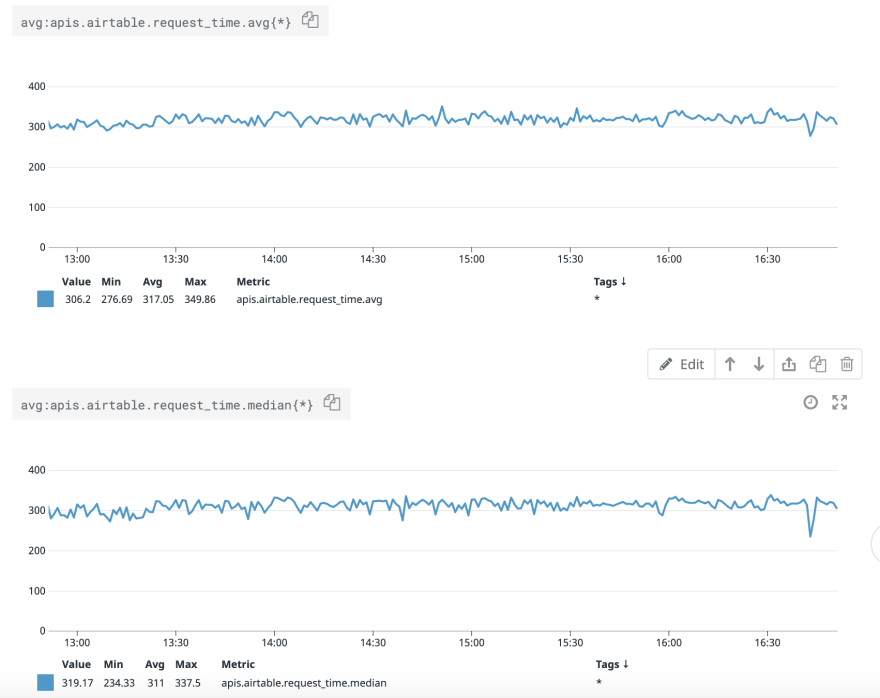

Airtable's API is pretty consistent and responsive, with a mean and median request time of 350ms:

This is about what you'd expect from a standard list request against a third-party API with 100 items returned per request.

So how could a database query get up to 350ms? A little digging, and we discovered that the customer was querying his database via a Lambda function. This meant opening a connection to his Sync Inc db before each query. A solid lead.

I wanted to see how much overhead the connection times were adding. So I wrote some simple benchmark functions in Go.

The timing for the benchmarking is handled by this simple function:

func timeTrack(start time.Time, name string) {

elapsed := time.Since(start)

log.Printf("%s took %s", name, elapsed)

}

You can log how long a function execution took in Go using this function in combination with defer like so:

func benchmarkQuery(count int) {

defer timeTrack(time.Now(), "benchmarkQuery")

for i := 0; i < count; i++ {

funcUnderTest()

}

}

Above, the arguments to timeTrack() are evaluated immediately, meaning the first argument is set to time.Now() - the time benchmarkQuery() was invoked.

But defer waits to invoke timeTrack() until right before the function benchmarkQuery() returns. Which, in this case, will be after the for loop executing funcUnderTest() count times has completed. Neat trick.

With a timing function in place, I wrote out some benchmarks. We want to benchmark two different kinds of behavior:

- A function that opens a connection on every loop and then makes a request

- A function that opens a connection then loops and makes requests

This should help us understand how much time is spent establishing the connection and how much time is spent executing the query.

Here's what the function that opens a connection every loop looks like:

func benchmarkOpen(count int) {

defer timeTrack(time.Now(), "benchmarkOpen")

for i := 0; i < count; i++ {

db := openDb()

defer db.Close()

rows, err := db.Query("SELECT id from purchase_orders limit(100);")

rows.Close()

if err != nil {

log.Printf("Got error: %v", err)

}

}

}

And the one that opens a connection then makes a bunch of queries on that connection:

func benchmarkQuery(count int) {

defer timeTrack(time.Now(), "benchmarkQuery")

db := openDb()

defer db.Close()

for i := 0; i < count; i++ {

rows, err := db.Query("SELECT id from purchase_orders limit(100);")

rows.Close()

if err != nil {

log.Printf("Got error: %v", err)

}

}

}

(Note: It's important to run rows.Close(), otherwise the connection is not immediately released - which means Go's database/sql will default to just opening a new connection for the next request, defeating this test.)

Using these two functions, we're prepared to find out:

- What kind of impact does re-creating a db connection on each run have on the wall time of a 1-off execution?

- Does using RDS proxy have an effect on that wall time?

- What are the basic load characteristics of a db.r5.large getting hammered with new connection requests? How does RDS Proxy help it perform?

This inquiry is all motivated by the nature of Lambda functions, which the customer uses. And indeed Amazon recently released RDS proxy to address this precise architecture:

Many applications, including those built on modern serverless architectures, can have a large number of open connections to the database server, and may open and close database connections at a high rate, exhausting database memory and compute resources. Amazon RDS Proxy allows applications to pool and share connections established with the database, improving database efficiency and application scalability.

RDS Proxy gotchas

Before we get into the benchmarks, I want to touch on the gotchas that sucked up a lot of my time. (I guess after all these years I haven't learned my lesson: The AWS UX is just not going to help you use their products. You have to read the user guide from end-to-end.)

Here are the two that tripped me up big time:

1. RDS Proxy only supports specific database engine versions – and the latest RDS Postgres is not one of them.

The first time I went to setup a new RDS proxy, I didn't see any available databases in the dropdown:

After much trial and error, I ended up spinning up a new Aurora db. At last, I saw something populate the dropdown.

It wasn't until much later I learned that Postgres 12 - the engine I use everywhere - just isn't supported yet.

(What would have been nice: Showing me all my RDS databases in that drop-down, but just greying out the ones that are not supported by Proxy.)

2. You can only connect to your RDS Proxy from inside the same VPC

This one was a time sink. There are few things I dread more than trying to connect to something on AWS and getting a timeout. There are about a half-dozen layers (security groups, IGWs, subnets) that all have to be lined up just so to get a connection online. The bummer is that there's no easy way to debug where a given connection has failed.

So, the first wrench was when I discovered - after much trial and error - that I couldn't connect to my RDS Proxy from my laptop.

(How about a small bone, right next to "Proxy endpoint," that lets me know the limitations associated with this endpoint?)

Now that we're up and connected

RDS vs RDS Proxy

With my RDS Proxy 101 hard-won, we're ready to run some benchmarks.

I copied the benchmark binary up to an EC2 server co-located with the RDS database and RDS proxy.

Per above:

-

benchmarkOpenopens a connection and then runs a query on each loop (sequential) -

benchmarkQueryopens a connection first, then each loop is a query on that connection (also sequential)

Here's what I got running this against the RDS database directly, 1000 times per, with a 10s pause between:

# RDS direct, same datacenter

$ ./main

2020/12/25 00:08:14 Starting benchmarks...

2020/12/25 00:08:19 benchmarkOpen took 5.846447324s

2020/12/25 00:08:30 benchmarkQuery took 504.32703ms

So:

-

benchmarkOpen- ~5.85ms per loop -

benchmarkQuery- ~0.500ms per loop

Fair to assume the connection handshake takes 5ms, which isn't bad.

This benchmark runs too fast to register on any RDS graphs. But if I loop the benchmark to sustain it over a couple minutes, I can see a healthy connection spike for the first test:

I recognize that in benchmarkOpen, instead of deferring db.Close() I could be nicer to my database and close it immediately. But I kind of like the DB slow boil.

Let's move on to the RDS Proxy. Remember, RDS Proxy has to be in the same VPC as the entity calling it as well as the database. So all three are co-located:

# RDS Proxy, same datacenter

$ ./main

2020/12/25 00:15:21 Starting benchmarks...

2020/12/25 00:15:28 benchmarkOpen took 7.094809555s

2020/12/25 00:15:39 benchmarkQuery took 1.239892867s

Comparing to the first benchmark: It appears the Proxy adds an overhead to each request of 0.7ms (1.2ms - 0.5ms). As we'd expect, connection openings take a bit longer, about 1.15ms longer.

And, sure enough, I can't get the graph to budge. It sustains about 100 connections, with no real CPU spike, with the benchmark sustained over several minutes:

We'll see soon how all this performance translates to Lambda, but the motivation for RDS Proxy is clear: It's less about the client, more about the database. RDS Proxy is just a layer of indirection for our database connections. It doesn't speed up establishing new connections - in fact, we pay a small tax on connection opening. It just saves our database from the thundering herd.

Cross-region

Because we're here, I'm curious: What happens if we run this benchmark cross-region, from US-East-1 to US-West-2?

# RDS - different datacenter

$ ./main

2020/12/25 00:11:12 Starting benchmarks...

2020/12/25 00:15:53 benchmarkOpen took 4m41.607514892s

2020/12/25 00:17:15 benchmarkQuery took 1m11.767803425s

Different story:

-

benchmarkOpen- ~281.6ms per loop -

benchmarkQuery- ~71.77ms per loop

Connections take about 200ms, presumably because they involve a lot of back and forth that require coast-to-coast travel.

The difference is over a full order of magnitude. At this point, opening a database connection and making a query starts to have performance characteristics similar to querying Airtable's API.

Indeed, the customer that opened this inquiry operates out of US-East-1. Bingo.

Opening connections is certainly a factor here. But RDS Proxy won't help, because as we've learned it's not intended to reduce client-side connection opening costs. And even if it did, we couldn't use it cross-region!

So co-locating the database in the customer's data center is the move, and will give their team performance an API can only dream of.

Benchmarking Lambda

Lambda got us into this mess, so let's do an end-to-end benchmark with it. Our direct-to-RDS test will route like this:

API Gateway -> Lambda -> RDS

And our RDS Proxy test will look like this:

API Gateway -> Lambda -> RDS Proxy -> RDS

Each lambda function call will open a new database connection and issue a single query. I just adapted the function above to make one open/query as opposed to doing so inside a for loop.

I'll run a benchmark locally on my computer that will call the API Gateway endpoint. Because I'm running locally, I'm able to save a little work by using Go's native benchmarking facilities:

func BenchmarkRequest(b *testing.B) {

for i := 0; i < b.N; i++ {

resp, err := http.Post("https://[REDACTED]/run", "application/json", nil)

if err != nil {

panic(err)

}

defer resp.Body.Close()

if resp.StatusCode != 200 {

fmt.Println("Received non-200 response. Continuing")

}

}

}

The first test routes to RDS directly:

$ go test -bench=. -benchtime 1000x

goos: darwin

goarch: amd64

pkg: github.com/acco/rds-proxy-bench

BenchmarkRequest-8 1000 128684974 ns/op

PASS

ok github.com/acco/rds-proxy-bench 130.351s

So, 128ms per request.

Now using RDS Proxy:

$ go test -bench=. -benchtime 1000x

goos: darwin

goarch: amd64

pkg: github.com/acco/rds-proxy-bench

BenchmarkRequest-8 1000 118576056 ns/op

PASS

ok github.com/acco/rds-proxy-bench 120.529s

118ms per request.

We're not impressed until we look at the graphs:

The first big spike is the first benchmark, hitting RDS directly. The second non-spike is the second benchmark, routed through the proxy.

The end-to-end Lambda benchmark takes it all home: The purpose of RDS Proxy is foremost to tame connections (and related load) on the database. Any happy gains on the client side will be a result of a relieved database.

Posted on December 30, 2020

Join Our Newsletter. No Spam, Only the good stuff.

Sign up to receive the latest update from our blog.