Setting up an EKS-Anywhere cluster on bare-metal servers in Equinix

abhiniveshjain

Posted on January 13, 2023

Introduction

EKS Anywhere (EKS-A) on bare metal is a recently released AWS feature. With it, Amazon Elastic Kubernetes Service (EKS) can be set up on any supported bare-metal hardware model. This article covers an EKS-A bare-metal setup on Equinix-hosted bare-metal servers.

Prerequisites

- Equinix account

- Organization in Equinix account

- Project in Equinix account

- Personal SSH key

- Personal API key

- EC2 instance (or your own laptop) to act as the jump host

Equinix account creation and configuration

First, create an Equinix account; this requires credit card details. Once the account is created, create an organization and a project.

If you have a credit voucher, apply it under Console -> Settings -> Billing before you start provisioning machines, as shown below.

Creating the organization and the project is straightforward.

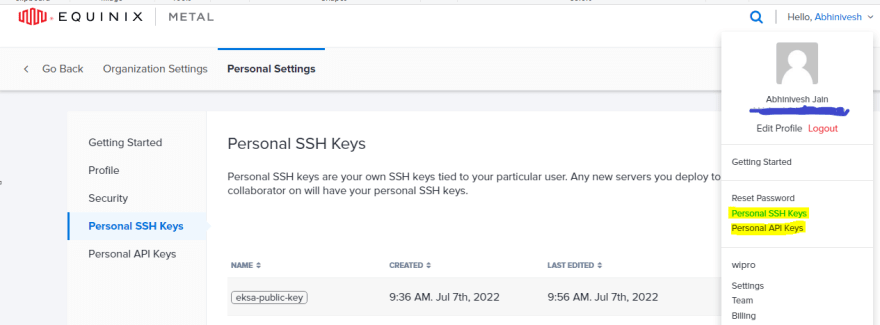

Adding a personal SSH key

You can add a personal SSH key and a personal API key by clicking on your profile.

If you generate SSH keys with PuTTY (PuTTYgen), copy the public key in OpenSSH format when adding it to your Equinix account. In PuTTYgen, this is the box at the top of the window ("Public key for pasting into OpenSSH authorized_keys file"), as shown below.

If you instead copy the public key in PuTTY's own format, you will get the error “not a valid public key”.
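If you already saved a public key file from PuTTYgen, it can be converted instead of regenerated. The sketch below assumes OpenSSH's `ssh-keygen` is available (it is on Ubuntu) and that the file was written with PuTTYgen's "Save public key" button, which uses the SSH2 (RFC 4716) format. The helper names `is_openssh_pubkey` and `convert_putty_pub` are hypothetical, and the format check only inspects the key-type prefix, so treat it as a sanity check rather than full validation.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for preparing a public key for the Equinix portal.

# Succeeds if the string starts with a common OpenSSH public key type prefix.
# This is only a prefix check, not a validation of the key material.
is_openssh_pubkey() {
  case "$1" in
    "ssh-rsa "* | "ssh-ed25519 "* | "ecdsa-sha2-nistp"*) return 0 ;;
    *) return 1 ;;
  esac
}

# Converts a public key saved by PuTTYgen (SSH2 / RFC 4716 format, starting
# with "---- BEGIN SSH2 PUBLIC KEY ----") into single-line OpenSSH format.
convert_putty_pub() {
  ssh-keygen -i -m RFC4716 -f "$1"
}

# Usage (file names are placeholders):
#   convert_putty_pub mykey-putty.pub > mykey.pub
#   is_openssh_pubkey "$(cat mykey.pub)" && echo "looks like OpenSSH format"
```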

If you skip adding a personal SSH key, the deployment fails with the following error:

```
null_resource.wait_for_cloud_init (remote-exec): status: done
null_resource.wait_for_cloud_init: Creation complete after 2m0s [id=8080497012994094038]
╷
│ Error: API Error HTTP 422 must have at least one SSH key or explicitly send no_ssh_keys option
│
│ with equinix_metal_device.eksa_node_cp[0],
│ on main.tf line 36, in resource "equinix_metal_device" "eksa_node_cp":
│ 36: resource "equinix_metal_device" "eksa_node_cp" {
│
│ Error: API Error HTTP 422 must have at least one SSH key or explicitly send no_ssh_keys option
│
│ with equinix_metal_device.eksa_node_dp[0],
│ on main.tf line 76, in resource "equinix_metal_device" "eksa_node_dp":
│ 76: resource "equinix_metal_device" "eksa_node_dp" {
```

When generating SSH keys, save the private key: you will need it to log in to the eksa-admin machine to run kubectl commands. You can SSH to the eksa-admin machine from the jump host or from your desktop/laptop.

Next, provision an EC2 instance to act as the jump host for the entire deployment. An EC2 instance is not mandatory; you can use your laptop instead, provided you have rights to install Terraform and jq.

EC2 instance provisioning and configuration

Create a t2.micro instance running Ubuntu 20.04 LTS. Create it in the AWS region closest to the Equinix location (metro) where you will create the cluster.

Attach your SSH key pair and add an inbound rule to the instance's security group that allows SSH from your IP, so that you can log in to this machine.

After logging in to this machine, install Terraform and jq as shown below.

```
root@ip-172-31-86-91:~# wget https://releases.hashicorp.com/terraform/1.2.4/terraform_1.2.4_linux_amd64.zip
root@ip-172-31-86-91:~# apt install unzip
root@ip-172-31-86-91:~# unzip terraform_1.2.4_linux_amd64.zip
root@ip-172-31-86-91:~# mv terraform /usr/bin/
root@ip-172-31-86-91:~# which terraform
/usr/bin/terraform
root@ip-172-31-86-91:~# terraform -v
Terraform v1.2.4
on linux_amd64
root@ip-172-31-86-91:~# apt update
root@ip-172-31-86-91:~# apt install -y jq
```
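A quick preflight check before cloning the repository can catch a missing tool early. This is a minimal sketch: the function name `require_tools` is hypothetical, and the tool list in the usage line (terraform, jq, git, ssh) is taken from the commands used in this article.

```shell
#!/usr/bin/env bash
# Reports every required command that is not on PATH and returns non-zero
# if at least one of them is missing.
require_tools() {
  local missing=0 tool
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage on the jump host:
#   require_tools terraform jq git ssh && terraform -v
```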

EKS-A cluster creation

P.S. Do not leave your cluster running longer than you need it. It costs roughly 5-6 USD per hour, so 24 hours of uptime adds up to about 120-144 USD. The EKS-A cluster setup is fully automated and takes only 15-20 minutes, so it is perfectly fine to create and run the cluster only when you need it.
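The estimate above is just hourly rate times hours running. The helper below is a hypothetical convenience for redoing that arithmetic with your own numbers; the 5-6 USD/hour figure comes from the note above and will vary with server plan and location.

```shell
#!/usr/bin/env bash
# Rough running-cost estimate: hourly rate (USD) multiplied by hours running,
# printed with two decimal places.
estimate_cost() {
  local rate_per_hour="$1" hours="$2"
  awk -v r="$rate_per_hour" -v h="$hours" 'BEGIN { printf "%.2f\n", r * h }'
}

estimate_cost 5 24   # 120.00
estimate_cost 6 24   # 144.00
```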

To create the cluster, follow the steps below.

```
root@ip-172-31-86-91:~# git clone https://github.com/equinix-labs/terraform-equinix-metal-eks-anywhere.git
root@ip-172-31-86-91:~# cd terraform-equinix-metal-eks-anywhere/
root@ip-172-31-86-91:~/terraform-equinix-metal-eks-anywhere# ls
LICENSE README.md hardware.csv.tftpl locals.tf main.tf outputs.tf reboot_nodes.sh setup.cloud-init.tftpl variables.tf versions.tf
root@ip-172-31-86-91:~/terraform-equinix-metal-eks-anywhere# terraform init
Initializing the backend...
Initializing provider plugins...
- Finding latest version of hashicorp/local...
.... <<<<<<<<<<Output Truncated to shorten the log>>>>>>>>>>>
Terraform has been successfully initialized!
root@ip-172-31-86-91:~/terraform-equinix-metal-eks-anywhere# terraform apply
var.metal_api_token
Equinix Metal user api token
Enter a value: <Enter your API key from the Equinix portal>
var.project_id
Project ID
Enter a value: <Enter your project ID from the Equinix portal>
Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:
+ create
Terraform will perform the following actions:
.... <<<<<<<<<<Output Truncated to shorten the log>>>>>>>>>>>
Plan: 19 to add, 0 to change, 0 to destroy.
Changes to Outputs:
+ eksa_admin_ip = (known after apply)
+ eksa_admin_ssh_key = (known after apply)
+ eksa_admin_ssh_user = "root"
+ eksa_nodes_sos = {
+ "eksa-node-cp-001" = (known after apply)
+ "eksa-node-dp-001" = (known after apply)
}
Do you want to perform these actions?
Terraform will perform the actions described above.
Only 'yes' will be accepted to approve.
Enter a value: yes
tls_private_key.ssh_key_pair: Creating...
equinix_metal_reserved_ip_block.public_ips: Creating...
equinix_metal_vlan.provisioning_vlan: Creating...
random_string.ssh_key_suffix: Creating...
random_string.ssh_key_suffix: Creation complete after 0s [id=842]
equinix_metal_vlan.provisioning_vlan: Creation complete after 1s [id=7bd3984e-f21b-45db-882c-dd6a5a1c72d0]
equinix_metal_reserved_ip_block.public_ips: Creation complete after 1s [id=45d5c21e-d245-4a4f-95bc-94cdd92dad47]
equinix_metal_device.eksa_node_cp[0]: Creating...
equinix_metal_gateway.gw: Creating...
equinix_metal_device.eksa_node_dp[0]: Creating...
tls_private_key.ssh_key_pair: Creation complete after 2s [id=618e4bbec2485418706ef809fbfdfededa470fdd]
local_file.ssh_private_key: Creating...
equinix_metal_ssh_key.ssh_pub_key: Creating...
local_file.ssh_private_key: Creation complete after 0s [id=df7ff74bc7f4b8a64597ae8f22ee91650d8eb7b7]
equinix_metal_gateway.gw: Creation complete after 1s [id=3454fa91-8163-44a3-8230-433db5d54358]
equinix_metal_ssh_key.ssh_pub_key: Creation complete after 0s [id=4eae18dc-2c56-4fab-86f5-463c7a1e702e]
equinix_metal_device.eksa_admin: Creating...
equinix_metal_device.eksa_node_cp[0]: Still creating... [10s elapsed]
equinix_metal_device.eksa_node_dp[0]: Still creating... [10s elapsed]
.... <<<<<<<<<<Output Truncated to shorten the log>>>>>>>>>>>
null_resource.create_cluster: Still creating... [10m20s elapsed]
null_resource.create_cluster (remote-exec): Installing EKS-A secrets on workload cluster
null_resource.create_cluster (remote-exec): Installing resources on management cluster
null_resource.create_cluster (remote-exec): Moving cluster management from bootstrap to workload cluster
null_resource.create_cluster: Still creating... [10m30s elapsed]
null_resource.create_cluster (remote-exec): Installing EKS-A custom components (CRD and controller) on workload cluster
null_resource.create_cluster: Still creating... [10m40s elapsed]
null_resource.create_cluster (remote-exec): Installing EKS-D components on workload cluster
null_resource.create_cluster (remote-exec): Creating EKS-A CRDs instances on workload cluster
null_resource.create_cluster: Still creating... [10m50s elapsed]
null_resource.create_cluster (remote-exec): Installing AddonManager and GitOps Toolkit on workload cluster
null_resource.create_cluster (remote-exec): GitOps field not specified, bootstrap flux skipped
null_resource.create_cluster (remote-exec): Writing cluster config file
null_resource.create_cluster (remote-exec): Deleting bootstrap cluster
null_resource.create_cluster (remote-exec): 🎉 Cluster created!
null_resource.create_cluster: Creation complete after 10m53s [id=2497544909566343714]
Apply complete! Resources: 19 added, 0 changed, 0 destroyed.
Outputs:
eksa_admin_ip = "111.222.333.444" <sample IP>
eksa_admin_ssh_key = "/root/.ssh/my-eksa-cluster-842"
eksa_admin_ssh_user = "root"
eksa_nodes_sos = tomap({
"eksa-node-cp-001" = "8a3418c8-11fb-4aa7-9c39-0588ed8beb0f@sos.sv15.platformequinix.com"
"eksa-node-dp-001" = "197829ca-972c-4e68-8ef4-e5fb85f3df83@sos.sv15.platformequinix.com"
})
root@ip-172-31-86-91:~/terraform-equinix-metal-eks-anywhere#
```

Congratulations, your EKS cluster is ready.
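Since the cluster is meant to be created only when needed, you will likely run `terraform apply` more than once. Terraform reads an input variable from an environment variable named `TF_VAR_<variable name>`, so the two prompts seen above (`metal_api_token` and `project_id`) can be answered ahead of time. This is a minimal sketch; `set_metal_tf_vars` is a hypothetical helper name, and on shared machines you may want to avoid leaving the API token in your shell history.

```shell
#!/usr/bin/env bash
# Terraform uses TF_VAR_<name> environment variables as values for input
# variables, so these replace the interactive prompts for metal_api_token
# and project_id during `terraform apply` and `terraform destroy`.
set_metal_tf_vars() {
  local api_token="$1" project_id="$2"
  if [ -z "$api_token" ] || [ -z "$project_id" ]; then
    echo "usage: set_metal_tf_vars <api-token> <project-id>" >&2
    return 1
  fi
  export TF_VAR_metal_api_token="$api_token"
  export TF_VAR_project_id="$project_id"
}

# Usage (placeholders, not real values):
#   set_metal_tf_vars "<your API key>" "<your project ID>"
#   cd ~/terraform-equinix-metal-eks-anywhere && terraform apply
```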

Verifying the cluster and running a sample application

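Once the cluster is created, you can verify it and run a sample application on it. Log in to the eksa-admin machine with an OpenSSH-format private key, for example the one Terraform generated (the path in the eksa_admin_ssh_key output); the OpenSSH ssh client cannot read PuTTY .ppk files. Then follow the steps below to run a sample nginx application.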

```
root@ip-172-31-86-91:~/terraform-equinix-metal-eks-anywhere# cd
root@ip-172-31-86-91:~# ssh -i /root/.ssh/my-eksa-cluster-842 root@<provide eksa admin IP>
root@eksa-admin:~# ls
eksa-create-cluster.log go hardware.csv my-eksa-cluster my-eksa-cluster.yaml my-eksa-cluster.yaml.orig reboot_nodes.log reboot_nodes.sh snap
root@eksa-admin:~# cd my-eksa-cluster/
root@eksa-admin:~/my-eksa-cluster# ls
my-eksa-cluster-eks-a-cluster.kubeconfig my-eksa-cluster-eks-a-cluster.yaml
root@eksa-admin:~/my-eksa-cluster# ls -l
total 12
-rw------- 1 root 5615 Jul 7 04:36 my-eksa-cluster-eks-a-cluster.kubeconfig
-rwxr-xr-x 1 root 3108 Jul 7 04:40 my-eksa-cluster-eks-a-cluster.yaml
root@eksa-admin:~/my-eksa-cluster# pwd
/root/my-eksa-cluster
root@eksa-admin:~/my-eksa-cluster# export KUBECONFIG=/root/my-eksa-cluster/my-eksa-cluster-eks-a-cluster.kubeconfig
root@eksa-admin:~/my-eksa-cluster# kubectl get nodes
NAME STATUS ROLES AGE VERSION
eksa-node-cp-001 Ready control-plane,master 12m v1.22.10-eks-959629d
eksa-node-dp-001 Ready <none> 10m v1.22.10-eks-959629d
root@eksa-admin:~/my-eksa-cluster# kubectl get po -A -l control-plane=controller-manager
NAMESPACE NAME READY STATUS RESTARTS AGE
capi-kubeadm-bootstrap-system capi-kubeadm-bootstrap-controller-manager-55cbf66f59-77pt2 1/1 Running 0 11m
capi-kubeadm-control-plane-system capi-kubeadm-control-plane-controller-manager-59566fbb79-9qdst 1/1 Running 0 11m
capi-system capi-controller-manager-6f4cb865f6-sj8m6 1/1 Running 0 11m
capt-system capt-controller-manager-bc757b7b-rmkk8 1/1 Running 0 11m
eksa-system rufio-controller-manager-69d74bff87-w4pzs 1/1 Running 0 10m
etcdadm-bootstrap-provider-system etcdadm-bootstrap-provider-controller-manager-5796bcd498-5rntb 1/1 Running 0 11m
etcdadm-controller-system etcdadm-controller-controller-manager-d69f884f-wdbdt 1/1 Running 0 11m
root@eksa-admin:~/my-eksa-cluster# kubectl run eksa-test --image=public.ecr.aws/nginx/nginx:1.19
pod/eksa-test created
root@eksa-admin:~/my-eksa-cluster# kubectl get pod
NAME READY STATUS RESTARTS AGE
eksa-test 1/1 Running 0 7s
root@eksa-admin:~/my-eksa-cluster#
```
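The `kubectl get nodes` check above can also be scripted, which is handy after re-creating the cluster. This is a minimal sketch; `count_not_ready` is a hypothetical helper, and it treats any STATUS other than exactly `Ready` (for example `Ready,SchedulingDisabled`) as not ready.

```shell
#!/usr/bin/env bash
# Counts nodes whose STATUS column is not exactly "Ready" in the output of
# `kubectl get nodes --no-headers` (columns: NAME STATUS ROLES AGE VERSION).
count_not_ready() {
  awk '$2 != "Ready" { n++ } END { print n + 0 }'
}

# Usage on eksa-admin, with KUBECONFIG exported as above:
#   kubectl get nodes --no-headers | count_not_ready
# Alternatively, block until every node reports Ready (or time out):
#   kubectl wait --for=condition=Ready nodes --all --timeout=300s
```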

Conclusion

With the steps above, an EKS Anywhere cluster is up and running on bare-metal servers hosted by Equinix. To clean up the cluster, run the terraform destroy command from the terraform-equinix-metal-eks-anywhere directory on the jump host.
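Teardown can be wrapped the same way. This is a minimal sketch: `teardown_cluster` is a hypothetical helper, and its default path assumes the repository was cloned into your home directory as in the transcript above. `-auto-approve` skips only the "yes" confirmation; Terraform still prompts for `metal_api_token` and `project_id` unless the matching `TF_VAR_*` variables are set.

```shell
#!/usr/bin/env bash
# Destroys everything created by `terraform apply` in the cloned repository.
# -auto-approve skips the interactive "yes" confirmation, so double-check the
# directory before running it.
teardown_cluster() {
  local repo_dir="${1:-$HOME/terraform-equinix-metal-eks-anywhere}"
  if [ ! -d "$repo_dir" ]; then
    echo "no such directory: $repo_dir" >&2
    return 1
  fi
  # Run in a subshell so the caller's working directory is unchanged.
  ( cd "$repo_dir" && terraform destroy -auto-approve )
}

# Usage on the jump host:
#   teardown_cluster ~/terraform-equinix-metal-eks-anywhere
```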

