Amit

Posted on March 12, 2024

I am a noob, so don't learn from this. But please point out my mistakes.

Mistake in my previous code: I used a class variable instead of an instance variable.
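
To show why that matters, here is a minimal sketch of the difference. The class names `SharedNeuron` and `OwnNeuron` are made up for this illustration and are not from my earlier code. A mutable class variable is one object shared by every instance, while a variable assigned in `__init__` belongs to each object separately.

```python
class SharedNeuron:
    # Class variable: one list object shared by every instance.
    weights = []

    def add_weight(self, w):
        # Mutates the shared list, so every instance sees the change.
        self.weights.append(w)


class OwnNeuron:
    def __init__(self):
        # Instance variable: a fresh list for each object.
        self.weights = []

    def add_weight(self, w):
        self.weights.append(w)


a, b = SharedNeuron(), SharedNeuron()
a.add_weight(0.5)
print(b.weights)  # [0.5]  (b was never touched, but shares the list)

c, d = OwnNeuron(), OwnNeuron()
c.add_weight(0.5)
print(d.weights)  # []
```

This is why the code below assigns `self.W` and `self.B` inside `__init__`.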

    import mpmath as mpMath
    import numpy as np
    import matplotlib.pyplot as plt

    mpMath.mp.dps = 5  # mpmath working precision in decimal digits


    class Neuron:
        def __init__(self, W):
            # W is the weight vector. W and B are instance variables,
            # so every neuron gets its own copy.
            self.W = W
            self.B = np.random.uniform(-1, 1)

        def forward(self, X):
            self.wX = X.dot(self.W)
            self.f_out = self.wX + self.B
            return self.f_out

        def tanh(self):
            self.out_tanh = mpMath.tanh(self.f_out)
            return self.out_tanh


    neuron = Neuron(np.random.uniform(-1., 1., size=(784,)).astype(np.float16))


    class NLayer:
        def __init__(self, input_dimension, number_of_neurons):
            self.neurons = []
            # A layer has input and output dimensions.
            # I am treating the number of neurons as the output dimension.
            # The input dimension is the length of X, e.g. 784 for MNIST data.
            print(f'creating NLayer with {input_dimension} input dimension and {number_of_neurons} neurons \n')
            self.num_of_n = number_of_neurons
            self.input_length = input_dimension
            for n in range(0, number_of_neurons):
                # Initialize the weights and bias and create the neurons.
                W = np.random.uniform(-1., 1., size=(self.input_length,)).astype(np.float16)
                self.neurons.append(Neuron(W))

        def forward(self, X):
            # print(f'Shape of X {X.shape}')
            for n in range(self.num_of_n):
                self.neurons[n].forward(X)
                self.neurons[n].tanh()
            # print(f'{len(self.neurons)}')
            self.f_out = np.array([float(neuron.out_tanh) for neuron in self.neurons])
            return self.f_out
            # print(self.neurons[n].out_tanh)
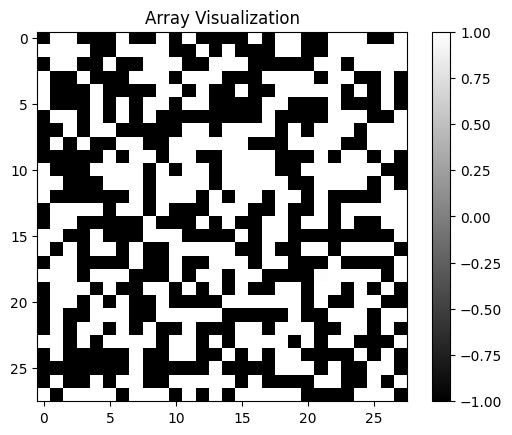

        # NLayer method (continued)
        def display_output(self):
            # Can be used when the output dimension is a perfect square.
            grid_size = int(np.sqrt(len(self.f_out)))
            image_matrix = np.reshape(self.f_out, (grid_size, grid_size))
            plt.imshow(image_matrix, cmap='gray')
            plt.colorbar()
            plt.title("Array Visualization")
            plt.show()

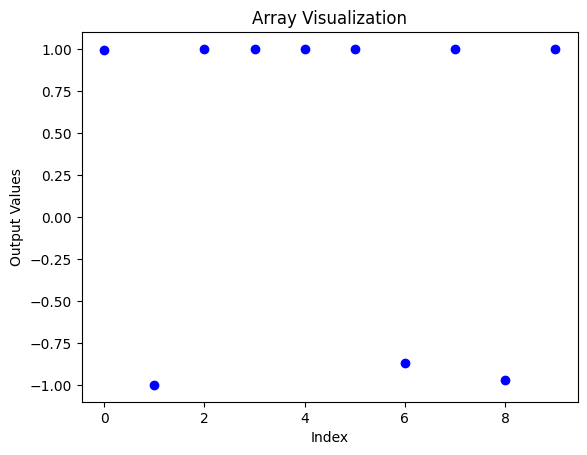

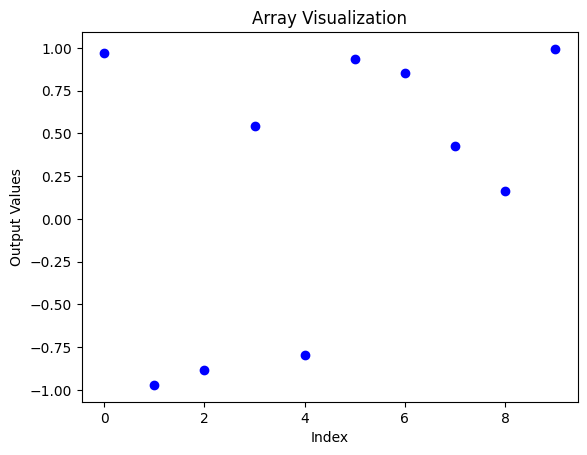

        # NLayer method (continued)
        def display_output1(self):
            indices = np.arange(len(self.f_out))
            plt.scatter(indices, self.f_out, marker='o', color='b')
            plt.title("Array Visualization")
            plt.xlabel("Index")
            plt.ylabel("Output Values")
            plt.show()
            print(indices.dtype)

    # layer_1 = NLayer(784,784)


    class NNetwork:
        # A network has layers.
        def __init__(self, input_dim, hidden_layer_dim_arr):
            self.neuronsLayers = []
            self.input_dim = input_dim
            self.hidden_layer_dim_arr = hidden_layer_dim_arr
            self.inputL = NLayer(input_dim, input_dim)
            for d in range(0, len(hidden_layer_dim_arr)):
                if d == 0:
                    self.neuronsLayers.append(NLayer(input_dim, hidden_layer_dim_arr[d]))
                else:
                    self.neuronsLayers.append(NLayer(hidden_layer_dim_arr[d-1], hidden_layer_dim_arr[d]))

        def train(self):
            # Update weights.
            pass

        def test(self):
            # Don't update weights. Check weights.
            pass

        def predict(self, X):
            self.inputL_out = self.inputL.forward(X)
            # print(f'Forward out of Input layers is {self.inputL_out}')
            self.inputL.display_output1()
            for l in range(0, len(self.neuronsLayers)):
                if l == 0:
                    self.neuronsLayers[l].forward(self.inputL_out)
                else:
                    self.neuronsLayers[l].forward(self.neuronsLayers[l-1].f_out)
                print(f'Forward output of layer {l} is :--> {self.neuronsLayers[l].f_out}')
                self.neuronsLayers[l].display_output1()

        def __str__(self):
            print(f'Input Dimension {self.input_dim}. Hidden layer dim array {self.hidden_layer_dim_arr}')
            return f'Neural Network with {len(self.neuronsLayers)} hidden layer'


    nn1 = NNetwork(input_dim=784, hidden_layer_dim_arr=[10, 10])
    print(nn1)
    # train_data is not defined in this post; it is assumed to be loaded
    # beforehand, with each row a flattened image of length 784.
    nn1.predict(train_data[0])
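
As a side note, a layer that loops over separate neurons computes the same thing as one matrix-vector product followed by tanh. The sketch below shows that idea. It is only an illustration: the class name `DenseTanhLayer` is made up, it uses `np.tanh` instead of mpmath, and it uses float64 weights instead of float16.

```python
import numpy as np


class DenseTanhLayer:
    """Hypothetical vectorized layer: row i of W plays the role of neuron i."""

    def __init__(self, input_dimension, number_of_neurons, rng=None):
        rng = np.random.default_rng() if rng is None else rng
        # One weight row and one bias per neuron.
        self.W = rng.uniform(-1.0, 1.0, size=(number_of_neurons, input_dimension))
        self.B = rng.uniform(-1.0, 1.0, size=number_of_neurons)

    def forward(self, X):
        # W @ X computes every neuron's dot product in one call.
        self.f_out = np.tanh(self.W @ X + self.B)
        return self.f_out


rng = np.random.default_rng(0)
layer = DenseTanhLayer(784, 10, rng)
x = rng.uniform(0.0, 1.0, size=784)  # stand-in for one flattened image
out = layer.forward(x)

# Same computation done neuron by neuron, for comparison.
loop_out = np.array([np.tanh(x.dot(layer.W[i]) + layer.B[i]) for i in range(10)])
print(out.shape, np.allclose(out, loop_out))  # (10,) True
```

Keeping all the weights in one array should also make the weight update simpler later, since the whole layer can be updated in one expression.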

Output of the first layer (input layer).

The output looks like a number when I display the forward output of the neurons. The output below is after tanh().

Output of the second layer

Output of the third layer

I think I should now write my training method and work out how to update the weights.
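
As a starting point, here is a rough sketch of one weight update for a single tanh neuron. Everything in it is an assumption and not part of the code above: the function name `train_step`, the squared-error loss, the learning rate `lr`, and the toy input are all made up for illustration. It uses plain gradient descent with the chain rule, where the derivative of tanh(z) is 1 - tanh(z)^2.

```python
import numpy as np


def train_step(W, B, x, target, lr=0.01):
    """One gradient-descent step for a single tanh neuron (hypothetical helper)."""
    z = x.dot(W) + B
    y = np.tanh(z)
    loss = 0.5 * (y - target) ** 2
    # Chain rule: dloss/dz = (y - target) * (1 - y^2)
    delta = (y - target) * (1.0 - y ** 2)
    # dz/dW = x and dz/dB = 1
    W_new = W - lr * delta * x
    B_new = B - lr * delta
    return W_new, B_new, loss


rng = np.random.default_rng(0)
W = rng.uniform(-1.0, 1.0, size=4)
B = rng.uniform(-1.0, 1.0)
x = np.array([0.1, 0.2, 0.3, 0.4])  # toy input, not MNIST
target = 0.5

losses = []
for _ in range(200):
    W, B, loss = train_step(W, B, x, target, lr=0.5)
    losses.append(loss)

print(losses[0], losses[-1])  # the loss should shrink over the steps
```

A full network also needs the error passed backwards through each layer (backpropagation), which this single-neuron sketch does not cover.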
